Google Research introduces an AI-powered feedback system using Gemini 2.5 Deep Think to help theoretical computer scientists rigorously check their papers before submission. The tool, tested on STOC 2026 submissions, flagged issues ranging from notation inconsistencies to critical proof errors, and 97% of participants found its feedback helpful.

Gemini AI Transforms Theoretical Computer Science Research with Automated Peer Review

In theoretical computer science and mathematics, results stand or fall on the proof, its rigor, and its clarity. Peer review serves as the crucial final check, but the journey from initial draft to submission often takes months of refinement, during which simple errors, inconsistent variables, or subtle logical gaps can stall even promising research. Google Research is now testing a specialized AI tool that acts as an automated, rigorous collaborator for researchers before their work reaches human reviewers.

To test this innovative approach, Google's research team developed an experimental program for the Annual ACM Symposium on Theory of Computing (STOC 2026), one of the most prestigious venues in theoretical computer science. The program offered authors automated, pre-submission feedback generated by a specialized Gemini AI tool, designed to provide constructive suggestions and identify potential technical issues within 24 hours of submission. The results have been overwhelmingly positive, with the tool successfully identifying a variety of issues, from calculation and logic errors to inconsistencies in notation and presentation.

From Concept to Implementation: Building the AI Reviewer

The feedback tool applies inference scaling methods to an advanced version of Gemini 2.5 Deep Think, a Google model optimized for complex reasoning tasks. Rather than following a single, linear chain of thought, the setup explores multiple candidate solutions in parallel and combines them before arriving at a final answer. By synthesizing different reasoning and evaluation traces, the approach reduces hallucinations and focuses on the most salient technical issues in a paper.
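To make the idea of combining several reasoning traces concrete, here is a minimal sketch in Python. It is not Google's method, which the article does not describe in detail. It assumes a simple agreement vote: several independent traces each report a set of issues, and only issues reported by enough traces are kept. The names `aggregate_issues`, `Reviewer`, and the stub traces are hypothetical and exist only for illustration.

```python
from collections import Counter
from typing import Callable, List, Set

# Hypothetical type: one "reviewer" stands in for a single sampled reasoning
# trace. It takes the paper text and returns the set of issues it found.
Reviewer = Callable[[str], Set[str]]


def aggregate_issues(paper: str, reviewers: List[Reviewer], min_votes: int) -> List[str]:
    """Keep only issues reported by at least `min_votes` independent traces."""
    votes: Counter = Counter()
    for review in reviewers:
        # Each trace returns a set, so it votes at most once per issue.
        votes.update(review(paper))
    return sorted(issue for issue, count in votes.items() if count >= min_votes)


# Stub traces (assumption): in a real system each would be a separate model run.
def trace_a(paper: str) -> Set[str]:
    return {"Lemma 3: inequality applied in the wrong direction",
            "Section 2: n and N used interchangeably"}


def trace_b(paper: str) -> Set[str]:
    return {"Lemma 3: inequality applied in the wrong direction"}


def trace_c(paper: str) -> Set[str]:
    return {"Lemma 3: inequality applied in the wrong direction",
            "Theorem 1: constant c is never defined"}


consensus = aggregate_issues("paper text", [trace_a, trace_b, trace_c], min_votes=2)
print(consensus)
```

With `min_votes=2`, only the Lemma 3 issue survives, because it is the only one that more than one trace reports. An issue raised by a single trace is more likely to be noise, which is the intuition behind filtering on agreement. A production system would presumably use a far richer combination step than a vote.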

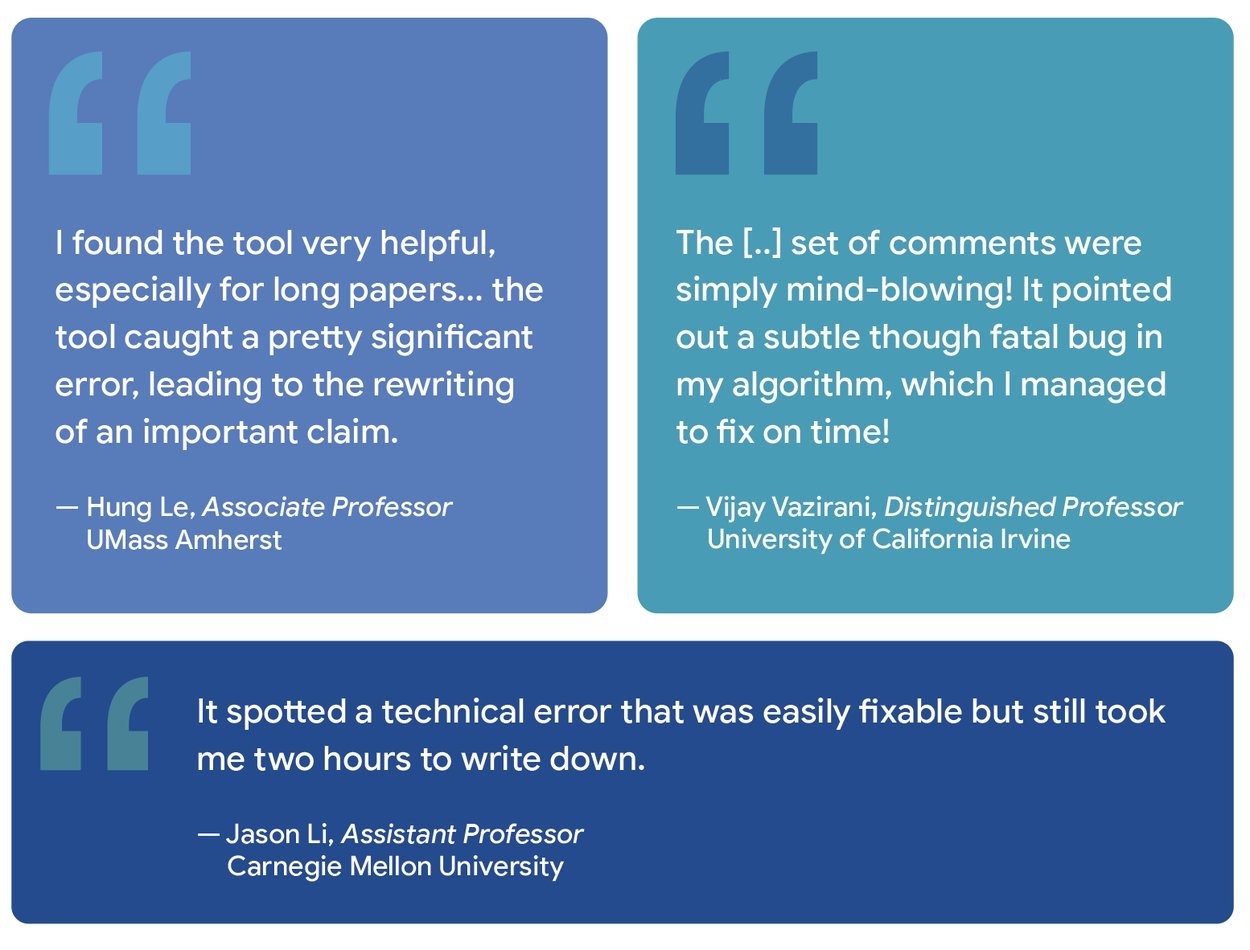

"The tool successfully identified a wide range of issues, from inconsistent variable names to complex problems like calculation errors, incorrect application of inequalities, and logical gaps in proofs," explains Vincent Cohen-Addad, a Research Scientist at Google and one of the project leaders. "As one author noted, the tool found 'a critical bug... that made our proof entirely incorrect,' further adding that it was an 'embarrassingly simple bug that evaded us for months.'"

Structured Feedback for Maximum Impact

Authors who participated in the program received structured feedback divided into key sections: a summary of the paper's contributions, a detailed list of potential mistakes and improvements (often analyzing specific lemmas or theorems), and a comprehensive list of minor corrections and typos. This structured approach ensures that researchers receive actionable insights tailored to their specific field of study.
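The three-part feedback structure described above can be pictured as a small data structure. The sketch below is illustrative only: the class names, field names, and the plain-text rendering are assumptions, not the tool's actual output format, and the sample entries are placeholders rather than real feedback.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Finding:
    location: str      # e.g. a specific lemma or theorem
    description: str   # what might be wrong or could be improved


@dataclass
class FeedbackReport:
    summary: str                                                    # paper's contributions
    potential_mistakes: List[Finding] = field(default_factory=list)
    minor_corrections: List[str] = field(default_factory=list)     # typos and small fixes

    def render(self) -> str:
        """Render the three sections as plain text, one finding per line."""
        lines = ["Summary of contributions", self.summary, "",
                 "Potential mistakes and improvements"]
        lines += [f"- {f.location}: {f.description}" for f in self.potential_mistakes]
        lines += ["", "Minor corrections and typos"]
        lines += [f"- {c}" for c in self.minor_corrections]
        return "\n".join(lines)


# Placeholder content (assumption), not taken from any real report.
report = FeedbackReport(
    summary="Placeholder summary of the paper's contributions.",
    potential_mistakes=[Finding("Lemma 2", "inequality may be applied in the wrong direction")],
    minor_corrections=["variable n is later written as N"],
)
print(report.render())
```

Keeping the substantive findings separate from the minor corrections mirrors the split the article describes, so an author can triage proof-level concerns before typos.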

The feedback format proved particularly valuable because it maintained a neutral tone while providing rigorous analysis. "Participants noted receiving feedback in just two days," adds David Woodruff, another Research Scientist on the project. "Others praised the 'neutral tone and rigor' of the output, finding it a useful complement to human readers."

Quantifying Success: The Numbers Behind the Innovation

The impact of this AI-powered review tool is best illustrated by the impressive statistics gathered from the experiment:

- Over 80% of the papers submitted by the time the experiment ended had opted in to AI review

- 97% of participants found the feedback helpful

- 97% would use this tool again for future submissions

- 81% reported that the model improved the clarity or readability of their papers

These numbers reflect not just satisfaction with the tool's performance, but also its acceptance as a valuable part of the research workflow. The high opt-in rate suggests that researchers see this AI assistance as complementary to, rather than competitive with, human peer review.

Navigating the Nuances: Human-AI Collaboration

Because participants were experts in their respective fields, they were able to readily distinguish helpful insights from occasional "hallucinations" in the AI's output. While the model sometimes struggled—particularly with parsing complex notation or interpreting figures—authors weren't dismissive of the LLM's output.

"Rather than dismissing the AI's suggestions, participants carefully filtered out the noise and extracted the important and correct parts of the output," observes Cohen-Addad. "They then used this feedback as a starting point for their own verification process."

This outcome demonstrates a crucial insight about the future of AI in research: the most effective approach isn't about replacing human expertise but augmenting it. The AI serves as a collaborative partner, providing rigorous analysis that human researchers can then evaluate and incorporate as appropriate.

Educational Impact and Future Directions

Beyond immediate research applications, the STOC 2026 experiment revealed significant potential for this tool in training the next generation of computer scientists. Of the surveyed authors, 75% believed the tool has educational value for students because it offers immediate feedback on mathematical rigor and presentation clarity.

"This pilot demonstrated the potential for specialized AI tools to serve as collaborative partners in fundamental areas," says Woodruff. "Our overall goal is not to replace the critical peer review process, but rather to augment and enhance it."

Reflecting this sentiment, 88% of participants expressed strong interest in having continuous access to such a tool throughout their entire research process, not just at the submission stage. This suggests a future where AI assistants become integral to the research workflow, helping to maintain quality standards from initial concept through final publication.

The Road Ahead: AI as Research Partner

The success of this experiment at STOC 2026 represents a significant step forward in the application of AI to academic research. By focusing on a specialized domain—theoretical computer science—and leveraging advanced reasoning capabilities, Google's research team has demonstrated that AI can provide meaningful assistance without sacrificing the rigor that defines the field.

As the technology continues to evolve, we can expect to see similar tools emerge across other disciplines, each tailored to the specific needs and standards of its field. The key to success, as demonstrated by this experiment, lies in designing systems that respect human expertise while providing valuable insights that might otherwise be overlooked.

In theoretical computer science, where a single error can invalidate months of work, an AI-powered reviewer is more than a convenience: it could change how research is conducted and validated. As one participant noted, the tool found a bug that "evaded us for months," highlighting the potential for AI to catch mistakes that even human experts might miss.

The future of research may not be about humans versus AI, but about humans and AI working together—each bringing their unique strengths to the pursuit of knowledge. And in that future, tools like Google's Gemini-powered reviewer will play an increasingly important role.
