
Deepdive: How 10 tech companies choose the next generation of dev tools

Tech businesses from seed-stage startups to publicly-listed companies reveal how they select and roll out next-generation IDEs, CLIs and code review tools. And how they learn which ones work… and which don't.

What's new: The developer tooling landscape has transformed dramatically in the past 18 months. Where once the answer to "what to use for AI-assisted coding?" was simple (GitHub Copilot + ChatGPT), today's companies face a bewildering array of options including Cursor, Claude Code, Codex, Gemini CLI, CodeRabbit, Graphite, and Greptile.

Why it matters: Every tech company we surveyed is changing its developer tooling stack, but the approaches vary wildly based on company size, existing vendor relationships, and organizational structure. Understanding these patterns can help teams make better decisions about their own tooling investments.

How to use it: This deepdive covers measurement frameworks, selection processes, and real-world case studies from companies ranging from a seed-stage startup with 5 engineers to a public company with 2,000 employees.

1. Speed, trust, & show-and-tell: how small teams select tools

At companies with up to ~60 engineers, tooling decisions happen fast and informally. The decisive factor is how developers feel about the tools after short trial periods.

Seed-stage logistics startup (20 people, 5 engineers)

The head of engineering describes their approach as high-trust and developer-led: "We agreed to try new tools for 2 weeks and see how everyone felt. We didn't use any hard-and-fast measurement. TLDR: I trust our devs and their opinion is a big part of this."

Developers suggest which tools to try and decide whether to keep using them. For AI code reviews, they first tried Korbit for around a week, but the tool felt "off," so they road-tested CodeRabbit, which "stuck" within a few days.

"Within a few days of using CodeRabbit I could tell the devs just liked it and were embracing the suggestions, unlike with Korbit which they ignored when they'd lost trust [in it]."

Their tooling stack has evolved quickly:

- Figma for designs, integrated with Linear

- Linear for ticketing and cross-functional collaboration

- Claude Code and Cursor for development, connected to Linear via MCP

- "Show and tells" during weekly meetings help identify what works

Series A startup (30 people, 15 engineers)

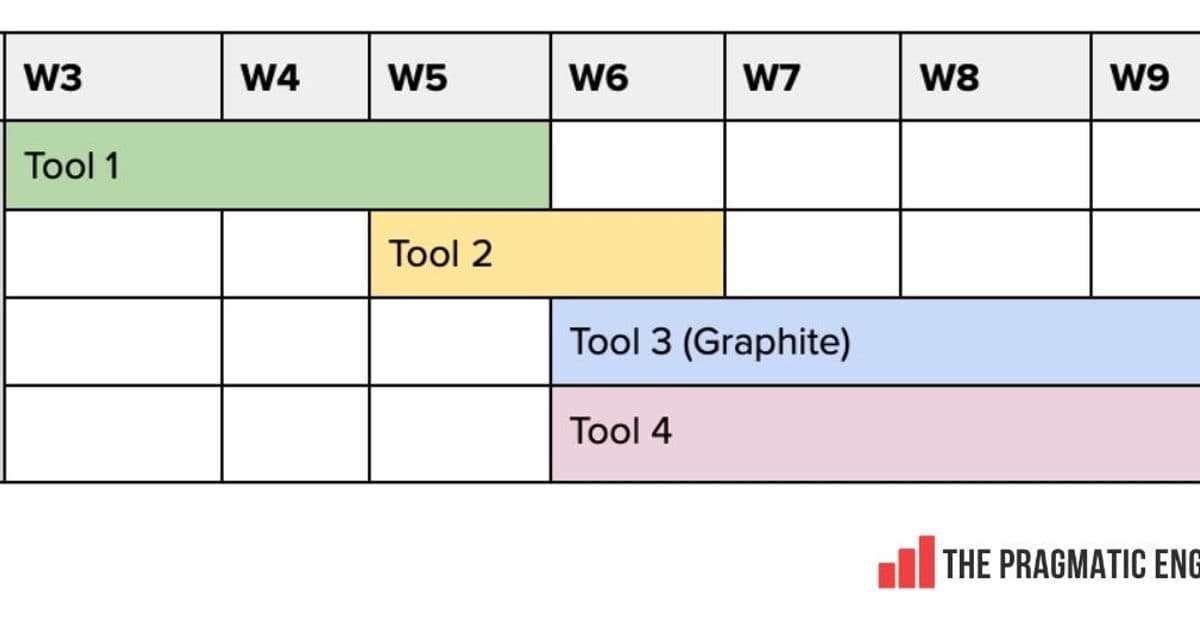

This team is split on Cursor versus Claude Code, with the latter gaining momentum. They evaluated three code review tools:

- Cursor's Bugbot (okay but not great)

- Graphite (not good)

- Greptile (good)

They're now trialing Greptile for PR approvals, taking advantage of its confidence-scoring feature.

Series D observability company (150 people, 60 engineers)

"We've tried a bunch of things (Graphite, et al.) but the one thing that's really stuck has been the company's Claude Code subscription — it's the most definite value-add. We pretty regularly bump people up a tier as their usage increases vs other tools that mostly sit idle."

Interestingly, non-engineers have jumped onto Claude Code. Product managers, solutions engineers, and technical account managers use it more than the median engineer does, handling customer bug reports by opening PRs directly with Claude Code.

2. How mid-to-large companies choose

At companies with 150+ engineers, decisions become more complex. Existing vendor relationships, security reviews, compliance requirements, and executive-level budgetary considerations all come into play.

EU-based software company (500 people, 150 engineers)

This company's experience serves as a cautionary tale. Leadership declared they were "AI-first" after an offsite, rolling out Copilot Business subscriptions to anyone who asked.

"Our company's pre-existing relationship with Microsoft was probably key: we already had M365, and then they rolled out Copilot to all devs. People immediately had questions about other tools."

They got stuck for six months trying to create a formal approval process, gridlocked by legal and IT concerns about EU AI Act compliance.

Cloud infrastructure company (900 people, 300 engineers)

"We started with Copilot because it was easy to procure, since we were a Microsoft customer for M365. Then switching to Cursor took forever. Pricing keeps shifting. Meanwhile, execs read a doc and keep asking 'why aren't we on Claude Code?'"

Pricing remains a persistent headache. Claude's team plan costs ~$150 per seat per month and Cursor's ~$65, and the C-level wasn't comfortable moving from Copilot's $40/month to Cursor's $65/month.
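The per-seat gap compounds with headcount. The Python sketch below is a back-of-the-envelope calculation using the approximate monthly list prices quoted above, and it assumes the 300-engineer headcount of the cloud infrastructure company. It ignores volume discounts, usage-based overages, and the fact that not every engineer may need a seat; the function names are hypothetical.

```python
# Rough annual cost comparison using the approximate per-seat monthly
# prices quoted in this article. Real contracts, discounts, and
# usage-based overages will differ.
MONTHLY_PRICE_PER_SEAT = {
    "Copilot": 40,
    "Cursor": 65,
    "Claude": 150,  # Claude team plan, as quoted above
}


def annual_cost(price_per_seat: int, seats: int) -> int:
    """Yearly list-price cost for a given number of seats."""
    return price_per_seat * seats * 12


def compare(seats: int, baseline: str = "Copilot") -> dict[str, int]:
    """Extra yearly spend of each tool relative to the baseline tool."""
    base = annual_cost(MONTHLY_PRICE_PER_SEAT[baseline], seats)
    return {
        tool: annual_cost(price, seats) - base
        for tool, price in MONTHLY_PRICE_PER_SEAT.items()
    }


if __name__ == "__main__":
    seats = 300  # assumed headcount: the 300-engineer company above
    for tool, price in MONTHLY_PRICE_PER_SEAT.items():
        print(f"{tool}: ${annual_cost(price, seats):,}/year")
    print(compare(seats))
```

At 300 seats this works out to about $144,000 a year for Copilot, $234,000 for Cursor, and $540,000 for Claude's team plan at list price, so the Copilot-to-Cursor move alone adds roughly $90,000 a year.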

Public travel company (1,500 people, 800 engineers)

"Our main concern is avoiding vendor lock-in with a single solution. With this in mind, I expect to continue evaluating AI tooling this year as things keep evolving rapidly."

They rolled out GitHub Copilot last year and are now evaluating Claude Code as a replacement, remaining cautious about the steep per-engineer cost.

Public tech company (2,000 people, 700 engineers, productivity space)

The engineering leader in charge of dev productivity calls security the biggest challenge: "The biggest hurdle for us is security. We are looking for some amount of compliance, and I've found dev tools startups aren't prioritizing that until they are late Series A/Series B."

Their selection process draws on:

- Recommendations from friends and colleagues at other companies

- Chatter on Twitter/Reddit/Hacker News

- Experience in cutting through hype

"Every tool has to move a metric. Those that directly impact a metric which we already care about get approved faster."

3. The measurement problem: metrics are needed, but none work

If there's one theme that unites every company in this deepdive regardless of size, it's the struggle to measure whether AI tools actually work.

The lines-of-code trap

Every company debated metrics and found mostly bad options:

- Lines of code generated creates bad incentives and doesn't account for valuable uses like research, debugging, or idea generation

- Vibe-coded scripts that never hit production can feel like real productivity breakthroughs

- Copilot's telemetry only counts code written in specific IDEs, missing CLI usage entirely

"You can imagine how poorly this was received by devs! It doesn't even account for the fact that this metric from Copilot is purely based on telemetry from specific IDEs."

The productivity measurement void

"My engineering org is getting hooked on AI, but execs want metrics on value-add. I don't want to push vanity usage metrics just to justify spend, but outside of vanity metrics, I have nothing of value to show!"

Developer-productivity vendors' approaches are dismissed as "DORA+Velocity metrics combined with anything they can get from APIs of Cursor, Claude etc."

4. How Wealthsimple measured and decided

Wealthsimple, a Canadian fintech with ~1,500 employees (600 engineers), ran a thorough 2-month selection process to choose an AI code review tool.

Choosing an AI code review tool via a "shootout" process

They evaluated multiple tools using a structured scoring system across five dimensions:

- Accuracy - Does the tool catch real issues?

- Precision - Are suggestions relevant and actionable?

- Context awareness - Does it understand the codebase?

- Integration quality - How well does it work with existing workflows?

- Developer trust - Do engineers actually use and value the suggestions?

Five engineers evaluated ~100 comments across different tools, scoring each on a -3 to +3 scale.
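Beyond the -3 to +3 scale, the scoring process isn't described in detail. The Python sketch below shows one way such a shootout could be tallied, assuming each engineer scores individual review comments once. The `Rating` fields, the per-comment averaging, and the "useful share" measure are hypothetical choices for illustration, not Wealthsimple's actual method.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Rating:
    tool: str        # which AI reviewer left the comment
    comment_id: str  # hypothetical identifier for the review comment
    reviewer: str    # engineer who scored the comment
    score: int       # -3 (harmful noise) to +3 (very valuable)


def summarize(ratings: list[Rating]) -> dict[str, dict[str, float]]:
    """Per tool: mean comment score, and share of comments scored positive on average."""
    # Group scores by comment so each comment is averaged across engineers first.
    per_comment: dict[tuple[str, str], list[int]] = defaultdict(list)
    for r in ratings:
        if not -3 <= r.score <= 3:
            raise ValueError(f"score out of range: {r.score}")
        per_comment[(r.tool, r.comment_id)].append(r.score)

    # Collect each comment's average score under the tool that produced it.
    per_tool: dict[str, list[float]] = defaultdict(list)
    for (tool, _comment_id), scores in per_comment.items():
        per_tool[tool].append(mean(scores))

    return {
        tool: {
            "mean_score": mean(averages),
            "useful_share": sum(a > 0 for a in averages) / len(averages),
        }
        for tool, averages in per_tool.items()
    }
```

Averaging each comment's scores before averaging per tool keeps a comment that all five engineers scored from outweighing one that only two engineers saw.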

The results

They found no AI code reviewer suitable for their codebase, highlighting that even well-resourced companies struggle to find tools that meet their needs.

For AI coding tools, the decision was more CTO-driven. Diederik van Liere pushed for Claude Code based on:

- Personal conviction about its capabilities

- Usage data from Jellyfish that validated that conviction

- Positive feedback from early adopters

5. Comparative measurements at a large fintech

One team ran Copilot, Claude, and Cursor simultaneously across ~50 PRs, scoring ~450 comments.

Their findings

- Cursor reviews were the most precise

- Claude reviews were the most balanced

- Copilot reviews were the most quality-focused

This kind of direct comparison is rare, but it is valuable because it gives teams evidence from their own codebase rather than anecdotes.

6. Common patterns across all companies

What drives adoption

Developer trust drives adoption more than mandates. Even at large companies, tools that engineers genuinely like spread organically, while mandated tools often face resistance.

The migration path

The Copilot → Cursor → Claude Code migration path is well-trodden. Companies that started with Copilot are increasingly switching to Cursor, and many are now evaluating Claude Code despite the higher cost.

The measurement reality

Nobody has cracked productivity measurement yet. Every company struggles with this, from seed-stage startups to public enterprises. The consensus is that traditional metrics like lines of code are misleading, but better alternatives haven't emerged.

What actually works

Show-and-tell processes help small teams identify effective tools. When developers share their setups and workflows, good tools spread organically.

Extensive documentation, such as AGENTS.md and CLAUDE.md files, helps maintain consistency across tools and use cases.

Two-week trial periods seem to be the sweet spot for small teams to evaluate tools effectively.

Key takeaways

Company size dramatically changes the selection process. Small teams can move fast and trust developer instincts; large companies need formal processes, security reviews, and budget approvals.

Existing vendor relationships matter. Companies with Microsoft contracts default to Copilot; those with AWS relationships consider Amazon Q Developer (formerly CodeWhisperer).

Security and compliance are blockers. EU companies face particular challenges with AI Act compliance, slowing adoption.

Pricing pressure is real. The jump from Copilot's $40 per seat per month to Claude Code's $150 is a hard sell to executives, even when engineers prefer the latter.

Measurement remains unsolved. Every company struggles to prove ROI, and traditional metrics like lines of code are widely distrusted.

Developer trust is the ultimate metric. Tools that engineers genuinely like and use day to day consistently outperform mandated alternatives, regardless of what the metrics say.

No universal winners exist. Tools beloved at one company are loathed at others. What works depends entirely on your team, codebase, and workflow.

The goal isn't to find the "best" tool. As one engineering leader put it: "What matters is to find tools that work for your team." That advice is worth following, regardless of your company size or budget.
