AMD's core counts have grown sixfold in eight years while GPU tensor cores now handle 1024-bit operations, yet memory bandwidth struggles to keep pace. As AI workloads dominate silicon production, developers face the challenge of harnessing this parallelism while latency stays stubbornly high.

Main article image: Mossy rose gall, an analogy for hardware evolution exploiting system constraints (Credit: Justin Cormack)

The Unseen Architecture Shift

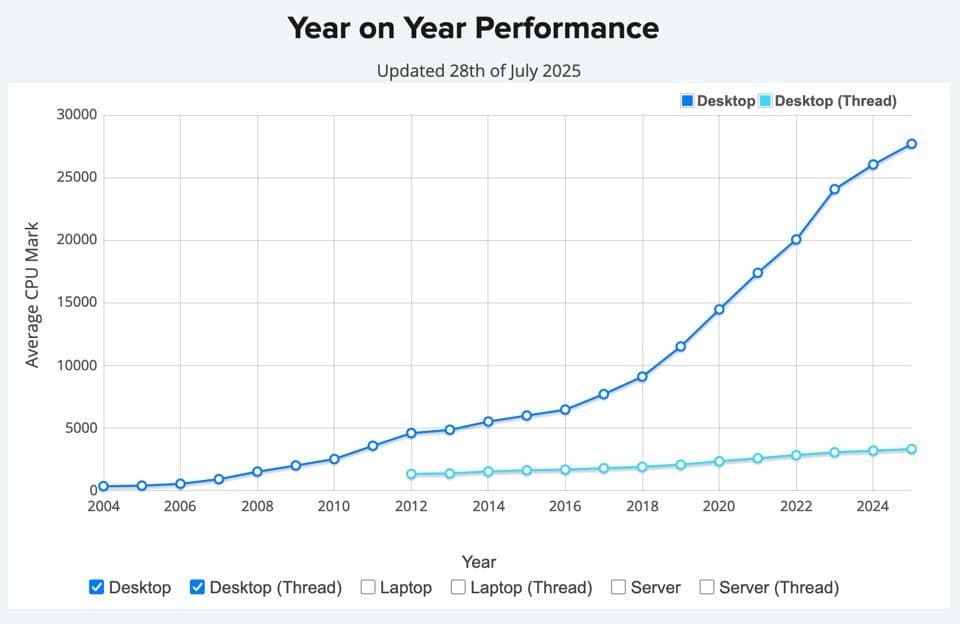

The relentless growth in computing power resembles nature's gall wasps, organisms that hijack plant growth patterns. Similarly, modern hardware exploits silicon in increasingly sophisticated ways, with profound consequences for software. Since 2017, AMD EPYC processors have grown sixfold in core count, from 32 to 192, while CPU vector units widened from 128-bit SSE to 512-bit AVX-512 operations. Yet memory bandwidth per core has halved during this period, creating what engineers call "the memory wall."
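The per-core squeeze can be checked with back-of-the-envelope arithmetic. The sketch below assumes 8 channels of DDR4-2666 for the 32-core part and 12 channels of DDR5-6000 for the 192-core part; these configurations are illustrative assumptions that the article does not state, and `peak_bandwidth_gb_s` is a hypothetical helper that computes theoretical peak rather than measured bandwidth.

```python
def peak_bandwidth_gb_s(channels: int, mega_transfers_per_s: int,
                        bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s for 64-bit (8-byte) channels."""
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


# Assumed configurations, for illustration only.
old_cores, old_bw = 32, peak_bandwidth_gb_s(channels=8, mega_transfers_per_s=2666)
new_cores, new_bw = 192, peak_bandwidth_gb_s(channels=12, mega_transfers_per_s=6000)

old_per_core = old_bw / old_cores
new_per_core = new_bw / new_cores

print(f"32 cores:  {old_bw:.1f} GB/s total, {old_per_core:.2f} GB/s per core")
print(f"192 cores: {new_bw:.1f} GB/s total, {new_per_core:.2f} GB/s per core")
print(f"per-core ratio: {new_per_core / old_per_core:.2f}")
```

Under these assumptions, per-core bandwidth falls from about 5.3 GB/s to 3.0 GB/s, a little more than half of the earlier figure.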

Parallelism Versus the Physics Bottleneck

- CPU Evolution: Zen 5c now packs 192 cores, but DDR5 bandwidth can't scale proportionally. With PCIe 6.0 enabling 400Gb Ethernet and 14GB/s NVMe storage, I/O improvements mask but don't solve the core issue: latency remains stubbornly high.

- GPU Revolution: Nvidia Blackwell GPUs process four-bit floats 16x faster than traditional 64-bit operations. Their secret? Eight HBM3E memory stacks paired with 1024-bit-wide tensor cores: architectural brute force that sidesteps branch prediction by running warps of 32 threads that all execute the same instruction.
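To make the precision gap concrete, here is a minimal sketch of rounding values onto a 4-bit float grid. It assumes the E2M1 layout (one sign bit, two exponent bits, one mantissa bit); the article does not say which four-bit format it has in mind. `quantize_fp4` and the per-block scaling are illustrative choices, not Nvidia's implementation.

```python
# Non-negative magnitudes representable in E2M1 (assumed 4-bit float layout).
FP4_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_fp4(block: list[float]) -> tuple[float, list[float]]:
    """Scale a block so its largest magnitude maps to 6.0, then snap each
    value to the nearest representable magnitude. Returns (scale, values),
    where the values are already multiplied back by the scale."""
    max_abs = max((abs(x) for x in block), default=0.0)
    if max_abs == 0.0:
        return 1.0, [0.0] * len(block)
    scale = max_abs / FP4_MAGNITUDES[-1]
    out = []
    for x in block:
        nearest = min(FP4_MAGNITUDES, key=lambda m: abs(abs(x) / scale - m))
        out.append((nearest if x >= 0 else -nearest) * scale)
    return scale, out


weights = [0.02, -0.75, 0.31, 1.2, -0.04, 0.6]
scale, approx = quantize_fp4(weights)
worst = max(abs(a - b) for a, b in zip(weights, approx))
print(f"scale={scale:.3f} worst abs error={worst:.3f}")
```

With only eight magnitudes per sign, small values collapse to zero and the spacing widens toward the top of the range, which is why such formats are normally paired with a scale factor per small block of values.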

"Writing code that runs efficiently on CPUs let alone GPUs is hard. We're executing both sides of conditionals and selecting results via boolean masks—that's how alien modern architectures have become," observes Justin Cormack.

The AI Hardware Dominance

TSMC's production tells the story: 50% of server spending now targets accelerated compute. AI clusters standardize around 2-CPU/8-GPU nodes with eight dedicated 800Gb network links. This isn't just about training—inference workloads require batched processing across machines to utilize available memory bandwidth.
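Why batching matters for inference can be sketched with a simple roofline-style estimate: each decoding step has to stream the model weights from memory once, however many requests share that step. Every number below (model size, bandwidth, compute rate) is a made-up round figure chosen to keep the arithmetic easy, not a measurement of any real accelerator, and `step_time_s` is a hypothetical helper.

```python
def step_time_s(batch: int, weight_bytes: float, flops_per_token: float,
                mem_bw: float, compute: float) -> float:
    """One decoding step is bounded by whichever is slower: streaming the
    weights once, or doing the arithmetic for every request in the batch."""
    memory_time = weight_bytes / mem_bw
    compute_time = batch * flops_per_token / compute
    return max(memory_time, compute_time)


# Hypothetical accelerator and model (assumptions, not vendor figures).
WEIGHT_BYTES = 100e9      # 100 GB of weights
FLOPS_PER_TOKEN = 200e9   # about 2 FLOPs per weight at one byte per weight
MEM_BW = 5e12             # 5 TB/s memory bandwidth
COMPUTE = 2e15            # 2 PFLOP/s of low-precision compute

for batch in (1, 8, 64, 512):
    t = step_time_s(batch, WEIGHT_BYTES, FLOPS_PER_TOKEN, MEM_BW, COMPUTE)
    print(f"batch={batch:4d} step={t * 1e3:6.1f} ms tokens/s={batch / t:10.0f}")
```

In this toy model the step time stays flat at 20 ms until roughly 200 requests share a step, so throughput rises almost for free with batch size; beyond that point compute becomes the limit. The model ignores KV-cache traffic and network transfers.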

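```python
# Traditional vs. AI-optimized conditional logic
# condition, compute_a and compute_b are placeholders so the snippet runs.
condition = True  # on a GPU this would be a per-lane 0/1 mask

def compute_a():
    return 1.0

def compute_b():
    return 2.0

# CPU approach: branch, then evaluate only the selected side
if condition:
    result = compute_a()
else:
    result = compute_b()

# GPU-optimized approach: evaluate both sides, then blend with the mask
result = condition * compute_a() + (1 - condition) * compute_b()
```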
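The same idea applied across a whole group of lanes can be simulated in plain Python. This is a toy model of lockstep execution, not real GPU code: `WARP_SIZE`, `side_a`, `side_b`, and `warp_select` are hypothetical names, and real hardware performs the selection with predicated instructions rather than lists.

```python
WARP_SIZE = 32  # lanes that execute the same instruction together


def side_a(x: float) -> float:
    return x * 2.0


def side_b(x: float) -> float:
    return x + 100.0


def warp_select(inputs: list[float]) -> tuple[list[float], int]:
    """Evaluate both sides for every lane, then pick per lane with a mask.
    Returns the results and the number of lane evaluations performed."""
    mask = [x >= 0 for x in inputs]   # per-lane boolean condition
    a = [side_a(x) for x in inputs]   # all lanes run side A
    b = [side_b(x) for x in inputs]   # all lanes run side B
    results = [av if m else bv for m, av, bv in zip(mask, a, b)]
    return results, len(a) + len(b)


inputs = [float(i - 16) for i in range(WARP_SIZE)]  # half negative, half not
results, work = warp_select(inputs)
print(f"lane evaluations: {work}, results kept: {len(results)}")
# prints "lane evaluations: 64, results kept: 32"
```

The sketch uses a per-lane select rather than the multiply-and-add blend because `0 * NaN` is still `NaN`: with arithmetic blending, a discarded side that produces `NaN` or infinity would contaminate the result.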

The Software Imperative

Three tectonic shifts demand rethinking development:

- Queue Depth Revolution: Networked systems need much deeper queues to keep fast links busy, because the work in flight must grow with latency

- Precision Tradeoffs: Scientific computing's 64-bit floats give way to 4-bit quantized models

- Distributed-By-Design: Even inference scales horizontally now, necessitating fundamentally distributed architectures
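The queue-depth point follows from Little's law: the amount of work that must be in flight equals throughput multiplied by latency. The figures below (a 400 Gb/s link, 4 KiB requests, and three round-number latencies) are illustrative assumptions rather than measurements, and `required_in_flight` is a hypothetical helper.

```python
import math


def required_in_flight(link_gbit_s: float, latency_s: float,
                       request_bytes: int) -> int:
    """Little's law: in-flight bytes = bandwidth * latency.
    Dividing by the request size gives the queue depth needed to fill the link."""
    bytes_per_s = link_gbit_s * 1e9 / 8
    in_flight_bytes = bytes_per_s * latency_s
    return math.ceil(in_flight_bytes / request_bytes)


REQUEST_BYTES = 4096
scenarios = (("same rack", 10e-6), ("same datacenter", 100e-6), ("cross region", 50e-3))
for label, latency in scenarios:
    depth = required_in_flight(400, latency, REQUEST_BYTES)
    print(f"{label:16s} latency={latency * 1e6:9.0f} us queue depth={depth}")
```

At 10 microseconds the link needs about 123 requests in flight; at 50 milliseconds it needs more than 600,000. The depth scales in direct proportion to both latency and bandwidth.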

As Cormack notes: "GenAI accelerated the hardware transformation. Now we need a software transformation to match." The mossy rose gall grows—not through raw speed, but by redefining growth patterns. Similarly, tomorrow's software must embrace parallelism, accept lower precision, and conquer latency through architectural innovation, not clock cycles.
