An analysis of how disinformation spreading through digital platforms turned online radicalization into real-world violence, drawing on court documents and participant testimony.

The events of January 6th, 2021, are a case study in how digital platforms can accelerate political extremism. At 1:42 AM on December 19, 2020, then-President Donald Trump posted on Twitter that it was 'statistically impossible' to have lost the 2020 election and urged supporters to attend a 'big protest' in Washington on January 6th ('Be there, will be wild!'). That post became the ignition point for what federal prosecutors would later describe as 'a violent insurrection.' This was not abstract political rhetoric. It turned into physical violence through individuals like David Nicholas Dempsey, whose path from online radicalization to armed insurrectionist illustrates the dangerous pipeline social media enables.

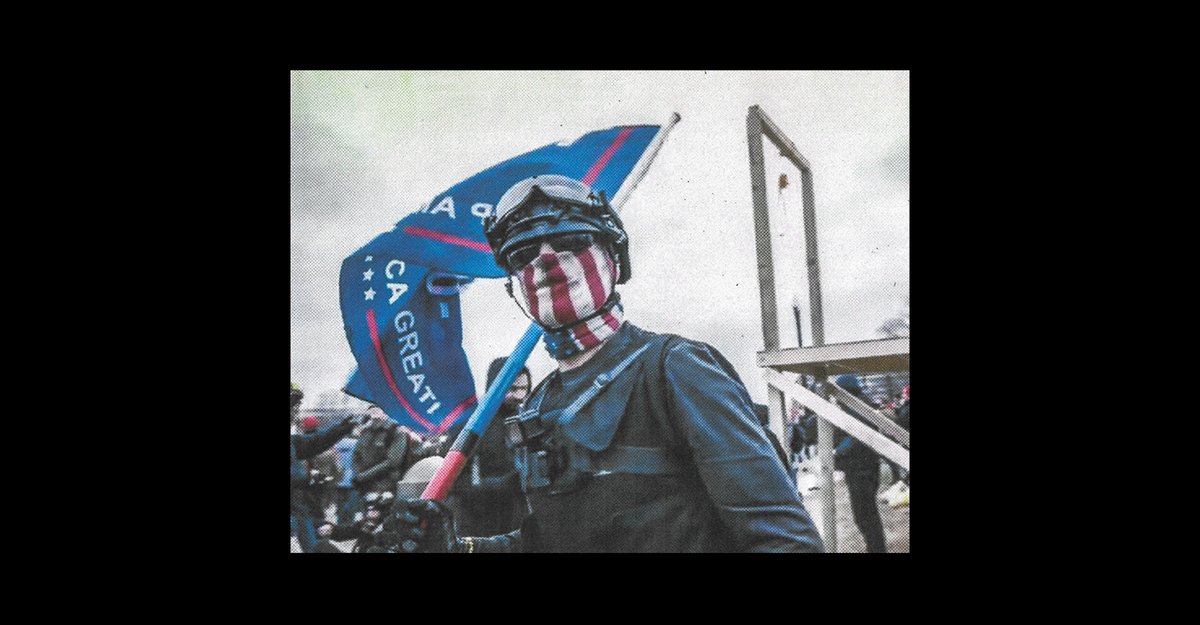

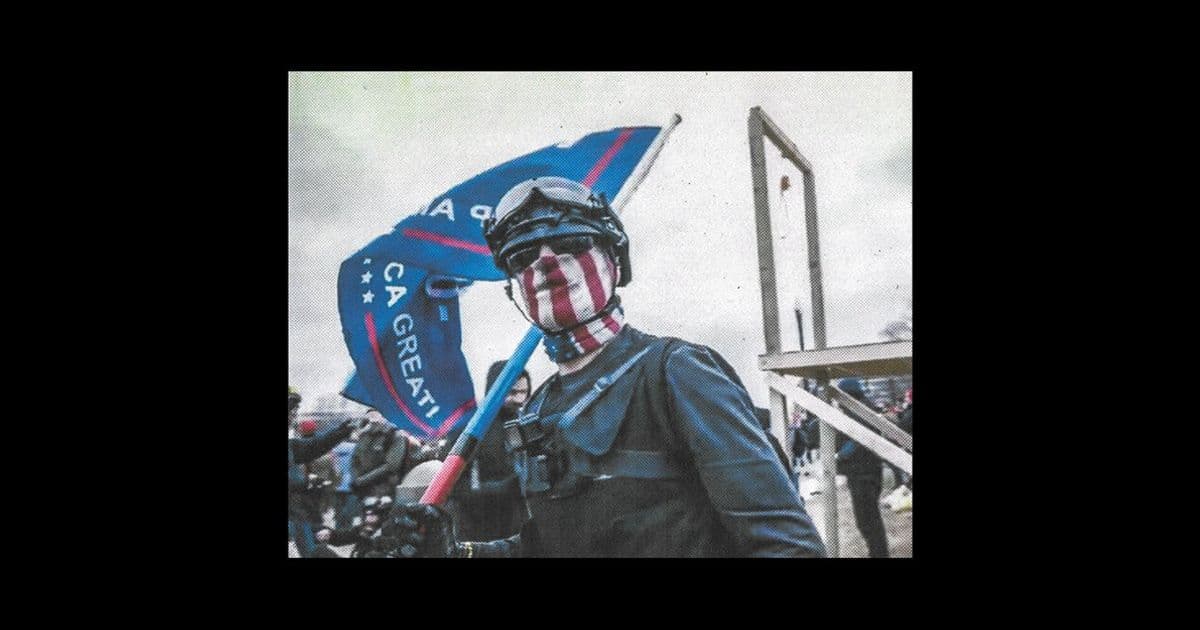

Court documents show that Dempsey, a California resident with prior felony convictions, answered Trump's call. Wearing tactical gear and recorded near a gallows erected for Mike Pence, he echoed violent fantasies cultivated in online echo chambers: 'They need to hang from these motherfuckers while everybody videotapes it and spreads it on YouTube.' He went on to attack police officers with pepper spray, metal crutches, and broken furniture. More than 140 officers were injured in the Capitol attack overall, and one detective testified that he believed he would die during Dempsey's assault.

This pattern extends beyond Dempsey. Research from the University of Chicago reports that 85% of January 6th defendants had engaged with extremist content on mainstream platforms before the attack. The shift from online rage to physical violence followed identifiable stages: algorithmic amplification of conspiracy theories, normalization of violent rhetoric in niche communities, and, finally, real-world mobilization through viral calls to action. Facebook's internal studies, later disclosed by whistleblower Frances Haugen, showed that the company's recommendation algorithms actively steered users toward extremist groups.

Counter-perspectives emphasize free speech concerns. Some legal scholars argue that platforms should not bear responsibility for user-generated content, citing the protections of Section 230 of the Communications Decency Act. Others contend that removing extremist content amounts to censorship that could push radicalization further underground. That argument, however, conflicts with findings from the National Consortium for the Study of Terrorism and Responses to Terrorism (START), which documents how deplatforming reduces the reach of violent movements.

The aftermath reveals complex tensions. While platforms like Twitter implemented stricter moderation policies after January 6th, alternative platforms like Truth Social emerged as havens for election disinformation. Meanwhile, Dempsey's 20-year prison sentence underscores the legal system's recognition of the real-world consequences of online radicalization. As federal prosecutors noted in his case, the transition from online threats to physical violence represents 'a direct line from the digital sphere to bodily harm.' That phenomenon demands ongoing scrutiny of how technology companies manage inflammatory content during volatile political periods.
