Search Results: "MI300X"

Found 3 articles

Chips

ISSCC 2026: AMD's MI355X doubles throughput with fewer compute units - and challenges Nvidia's GB200

2/27/2026

AI

AMD Achieves Milestone in Large-Scale MoE Pretraining with ZAYA1 on MI300X and Pollara

The quest for hardware diversit...

11/27/2025

Hardware

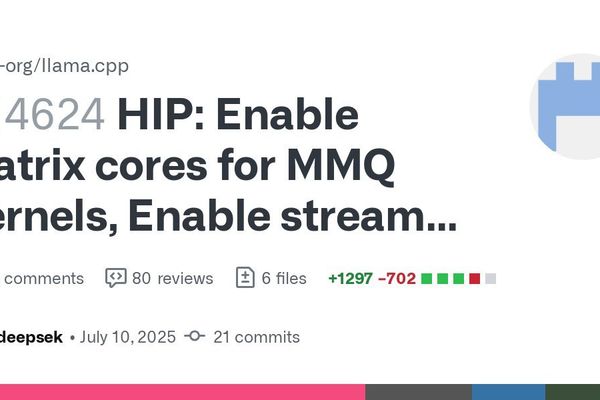

AMD Matrix Cores Supercharge Llama.cpp: MFMA and Stream-K Unlock 9.5K Tokens/sec on MI300X

The CDNA 3 Breakthrough: A landmark pull request in llama.cpp has fundamentally transformed AMD GPU performance for l...

7/28/2025