Cursor has published Agent Trace, an open specification for tracking AI-generated code contributions in version control systems, addressing the growing need for attribution as AI coding assistants become mainstream.

Released as a Request for Comments (RFC), Agent Trace is a draft specification aimed at standardizing how AI-generated code is attributed in software projects. The proposal defines a vendor-neutral format for recording AI contributions alongside human authorship in version-controlled codebases.

The Attribution Challenge in AI-Assisted Development

As AI coding assistants like Cursor, GitHub Copilot, and others become ubiquitous in development workflows, a critical gap has emerged in how we track code provenance. Traditional version control systems like Git provide detailed histories of who changed what and when, but a commit records only its author and committer, so it cannot show whether a given line was written by a person or generated by an AI tool.

This limitation becomes particularly problematic as AI agents take on larger roles in software development. When reviewing code changes, developers currently have no standard way to know whether a particular function was written by a colleague, an AI assistant, or a combination of both. This lack of context can complicate code reviews, debugging sessions, and long-term maintenance.

How Agent Trace Works

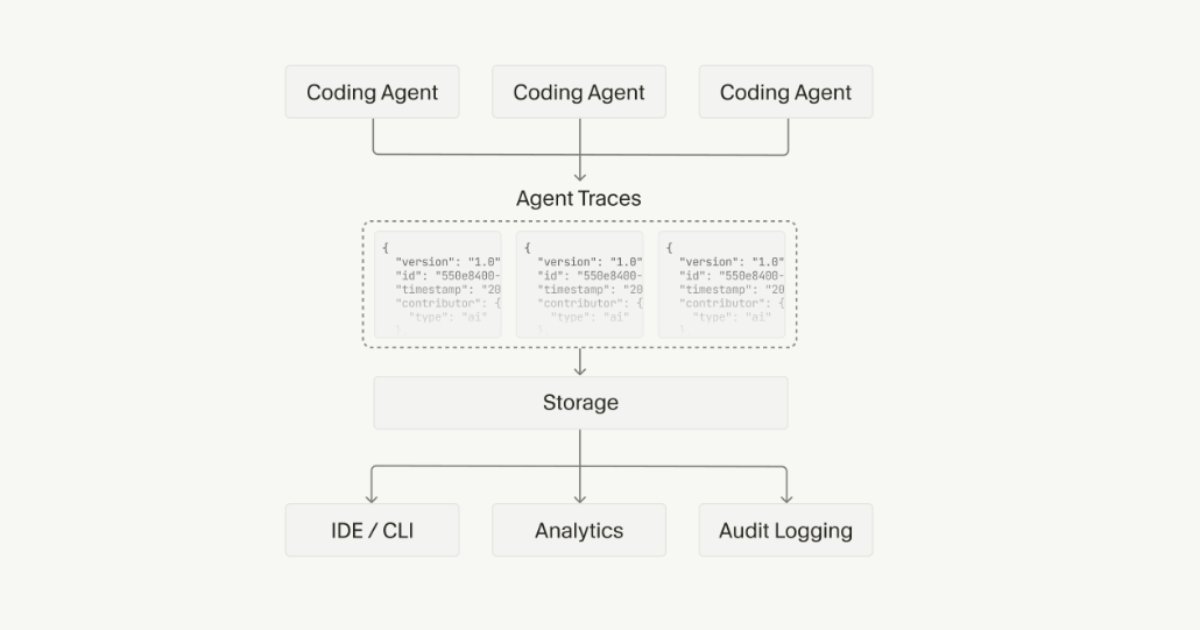

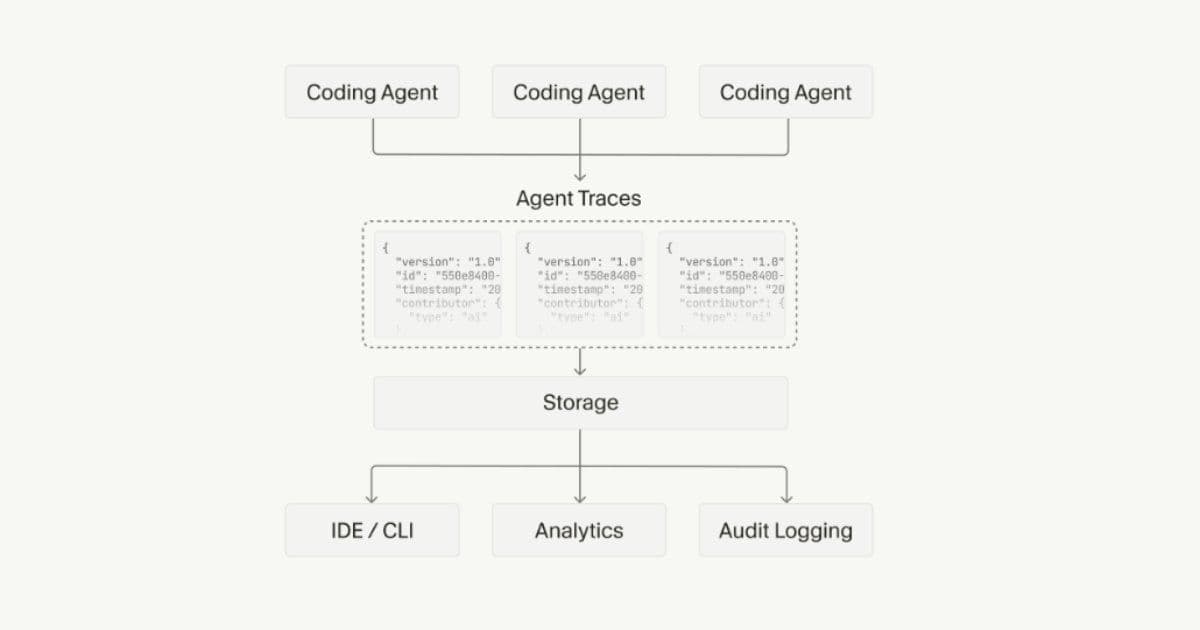

Agent Trace introduces a JSON-based "trace record" format that connects code ranges to the conversations and contributors behind them. The specification allows for granular attribution at both file and line levels, categorizing contributions as human, AI, mixed, or unknown.
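To make the idea of a trace record concrete, here is a minimal Python sketch that builds one with the standard library. Only the four contributor categories come from the description above; the field names (`vcs`, `files`, `attributions`, `contributor_type`, `conversation_url`, `model_id`, `ranges`), the `make_trace_record` helper, and the `com.example.editor` metadata key are illustrative assumptions, not copied from the Agent Trace schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional

# The four categories described for Agent Trace; every field name below is hypothetical.
CONTRIBUTOR_TYPES = {"human", "ai", "mixed", "unknown"}


@dataclass
class LineRange:
    start_line: int  # 1-based, inclusive (assumed convention)
    end_line: int


@dataclass
class Attribution:
    contributor_type: str
    conversation_url: Optional[str] = None  # link to the conversation behind the change
    model_id: Optional[str] = None  # optional model identifier for AI contributions
    ranges: list[LineRange] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject anything outside the four documented categories.
        if self.contributor_type not in CONTRIBUTOR_TYPES:
            raise ValueError(f"unknown contributor type: {self.contributor_type!r}")


def make_trace_record(path: str, revision: str, attributions: list[Attribution],
                      metadata: Optional[dict] = None) -> str:
    """Serialize a hypothetical trace record for one file at one revision."""
    record = {
        "vcs": {"type": "git", "revision": revision},
        "files": [{"path": path, "attributions": [asdict(a) for a in attributions]}],
        # Vendor data sits under namespaced keys so other tools can ignore it safely.
        "metadata": metadata or {},
    }
    return json.dumps(record, indent=2)


if __name__ == "__main__":
    print(make_trace_record(
        "src/app.py",
        "3f2a9c1",
        [
            Attribution("ai", "https://example.com/conversations/1", "example-model",
                        [LineRange(10, 24)]),
            Attribution("human", ranges=[LineRange(1, 9)]),
        ],
        metadata={"com.example.editor": {"session": "42"}},
    ))
```

Using dataclasses keeps the validation of contributor types in one place, while `asdict` turns the nested structure into plain dictionaries that `json` can serialize directly.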

Key features of the specification include:

- Structured attribution: Each code change can be linked to specific conversations and contributors

- Model identification: Optional model identifiers for AI-generated code, enabling precise attribution without vendor lock-in

- Storage flexibility: The spec is intentionally storage-agnostic, allowing implementations to store trace records as files, git notes, database entries, or other mechanisms

- Version control compatibility: Supports multiple systems including Git, Jujutsu, and Mercurial

- Content hashing: Optional hashes help track attributed code even when moved or refactored

- Extensibility: Namespaced keys allow vendors to add metadata without breaking compatibility
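The content-hashing feature can be illustrated with a short Python sketch: hash the lines of an attributed range, then search a later version of the file for a window with the same hash. The `range_hash` and `relocate` names, the `sha256:` prefix, the trailing-whitespace normalization, and the brute-force search are all assumptions chosen for clarity; the specification's actual hashing rules may differ.

```python
import hashlib
from typing import Optional


def range_hash(lines: list[str]) -> str:
    """Hash a block of lines, ignoring trailing whitespace (an assumed normalization)."""
    normalized = "\n".join(line.rstrip() for line in lines)
    return "sha256:" + hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def relocate(new_text: str, length: int, expected_hash: str) -> Optional[tuple[int, int]]:
    """Find where a previously hashed range of `length` lines now lives in `new_text`.

    Returns a 1-based inclusive (start, end) pair, or None if the code was changed
    or removed so that no window matches.
    """
    lines = new_text.splitlines()
    for start in range(len(lines) - length + 1):
        if range_hash(lines[start:start + length]) == expected_hash:
            return start + 1, start + length
    return None


if __name__ == "__main__":
    old = "a\nb\ndef f():\n    return 1\n"
    h = range_hash(old.splitlines()[2:4])  # attributed range was lines 3-4
    new = "x\ny\nz\na\nb\ndef f():\n    return 1\n"  # three lines inserted above it
    print(relocate(new, 2, h))
```

A linear scan like this is fine for a single file; a real tool would probably combine it with diff information rather than rehashing every window.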

Design Philosophy and Scope

The specification deliberately avoids defining UI requirements or ownership semantics, focusing narrowly on attribution and traceability. It also does not attempt to assess code quality or track training data provenance, leaving these concerns to other tools and standards.

This focused approach reflects Cursor's intent to create a foundational layer that can be built upon by the broader development community. By not prescribing specific storage mechanisms or user interfaces, Agent Trace can be adopted across different development environments and workflows.

Early Developer Reactions

Early feedback from developers has been notably positive, with particular emphasis on practical benefits for common development challenges.

One developer on X wrote: "This is what you do when you're serious about improving the state of agent-generated mess. Can't wait to try this out in reviews."

Another highlighted reproducibility as a key benefit: "Teams stop shipping when they can't debug why an agent veered off path. Trace solves that. Good move opening it up."

These reactions suggest that developers see immediate value in having better context for AI-generated code, particularly for debugging and review workflows where understanding the origin of code changes is crucial.

Implementation and Adoption

Cursor has included a reference implementation demonstrating how AI coding agents can automatically capture trace records as files change. While the example is built around Cursor's own tooling, the patterns are intended to be reusable across other editors and agents.
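Cursor's reference implementation is not reproduced here. As a rough, hypothetical illustration of the same pattern, the sketch below compares a file before and after an agent edit using Python's standard `difflib` module and emits an AI attribution for the new or rewritten lines. The function names `changed_ranges` and `record_agent_edit` and the output shape are assumptions, not part of Cursor's tooling or the spec.

```python
import difflib


def changed_ranges(before: str, after: str) -> list[tuple[int, int]]:
    """Return 1-based inclusive line ranges in `after` that were inserted or replaced."""
    matcher = difflib.SequenceMatcher(a=before.splitlines(), b=after.splitlines(),
                                      autojunk=False)
    ranges = []
    for tag, _i1, _i2, j1, j2 in matcher.get_opcodes():
        # Pure deletions leave no lines in `after`, so there is nothing to attribute.
        if tag in ("replace", "insert") and j2 > j1:
            ranges.append((j1 + 1, j2))
    return ranges


def record_agent_edit(path: str, before: str, after: str, model_id: str) -> dict:
    """Build a hypothetical attribution entry for an edit made by an AI agent."""
    return {
        "path": path,
        "contributor": {"type": "ai", "model_id": model_id},
        "ranges": [{"start_line": s, "end_line": e}
                   for s, e in changed_ranges(before, after)],
    }


if __name__ == "__main__":
    entry = record_agent_edit("f.py", "a\nb\nc\n", "a\nB\nc\nd\n", "example-model")
    print(entry["ranges"])
```

In an editor, a function like this would run from whatever hook fires after the agent writes a file; how that hook is wired up depends on the tool.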

The open nature of the specification, combined with its practical focus, could make it easier to adopt across the development tool ecosystem. As more teams adopt AI-assisted development practices, having standardized attribution becomes increasingly important for collaboration, code review, and long-term project maintenance.

Open Questions and Future Development

As an RFC, Agent Trace intentionally leaves several questions unresolved, including how to handle merges, rebases, and large-scale agent-driven changes. These are complex challenges that will require community input and real-world testing to address effectively.

Cursor presents this proposal as a starting point for a shared standard rather than a complete solution. The company is actively seeking feedback from the development community to refine the specification and address edge cases that may arise in production environments.

The Broader Context

The introduction of Agent Trace comes amid rapid evolution in AI-assisted development tools. Recent announcements from major players in the space include:

- Vercel's Skills.sh, an open ecosystem for agent commands

- OpenAI's Prism, a free LaTeX-native workspace with integrated AI

- Anthropic's updated Constitution for Claude

- Google's TranslateGemma open models

These developments reflect a broader industry trend toward more sophisticated AI integration in development workflows, making standards like Agent Trace increasingly relevant.

Implications for Development Teams

For development teams adopting AI coding assistants, Agent Trace offers several practical benefits:

- Improved code reviews: Reviewers can understand which parts of a change came from AI assistance

- Better debugging: When issues arise, teams can trace whether problems originated from AI-generated code

- Knowledge transfer: Teams can see which parts of the codebase were written by which colleagues and which came from AI tools

- Compliance and auditing: Clear attribution records for regulatory or organizational requirements
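For the review and auditing uses listed above, a reviewer-facing tool might roll attribution entries up into per-file line counts. The sketch below assumes entries shaped like `{"path": ..., "contributor": {"type": ...}, "ranges": [...]}`, an illustrative format rather than the spec's, and the `attribution_summary` name is hypothetical. Where ranges overlap, later entries override earlier ones, which is also an assumption.

```python
from collections import Counter, defaultdict


def attribution_summary(entries: list[dict]) -> dict[str, dict[str, int]]:
    """Count attributed lines per contributor type for each file."""
    # path -> {line number -> contributor type}; tracking lines avoids double counting.
    owners: defaultdict = defaultdict(dict)
    for entry in entries:
        kind = entry["contributor"]["type"]
        for r in entry["ranges"]:
            for line in range(r["start_line"], r["end_line"] + 1):
                owners[entry["path"]][line] = kind  # later entries win on overlap
    return {path: dict(Counter(lines.values())) for path, lines in owners.items()}


if __name__ == "__main__":
    entries = [
        {"path": "a.py", "contributor": {"type": "ai"},
         "ranges": [{"start_line": 1, "end_line": 10}]},
        {"path": "a.py", "contributor": {"type": "human"},
         "ranges": [{"start_line": 5, "end_line": 6}]},
    ]
    print(attribution_summary(entries))
```

Line counts are a coarse signal; the point is only that a machine-readable record makes such summaries straightforward to compute.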

As AI agents become more common in software development workflows, standardized attribution mechanisms are likely to matter more for code review, collaboration, and long-term project maintenance.

The success of Agent Trace will depend on adoption by major development tool providers and the community's willingness to implement and extend the standard. Given the growing importance of AI in development workflows, the need for such attribution standards is likely to become increasingly urgent in the coming years.
