A developer's experiment shows how AI agentic loops, in which a model repeatedly runs, debugs, and refines its own code, made it possible to build a working flexbox layout algorithm in about three hours. By pairing a precise feedback mechanism with autonomous iteration, the approach compressed work that previously took weeks into a single session, which suggests a significant shift in AI-assisted development.

For decades, software engineering demanded meticulous human debugging and testing. Now AI agentic loops are changing the rules, letting models iterate toward solutions with minimal human intervention. A recent experiment by Colin Eberhardt at Scott Logic shows the potential: implementing the CSS flexbox layout algorithm in three hours using GitHub Copilot in agent mode.

What Are Agentic Loops?

Traditional AI-assisted coding relies on "one-shot" generation, in which the developer hopes an LLM produces correct code from a single prompt. This works for small snippets but breaks down as complexity grows. Agentic loops instead mimic the human development workflow:

Think → Write → Execute → Verify → Repeat

When an AI agent is given tools to evaluate its progress (e.g., test runners, reference implementations) and permission to iterate, it can debug and refine code on its own until the objective is met. Simon Willison has called designing such loops "a critical skill," a view this experiment bears out.
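The Think → Write → Execute → Verify → Repeat cycle can be reduced to a small driver loop. The following is a minimal Python sketch, not a description of how Copilot's agent mode works internally: the hypothetical `propose` callback stands in for the model (think and write), the hypothetical `verify` callback stands in for the tools it runs (execute and verify), and `max_iterations` is an assumed safety cap.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Attempt:
    code: str       # the candidate produced in this iteration
    passed: bool    # did verification succeed?
    feedback: str   # what the verifier reported (fed into the next proposal)


def agentic_loop(
    propose: Callable[[str, str], str],          # hypothetical "think + write" step (the model)
    verify: Callable[[str], Tuple[bool, str]],   # hypothetical "execute + verify" step (the tools)
    seed: str,
    max_iterations: int = 10,                    # assumed cap so the loop always terminates
) -> List[Attempt]:
    history: List[Attempt] = []
    code, feedback = seed, ""
    for _ in range(max_iterations):
        code = propose(code, feedback)           # write a new candidate using the last feedback
        passed, feedback = verify(code)          # run it and capture the result
        history.append(Attempt(code, passed, feedback))
        if passed:                               # objective met, so stop repeating
            break
    return history


# Toy stand-ins: the "program" is just a number the loop must drive to 3.
def toy_propose(code: str, feedback: str) -> str:
    return str(int(code) + 1)


def toy_verify(code: str) -> Tuple[bool, str]:
    value = int(code)
    return value == 3, f"expected 3, got {value}"


history = agentic_loop(toy_propose, toy_verify, seed="0")
print(len(history), history[-1].passed)  # 3 True
```

The key design choice is that `verify` returns feedback text as well as a pass/fail flag, so each new proposal can be conditioned on what went wrong in the previous attempt.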

The Flexbox Challenge

The project took inspiration from Christopher Chedeau's 2015 feat: building React Native's JavaScript flexbox implementation in two weeks via "extreme TDD." Recreating this with AI required:

- Seed Code: A trivial initial implementation (~10 lines) for "single-line, fixed-size items."

- Reference Validation: A Node.js harness to compare outputs against browser-rendered layouts (ground truth).

- Autonomous Workflow: Copilot instructions directing the agent to:

  - Generate test cases

  - Validate against browser reference

  - Implement incremental features

  - Fix failures until tests pass
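The seed code and the reference check might look something like the sketch below. It is an illustration under stated assumptions, not the experiment's actual code (which used a Node.js harness): the `Box`, `layout_single_line`, and `diff_against_reference` names are hypothetical, every item is assumed to have an explicit width and height, the container is assumed to be a `flex-direction: row` box with no padding, and the reference boxes are hard-coded stand-ins for values a real harness would measure in a browser.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Box:
    x: float
    y: float
    width: float
    height: float


def layout_single_line(item_sizes: List[Tuple[float, float]]) -> List[Box]:
    """Hypothetical seed layout: fixed-size items placed left to right on one line."""
    boxes = []
    cursor = 0.0  # main-axis position of the next item, starting at the container's left edge
    for width, height in item_sizes:
        boxes.append(Box(cursor, 0.0, width, height))
        cursor += width
    return boxes


def diff_against_reference(actual: List[Box], expected: List[Box]) -> List[str]:
    """Compare computed boxes with reference boxes; an empty list means the test passes."""
    if len(actual) != len(expected):
        return [f"expected {len(expected)} boxes, got {len(actual)}"]
    return [
        f"item {i}: expected {e}, got {a}"
        for i, (a, e) in enumerate(zip(actual, expected))
        if a != e
    ]


# Hypothetical reference boxes standing in for values measured in a real browser.
reference = [Box(0, 0, 50, 20), Box(50, 0, 30, 20)]
print(diff_against_reference(layout_single_line([(50, 20), (30, 20)]), reference))  # []
```

Returning readable mismatch messages, rather than a bare boolean, gives the agent something concrete to reason about when a generated test fails.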

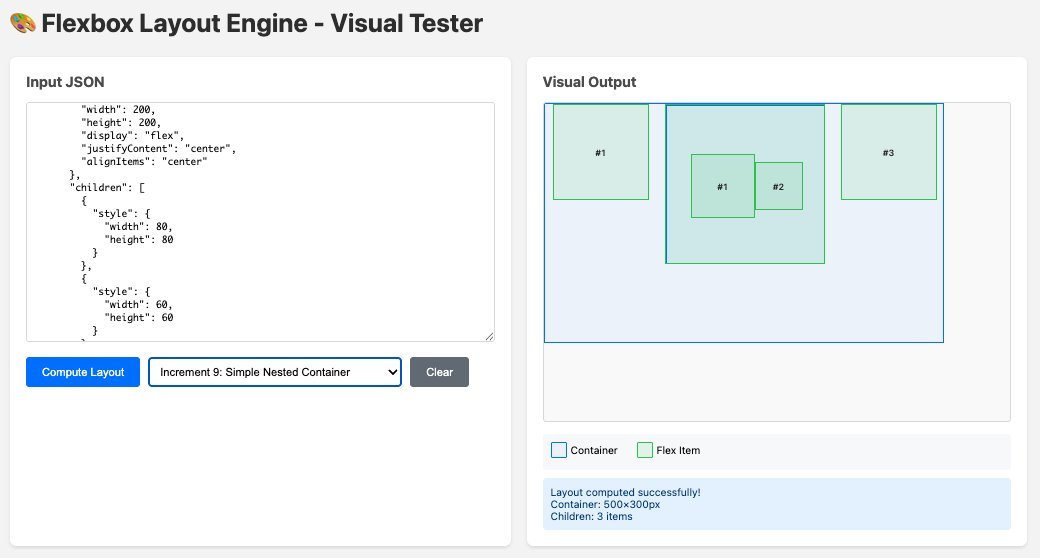

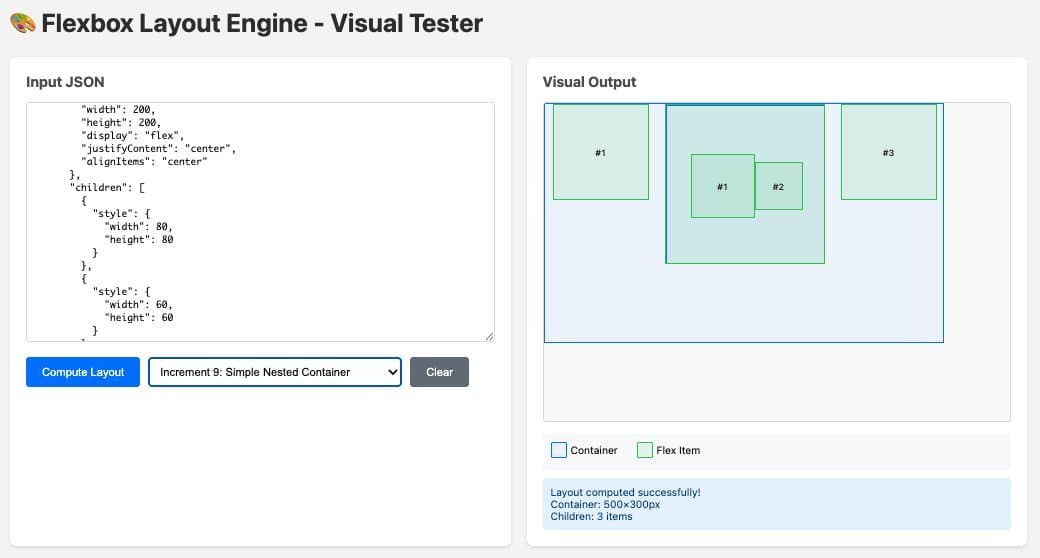

The visual test suite confirmed the AI-generated flexbox implementation matched browser behavior.

The Autonomous Workflow in Action

The agent progressed through nine increments—adding features like justify-content, cross-axis alignment, and flex growth. Key observations:

- Precision Issues: Early rounding errors prompted fuzzy equality checks (±0.02px).

- Creative Debugging: When tests failed, the agent logged intermediate steps, explored dead ends, and backtracked—mirroring human problem-solving. Its chain-of-thought read like a developer's internal monologue:

"Actually wait... this is strange... my understanding is wrong."

- Self-Improvement: After each increment, the agent updated its own instructions with what it had learned (e.g., how to handle margins in cross-axis calculations).

- Resilience: When a file became corrupted, the agent restored it from Git and proceeded more cautiously.
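The ±0.02px tolerance matters as soon as features such as flex growth produce fractional sizes. The sketch below is a simplified, hypothetical take on flex-grow that only distributes positive free space (no shrinking, no min/max clamping, and none of the spec's iterative freezing of items), though it does apply the rule that a flex-grow sum below 1 distributes only that fraction of the free space. The function names are illustrative, and the reference value 33.33 is an invented example of a browser-reported width, not a measured result.

```python
from typing import List

TOLERANCE_PX = 0.02  # the ±0.02px tolerance described in the experiment


def grow_items(container_width: float, base_widths: List[float], grow_factors: List[float]) -> List[float]:
    """Simplified, hypothetical flex-grow: share positive free space by flex-grow ratio.

    Ignores flex-shrink, min/max constraints and the spec's freezing loop.
    """
    free = container_width - sum(base_widths)
    total_grow = sum(grow_factors)
    if free <= 0 or total_grow == 0:
        return list(base_widths)  # nothing to distribute
    # When the flex-grow factors sum to less than 1, only that fraction of the free space is used.
    distributable = free * min(1.0, total_grow)
    return [b + distributable * g / total_grow for b, g in zip(base_widths, grow_factors)]


def approx_equal(actual: float, expected: float, tol: float = TOLERANCE_PX) -> bool:
    """Tolerance-based comparison that absorbs sub-pixel rounding differences."""
    return abs(actual - expected) <= tol


widths = grow_items(100, [0, 0, 0], [1, 1, 1])  # three equal items: 100/3 px each
browser_width = 33.33                           # invented example of a rounded reference value
print([w == browser_width for w in widths])     # exact check fails: [False, False, False]
print([approx_equal(w, browser_width) for w in widths])  # tolerant check passes: [True, True, True]
```

Three equal items in a 100px container come out at 100/3 px each, so an exact comparison against a rounded reference value fails even though the layout is correct; the tolerance check accepts it.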

After three hours, the agent had produced an 800-line algorithm backed by 350 tests, a fraction of the two weeks Chedeau's effort took.

Lessons and Implications

- Feedback Trumps Prompt Engineering: A robust validation mechanism proved more valuable than perfect prompts. The browser reference implementation enabled rapid, accurate iteration.

- Loop Design is Key: Developers must architect observable, tunable workflows. Tweaks mid-process—like adding CSS property validation—prevented recurring failures.

- Not All Problems Fit: Flexbox was ideal—well-documented, with clear validation criteria. Legacy migrations or ambiguous tasks may need different approaches.
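The source does not say exactly what the mid-process CSS property validation checked. One plausible form, sketched here purely as an assumption, is to reject generated test cases that use properties the implementation does not support (including typos) before they are run against the browser. The `SUPPORTED_PROPERTIES` set and both function names are hypothetical, and the parser only handles simple inline `property: value` declarations.

```python
from typing import Dict, List

# Hypothetical, illustrative set of properties the implementation claims to support.
SUPPORTED_PROPERTIES = {
    "display", "flex-direction", "justify-content", "align-items",
    "flex-grow", "width", "height", "margin",
}


def parse_declarations(css: str) -> Dict[str, str]:
    """Parse simple inline-style text such as 'width: 10px; flex-grow: 1'."""
    declarations = {}
    for part in css.split(";"):
        if not part.strip():
            continue  # tolerate trailing semicolons and empty segments
        name, sep, value = part.partition(":")
        if not sep:
            raise ValueError(f"malformed declaration: {part.strip()!r}")
        declarations[name.strip().lower()] = value.strip()
    return declarations


def validate_test_case(styles: List[str], supported=frozenset(SUPPORTED_PROPERTIES)) -> List[str]:
    """Return errors for a generated test case (one style string per element); empty means valid."""
    errors = []
    for i, css in enumerate(styles):
        try:
            declarations = parse_declarations(css)
        except ValueError as exc:
            errors.append(f"element {i}: {exc}")
            continue
        for name in declarations:
            if name not in supported:
                errors.append(f"element {i}: unsupported property {name!r}")
    return errors


print(validate_test_case(["display: flex; justify-content: center", "flex-grw: 1"]))
# ["element 1: unsupported property 'flex-grw'"]
```

In a loop like the one described, these messages could be handed back to the agent so it fixes a malformed test case instead of chasing a failure the layout code never caused.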

As AI agents evolve, their ability to "learn" within loops, through reflection and instruction updates, could make complex domains accessible to many more developers. One participant noted: "Agents can clearly think."

The experiment underscores a seismic shift: Developers who master agentic loop design will unlock unprecedented productivity. Potential applications span UI automation, test generation, and legacy modernization. As AI begins to truly collaborate—not just assist—the future of coding is iterative, autonomous, and relentlessly self-correcting.

Source: The Power of Agentic Loops by Colin Eberhardt, Scott Logic
