NewStore is transforming its QA process by deploying AI agents powered by Gemini 2.5 Flash to test shopping apps across 40+ brands, cutting manual effort by about 20% so far with a target of 45%. This deep dive explores their custom Python framework, which uses accessibility APIs and dynamic tooling to adapt to UI changes, offering a scalable alternative to flaky traditional automation. The move signals a broader shift in which AI is not just a tool but a collaborative tester in CI/CD pipelines.

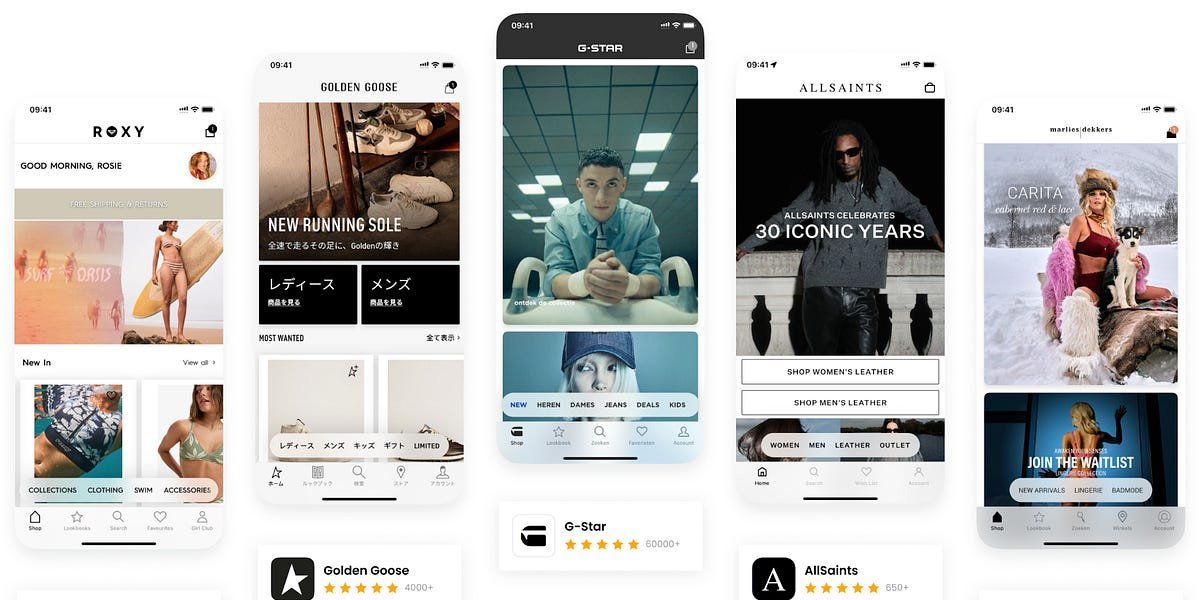

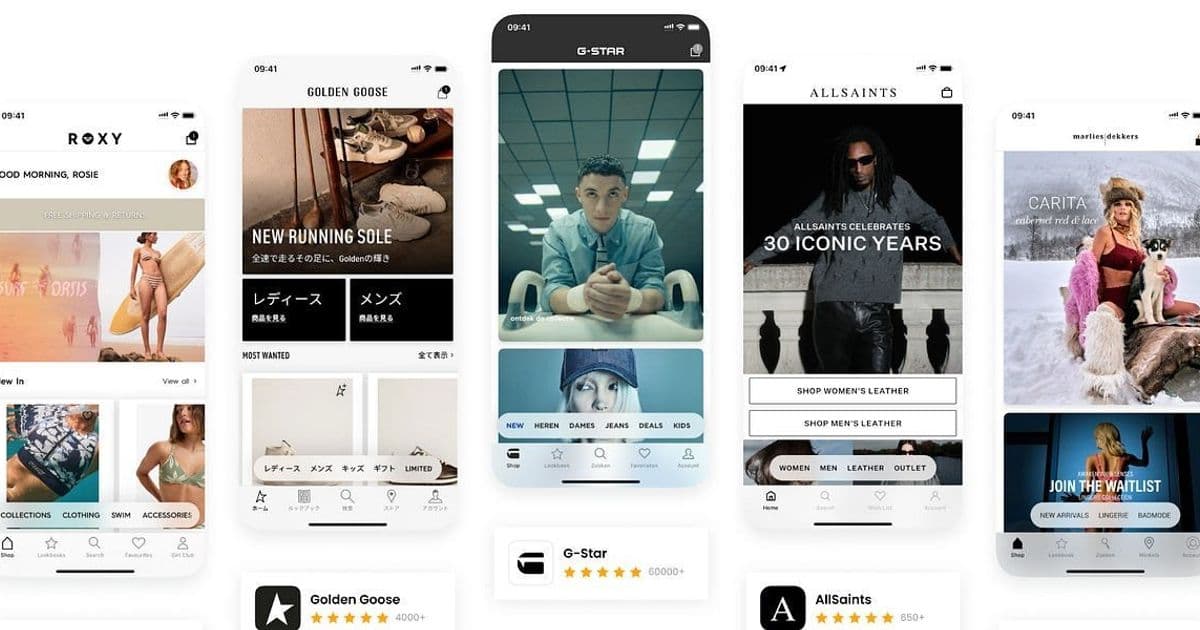

AI's move into software engineering is no longer theoretical; it is reshaping workflows in real time. At NewStore, a platform powering shopping apps for over 20 brands like Nike and Ganni across 40 countries, engineers are confronting the inefficiencies of manual testing head-on. As Thomas Visser details in the NewStore Tech Blog, their solution is an AI agent that simulates user behavior to validate apps, turning weeks of repetitive checks into automated, adaptive workflows. This isn't just about speed; it's a fundamental rethinking of quality assurance in a multi-app ecosystem.

The Testing Bottleneck: Scale Meets Human Limits

NewStore's architecture hinges on shared native frameworks (iOS/Android) handling 99% of app logic, with brand-specific tweaks making up the rest. While the frameworks undergo rigorous automated testing via Maestro and snapshots, the apps themselves, each a unique combination of framework and branding, require manual validation. With 40+ apps updated bi-weekly, the math is brutal: every test case is multiplied by 40 apps. "Anything times 40 quickly adds up," notes Visser. Previous attempts at traditional UI automation failed due to environmental volatility: sales, stock changes, or UI tweaks broke static scripts. The team needed a tester that could think, not just follow a map.

Enter the AI Agent: Gemini-Powered and Tool-Driven

The breakthrough came from treating large language models (LLMs) as "users" who intuitively navigate apps. NewStore's Python-based agent, built on Google's Gemini 2.5 Flash API, interacts with devices through a suite of tools that mirror real-world actions. Here’s how it works:

- Test Cases as English Scripts: The agent starts with human-readable instructions, like validating search functionality: "Launch the app, navigate to a category, remember a product, search for it, and verify results."

- Observations via Accessibility APIs: Using `ui_describe`, the agent pulls a JSON hierarchy of on-screen elements from iOS/UIAutomation or Android/Maestro, assigning IDs for reference. This leverages the same data screen readers use, ensuring robustness across brands.

- Interaction Tools: The LLM calls actions like `tap`, `type`, or `scroll` based on the UI description. Crucially, it provides rationales for each step, guided by a system prompt enforcing expert behavior:

  "You are a helpful expert UI tester agent... Use ONLY the provided tools. When you call a tool, you MUST also respond with a rationale for the action."

- Dynamic Knowledge Integration: Brand-specific context comes from `agent.md` files loaded at runtime, allowing the agent to adapt without hardcoded selectors.
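To make the loop described above concrete, here is a minimal sketch of how an observe-and-act cycle with rationale-checked tool calls might look. It is not NewStore's code: `FakeDevice`, `run_step`, `run_test`, `scripted_model`, the `done` pseudo-tool, and every tool signature are assumptions made for illustration, and a scripted function stands in for the Gemini API so the example runs offline.

```python
from dataclasses import dataclass, field


@dataclass
class FakeDevice:
    """Hypothetical stand-in for a simulator exposing an accessibility tree."""
    screen: dict
    log: list = field(default_factory=list)


def ui_describe(device):
    """Flatten the accessibility tree and assign each element a numeric id."""
    elements = []

    def walk(node):
        elements.append(
            {"id": len(elements), "role": node.get("role"), "label": node.get("label")}
        )
        for child in node.get("children", []):
            walk(child)

    walk(device.screen)
    return elements


def tap(device, element_id):
    device.log.append(("tap", element_id))
    return "ok"


def type_text(device, text):
    device.log.append(("type", text))
    return "ok"


def scroll(device, direction):
    device.log.append(("scroll", direction))
    return "ok"


# Tool names follow the article; the argument names are assumptions.
TOOLS = {"ui_describe": ui_describe, "tap": tap, "type": type_text, "scroll": scroll}


def run_step(device, call):
    """Execute one proposed tool call, rejecting calls that lack a rationale."""
    if not call.get("rationale"):
        raise ValueError("tool call rejected: missing rationale")
    tool = TOOLS.get(call["tool"])
    if tool is None:
        raise ValueError(f"unknown tool: {call['tool']}")
    return tool(device, **call.get("args", {}))


def run_test(device, model, max_steps=20):
    """Ask the model for the next action until it reports a verdict via 'done'."""
    history = []
    for _ in range(max_steps):
        call = model(history)
        if call["tool"] == "done":  # hypothetical verdict signal
            return call["args"]["passed"], history
        history.append({"call": call, "result": run_step(device, call)})
    return False, history  # step budget exhausted counts as a failure


def scripted_model(calls):
    """Stand-in for the LLM: replays a fixed list of tool calls in order."""
    return lambda history: calls[len(history)]


device = FakeDevice(
    screen={"role": "window", "label": "Home",
            "children": [{"role": "button", "label": "Search"}]}
)
calls = [
    {"tool": "ui_describe", "args": {}, "rationale": "See what is on screen"},
    {"tool": "tap", "args": {"element_id": 1}, "rationale": "Open search"},
    {"tool": "done", "args": {"passed": True}, "rationale": "Search opened"},
]
passed, history = run_test(device, scripted_model(calls))
```

In a real agent the `model` callable would wrap an LLM request that includes the system prompt, the English test case, and any brand knowledge, and the step budget gives a bounded way to fail a run in which the model wanders off course.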

Integration and Impact: From CI Pipelines to Slack Alerts

The agent runs on an M4 Mac Mini in GitLab CI, launching simulated devices, proxying network traffic, and capturing artifacts like HAR files and screen recordings. Results are emitted as JUnit XML for GitLab dashboards, with failures flagged in Slack. It already handles core flows like checkout validation, on the reasoning that "if the agent completes checkout, so can a user." That has freed engineers from about 20% of manual work so far, with a target of 45%. But it's not flawless. LLM unpredictability sometimes causes "left turns," requiring artifact reviews to tweak prompts or knowledge files. Yet, compared to traditional UI tests, this approach thrives on change: tests written in English require minimal maintenance and run against production environments.
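As a rough illustration of the reporting step, the sketch below turns a list of agent verdicts into a JUnit-style XML document using Python's standard library. The `AgentResult` type, the `to_junit_xml` helper, and the sample results are hypothetical; the article does not show how NewStore builds its reports.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class AgentResult:
    """Hypothetical record of one agent-driven test run."""
    name: str
    passed: bool
    seconds: float
    message: str = ""


def to_junit_xml(suite_name, results):
    """Serialize results as a single JUnit <testsuite> element."""
    failures = sum(1 for r in results if not r.passed)
    suite = ET.Element(
        "testsuite",
        name=suite_name,
        tests=str(len(results)),
        failures=str(failures),
        time=f"{sum(r.seconds for r in results):.1f}",
    )
    for r in results:
        case = ET.SubElement(
            suite, "testcase", name=r.name, classname=suite_name, time=f"{r.seconds:.1f}"
        )
        if not r.passed:
            # The failure text is where an agent's final rationale could go.
            failure = ET.SubElement(case, "failure", message=r.message)
            failure.text = r.message
    return ET.tostring(suite, encoding="unicode")


# Made-up sample data for illustration only.
report = to_junit_xml(
    "brand-app",
    [
        AgentResult("checkout", True, 42.0),
        AgentResult("search", False, 30.5, "product not found in results"),
    ],
)
```

Writing the returned string to a file and declaring that file as a JUnit report artifact in the CI job is what lets GitLab show per-test results in its pipeline views.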

Why This Matters Beyond NewStore

This experiment underscores AI's role in solving procedural problems. By treating testing as a language-guided exploration, NewStore sidesteps the brittleness of element-specific scripts. The cost-effectiveness of Gemini 2.5 Flash ($0.50 per million tokens) makes it feasible at scale, though future shifts to more capable models could reduce quirks. As Visser hints, the next phase involves multi-agent collaboration for bug reproduction—turning the AI into a "testing buddy." For developers drowning in cross-platform validation, this isn't just efficiency; it's a blueprint for human-AI symbiosis in quality engineering. The days of all-manual app testing are numbered, and the replacement is learning on the job.
