Apple researchers have developed VSSFlow, a unified AI model that can generate both sound effects and speech from silent videos using a single system, achieving state-of-the-art results through innovative joint training.

Apple researchers, in collaboration with academics from Renmin University of China, have unveiled VSSFlow, an AI model that can generate both sound effects and speech from silent videos using a single unified system. The breakthrough addresses a long-standing challenge in AI where models typically excel at either sound effects or speech generation, but rarely both.

The challenge of unified audio generation

Traditional video-to-sound models have historically struggled with a fundamental limitation: they're either good at generating environmental sounds or speech, but not both. Text-to-speech models, while proficient at generating spoken words, fail to produce non-speech sounds because they're designed for a different purpose entirely. Previous attempts to unify these capabilities often assumed that joint training would degrade performance, leading to complex multi-stage pipelines that separated speech and sound training.

How VSSFlow works

The architecture behind VSSFlow is particularly innovative. The model leverages multiple concepts from generative AI, including converting transcripts into phoneme sequences of tokens and using flow-matching to reconstruct sound from noise. This approach essentially trains the model to efficiently start from random noise and end up with the desired audio signal.

At its core, VSSFlow employs a 10-layer architecture that blends video and transcript signals directly into the audio generation process. This allows the model to handle both sound effects and speech within a single system, rather than requiring separate models or complex pipelines.

The surprising benefit of joint training

Perhaps most interestingly, the researchers discovered that jointly training on speech and sound actually improved performance on both tasks, rather than causing the two to compete or degrade overall performance. This mutual boosting effect challenges the conventional wisdom that specialized models always outperform unified approaches.

To train VSSFlow, the researchers fed the model a mix of three types of data: silent videos paired with environmental sounds (V2S), silent talking videos paired with transcripts (VisualTTS), and text-to-speech data (TTS). This allowed the model to learn both sound effects and spoken dialogue together in a single end-to-end training process.

Fine-tuning for simultaneous generation

An important technical detail is that out of the box, VSSFlow couldn't automatically generate background sound and spoken dialogue at the same time in a single output. To achieve this capability, the researchers fine-tuned their already-trained model on a large set of synthetic examples where speech and environmental sounds were mixed together. This additional training step taught the model what both should sound like simultaneously.

Performance and results

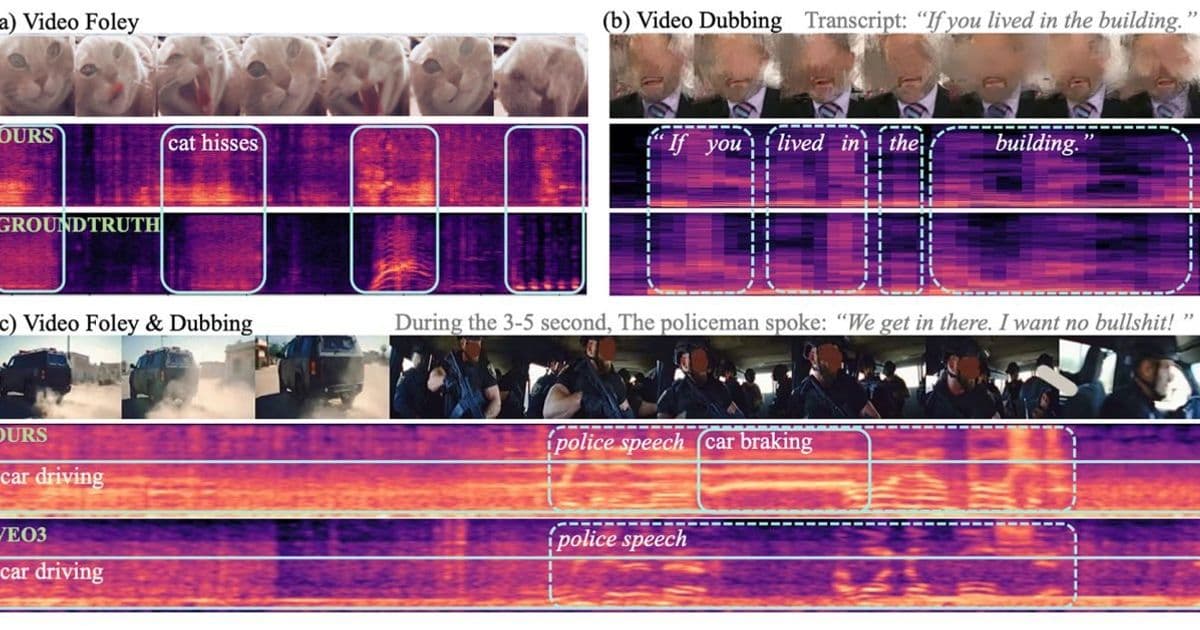

When tested against task-specific models built only for sound effects or only for speech, VSSFlow delivered competitive results across both tasks. The unified system led on several key metrics despite its single-system approach, demonstrating that the joint training methodology was effective.

The researchers have published multiple demos showcasing sound, speech, and joint-generation results, including comparisons with alternative models. These demonstrations highlight the practical capabilities of the system and provide concrete examples of its performance.

Open source and future directions

In a move that will likely accelerate adoption and further research, the team has open-sourced VSSFlow's code on GitHub. They're also working to open the model's weights and provide an inference demo, making the technology accessible to the broader research community.

Looking ahead, the researchers identified several promising directions for future work. The scarcity of high-quality video-speech-sound data remains a limiting factor for unified generative models. Additionally, developing better representation methods for sound and speech that can preserve speech details while maintaining compact dimensions represents a critical challenge.

The work establishes a new paradigm for video-conditioned sound and speech generation, demonstrating an effective condition aggregation mechanism for incorporating speech and video conditions into the DiT architecture. By revealing the mutual boosting effect of sound-speech joint learning, VSSFlow highlights the value of unified generation models in AI audio synthesis.

For those interested in exploring the technical details, the full research paper titled "VSSFlow: Unifying Video-conditioned Sound and Speech Generation via Joint Learning" is available, along with the open-source implementation on GitHub.

Comments

Please log in or register to join the discussion