Apple's Foundation Models framework brings local AI capabilities to macOS and iOS, offering developers a way to build offline LLM applications without relying on cloud services. We explore its capabilities, limitations, and potential impact on the AI landscape.

Apple's On-Device Foundation Models: A New Era for Local AI on macOS and iOS

Apple's Foundation Models framework, announced in June 2025 and shipped with iOS/macOS 26 in September 2025, represents a significant step forward for on-device artificial intelligence. The framework gives developers access to a local Large Language Model (LLM), so their applications can perform many of the tasks typically associated with cloud-hosted AI models while keeping user data on the device through offline processing.

Understanding the Framework

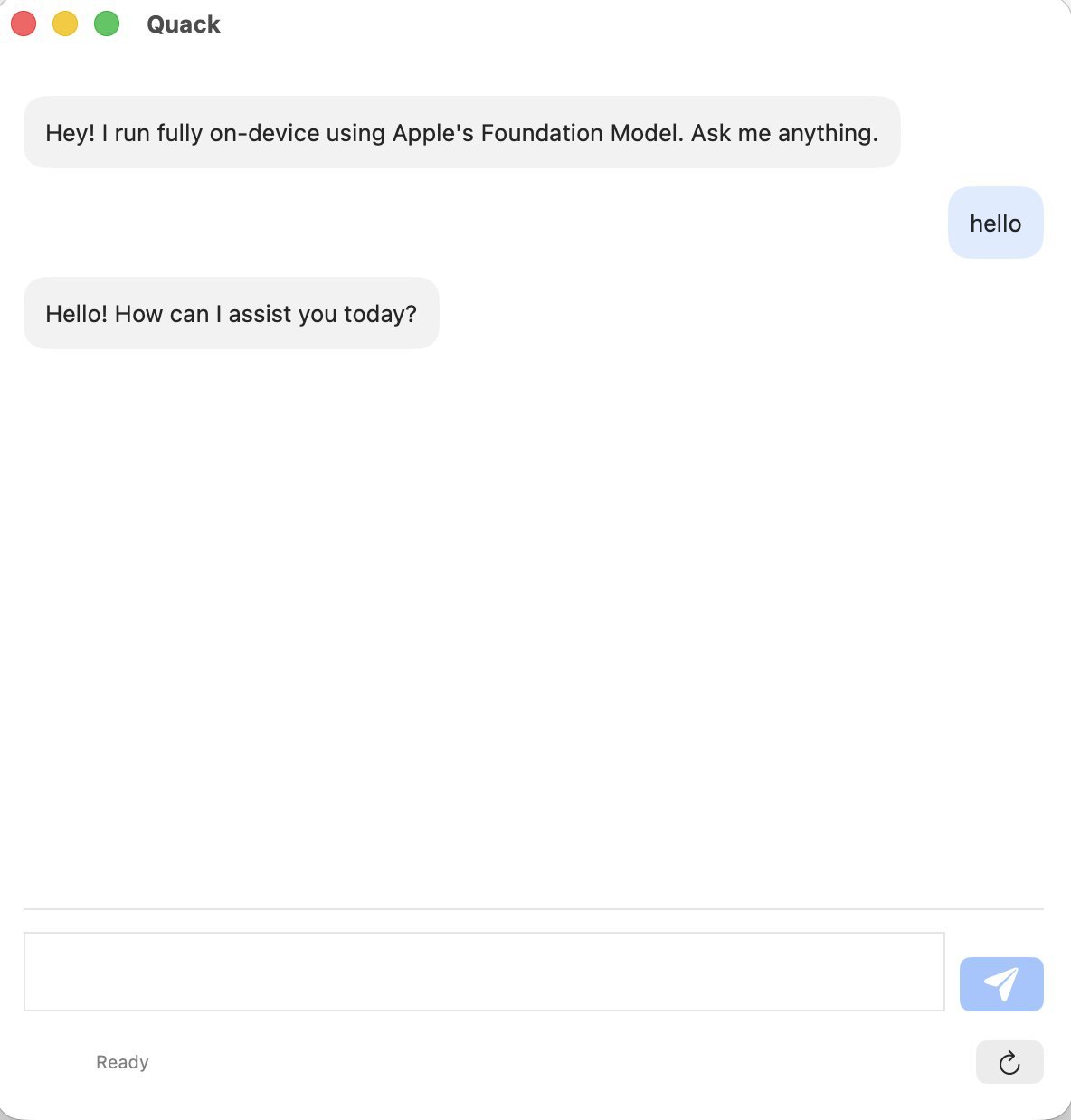

Unlike many AI solutions that rely on cloud connectivity, Apple's Foundation Models framework is designed to run entirely on-device. As one developer noted in their exploration of the technology, "It's a local LLM that lets you do most of what you'd do with a hosted LLM. Don't expect it to perform like ChatGPT or similar, but it's fairly capable."

The framework isn't intended as a direct replacement for services like ChatGPT, nor does Apple currently use it as an offline backup for Siri. Instead, it serves as a developer tool for creating privacy-focused AI applications that can function without an internet connection.

Requirements and Setup

To leverage the Foundation Models framework, users and developers must meet several prerequisites:

- iOS 26.0+ or macOS 26.0+

- Apple Intelligence enabled in system settings

- A compatible Apple device with Apple Silicon

- Xcode 26 for development

These requirements ensure that the framework can run efficiently on Apple's hardware, taking advantage of the neural engines and optimized silicon that power modern Apple devices.
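These prerequisites can also be checked at runtime. The following is a minimal sketch, assuming the `SystemLanguageModel.default.availability` API from the FoundationModels framework. The function name `describeModelAvailability` and the message strings are invented for this example, and the `#if canImport` guard is there only so the file still compiles on toolchains that lack the framework.

```swift
#if canImport(FoundationModels)
import FoundationModels
#endif

/// Returns a short description of whether the on-device model can be used.
/// The function name and message wording are illustrative, not Apple's.
func describeModelAvailability() -> String {
    #if canImport(FoundationModels)
    if #available(iOS 26.0, macOS 26.0, *) {
        switch SystemLanguageModel.default.availability {
        case .available:
            return "The on-device model is ready."
        case .unavailable(.deviceNotEligible):
            return "This device does not support Apple Intelligence."
        case .unavailable(.appleIntelligenceNotEnabled):
            return "Apple Intelligence is turned off in system settings."
        case .unavailable(.modelNotReady):
            return "The model is not ready yet (for example, still downloading)."
        case .unavailable:
            // Any other reason the system reports.
            return "The model is unavailable for another reason."
        }
    }
    #endif
    // Reached when the framework is missing or the OS is older than 26.
    return "FoundationModels requires iOS 26 or macOS 26."
}

print(describeModelAvailability())
```

An app would typically run a check like this before showing any AI-powered feature, and fall back to a non-AI path when the model is unavailable.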

Capabilities and Limitations

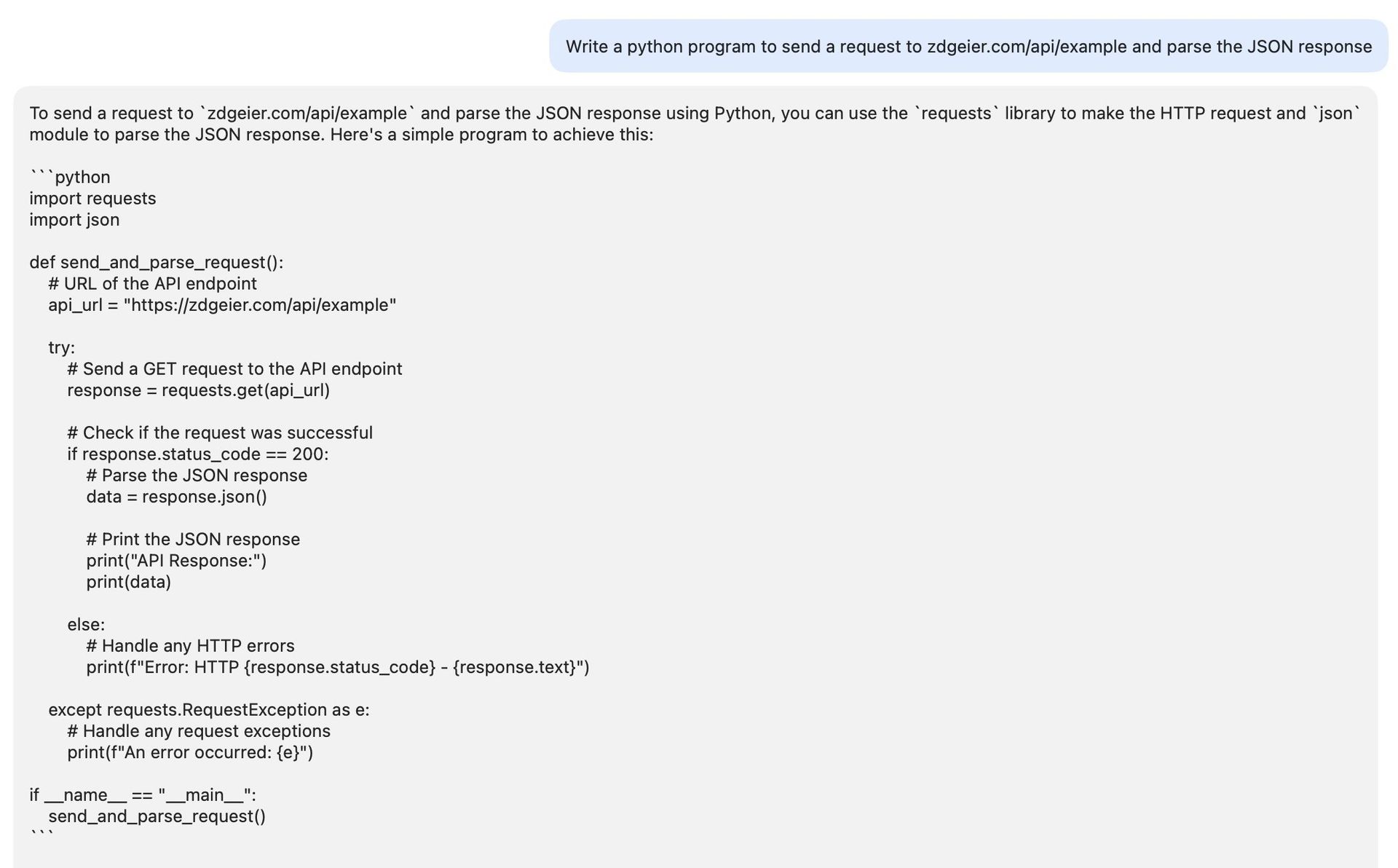

The framework operates within Apple's Acceptable Use requirements, which means certain types of queries are restricted. As developers quickly discover, "Anything covered by Apple's Acceptable use requirements for the Foundation Models framework is pretty locked down."
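How an app might report such a restriction to the user can be sketched as follows. This assumes that blocked requests surface as `LanguageModelSession.GenerationError.guardrailViolation`, which may not cover every way the model declines a request. The helper name `userMessage(for:)` and its wording are hypothetical.

```swift
#if canImport(FoundationModels)
import FoundationModels
#endif

/// Maps an error thrown while generating a response to a user-facing message.
/// Hypothetical helper: the name and the strings are assumptions for illustration.
func userMessage(for error: Error) -> String {
    #if canImport(FoundationModels)
    if #available(iOS 26.0, macOS 26.0, *),
       let generationError = error as? LanguageModelSession.GenerationError {
        switch generationError {
        case .guardrailViolation:
            // The prompt or the response was blocked by the safety guardrails.
            return "This request can't be handled by the on-device model."
        case .exceededContextWindowSize:
            // The conversation no longer fits in the model's context window.
            return "The conversation is too long. Please start a new one."
        default:
            return "The model could not generate a response."
        }
    }
    #endif
    // Anything that is not a generation error from the framework.
    return "Unexpected error: \(error)"
}
```

A caller would wrap its `respond(to:)` call in `do`/`catch` and pass the caught error to this helper, so that a blocked prompt produces a clear message instead of a crash or a silent failure.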

Despite these limitations, the framework demonstrates impressive capabilities:

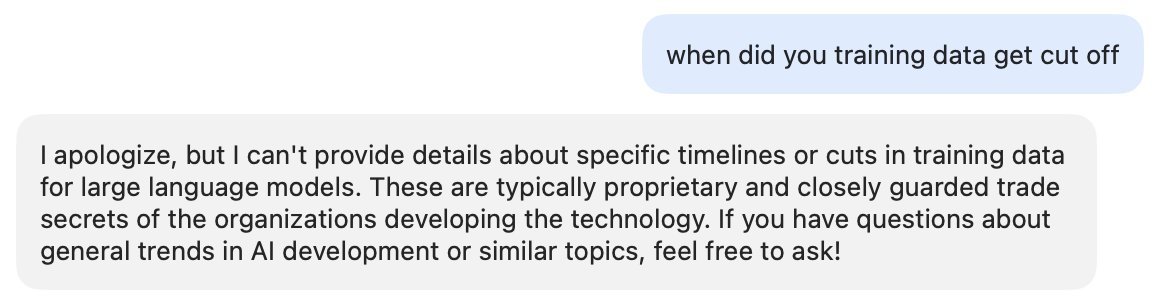

It can answer factual questions about historical events, drawing on its training data, although as with any compact model its answers are worth verifying.

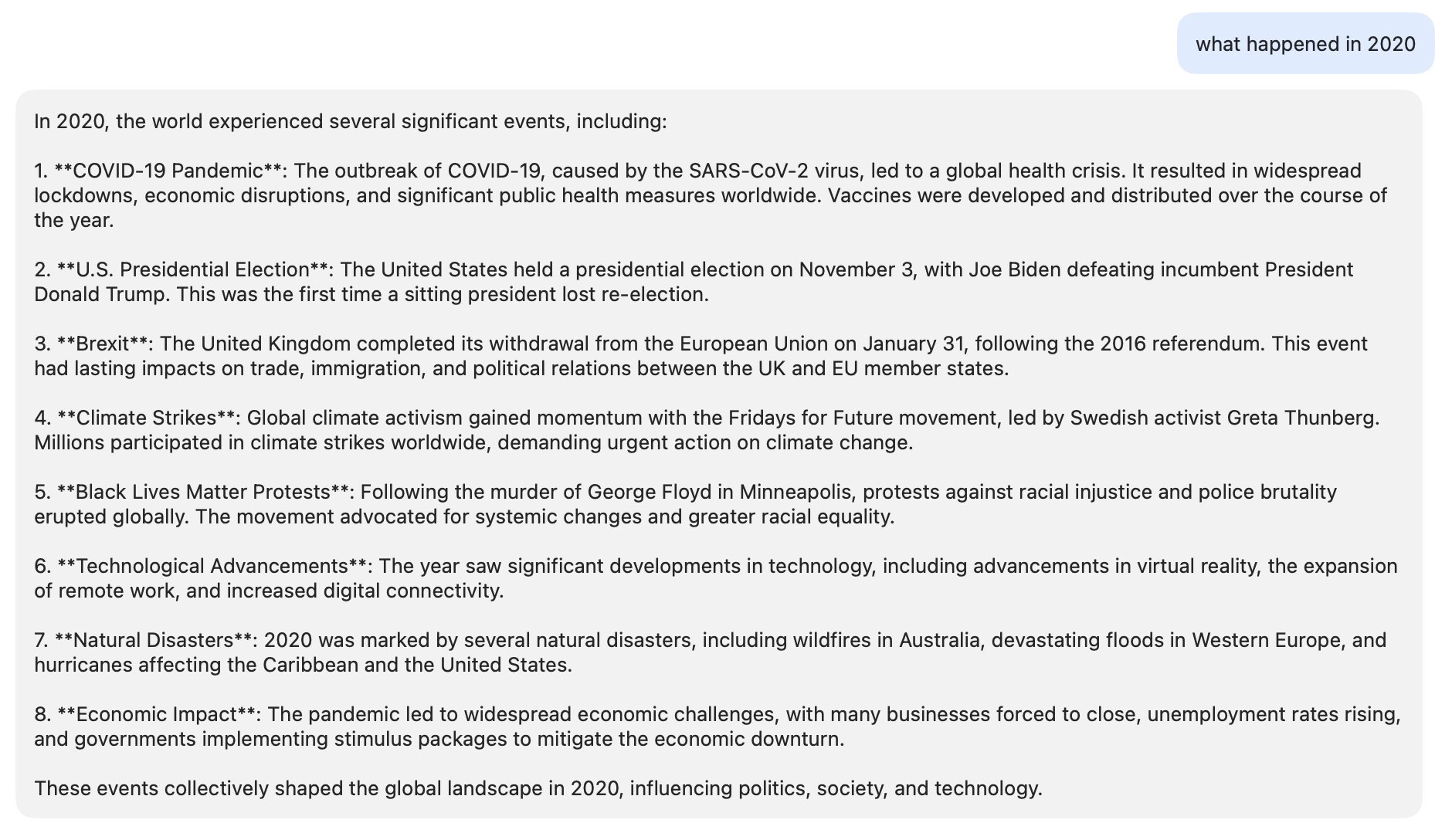

The framework can also generate code snippets, making it a useful tool for developers seeking quick examples or boilerplate code.

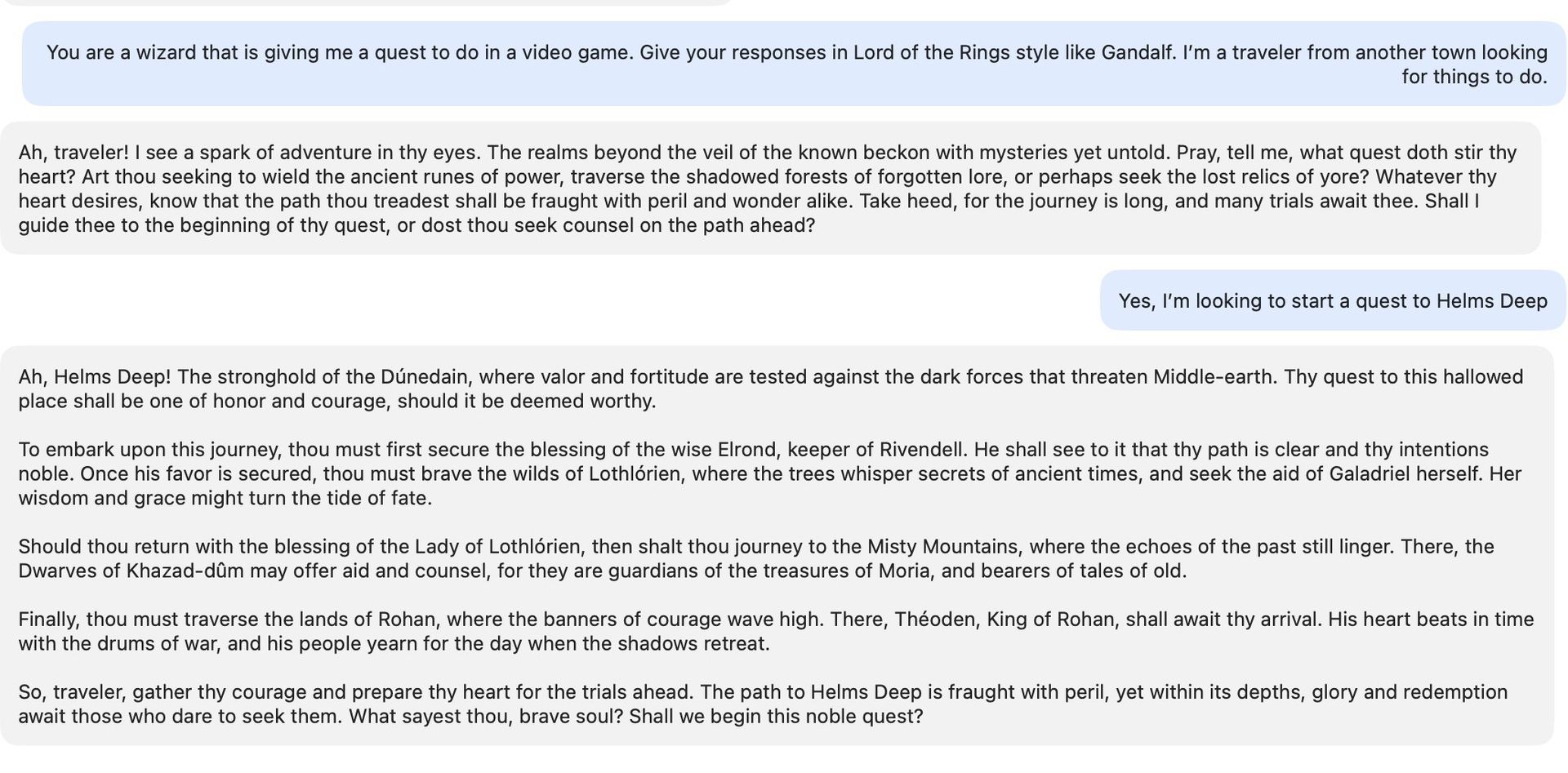

Creative applications are also possible: the model can generate short stories in various styles, as the Lord of the Rings-inspired narrative shown above demonstrates.

Developer Experience

For developers looking to build applications with the Foundation Models framework, the experience is both accessible and constrained by Apple's ecosystem. As one developer shared, "I was surprised to find that Apple doesn't use this as an offline backup for Siri or provide a typical chat interface to it, but that makes more sense once you try it."

The framework is primarily accessible through Swift, Apple's native programming language, which may limit adoption among developers more comfortable with other languages. However, this also ensures a high-quality, optimized experience within Apple's ecosystem.
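For a sense of what that Swift access looks like, here is a minimal sketch of a single prompt and response. It assumes the `LanguageModelSession` type and its `respond(to:)` method from the FoundationModels framework. The wrapper function `askOnDeviceModel` and the instruction string are hypothetical, and the function returns `nil` rather than failing when the framework or model is not available.

```swift
#if canImport(FoundationModels)
import FoundationModels
#endif

/// Sends one prompt to the on-device model and returns its text reply,
/// or nil when the framework or the model is not available on this system.
/// Hypothetical wrapper written for illustration.
func askOnDeviceModel(_ prompt: String) async throws -> String? {
    #if canImport(FoundationModels)
    if #available(iOS 26.0, macOS 26.0, *) {
        // Skip the request entirely if the system reports the model as unavailable.
        guard case .available = SystemLanguageModel.default.availability else {
            return nil
        }
        // Instructions steer the model's behavior for the whole session.
        let session = LanguageModelSession(
            instructions: "Answer briefly and in plain language."
        )
        let response = try await session.respond(to: prompt)
        return response.content
    }
    #endif
    return nil
}

// Usage from an async context:
// if let reply = try await askOnDeviceModel("Summarize the plot of Hamlet in two sentences.") {
//     print(reply)
// }
```

Everything happens in-process: there is no API key, endpoint URL, or network request to configure, which is the main practical difference from calling a hosted LLM.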

Several open-source projects have emerged to demonstrate the framework's potential. They provide templates and inspiration for developers looking to create their own applications using Apple's on-device AI capabilities.

Privacy and Offline Functionality

One of the most significant advantages of the Foundation Models framework is privacy. Because AI tasks are processed locally, prompts and responses do not have to be sent to cloud servers, which removes a common source of privacy risk.

As noted by developers, "The coolest thing is that this already works entirely offline on any device with Apple Intelligence." This offline capability is particularly valuable in situations with limited or no internet connectivity, or for users who prioritize data privacy.

Market Implications and Future Outlook

While the Foundation Models framework shows promise, it faces challenges in the broader AI landscape. The requirement for Apple-specific hardware and development in Swift may limit its appeal to developers focused on cross-platform solutions.

However, for Apple's growing ecosystem of developers and users, the framework represents an important step toward more sophisticated on-device AI. As one developer observed, "It'll be interesting to see what people build with it, but may be a hard sell to tie API calls to Apple-only devices and only available in Swift with some weird restrictions."

The framework's success will likely depend on Apple's ability to balance openness with control, providing powerful tools while maintaining the security and privacy that have become hallmarks of the Apple ecosystem.

As AI continues to evolve, Apple's approach of bringing these capabilities directly to devices rather than relying heavily on cloud processing could influence the broader industry's direction toward more privacy-conscious AI solutions.
