Award-winning ICML 2025 research reveals fundamental limitations of next-token prediction for creative tasks. By evaluating multi-token approaches such as diffusion models and introducing seed-conditioning, the researchers report more diverse and original outputs on open-ended problems, along with less of the memorization that undermines autoregressive models.

For years, next-token prediction has been the engine powering large language models, but its limitations in creative, open-ended tasks have remained a fundamental constraint. Groundbreaking research presented at ICML 2025 exposes these limitations through rigorous experimentation and proposes a paradigm shift toward multi-token approaches that could redefine generative AI's creative capabilities.

The Creativity Bottleneck

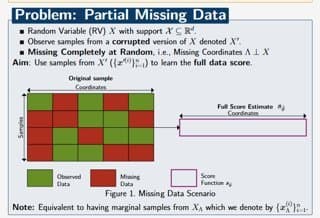

Researchers designed minimal algorithmic tasks that loosely abstract real-world creative processes such as wordplay, analogies, and pattern design: domains that require stochastic planning and leaps of logic. As lead author Vaishnavh Nagarajan explains: "Next-token learning is inherently myopic. It's optimized for local coherence but struggles with tasks requiring global planning or genuine novelty." The study showed that, on these open-ended tasks, autoregressive models tend to reproduce memorized training examples rather than generate novel outputs.
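
To make "memorization versus novelty" concrete, here is a minimal sketch of how such a task might be scored. The toy graph, the sibling-pair task, and the names `is_valid` and `creativity_score` are hypothetical illustrations, not the paper's code; the score simply counts the fraction of generated samples that are coherent, not repeated, and absent from the training data.

```python
from typing import Dict, FrozenSet, Iterable, Set, Tuple

Pair = Tuple[str, str]

# Hypothetical toy knowledge graph: each child node maps to its parent.
PARENT: Dict[str, str] = {"a": "p", "b": "p", "c": "p", "d": "q", "e": "q"}


def is_valid(pair: Pair) -> bool:
    """Coherent output: two distinct nodes that share a parent (siblings)."""
    x, y = pair
    return x != y and x in PARENT and y in PARENT and PARENT[x] == PARENT[y]


def creativity_score(samples: Iterable[Pair], train_set: Set[Pair]) -> float:
    """Fraction of samples that are valid, not repeats, and not memorized."""
    samples = list(samples)
    if not samples:
        return 0.0
    train: Set[FrozenSet[str]] = {frozenset(p) for p in train_set}
    seen: Set[FrozenSet[str]] = set()
    novel = 0
    for pair in samples:
        key = frozenset(pair)  # treat (x, y) and (y, x) as the same pair
        if is_valid(pair) and key not in seen and key not in train:
            novel += 1
        seen.add(key)
    return novel / len(samples)


train = {("a", "b")}
samples = [("a", "b"), ("a", "c"), ("c", "a"), ("d", "e"), ("a", "d")]
print(creativity_score(samples, train))  # 0.4: only ("a", "c") and ("d", "e") count
```

Under these assumptions, a model that only replays its training pairs scores 0, however fluent its output looks.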

Diffusion Models: A Multi-Token Alternative

The team found that multi-token approaches such as diffusion models, which generate and refine many tokens in parallel, produced more diverse and original outputs than models trained with next-token prediction. Unlike next-token models, which predict one token at a time from left to right, diffusion models condition on the whole partially generated sequence at every step. This architectural difference proved critical for tasks requiring:

- Discovery of non-obvious connections in knowledge graphs

- Construction of novel patterns (e.g., protein design or math problems)

- Long-horizon planning without predefined templates
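
The contrast between the two generation styles can be sketched in a few lines. This is a toy illustration, not either model family's real implementation: `toy_next_token` and `toy_denoise` are hypothetical stand-ins for trained networks, and the confidence-based unmasking schedule is one common choice for masked-diffusion decoding, assumed here for illustration.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical toy target; a real model would learn this from data.
TARGET = ["c", "a", "t", "s"]


def autoregressive_decode(next_token: Callable[[List[str]], str], length: int) -> List[str]:
    """Left to right: each token is chosen given only the prefix so far."""
    out: List[str] = []
    for _ in range(length):
        out.append(next_token(out))
    return out


def diffusion_decode(
    denoise: Callable[[List[Optional[str]]], List[Tuple[str, float]]],
    length: int,
    steps: int,
) -> List[Optional[str]]:
    """Masked-diffusion style: start fully masked (None); at each step the model
    proposes a (token, confidence) pair for every position given the whole
    partial sequence, and the most confident masked positions are committed."""
    seq: List[Optional[str]] = [None] * length
    per_step = -(-length // steps)  # ceiling division so all positions get filled
    for _ in range(steps):
        masked = [i for i, tok in enumerate(seq) if tok is None]
        if not masked:
            break
        proposal = denoise(seq)
        masked.sort(key=lambda i: proposal[i][1], reverse=True)
        for i in masked[:per_step]:
            seq[i] = proposal[i][0]
    return seq


def toy_next_token(prefix: List[str]) -> str:
    # Hypothetical stand-in for a trained next-token model.
    return TARGET[len(prefix)]


def toy_denoise(seq: List[Optional[str]]) -> List[Tuple[str, float]]:
    # Hypothetical stand-in for a trained denoiser: proposes every position at
    # once and is (arbitrarily) most confident about later positions.
    return [(tok, float(i)) for i, tok in enumerate(TARGET)]


print(autoregressive_decode(toy_next_token, 4))  # ['c', 'a', 't', 's']
print(diffusion_decode(toy_denoise, 4, steps=2))  # ['c', 'a', 't', 's']
```

Because the toy denoiser is most confident about later positions, the diffusion loop fixes the end of the sequence before the beginning, something a strictly left-to-right decoder cannot do.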

The Seed-Conditioning Breakthrough

Equally important was the finding that seed-conditioning, which injects randomness at the input rather than through sampling at the output, surpassed conventional temperature sampling in balancing randomness and coherence. "Temperature sampling often degrades output quality when increasing randomness," notes co-author Chen Wu. "Seed-conditioning provides the 'controlled chaos' needed for creativity without sacrificing structural integrity."
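
The two ways of adding randomness can be contrasted in a short sketch. Temperature sampling rescales the output logits and samples from the resulting distribution; seed-conditioning, as sketched here, puts the randomness in the input and then decodes greedily. Modeling the seed as a string combined with the prompt, and the hash-based `toy_logits` function standing in for a trained model, are assumptions for illustration only.

```python
import hashlib
import math
import random
from typing import List, Sequence

VOCAB = ["red", "blue", "green", "gold"]


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Divide logits by the temperature, then apply a numerically stable softmax."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exponentiating to avoid overflow
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def temperature_sample(logits: Sequence[float], temperature: float, rng: random.Random) -> int:
    """Output-side randomness: sample a token index from the tempered distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def toy_logits(seed: str, prompt: str) -> List[float]:
    """Hypothetical stand-in for a model trained with random seed strings in its
    input: hashing (seed, prompt) makes the logits depend on the seed."""
    digest = hashlib.sha256(f"{seed}|{prompt}".encode()).digest()
    return [b / 255.0 for b in digest[: len(VOCAB)]]


def seed_conditioned_greedy(prompt: str, seed: str) -> str:
    """Input-side randomness: the seed varies, but decoding is greedy (argmax)."""
    logits = toy_logits(seed, prompt)
    return VOCAB[logits.index(max(logits))]


logits = [2.0, 1.0, 0.5, 0.1]
print(softmax_with_temperature(logits, 0.5))  # sharper: mass concentrates on index 0
print(softmax_with_temperature(logits, 2.0))  # flatter: closer to uniform
print(VOCAB[temperature_sample(logits, 1.5, random.Random(0))])
print([seed_conditioned_greedy("name a colour", s) for s in ["s1", "s2", "s3"]])
```

In this sketch each seed always yields the same greedy answer, while different seeds can yield different answers, so diversity comes from the input rather than from flattening the output distribution.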

Implications for AI Development

The research offers concrete evidence that moving beyond next-token paradigms could:

- Enable AI systems capable of genuine scientific discovery

- Reduce memorization biases in generative models

- Unlock new applications in design, research, and creative industries

Code for the algorithmic creativity tasks is publicly available, providing a standardized testbed for future innovation. As generative AI evolves, this work signals a crucial pivot point—where systems transition from pattern recyclers to true collaborators in human creativity. The era of myopic language models may soon give way to architectures capable of seeing the whole board before making their move.
