AI's potential in scientific research is bottlenecked by the challenge of interfacing with the physical world. This article explores how industrial control systems, like PLCs and function block diagrams, provide a scalable, verifiable solution for automating experimentation in chemistry and biology. Discover a practical roadmap to transform R&D productivity without waiting for general robotics.

For decades, scientific breakthroughs in fields like electrochemistry, synthetic biology, and precision medicine have been constrained not by funding or ambition, but by the painstakingly slow pace of physical experimentation. While AI has revolutionized virtual domains—writing code, generating text, and analyzing data—its impact on the tangible world of lab benches and reactors remains limited. But what if we could use today's AI systems to autonomously design, run, and interpret experiments, accelerating discoveries in climate science, medicine, and materials? The answer lies not in futuristic robots, but in repurposing industrial control systems to create high-signal learning environments for AI.

The R&D Loop: Where AI Stumbles on Physical Reality

Most experimental research follows the same four-step loop:

- Review data and generate a new experiment idea

- Set up the experiment

- Run the experiment and collect data

- Process and analyze the data

Current AI systems, powered by LLMs and tools like OpenAI's Deep Research, excel at the first and last steps: they can digest literature, propose hypotheses, and interpret results. The bottleneck lies in the middle two steps: physically configuring instruments, monitoring reactions, and handling unpredictable real-world variables. As the author, an R&D scientist working in electrochemistry, notes, experiments in the natural sciences often yield messy, inconclusive data after long lead times, creating a "low signal-to-noise" environment for AI training.

Why Cloud Labs Aren't the Silver Bullet

Cloud labs, which allow remote, programmatic control of experiments, seem like an obvious solution. They enable high-throughput work in standardized domains like genomics. Yet, for frontier research, they fall short.

- Innovation unfriendliness: Cloud labs prioritize cost-effective, standardized equipment. Novel measurements—like characterizing a custom catalyst—require bespoke setups they can't support.

- Supply chain complexity: Cutting-edge research often relies on fragmented components at a low technology readiness level (TRL), such as custom bioreactor parts, which cloud labs can't integrate.

- IP barriers: Corporate labs hesitate to share proprietary "research taste" knowledge. As seen with Ginkgo Bioworks’ failed IP model, outsourcing stifles collaboration.

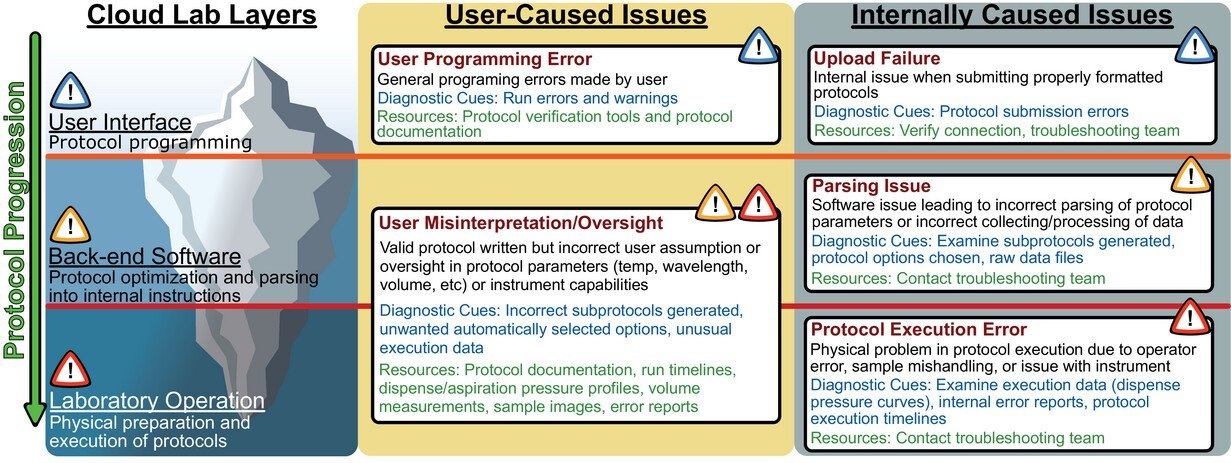

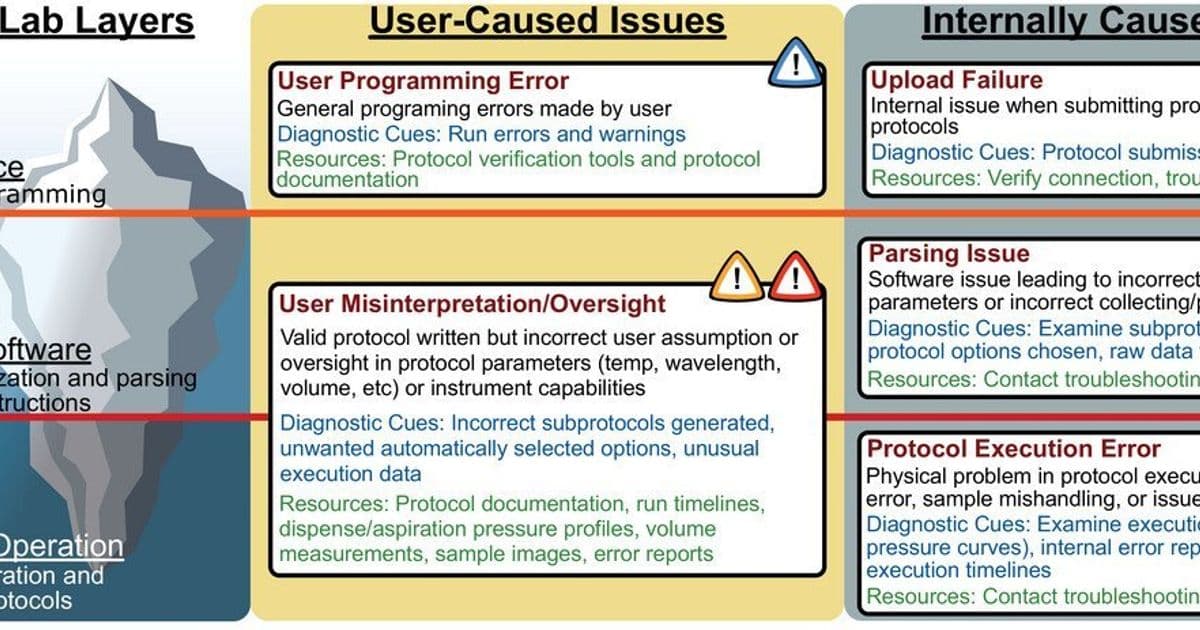

As highlighted in Carnegie Mellon's "Scientific Discovery at the Press of a Button", these limitations restrict cloud labs to niche applications. For AI to thrive, the interface must reside within labs, leveraging existing processes.

Industrial Control Systems: The Unlikely Hero

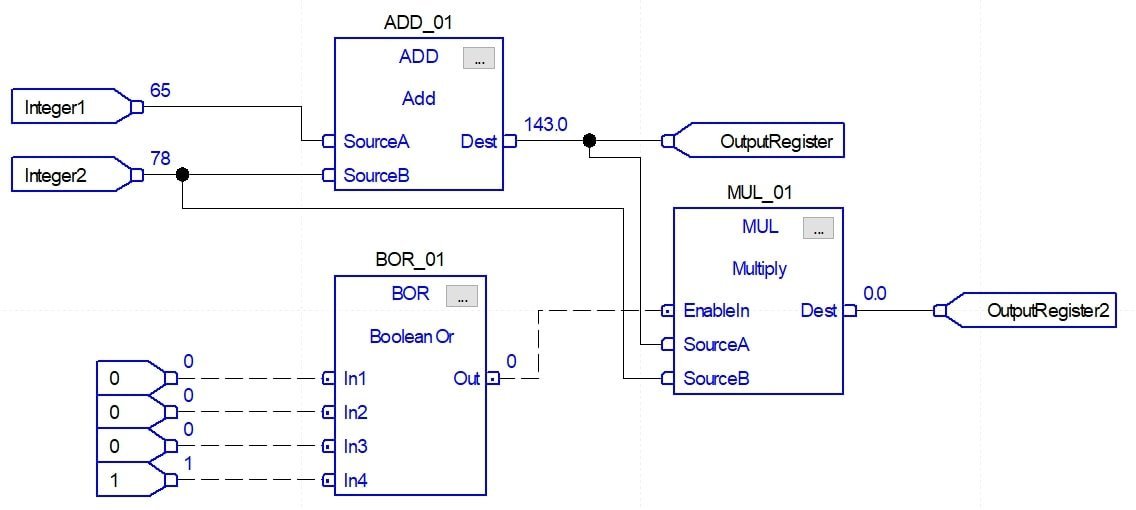

Enter industrial control systems (ICS), the unglamorous backbone of manufacturing and power plants. Comprising field devices (sensors and actuators), I/O interfaces, and programmable logic controllers (PLCs), they orchestrate physical processes with reliability honed in high-stakes environments. PLCs execute control logic defined via function block diagrams (FBDs), visual workflows that map relationships between devices (e.g., "close valve if temperature exceeds threshold").
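To make the event-driven model concrete, here is a minimal Python sketch of the example rule as a function block, loosely modeled on an IEC 61499 basic function block: an input event (conventionally named REQ) triggers the algorithm, and an output event (CNF) tells downstream blocks that the outputs were updated. The class name, the threshold, and the callback wiring are hypothetical illustrations; real blocks are authored in an IEC 61499 tool such as Eclipse 4diac, not in Python.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class OverTempValveBlock:
    """Hypothetical event-driven function block (sketch, not a real IEC 61499 API).

    Data inputs are only read when the REQ event fires; after the algorithm
    runs, the CNF event notifies every connected listener.
    """
    threshold_c: float                  # data input: temperature limit in deg C
    valve_open: bool = True             # data output: current valve command
    listeners: List[Callable[["OverTempValveBlock"], None]] = field(default_factory=list)

    def req(self, temperature_c: float) -> None:
        """Input event REQ: sample the temperature and run the algorithm."""
        # Algorithm: close the valve whenever the temperature exceeds the threshold.
        self.valve_open = temperature_c <= self.threshold_c
        self._cnf()

    def _cnf(self) -> None:
        """Output event CNF: signal downstream blocks that outputs changed."""
        for listener in self.listeners:
            listener(self)


# Usage: wire the CNF event to a logger and feed a few temperature readings.
log: List[bool] = []
block = OverTempValveBlock(threshold_c=80.0)
block.listeners.append(lambda b: log.append(b.valve_open))
for reading in [25.0, 79.9, 80.5, 70.0]:
    block.req(reading)
print(log)  # [True, True, False, True]
```

Because nothing happens between events, the block's behavior is fully described by its event sequence, which is what makes this style of logic amenable to automated checking.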

Why ICS Fits the Bill:

- Cost and scalability: ICS hardware was once prohibitively expensive, but open standards like IEC 61499 have slashed costs. Today, a full ICS setup costs under $1,000, putting it within reach of individual labs.

- Safety and verifiability: FBDs are deterministic and event-driven, and their explicit state-transition logic allows automated formal verification. AI-generated setups can be checked for safety properties (e.g., no overheating risk) in seconds, avoiding the human-review bottleneck.

- Data handling: ICS historians stream tagged, high-fidelity data (e.g., temperature readings) with automatic outlier pruning, creating clean datasets for AI training.

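- Domain flexibility: ICS is already used in research settings (e.g., EPICS, the open-source control framework originally developed at Los Alamos and Argonne national laboratories to run particle accelerators, and Fraunhofer’s bioreactors), and it scales from lab benches to industrial deployment.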
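The verification claim can be illustrated with a toy explicit-state check: enumerate every state a controller plus a simple plant model can reach, and report a counterexample if any state breaks a safety limit. This is a minimal sketch under stated assumptions; the integer temperature steps, the nondeterministic plant model, and all function names are hypothetical, and production tools for IEC 61131-3 or IEC 61499 programs are far more capable.

```python
from collections import deque
from typing import Dict, Iterable, List, Optional, Tuple

State = Tuple[int, bool]  # (temperature in integer steps, heater on?)


def controller(temp: int, setpoint: int) -> bool:
    """Candidate (e.g. AI-generated) logic: heater on while below the setpoint."""
    return temp < setpoint


def plant_successors(state: State, setpoint: int) -> Iterable[State]:
    """Assumed nondeterministic plant: heating may overshoot by one extra step."""
    temp, heater = state
    if heater:
        next_temps = [temp + 1, temp + 2]
    else:
        next_temps = [temp, max(temp - 1, 0)]  # hold or passively cool
    for t in next_temps:
        yield (t, controller(t, setpoint))


def check_safety(setpoint: int, max_safe: int, start_temp: int = 0) -> Optional[List[State]]:
    """Breadth-first search over all reachable states.

    Returns None if every reachable state satisfies temp <= max_safe,
    otherwise a shortest trace from the start state to a violation.
    """
    start = (start_temp, controller(start_temp, setpoint))
    parent: Dict[State, Optional[State]] = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state[0] > max_safe:
            trace: List[State] = []
            s: Optional[State] = state
            while s is not None:  # walk parents back to the start state
                trace.append(s)
                s = parent[s]
            return trace[::-1]
        for nxt in plant_successors(state, setpoint):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None


print(check_safety(setpoint=10, max_safe=12))  # None: heating tops out at 11
trace = check_safety(setpoint=10, max_safe=10)
print(trace[-1])  # (11, False): overshoot from 9 breaks the limit
```

The second call shows the point of automating this step: the rule "heat while below the setpoint" looks safe, but the exhaustive search finds the overshoot path a human reviewer might miss.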
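Historian products differ in how they store and filter data, so the following is only a sketch of the general idea: tagged, timestamped samples passed through a robust outlier filter. The `Sample` record, the tag naming, and the choice of a median-absolute-deviation (modified z-score) filter are assumptions swapped in for illustration, not any vendor's actual pruning method.

```python
from dataclasses import dataclass
from statistics import median
from typing import List


@dataclass(frozen=True)
class Sample:
    tag: str          # hypothetical tag name, e.g. "reactor1.temp_c"
    timestamp: float  # seconds since start of run
    value: float


def prune_outliers(samples: List[Sample], k: float = 3.5) -> List[Sample]:
    """Drop samples whose modified z-score exceeds k.

    Uses the median absolute deviation (MAD), which, unlike the standard
    deviation, is not dragged upward by the outliers it is meant to catch.
    Assumes all samples belong to a single tag.
    """
    values = [s.value for s in samples]
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return list(samples)  # no spread to judge against; keep everything
    # 0.6745 rescales MAD so the score is comparable to a standard z-score
    return [s for s in samples if abs(0.6745 * (s.value - med) / mad) <= k]


readings = [Sample("reactor1.temp_c", float(t), v)
            for t, v in enumerate([25.0, 25.1, 24.9, 25.2, 99.0, 25.0])]
clean = prune_outliers(readings)
print([s.value for s in clean])  # [25.0, 25.1, 24.9, 25.2, 25.0]
```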

A Practical Roadmap: AI-Assisted Experimentation Today

Not all science is equally automatable. Domains like chemistry and biology, where experiments involve configurable reactors or bioreactors, are low-hanging fruit. Here, AI can reduce setup time for tasks like adjusting pump rates or temperature setpoints, enabling mass parallelization. Human intervention is still needed for physical prep (e.g., inoculating broth), but AI handles the rest.

Imagine a workflow:

- Idea generation: Scientists upload reports; an LLM proposes parameter tweaks.

- Setup: AI configures FBDs for test stations, verified automatically for safety.

- Execution: PLCs run experiments, streaming data to historians and alerting humans to anomalies.

- Analysis: AI interprets results, suggesting next steps.
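The control flow of this workflow can be sketched as a closed loop in which nothing reaches hardware without passing the safety gate. Every stage here is a hypothetical stand-in: random search replaces the LLM, a bounds check replaces formal verification, and a made-up response surface replaces a PLC-driven test station and its historian. The parameter names and safety limits are assumptions for illustration only.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Experiment:
    pump_rate_ml_min: float
    temp_setpoint_c: float
    result: Optional[float] = None


# Hypothetical safety envelope the verifier enforces before any PLC sees a config.
SAFE_LIMITS = {"pump_rate_ml_min": (0.1, 50.0), "temp_setpoint_c": (4.0, 90.0)}


def propose(history: List[Experiment], rng: random.Random) -> Experiment:
    """Stand-in for the LLM: perturb the best verified run so far."""
    if not history:
        return Experiment(pump_rate_ml_min=10.0, temp_setpoint_c=37.0)
    best = max(history, key=lambda e: e.result)
    return Experiment(
        pump_rate_ml_min=best.pump_rate_ml_min + rng.uniform(-5, 5),
        temp_setpoint_c=best.temp_setpoint_c + rng.uniform(-10, 10),
    )


def verify(exp: Experiment) -> bool:
    """Stand-in for automated verification: reject out-of-envelope setpoints."""
    return all(lo <= getattr(exp, name) <= hi for name, (lo, hi) in SAFE_LIMITS.items())


def run_on_station(exp: Experiment) -> float:
    """Stand-in for PLC execution plus historian readout (made-up response)."""
    return -((exp.pump_rate_ml_min - 20.0) ** 2) - (exp.temp_setpoint_c - 60.0) ** 2


def campaign(n_runs: int, seed: int = 0) -> List[Experiment]:
    rng = random.Random(seed)
    history: List[Experiment] = []
    while len(history) < n_runs:
        exp = propose(history, rng)        # idea generation
        if not verify(exp):                # setup, gated by the safety check
            continue                       # unsafe proposals never reach hardware
        exp.result = run_on_station(exp)   # execution
        history.append(exp)                # results feed the next proposal
    return history


runs = campaign(n_runs=20, seed=42)
best = max(runs, key=lambda e: e.result)
print(f"best: pump={best.pump_rate_ml_min:.1f} mL/min, T={best.temp_setpoint_c:.1f} °C")
```

Placing the check between proposal and execution, rather than reviewing results afterward, is what lets the loop run unattended: a bad idea costs a rejected proposal instead of a damaged reactor.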


This isn’t theoretical. Reinforcement learning on historian data lets AI "inhabit streams of experience," building domain intuition. As models ingest proprietary, unpublished research, their "taste" improves—potentially surpassing human intuition in constrained domains.

The Path Forward: Scaling Beyond the Lab

Expanding to fields like biomedicine (e.g., microscopy) requires overcoming robotics hurdles. Yet, ICS’s flexibility allows adaptation: any verifiable interface for experimental descriptions could work. The key is starting small—automating repetitive tasks in experiment-bottlenecked domains—then iterating. Crucially, integrating ICS with AI democratizes R&D, letting smaller labs compete with giants through scalable, low-cost experimentation.

As AI and control systems converge, we’re not just accelerating science; we’re redefining how discoveries are made. The trillion-dollar dividends in climate and health tech await those who bridge the virtual-physical gap—one function block at a time.
