Labour MP Mark Sewards has launched the UK's first AI politician—a voice-cloned chatbot that answers constituent queries while adhering to strict guardrails. Our technical deep dive reveals its capabilities, limitations, and the bizarre moments when it generated potato metaphors and Tinder bios, raising critical questions about AI's role in democracy.

The Dawn of AI Politics: Testing Britain's First Virtual MP

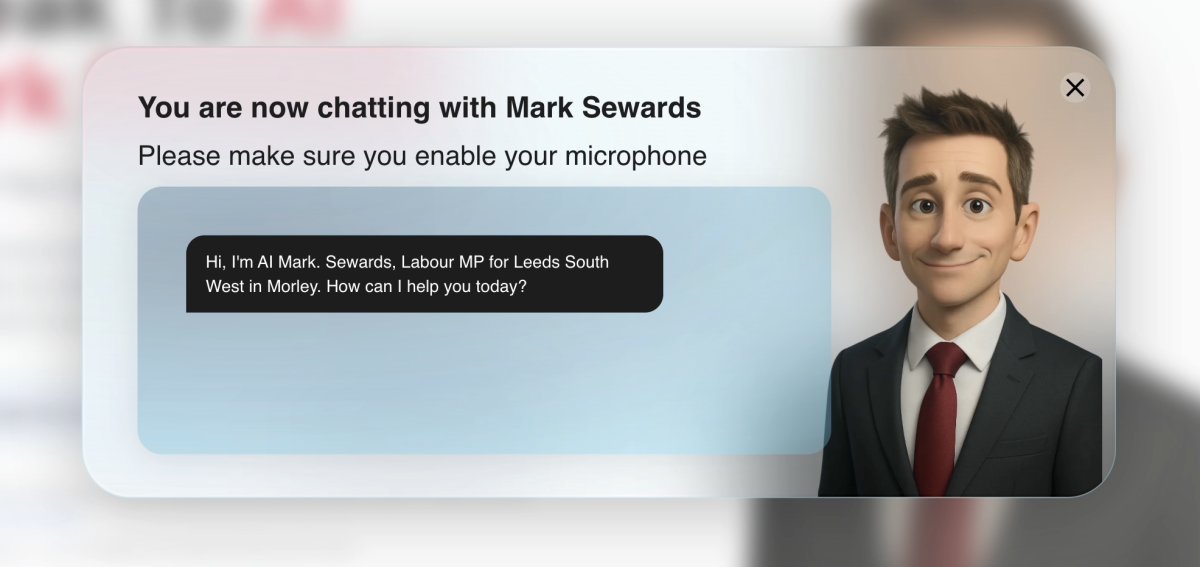

In a landmark experiment at the intersection of artificial intelligence and democracy, Labour MP Mark Sewards has deployed a publicly accessible AI clone of himself. Developed in partnership with a constituent's startup, the chatbot replicates Sewards' Yorkshire accent through real-time voice synthesis and responds to political inquiries—with some unexpectedly peculiar detours.

The AI MP interface represents a novel approach to constituent engagement (Source: PoliticsHome/Neural Voice)

Technical Architecture and Guardrails

The system operates under strict constraints:

- Voice-First Interaction: Users must speak questions, with recordings sent to Sewards' office

- Narrow Domain Training: Primarily answers questions about Labour policy and Sewards' background

- Content Boundaries: Blocks requests for legislative drafting, personal opinions on colleagues, or complex casework

- Human Oversight: All conversations are reviewed by Sewards' team

"I imagine this being a much more useful voicemail service," Sewards told PoliticsHome. "It will never address complex or nuanced cases, which is why it still takes a message."
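The constraints listed above can be pictured as a simple routing step. The sketch below is illustrative only: the article does not describe how the chatbot is implemented, so the keyword lists, the `route` function, and the `ReviewLog` class are all hypothetical, and a production system would more likely use a trained classifier than substring matching.

```python
from dataclasses import dataclass, field

# Hypothetical trigger phrases for the three blocked categories named above.
BLOCKED_TOPICS = {
    "legislative drafting": ("draft a bill", "write an amendment", "draft legislation"),
    "opinions on colleagues": ("what do you think of", "your opinion of"),
    "complex casework": ("my visa", "my benefits claim", "my housing case"),
}


@dataclass
class Reply:
    action: str  # "answer" or "take_message"
    reason: str  # matched blocked topic, or "in scope"


@dataclass
class ReviewLog:
    """Stands in for the human-oversight step: every exchange is recorded."""
    entries: list = field(default_factory=list)

    def record(self, question: str, reply: Reply) -> None:
        self.entries.append((question, reply))


def route(question: str, log: ReviewLog) -> Reply:
    """Answer in-scope questions; fall back to taking a message otherwise."""
    text = question.lower()
    reply = Reply("answer", "in scope")
    for topic, phrases in BLOCKED_TOPICS.items():
        if any(phrase in text for phrase in phrases):
            reply = Reply("take_message", topic)
            break
    log.record(question, reply)  # logged whether answered or not
    return reply
```

The "take a message" fallback mirrors Sewards' description of the tool as a more useful voicemail service: anything the bot should not handle is passed to a human rather than answered.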

Capabilities vs. Quirks: A Technical Stress Test

During an hour-long interrogation, the AI produced both the expected political answers and several off-script responses:

Expected Political Functions

- Explained Labour's stance on Gaza and railway nationalization

- Described Tony Blair's Iraq war impact on party dynamics

- Provided constituency service information

Unanticipated Outputs

- Vegetable Personification: "I'd be a potato—sturdy and dependable"

- Biscuit Party Analysis: Conservatives as Bourbons ("traditional"), Lib Dems as Jammie Dodgers ("sweet")

- Relationship Counseling: Advice for someone who suspects their partner is a spy

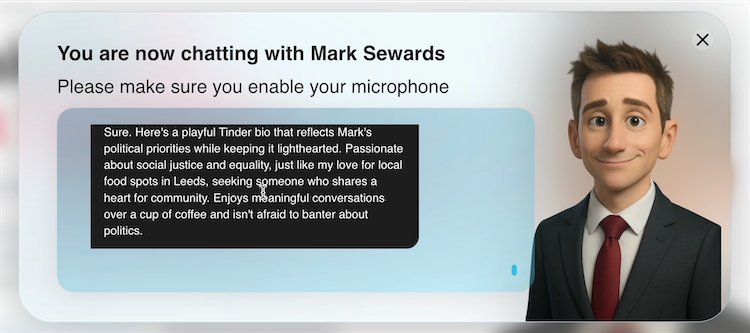

- Tinder Bio Generation: "Passionate about social justice... Bonus points if you suggest the best pint"

The AI-generated dating profile raised eyebrows given Sewards' marital status (Source: PoliticsHome/Neural Voice)

Technical Limitations and Ethical Questions

The experiment revealed critical considerations for political AI systems:

- Overalignment Risks: Strict safety protocols prevent nuanced policy discussion

- Unintended Behavior: Guardrails failed to block irrelevant personal analogies

- Voice Cloning Ethics: Authentic vocal replication blurs human/AI boundaries

- Transparency Gaps: Users might misjudge what the AI can and cannot do

The Developer's Dilemma: Innovation vs. Control

Following the bizarre outputs, Sewards committed to tightening the model's constraints—a real-world example of the challenge in balancing AI accessibility with controlled responses. The incident highlights why developers working on civic AI tools must:

- Implement layered content moderation

- Establish clear disclosure protocols

- Design failure modes for unknown queries

- Maintain human-in-the-loop oversight
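As a rough illustration of how these four practices might fit together, here is a minimal sketch of a layered pipeline. It is not the system Sewards' team built: the topic allowlist, the off-topic markers, the disclosure text, and every function name are assumptions made for the example, and the `generate` callable stands in for whatever model produces the draft answer.

```python
from typing import Callable, List, Optional

# Hypothetical disclosure and fallback wording.
DISCLOSURE = "You are talking to an AI assistant, not the MP."
FALLBACK = "I can't help with that, but I can take a message for the office."

# Hypothetical allowlist and output markers; crude substring checks for brevity.
ALLOWED_TOPICS = ("policy", "constituency", "surgery", "labour")
OFF_TOPIC_MARKERS = ("tinder", "dating profile", "if i were a vegetable")


def input_layer(question: str) -> Optional[str]:
    """Layer 1: fail closed when the question matches no allowed topic."""
    if not any(topic in question.lower() for topic in ALLOWED_TOPICS):
        return "unknown topic"
    return None


def output_layer(draft: str) -> Optional[str]:
    """Layer 2: catch off-topic drafts that slipped past the input check."""
    if any(marker in draft.lower() for marker in OFF_TOPIC_MARKERS):
        return "off-topic output"
    return None


def respond(question: str, generate: Callable[[str], str], review_queue: List[dict]) -> str:
    """Run both layers, queue the exchange for human review, add disclosure."""
    draft = None
    reason = input_layer(question)
    if reason is None:
        draft = generate(question)
        reason = output_layer(draft)
    final = FALLBACK if reason else draft
    # Human-in-the-loop: staff see the question, the raw draft, and any flag.
    review_queue.append({"question": question, "draft": draft, "flag": reason})
    return f"{DISCLOSURE} {final}"
```

Checking the model's output as well as the user's input matters here because the odd responses in this test were triggered by prompts that an input filter alone might have judged harmless.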

As political entities globally experiment with generative AI, this case study demonstrates that even tightly bounded systems can produce unpredictable results. The path forward requires not just better algorithms, but frameworks ensuring these tools enhance—rather than undermine—democratic engagement.
