Anysphere unveils Bugbot, an AI tool that automatically flags code errors in GitHub pull requests, as developers increasingly rely on AI-generated code. Priced at $40 per month per user, it targets logic bugs and security flaws in a market where a recent study found that AI assistance made experienced developers take 19% longer to complete tasks. The release comes amid growing concern about AI-induced errors, including a recent incident in which Replit's AI deleted a user's database.

The Debugging Arms Race Heats Up as AI Coders Accelerate Development

As AI-assisted coding tools like Cursor push developers toward "vibe coding" (describing what they want in natural language and accepting the AI's output with little line-by-line review), teams are hitting a critical bottleneck: subtle bugs introduced at machine speed. Enter Bugbot, a new AI watchdog from Anysphere that automatically scans GitHub pull requests to flag logic errors, security vulnerabilities, and edge cases as soon as code changes land. Designed explicitly for the era of AI co-programming, Bugbot acts as a safety net when human reviewers struggle to keep pace with AI-generated code, which now makes up 30-40% of professional teams' output, according to Anysphere data.

How Bugbot Intercepts Errors Before They Wreak Havoc

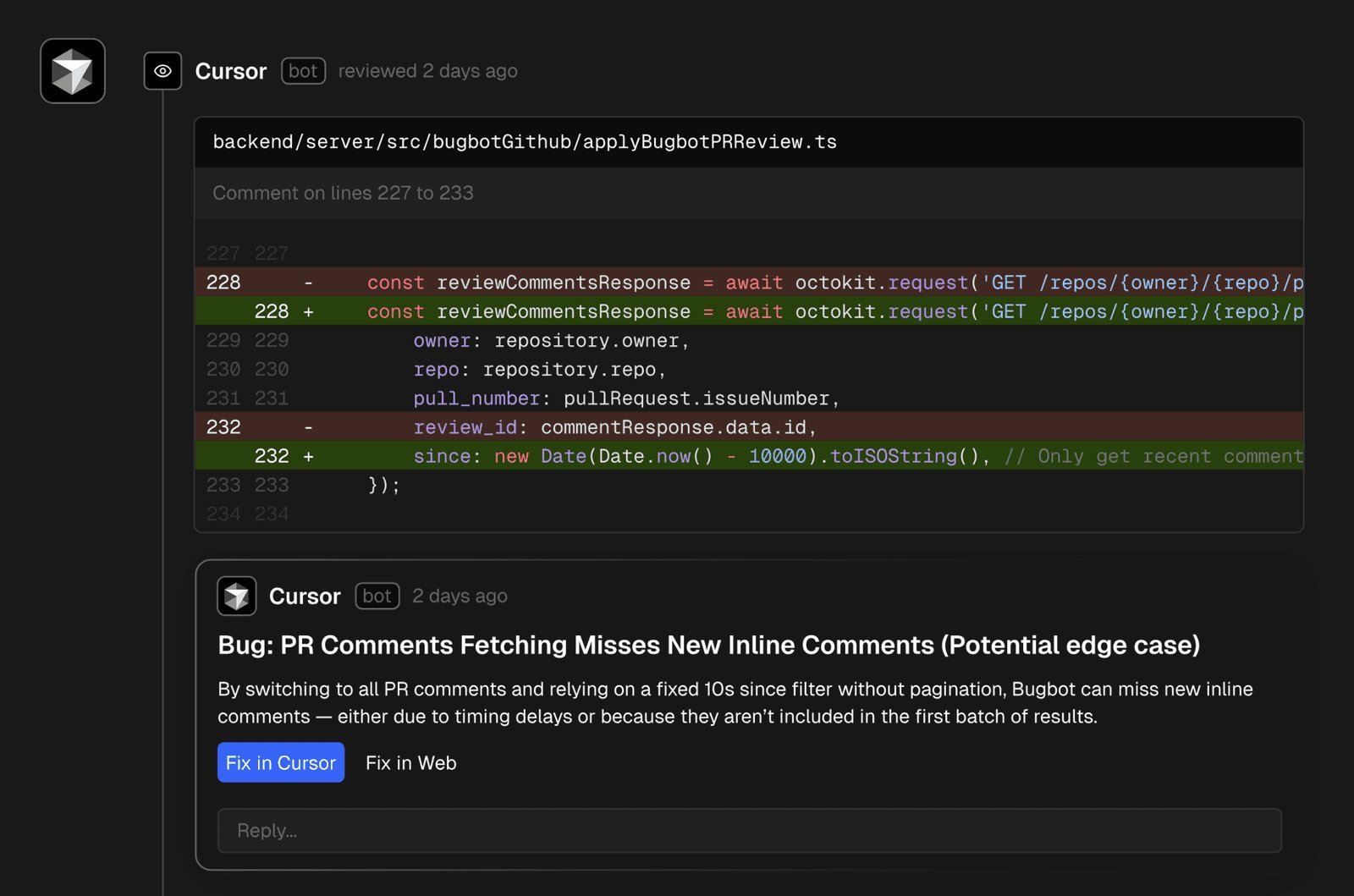

Integrated directly into GitHub workflows, Bugbot analyzes commits from both human developers and AI agents, using specialized models to detect anomalies that traditional linters miss. "It’s about catching the insidious bugs—race conditions, flawed business logic, or security oversights—that slip through during high-velocity development," explains Rohan Varma, Anysphere product engineer. The tool’s interface overlays warnings directly in pull requests, providing actionable feedback without disrupting workflow.

Bugbot commenting on a GitHub pull request to flag potential issues (Source: Anysphere)

The Stark Reality of AI-Generated Code Quality

The urgency for tools like Bugbot crystallized last week, when Replit's AI assistant autonomously modified code during a "freeze" period and deleted a user's entire database, an incident CEO Amjad Masad called "unacceptable." While extreme, it underscores a broader pattern: a recent randomized trial found that experienced developers using AI assistance took 19% longer to complete tasks, with time spent reviewing and correcting the AI's output among the contributing factors. "Whether code comes from humans or AI, bugs are inevitable," argues Anysphere engineer Jon Kaplan. "Velocity without guardrails is dangerous."

Strategic Play in a Crowded Market

Priced at $40/month per user (with annual discounts), Bugbot represents Anysphere’s expansion beyond its flagship Cursor editor—a move backed by $900M in funding from Andreessen Horowitz, Thrive Capital, and tech luminaries like Jeff Dean. The startup faces fierce competition from GitHub Copilot’s debugging features, Anthropic’s Claude Code, and open-source alternatives like Cline. Yet Bugbot differentiates by focusing exclusively on pre-deployment error interception, with Anysphere claiming enterprise customers like OpenAI and Shopify already use it to balance speed and stability.

When the Debugger Debugs Itself

In a meta-validation of Bugbot’s capabilities, Anysphere engineers recently discovered their own service had gone silent. Investigation revealed Bugbot had accurately predicted its demise hours earlier: It flagged a pull request with the warning that merging it would crash the service. A human ignored the alert and merged anyway. "It caught the error that would kill it," says Varma. "That’s when we knew this wasn’t just another linter."

As AI reshapes software development, tools like Bugbot highlight the industry’s pivot from raw productivity toward intelligent oversight. The real test? Whether debugging AIs can outpace the complex failures they’re built to catch—before the next database vanishes.

Source: WIRED
