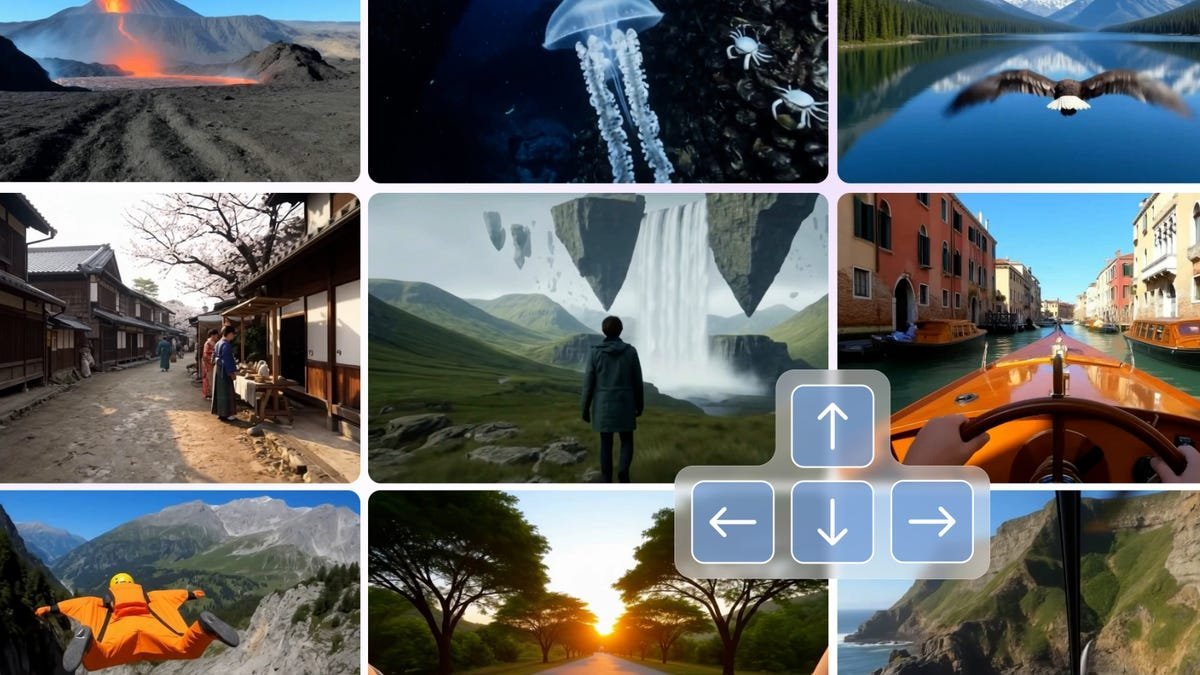

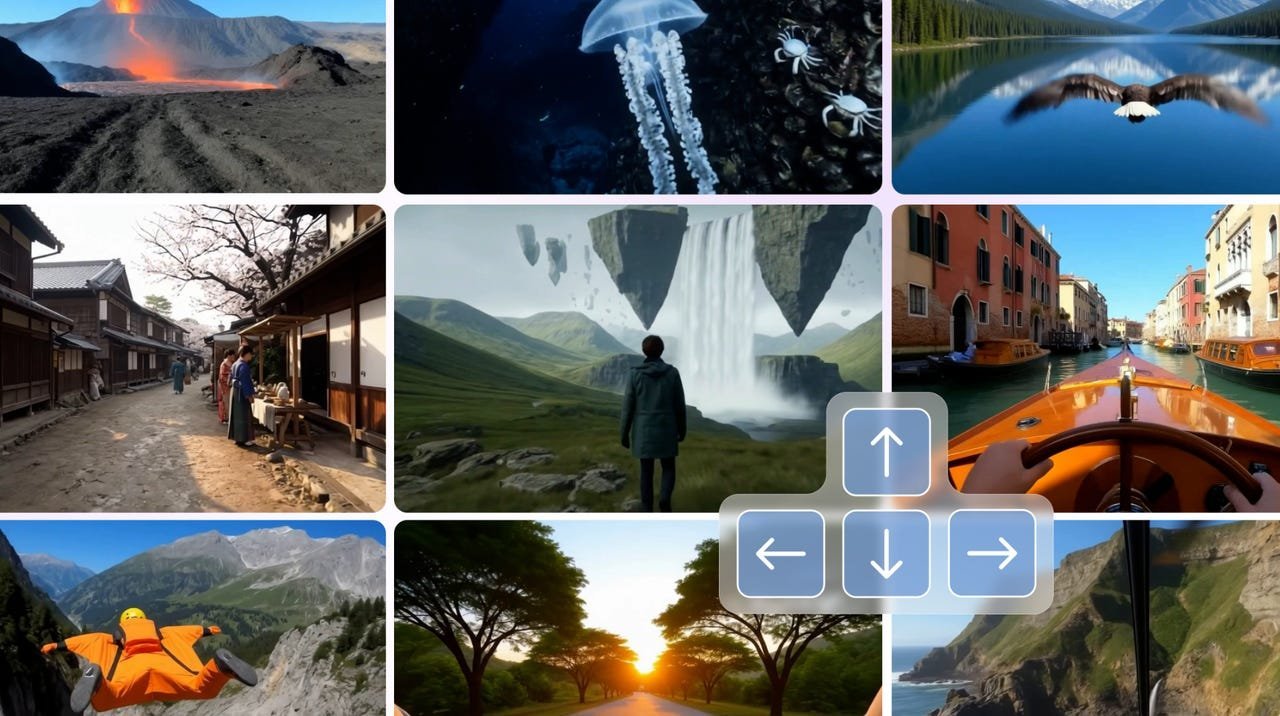

Google DeepMind's Genie 3 generates interactive, physics-grounded environments in real time from simple text prompts, and those environments retain changes through a persistent 'world memory'. This leap in world modeling promises transformative applications beyond gaming, in training, simulation, and embodied AI, while reigniting debate over whether AI can truly understand the world.

The quest to create AI systems that don't just process data but understand and simulate the physical world has taken a major leap forward with Google DeepMind's Genie 3. Unveiled this week, this next-generation "world model" goes beyond static video generation: users can build and explore interactive virtual environments in real time, governed by realistic physics and conjured entirely from natural language descriptions. According to Google DeepMind, these worlds can be navigated at 24 frames per second in 720p and stay consistent for a few minutes at a time.

Beyond Mimicry: The Power of the World Model

World models represent a fundamental shift in AI capability. Unlike conventional generative models that stitch together patterns from training data, world models aim to develop an internal representation of how the world works. They predict outcomes based on an implicit grasp of physics, causality, and object permanence. As Google DeepMind states, these systems "use their understanding of the world to simulate aspects of it, enabling agents to predict both how an environment will evolve and how their actions will affect it."

Genie 3 exemplifies this ambition. Feed it a prompt like "a man on horseback carrying a bag full of money is being chased by Texas rangers, who are also riding horses. All of the hooves are kicking up huge plumes of dust," and it doesn't just render a video clip. It constructs a living, responsive simulation in which users navigate, interact, and watch events unfold in real time, with the environment expanding dynamically as they explore.

The Game-Changer: Persistent World Memory

The most significant advancement in Genie 3 is its "world memory," which allows changes made within the simulation to persist as the user moves through it. In a striking demo, a user paints a wall with a virtual roller. When they look away and then return, the paint remains exactly where it was applied. This kind of persistence, a core requirement for any coherent reality, has been a major hurdle for interactive AI generation. Genie 3's ability to maintain state across actions sets it apart from its predecessors and opens the door to genuinely interactive storytelling and environment building.

Caption: Google DeepMind demonstrates Genie 3's persistent 'world memory' – paint applied to a virtual wall remains visible after the user looks away and returns. (Image: Google)


Implications Far Beyond Entertainment

While Google DeepMind highlights "next-generation gaming and entertainment" as obvious applications, the potential of robust world models like Genie 3 stretches much further:

- Embodied AI & Robotics: Training AI agents or robots in high-fidelity, infinitely variable simulations before deploying them in the real world. This could accelerate development in fields like autonomous vehicles or complex manufacturing.

- Emergency Preparedness & Training: Simulating dangerous or complex real-world scenarios (natural disasters, medical emergencies, infrastructure failures) so first responders can practice safely and build crucial muscle memory and decision-making skills, potentially in VR.

- Education & Prototyping: Creating immersive, interactive learning environments for complex scientific concepts or enabling rapid prototyping of physical spaces and products within dynamic simulations.

- Foundation for General AI: Developing AI that can reason about cause and effect in the physical world is widely considered a critical step toward more general, adaptable artificial intelligence.

The Enduring Question: Does It Truly "Understand"?

The description of Genie 3 as possessing an "understanding" of the world inevitably sparks debate. Critics argue that AI merely recognizes and replicates complex patterns from vast datasets and lacks genuine comprehension. Proponents counter that pattern recognition sophisticated enough to support accurate prediction and simulation is itself a form of understanding, potentially analogous to human cognition. Genie 3's ability to generate coherent, persistent worlds that react plausibly to unforeseen user actions pushes this debate further into the spotlight. Whether labeled "understanding" or "advanced pattern synthesis," the practical capability is undeniably transformative.

The Latent Space Frontier

Genie 3, alongside models like OpenAI's Sora, which stunned observers last year with its physically plausible video, signals a rapid maturation of AI's ability to model and interact with simulated realities. We are moving beyond passive content generation into an era of dynamic, user-driven world creation. The ability to translate imagination into persistent, interactive digital spaces with believable physics isn't just a new tool for game designers. It represents a fundamental shift in how we conceive of and interact with synthetic environments, blurring the lines between creator, explorer, and participant within AI-generated worlds.

Source: Based on original reporting by Webb Wright, ZDNet (August 8, 2025)
