Google Research introduces SLED, a decoding technique that improves LLM factuality by drawing on outputs from all transformer layers. The method improves factual accuracy by up to 16% on benchmarks without external data or fine-tuning, offering a lightweight way to reduce hallucinations.

Large language models frequently stumble over facts, confidently asserting inaccuracies—a flaw known as hallucination. While retrieval-augmented generation has been the go-to fix, it introduces complexity and isn't foolproof. Google Research's new paper at NeurIPS 2024 offers an elegant alternative: Self Logits Evolution Decoding (SLED), which realigns LLM outputs using the model's own internal knowledge.

The Layer Whisperer: How SLED Works

Traditional decoding relies solely on a transformer's final layer to predict the next token. SLED instead draws on the whole layer stack by:

- Extracting logits (prediction scores) from every layer

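- Reusing the final layer's projection matrix on the outputs of earlier (early-exit) layers, so every layer yields a distribution over the same vocabulary

- Computing a weighted average of these per-layer distributions, giving some layers more influence than others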
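The three steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Google's implementation: it follows this article's simplified "weighted average" description rather than the paper's full logits-evolution update, and the helper names `project` and `layer_mixed_distribution`, as well as the per-layer `weights`, are hypothetical. The toy lists stand in for real model tensors.

```python
import math
from typing import List, Sequence

Vector = List[float]

def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def project(hidden: Sequence[float], W: Sequence[Sequence[float]]) -> Vector:
    """Apply the shared final projection matrix W (vocab_size x hidden_dim)
    to one layer's hidden state, giving logits over the full vocabulary.
    Assumption: real models usually apply their final normalization first;
    that step is omitted in this sketch."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in W]

def layer_mixed_distribution(hidden_states, W, weights) -> Vector:
    """Hypothetical helper: weighted average of per-layer next-token
    distributions. hidden_states[-1] is the final layer; earlier entries
    play the role of early-exit layers."""
    if len(hidden_states) != len(weights):
        raise ValueError("need exactly one weight per layer")
    total_w = sum(weights)
    if total_w <= 0:
        raise ValueError("weights must sum to a positive value")
    mixed = [0.0] * len(W)
    for hidden, w in zip(hidden_states, weights):
        # Same projection matrix for every layer, so all layers share a vocabulary.
        probs = softmax(project(hidden, W))
        for i, p in enumerate(probs):
            mixed[i] += (w / total_w) * p
    return mixed

if __name__ == "__main__":
    # Toy setup: 3-token vocabulary, 2-dimensional hidden states, 3 layers.
    W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    hidden_states = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
    mixed = layer_mixed_distribution(hidden_states, W, [1.0, 1.0, 2.0])
    next_token_id = max(range(len(mixed)), key=mixed.__getitem__)
    print([round(p, 3) for p in mixed], next_token_id)
```

The weights here are arbitrary (the final layer simply counts double); the paper specifies its own procedure for combining layers, which this sketch does not reproduce.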

SLED corrects the popular misconception that Vancouver is British Columbia's capital by amplifying signals for 'Victoria' across layers


This approach captures reasoning signals that are lost when sampling only from the final layer. For example, take the word problem "Ash buys 6 toys at 10 tokens each, with a 10% discount for buying 4 or more." Standard decoding often outputs "6 × 10 = 60," ignoring the discount. SLED notices that intermediate layers favor "×" rather than "=" after "6 × 10," steering the model toward the correct "6 × 10 × 0.9 = 54."
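To make the "×" versus "=" example concrete, here is a toy sketch with made-up logits (not taken from the paper or any real model): the final layer alone slightly prefers "=", but averaging in two intermediate layers that lean toward "×" flips the greedy choice. The layer names, logit values, and weights are all hypothetical, and the blending is the simplified weighted average described above, not SLED's exact update. The `ash_total` helper just checks the arithmetic of the word problem.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

# Hypothetical next-token candidates after "6 × 10".
VOCAB = ["=", "×"]

# Made-up per-layer logits: the final layer slightly prefers "=",
# while two intermediate layers lean toward "×".
layer_logits = {
    "layer_8": [0.5, 2.0],
    "layer_16": [1.0, 2.5],
    "final": [2.0, 1.5],
}

def greedy(probs):
    """Return the highest-probability token."""
    return VOCAB[max(range(len(probs)), key=probs.__getitem__)]

def mixed(weights):
    """Weighted average of the per-layer softmax distributions."""
    total = sum(weights.values())
    out = [0.0] * len(VOCAB)
    for name, w in weights.items():
        for i, p in enumerate(softmax(layer_logits[name])):
            out[i] += w / total * p
    return out

def ash_total(quantity=6, unit_price=10, discount_pct=10, min_qty=4):
    """Price of the toys, applying the discount at min_qty or more items."""
    subtotal = quantity * unit_price
    if quantity >= min_qty:
        return subtotal * (100 - discount_pct) / 100
    return float(subtotal)

final_only = softmax(layer_logits["final"])
blended = mixed({"layer_8": 1.0, "layer_16": 1.0, "final": 2.0})

print(greedy(final_only))  # =
print(greedy(blended))     # ×
print(ash_total())         # 54.0
```

With these invented numbers the blended probability of "×" is roughly 0.60 versus about 0.38 from the final layer alone, which is enough to change the greedy pick.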

Benchmark Breakthroughs

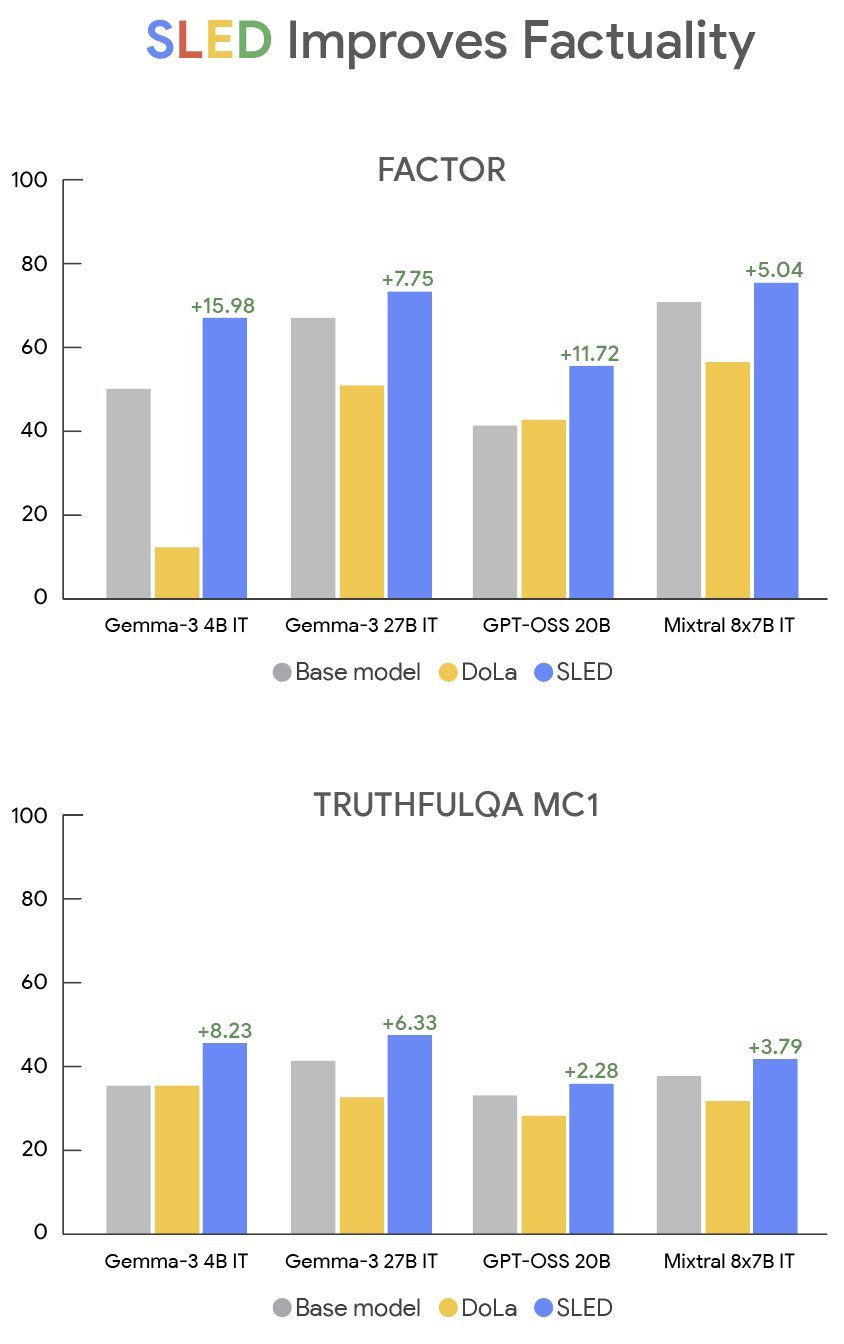

Tested across Gemma, GPT-OSS, and Mistral models, SLED demonstrated:

- Accuracy gains of up to 16% on the FACTOR and TruthfulQA benchmarks

- Consistent improvement in multiple-choice, open-ended, and chain-of-thought tasks

- Only about 4% more decoding latency than DoLa, the prior state-of-the-art method

SLED's accuracy improvements across models and datasets (Source: Google Research)


Crucially, SLED requires no external knowledge bases or fine-tuning. It even combines synergistically with other decoding methods—researchers boosted accuracy further by pairing it with DoLa.

The Future of Faithful AI

SLED takes a different view of the model: instead of treating a transformer as a black box judged only by its final layer, it harnesses the internal deliberation across its layers. As Google researchers Cyrus Rashtchian and Da-Cheng Juan note, this approach could extend to:

- Visual question answering

- Code generation

- Long-form content creation

The technique is open-sourced on GitHub, so developers can try it in existing pipelines. As AI accuracy increasingly matters in fields from healthcare to law, SLED offers a targeted way to curb hallucinations, using the model's own internal knowledge to correct its errors.

Source: Google Research Blog
