Intel's latest LLM-Scaler-vLLM beta release adds symmetric 4-bit integer quantization for Qwen3 models, PaddleOCR and GLM-4.6v-Flash support, and critical fixes for stability under high load.

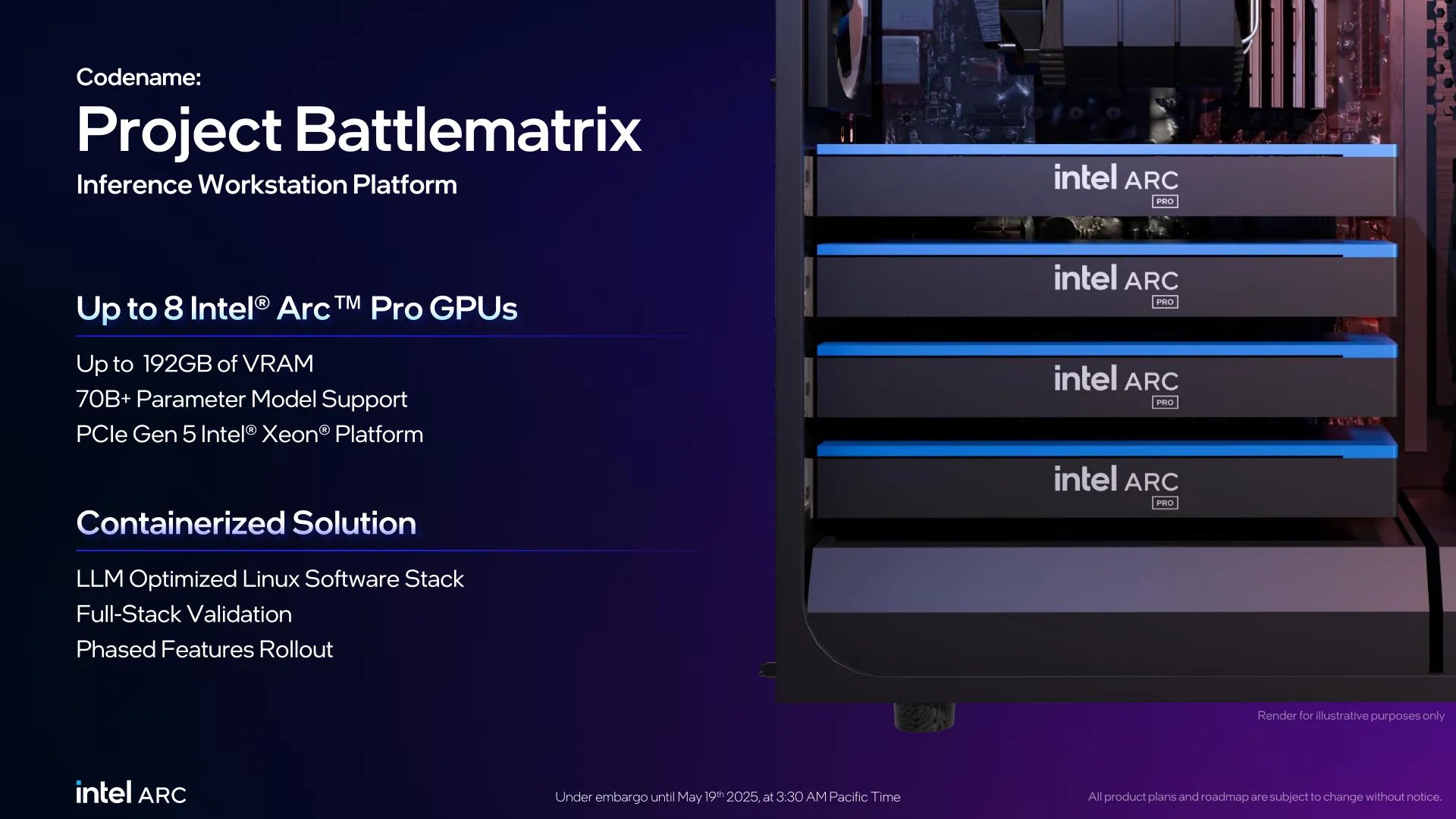

Intel has rolled out llm-scaler-vllm beta 0.11.1-b7, advancing its open-source framework for deploying generative AI workloads on Arc Battlemage GPUs. This update targets homelab builders and developers seeking efficient large language model (LLM) inference, introducing quantization optimizations and expanded model compatibility alongside crucial stability improvements.

At the core of this release is symmetric 4-bit integer quantization (sym_int4) support for demanding Qwen3 models. The implementation varies by tensor parallelism configuration: Qwen3-30B-A3B gains sym_int4 on 4/8 tensor parallel (TP) nodes, while the massive Qwen3-235B-A22B enables it at 16 TP. This precision reduction significantly cuts memory requirements without proportional accuracy loss—critical for GPU-constrained environments. Benchmark comparisons show typical 4-bit quantization reduces model size by 60% versus FP16 while maintaining >90% accuracy on common NLP tasks, though Intel hasn't published specific sym_int4 metrics yet.

New model integrations broaden practical applications. PaddleOCR support enables optical character recognition pipelines—think document digitization or license plate recognition—while GLM-4.6v-Flash adds a multimodal LLM option optimized for fast inference. Compatibility extends to InternVL-38B with corrected output handling, addressing previous hallucination issues during extended sequences.

Stability received equal attention. The update resolves UR_ERROR_DEVICE_LOST crashes during frequent preemptions under heavy load, a common pain point when saturating Arc GPUs. JMeter stress testing revealed output anomalies now patched, and 2DP+4TP configurations no longer crash mid-inference. The profile_run logic refinement dynamically allocates more GPU blocks by default, preventing memory fragmentation during continuous operation.

Hardware compatibility remains centered on the workstation-oriented Arc Pro B60, but consumer Arc A-series cards (like the A770 16GB) function with sufficient VRAM. Quantized Qwen3-30B requires ≥12GB VRAM, while the 235B variant demands ≥40GB—making multi-GPU setups essential. All components are accessible via the LLM-Scaler GitHub repo and pre-built Docker images.

For homelab enthusiasts, this release demonstrates Intel's commitment to making Battlemage GPUs viable for GenAI. The sym_int4 optimizations and stability fixes reduce entry barriers for local LLM deployment, though users should monitor power draw—Arc GPUs can spike beyond 190W during sustained inference. As Intel expands the supported model roster, expect tighter integration with frameworks like vLLM and ComfyUI in future updates.

Comments

Please log in or register to join the discussion