A deep dive into advanced web security configurations that dramatically reduced bot traffic while maintaining legitimate access, complete with Apache directives and real-world results.

Blocking automated bots keeps getting harder as the bots themselves become more sophisticated. This month, I've been experimenting with stricter Apache directives, and the result is a dramatic reduction in unwanted bot traffic while the site stays accessible to legitimate users.

The Evolution of Bot Blocking

The journey began with a simple yet powerful idea: block all unidentified bots while maintaining access for legitimate services. This approach builds upon previous security measures that included manual IP blocking, weekly log analysis, cloud IP range blocking, fake referral detection, and PHP injection prevention through .htaccess configurations.

What makes this new strategy particularly interesting is its focus on command-line clients and empty user agent strings. By targeting bots that use tools like curl, python, okhttp, libwww, Go-http-client, and Apache-HttpClient, we can effectively cut off a significant portion of automated traffic without impacting human users.
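As a rough illustration, a rule of this kind might look like the following mod_rewrite sketch. It is an assumption about how such a rule could be written, not necessarily the exact directives used on this site; the client names are the ones listed above, and the pattern is deliberately loose (for example, `python` also matches `python-requests`).

```apache
# Sketch only: requires mod_rewrite; usable in .htaccess or a <VirtualHost> block.
RewriteEngine On

# Condition 1: the User-Agent header is empty or missing.
# [OR] joins it with the next condition instead of the default AND.
RewriteCond %{HTTP_USER_AGENT} ^$ [OR]

# Condition 2: the User-Agent contains the name of a command-line client
# or HTTP library. [NC] makes the match case-insensitive.
RewriteCond %{HTTP_USER_AGENT} (curl|python|okhttp|libwww|Go-http-client|Apache-HttpClient) [NC]

# "-" leaves the URL unchanged; [F] answers with 403 Forbidden.
RewriteRule ^ - [F]
```

With a rule like this in place, a plain `curl` request to the site would receive a 403, because curl's default user agent string begins with `curl/`.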

The Technical Implementation

The Apache directives follow a carefully structured order:

- ACME Challenge Exclusion: Essential for SSL certificate validation

- Whitelisted IP Exclusion: For trusted sources and services

- Known Bot Allowance: For legitimate crawlers and services

- 403 Error Page Access: Ensuring error pages remain accessible

- RSS-Related File Access: Maintaining feed accessibility

- Bot Blocking: The core of the strategy targeting command-line clients and empty headers

This layered approach lets legitimate traffic through while automated bots are rejected immediately. The beauty of this system lies in its simplicity - most bots cannot get past these rules unless their operators change how the client identifies itself.
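The order above could be sketched with mod_rewrite roughly as follows. This is a minimal sketch under stated assumptions, not the actual file: the IP address `203.0.113.10`, the crawler names, the `/403.html` error page, and the feed paths are all placeholders to be replaced with real values. Each exception ends rule processing with `[L]` so that the request never reaches the final blocking rule.

```apache
# Sketch only: requires mod_rewrite. All addresses, names, and paths below
# are hypothetical placeholders.
RewriteEngine On

# Assumed custom error page for blocked requests.
ErrorDocument 403 /403.html

# 1. ACME challenge: let certificate validation requests through.
RewriteCond %{REQUEST_URI} ^/\.well-known/acme-challenge/
RewriteRule ^ - [L]

# 2. Whitelisted IP (placeholder address from the documentation range).
RewriteCond %{REMOTE_ADDR} ^203\.0\.113\.10$
RewriteRule ^ - [L]

# 3. Known bots (placeholder crawler names).
RewriteCond %{HTTP_USER_AGENT} (Googlebot|bingbot) [NC]
RewriteRule ^ - [L]

# 4. The 403 error page itself, so a blocked client can still be served it.
RewriteCond %{REQUEST_URI} ^/403\.html$
RewriteRule ^ - [L]

# 5. RSS-related files (placeholder feed paths).
RewriteCond %{REQUEST_URI} ^/(feed/?|feed\.xml|rss\.xml)$ [NC]
RewriteRule ^ - [L]

# 6. Everything else: block empty user agents and command-line clients.
RewriteCond %{HTTP_USER_AGENT} ^$ [OR]
RewriteCond %{HTTP_USER_AGENT} (curl|python|okhttp|libwww|Go-http-client|Apache-HttpClient) [NC]
RewriteRule ^ - [F]
```

The order matters because mod_rewrite evaluates rules top to bottom: if the blocking rule came first, an ACME validation request or a feed reader with a library-style user agent would be rejected before any exception could apply. Note that step 3 trusts the user agent string, which a client can set to anything, so it is only as reliable as that header.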

Real-World Results

The data speaks volumes about the effectiveness of this approach. Comparing the logs for November 2025, January 2026, and the current month reveals dramatic improvements:

November 2025 Baseline:

- 34% Linux traffic

- 23% unknown

- 40% fake referrals

- 54% 404s

- 20% 403s

- 43% unknown browser

January 2026 (Post-Optimization):

- 33% Linux (stable)

- 23% unknown (stable)

- 12% fake referrals (significant reduction)

- 35% 404s (reduced)

- 33% 403s (increased due to better detection)

- 40% unknown browser

Current Month (New Strategy):

- 30% Linux

- 24% unknown

- Less than 3% fake referrals (!!!)

- 18% 404s

- 62% 403s (nearly doubled, indicating better bot detection)

- 40% unknown browser

The most striking improvement is the drop in fake referrals: from 40% in November to 12% in January, and to less than 3% under the new strategy. Over the same period, 403s rose from 20% to 62% while 404s fell from 54% to 18%. The increase in 403 errors isn't a failure but rather evidence that the system is successfully identifying and blocking unwanted traffic.

The Bot Persistence Problem

One fascinating observation is the persistence of bots even after receiving 403 Forbidden responses. Many automated systems continue to attempt access despite clear indications that they're not welcome. This behavior raises interesting questions about the economics of bot operations - why would operators continue to waste resources on clearly blocked targets?

The answer likely lies in the scale of operations. When running thousands or millions of bot instances, the cost of continuing to probe a single site is negligible compared to the potential value of finding a vulnerability. This persistence is frustrating, but every repeated request that ends in a 403 is one the blocking rules handled as intended.

Practical Applications

For those interested in implementing similar protections, the Apache directives are available in a TXT file that can be adapted to various server configurations. The key is understanding the logic behind the rules rather than simply copying them verbatim.

Looking Forward

This exploration represents just one chapter in the ongoing battle between website operators and automated systems. As AI and bot technology continue to evolve, so too must our defensive strategies. The success of this approach suggests that focusing on the fundamental characteristics of bot behavior - such as command-line clients and empty user agent strings - can be more effective than trying to maintain exhaustive lists of known bad actors.

The future of web security may lie not in increasingly complex detection algorithms, but in simple, elegant rules that exploit the inherent limitations of automated systems. By understanding the nature of the threat, we can build more effective defenses that protect our digital spaces while maintaining the open, accessible nature of the web.

This journey into bot blocking has been both technically rewarding and intellectually stimulating. The dramatic improvements in traffic quality demonstrate that thoughtful security configurations can make a real difference in the digital landscape. As we continue to refine and improve these systems, the goal remains clear: create digital spaces that serve human needs while effectively managing the challenges posed by automated systems.

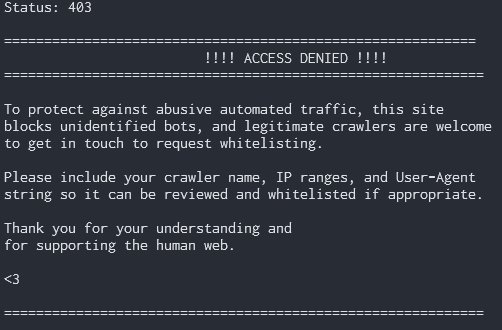

The featured image for this article captures the essence of digital security - a complex, layered approach to protecting valuable digital assets while maintaining accessibility for legitimate users. Just as physical security systems use multiple layers of protection, effective web security requires a comprehensive strategy that addresses threats at multiple levels.

This image represents the technical implementation of the blocking strategy, showing how Apache directives can be used to create effective barriers against unwanted traffic while maintaining smooth operation for legitimate users. The visual representation helps illustrate the layered approach that makes this security strategy so effective.
