A new open-source compression library, memlz, promises decompression speeds rivaling the raw memory copy function memcpy(), targeting niche applications where latency is critical. Benchmarks show it outperforms established tools like LZ4 in specific scenarios, while its no-copy streaming mode eliminates overheads common in alternatives. Though in early beta, memlz could revolutionize data processing for developers in edge computing and real-time systems.

In the relentless pursuit of performance, data compression often forces a trade-off: reduce size at the cost of speed. But what if compression could be as fast as simply copying memory? Enter memlz, a new header-only compression library that aims for speeds approaching memcpy(), the C standard library's memory copy function (also available in C++). Developed by GitHub user rrrlasse and released in its first beta, memlz targets specialized use cases where microseconds matter, such as high-frequency trading, real-time analytics, or embedded systems.

The Need for Speed: Benchmarks That Defy Convention

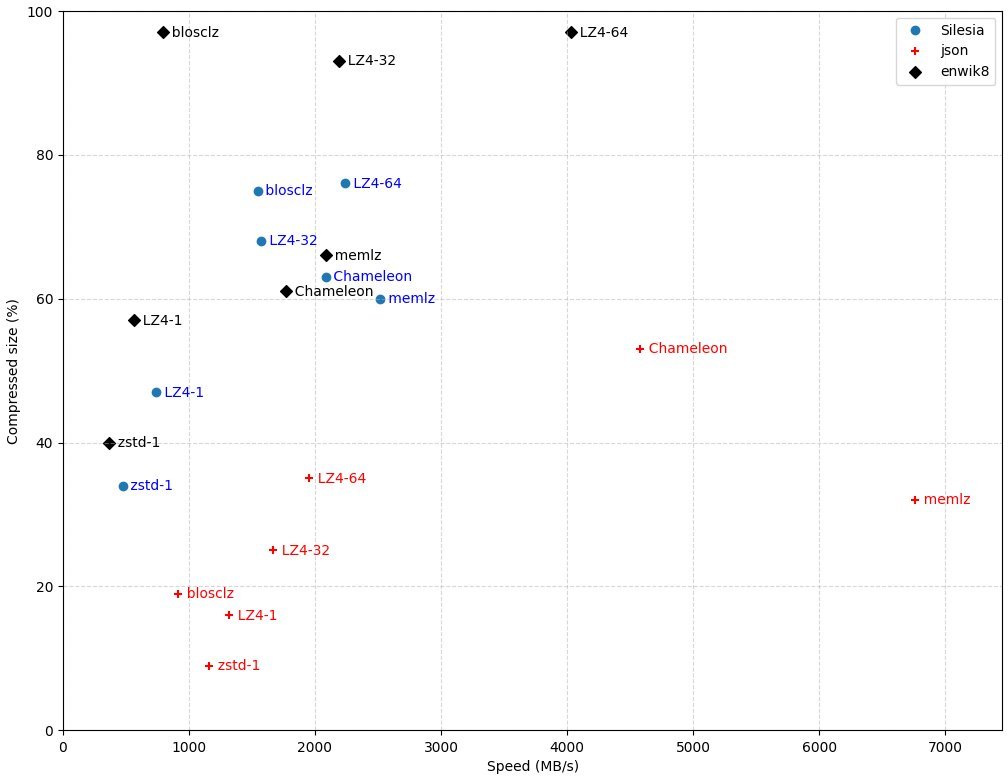

memlz isn't about winning compression ratio contests; it's built for raw velocity. In benchmarks on an Intel i7 system with a memcpy() speed of 14,000 MB/s, memlz demonstrated decompression rates that leave many industry staples in the dust. While libraries like Snappy, FastLZ, LZAV, and LZO typically operate well below 1,000 MB/s, memlz approaches and, in some tests, exceeds that mark. Only LZ4, with its acceleration parameter cranked to 32–64, comes close, but even then, memlz holds its own or edges ahead depending on the dataset (e.g., enwik8, Silesia, or JSON files).

Benchmark results highlight memlz's decompression speed advantages, though compression ratios may lag behind more mature libraries.
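To put the 14,000 MB/s memcpy() baseline in context, here is a minimal sketch of how such a figure might be measured on your own machine. It is not the benchmark harness used for memlz; the function name `memcpy_throughput_mbps` and the choice of `clock()` as the timer are assumptions made for illustration, and results will vary with buffer size, cache effects, and compiler flags.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <time.h>

/* Hypothetical helper (not part of memlz): copies `size` bytes `iterations`
   times and returns the observed throughput in MB/s (1 MB = 1,000,000 bytes).
   Returns -1.0 if the buffers cannot be allocated. */
double memcpy_throughput_mbps(size_t size, int iterations)
{
    assert(size > 0 && iterations > 0);

    unsigned char *src = malloc(size);
    unsigned char *dst = malloc(size);
    if (src == NULL || dst == NULL) {
        free(src);
        free(dst);
        return -1.0;
    }

    /* Touch both buffers so page faults are not counted in the timing. */
    memset(src, 0xA5, size);
    memset(dst, 0, size);

    volatile unsigned char sink = 0;
    clock_t start = clock();
    for (int i = 0; i < iterations; i++) {
        memcpy(dst, src, size);
        /* Read one copied byte so the copy has an observable effect. */
        sink ^= dst[(size_t)i % size];
    }
    clock_t end = clock();
    (void)sink;

    free(src);
    free(dst);

    double seconds = (double)(end - start) / CLOCKS_PER_SEC;
    if (seconds <= 0.0) {
        /* Run was shorter than the timer resolution: assume one tick. */
        seconds = 1.0 / CLOCKS_PER_SEC;
    }
    return ((double)size * (double)iterations) / seconds / 1e6;
}
```

Calling `memcpy_throughput_mbps((size_t)64 << 20, 50)` copies 64 MiB fifty times; buffers much larger than the last-level cache tend to give a more conservative number than small ones.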

Decompression is where memlz shines brightest, though its competitiveness varies with data structures. As the developer notes, "Decompression speed is faster but less competitive depending on data type." This makes it ideal for read-heavy workloads where rapid data access is paramount.

Developer-Friendly Design: Simplicity Meets Innovation

memlz lowers the barrier to adoption with a header-only implementation. Integration is straightforward—just include memlz.h and call the core function:

```c
#include "memlz.h"

...

size_t len = memlz_compress(destination, source, size);
```

For handling large datasets or streaming small packets without sacrificing ratio, memlz offers a stateful streaming mode. This avoids the common pitfall of compressing tiny chunks individually, which tanks efficiency. Instead, developers can process data incrementally:

```c
memlz_state* state = (memlz_state*)malloc(sizeof(memlz_state));
memlz_reset(state);
while(...) {
    ...
    size_t len = memlz_stream_compress(destination, source, size, state);
    ...
}
```

Each call processes the full payload on-the-fly, with decompression handled in a single step. The format includes headers for easy size checks via memlz_compressed_len() and memlz_decompressed_len().

Safety and the No-Copy Advantage

Safety is non-negotiable in today's threat landscape. According to the project, decompression of corrupted data completes in predictable time and never accesses memory outside the source or destination buffers, a guarantee supported by its size-check functions. But the standout innovation is its "no-copy" streaming. Unlike LZ4 and others, which incur an extra memcpy() to manage internal queues in streaming mode, memlz's algorithm sidesteps this overhead. A real-world test with the eXdupe file archiver, which deduplicates data before compression, showcased the impact:

- Without streaming: Output of 2,148,219,886 B (53% ratio) at 4,368 MB/s.

- With memlz streaming: Output of 2,145,241,775 B (similar ratio) at 4,616 MB/s.
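The relative difference between these two runs can be checked with a line of arithmetic. The helper below is purely illustrative (the name `percent_change` is made up for this sketch) and uses only the figures quoted in the list above.

```c
#include <assert.h>

/* Relative change from `before` to `after`, expressed in percent.
   Illustrative helper only; not part of memlz or eXdupe. */
double percent_change(double before, double after)
{
    assert(before != 0.0);
    return (after - before) / before * 100.0;
}
```

Here `percent_change(4368.0, 4616.0)` comes out at about 5.7 (the throughput gain in percent), while `percent_change(2148219886.0, 2145241775.0)` is about -0.14, meaning the streamed output is marginally smaller as well.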

The roughly 6% speed boost (4,616 vs. 4,368 MB/s), achieved while maintaining compression efficiency, underscores how eliminating redundant copies can accelerate pipelines handling frequent small packets.
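Returning to the safety property described at the start of this section, the sketch below shows what "never accesses memory outside the source or destination buffers" can look like in practice. It decodes a deliberately simple, hypothetical token format invented for this example; it is not memlz's format or algorithm, and `toy_decompress` and `TOY_ERROR` are assumed names. Every read and write is checked against the buffer bounds before it happens, and each loop iteration consumes at least two input bytes, so the running time is bounded by the input length even for corrupted data.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define TOY_ERROR ((size_t)-1)

/* Hypothetical toy format (NOT memlz's):
     0x00, len, <len literal bytes>       copy literals to the output
     0x01, len, dist_lo, dist_hi          copy len bytes from dist bytes back
   Returns the number of bytes written, or TOY_ERROR on malformed input. */
size_t toy_decompress(uint8_t *dst, size_t dst_cap,
                      const uint8_t *src, size_t src_len)
{
    assert(dst != NULL && src != NULL); /* caller bugs, not data errors */

    size_t in = 0, out = 0;
    while (in < src_len) {
        if (src_len - in < 2) return TOY_ERROR;      /* truncated header */
        uint8_t tag = src[in];
        size_t len = src[in + 1];
        in += 2;

        if (len > dst_cap - out) return TOY_ERROR;   /* would overrun dst */

        if (tag == 0) {                              /* literal run */
            if (len > src_len - in) return TOY_ERROR; /* would overrun src */
            memcpy(dst + out, src + in, len);
            in += len;
            out += len;
        } else if (tag == 1) {                       /* back-reference */
            if (src_len - in < 2) return TOY_ERROR;
            size_t dist = (size_t)src[in] | ((size_t)src[in + 1] << 8);
            in += 2;
            if (dist == 0 || dist > out) return TOY_ERROR; /* before dst start */
            for (size_t i = 0; i < len; i++)         /* byte-wise: may overlap */
                dst[out + i] = dst[out + i - dist];
            out += len;
        } else {
            return TOY_ERROR;                        /* unknown token */
        }
    }
    return out;
}
```

The design choice worth noting is that every bound is expressed as a subtraction from a known-valid limit (`dst_cap - out`, `src_len - in`) rather than an addition that could overflow, so a hostile length field cannot wrap the check around.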

Caveats and the Road Ahead

As a beta release, memlz comes with asterisks. It has only been tested on Intel hardware, and the developer warns of potential compatibility breaks. Compression ratios, while functional, trail those of optimized libraries like LZ4 for many datasets. Yet for scenarios where speed is the ultimate KPI, such as distributed systems or IoT devices, memlz offers a tantalizing glimpse into a near future where compression no longer means compromise. Developers should monitor its evolution; if stability improves, memlz could become a secret weapon for latency-sensitive applications.

Source: memlz GitHub Repository