MIT's Abdul Latif Jameel Poverty Action Lab launches Project AI Evidence to evaluate AI tools addressing poverty through randomized controlled trials, prioritizing solutions in education, climate resilience, health, and economic mobility.

The Abdul Latif Jameel Poverty Action Lab (J-PAL) at MIT has launched Project AI Evidence (PAIE), a new research initiative focused on rigorously evaluating artificial intelligence tools designed to alleviate global poverty. The project connects governments, tech companies, and nonprofits with economists from MIT and J-PAL's global network to identify which AI solutions effectively combat social inequities and which may inadvertently cause harm.

Artificial intelligence applications in social sectors have proliferated rapidly, yet evidence of their real-world impact remains scarce. PAIE addresses this gap by applying J-PAL's established methodology of randomized controlled trials—the gold standard in impact evaluation—to AI interventions. "AI has incredible potential, but we need to maximize its benefits and minimize possible harms," says J-PAL Global Executive Director Iqbal Dhaliwal. "Our approach identifies not just what works, but for whom and under what conditions."

Eight initial studies have received funding in PAIE's first round, each targeting urgent policy questions:

AI in Education: Researchers are testing AI tools that personalize learning in resource-constrained settings. In Kenya, education platform EIDU uses AI to help teachers identify student learning gaps and adapt lesson plans. In India, the NGO Pratham is developing AI to scale its evidence-based "Teaching at the Right Level" methodology. Both projects will measure impacts on teacher effectiveness and student outcomes.

Combating Gender Bias: A collaboration with Italy's Ministry of Education evaluates whether AI tools can reduce unconscious bias in classrooms. One tool predicts student performance while another provides real-time feedback on teacher decision diversity. "If effective, these could become powerful levers for closing gender gaps in STEM achievement," notes MIT economist Daron Acemoglu.

Economic Mobility: In Kenya, researchers are assessing an AI tool from Swahilipot Hub and Tabiya that identifies overlooked skills in job seekers. The study examines whether AI-enhanced career guidance improves employment outcomes for women, youth, and those without formal credentials.

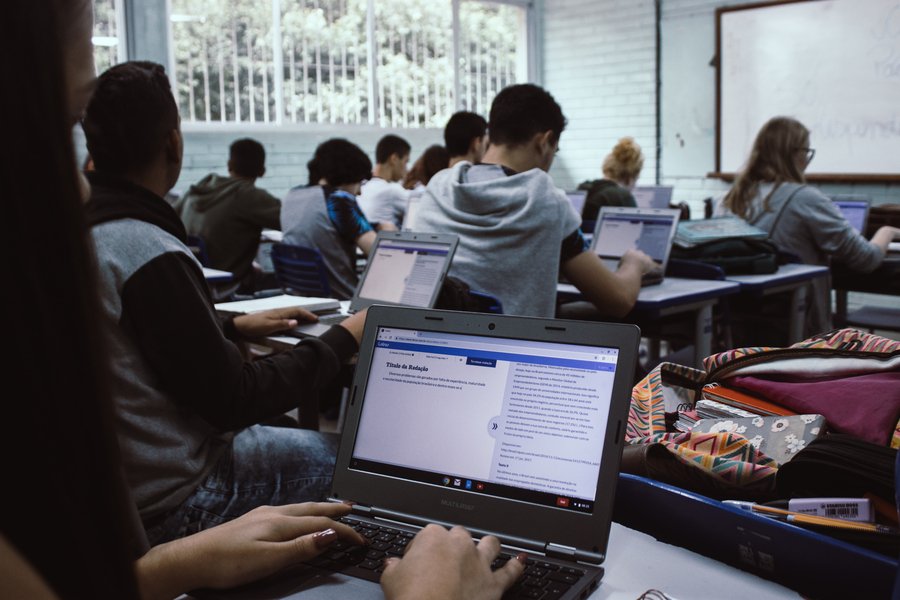

High school students using AI writing tools in Brazil—one of many applications being evaluated through PAIE

Technical evaluations under PAIE follow a structured framework:

- Pre-deployment analysis: Assessing algorithmic fairness and potential biases

- Field testing: Randomized A/B testing with control groups

- Impact measurement: Quantifying outcomes against predefined poverty metrics

- Scalability assessment: Evaluating cost-effectiveness and implementation feasibility
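The article does not publish PAIE's evaluation protocols. As a rough illustration of the field-testing, impact-measurement, and cost-effectiveness steps listed above, the sketch below shows a generic randomized comparison. It randomly assigns units to treatment and control arms, estimates the average effect as a difference in means with a conventional (Neyman) standard error, and converts that effect into a cost per unit of impact. All function names, the simulated test-score data, the assumed +5-point effect, and the $20 per-participant cost are hypothetical and chosen for illustration only.

```python
import random
import statistics


def assign_treatment(unit_ids, seed=0):
    """Randomly split units into two equal-sized arms; returns the treated set.

    Hypothetical helper: every unit not returned is in the control group.
    """
    rng = random.Random(seed)
    shuffled = list(unit_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return set(shuffled[:half])


def difference_in_means(outcomes, treated):
    """Estimate the average treatment effect (ATE) and its Neyman standard error.

    outcomes: dict mapping unit id -> measured outcome (e.g. a test score).
    """
    t = [y for uid, y in outcomes.items() if uid in treated]
    c = [y for uid, y in outcomes.items() if uid not in treated]
    ate = statistics.mean(t) - statistics.mean(c)
    # Sample variances require at least two units per arm.
    se = (statistics.variance(t) / len(t) + statistics.variance(c) / len(c)) ** 0.5
    return ate, se


def cost_per_unit_impact(cost_per_participant, ate):
    """Cost-effectiveness: spending needed per participant to gain one outcome unit."""
    if ate <= 0:
        return float("inf")  # no measured benefit, so no finite cost per unit gained
    return cost_per_participant / ate


if __name__ == "__main__":
    # Hypothetical pilot: 200 students, outcome is an end-of-term test score.
    students = range(200)
    treated = assign_treatment(students, seed=42)
    rng = random.Random(7)
    outcomes = {
        # Simulated data with an assumed true effect of +5 points for treated students.
        s: rng.gauss(50, 10) + (5 if s in treated else 0)
        for s in students
    }
    ate, se = difference_in_means(outcomes, treated)
    print(f"Estimated effect: {ate:.2f} points (SE {se:.2f})")
    print(f"Cost per point gained: ${cost_per_unit_impact(20.0, ate):.2f}")
```

Randomization is what makes the simple difference in means an unbiased estimate of the effect: on average, the two arms differ only in whether they received the tool. Real evaluations typically add stratified assignment, covariate adjustment, and subgroup analyses (for example, by gender or age) to ask "for whom" a tool works.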

The initiative has attracted significant backing, including funding from Google.org, Community Jameel, Canada's International Development Research Centre, UK International Development, and Amazon Web Services. A separate grant from Eric and Wendy Schmidt will support studies on generative AI in workplaces across low- and middle-income countries.

"We urgently need to study what works, what doesn't, and why," emphasizes Alex Diaz, Head of AI for Social Good at Google.org. This sentiment is echoed by Maggie Gorman-Velez of IDRC: "Responsible scaling requires locally relevant evidence—especially for technologies affecting vulnerable populations."

J-PAL brings distinctive expertise to this effort, with more than two decades of experience and over 2,500 evaluations of social programs worldwide. Its approach recognizes that AI tools require contextual adaptation: a chatbot that is effective in urban health clinics may fail in rural areas without internet connectivity. Future funding rounds will expand to climate applications such as flood prediction systems and Amazon deforestation monitoring.

For policymakers and developers, PAIE offers actionable insights:

- AI tutors show promise but require careful integration with teacher workflows

- Algorithmic career advisors work best as complements to human counselors

- Real-time bias detection requires transparent model architectures

"This isn't about replacing human judgment," Dhaliwal clarifies. "It's about creating AI that augments local expertise while respecting community needs." The initiative continues to seek implementation partners and will publish evaluation protocols publicly to establish benchmarks for responsible AI deployment in social sectors.

Organizations interested in participating can contact J-PAL by email or visit the Project AI Evidence portal for detailed proposal guidelines.