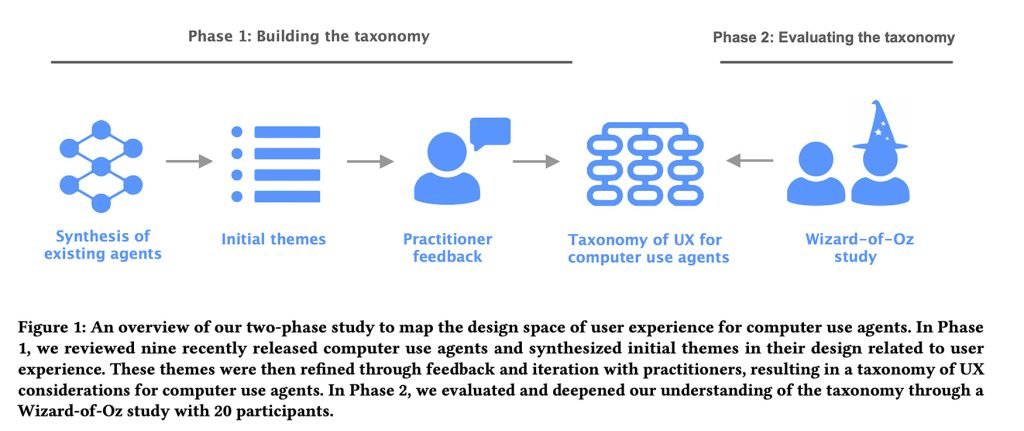

Apple researchers conducted a two-phase study identifying key user experience patterns for AI agents, finding users demand transparency without micromanagement and context-aware behavior.

A new Apple research study titled "Mapping the Design Space of User Experience for Computer Use Agents" investigates how users expect to interact with AI assistants. While significant resources have been invested in developing AI agent capabilities, Apple's Human Interface team found critical gaps in understanding what users expect from interaction design and interfaces.

Phase 1: Building the Design Taxonomy

The researchers analyzed nine existing AI agent implementations including Claude Computer Use Tool, Adept, OpenAI Operator, and TaxyAI. They then consulted eight UX and AI practitioners from a major technology company to develop a comprehensive framework. This taxonomy organizes AI agent interactions into four core categories with 55 specific features:

- User Query - How commands are input

- Explainability of Agent Activities - Presenting information about actions

- User Control - Intervention mechanisms

- Mental Model & Expectations - Conveying capabilities and limitations

This structure provides designers with a blueprint covering everything from error communication to user intervention workflows.

Phase 2: The Wizard-of-Oz Experiments

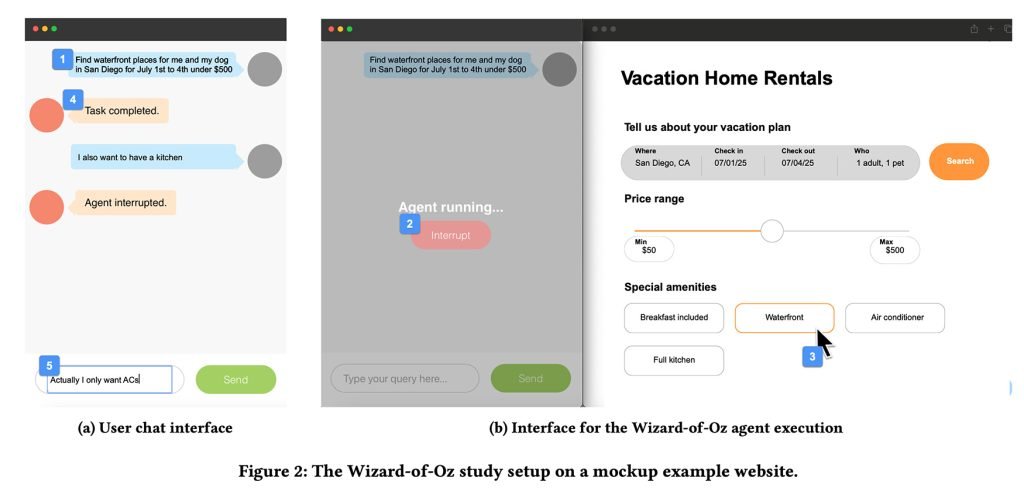

The team recruited 20 participants familiar with AI agents for hands-on testing. In a Wizard-of-Oz setup, participants interacted through a chat interface with what they believed was an AI agent, while researchers manually performed the tasks in another room. Each participant completed either a vacation planning or an online shopping scenario consisting of six tasks, with researchers intentionally introducing errors such as navigation loops or incorrect selections.

The setup featured:

- Text-based natural language inputs

- Visible agent execution interface

- "Interrupt" button for immediate control

- Intentional error scenarios

Researchers recorded sessions, analyzed chat logs, and conducted post-task interviews to identify pain points and expectations.

Key Findings

Transparency Without Micromanagement

Users want visibility into agent actions but resist overseeing every step. As one participant noted: "If I have to monitor everything, I might as well do it myself."

Context-Dependent Behavior

Expectations shift dramatically based on task familiarity:

- Exploratory tasks: Users want intermediate steps and confirmation pauses

- Familiar tasks: Users prefer minimal interruptions

Risk-Based Control Requirements

Users demand greater oversight for high-stakes actions:

- Financial transactions

- Account/payment changes

- Communication on user's behalf

Trust Breakdown Points

Participants reacted strongly when agents:

- Made silent assumptions without explanation

- Deviated from plans without notification

- Selected ambiguous options without clarification

"The discomfort peaked when agents weren't transparent about choices that could lead to selecting wrong products," researchers observed.

Implications for Developers

The study provides concrete guidance for AI agent implementation:

- Implement graduated transparency: Show actions without overwhelming detail

- Design context-aware interruption points

- Create clear signaling for deviations from original plans

- Build explicit confirmation flows for high-risk actions
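One way to read the guidance above is as a small policy that decides, per action, whether the agent should pause for confirmation, surface a notice, or simply proceed. The following is a minimal sketch under stated assumptions, not anything taken from the study: the `Action` type, the `decide` function, the action kinds in `HIGH_RISK_KINDS`, and the three outcome labels are all hypothetical names chosen for illustration, and the ordering of the rules is just one possible mapping of the findings.

```python
from dataclasses import dataclass

# Hypothetical action kinds treated as high-stakes. They mirror the article's
# examples: financial transactions, account/payment changes, and
# communication on the user's behalf.
HIGH_RISK_KINDS = {"purchase", "payment_change", "account_change", "send_message"}


@dataclass
class Action:
    kind: str                         # e.g. "scroll", "purchase"
    description: str                  # human-readable summary shown to the user
    deviates_from_plan: bool = False  # True if the agent left its stated plan


def decide(action: Action, task_is_familiar: bool) -> str:
    """Return 'confirm', 'notify', or 'proceed' for a proposed action.

    Assumed rule order (an illustration, not the study's method):
      1. High-risk actions always need explicit confirmation.
      2. Exploratory (unfamiliar) tasks pause for confirmation.
      3. Deviations from the plan are signaled without blocking.
      4. Everything else runs with minimal interruption.
    """
    if action.kind in HIGH_RISK_KINDS:
        return "confirm"
    if not task_is_familiar:
        return "confirm"
    if action.deviates_from_plan:
        return "notify"
    return "proceed"


if __name__ == "__main__":
    checkout = Action("purchase", "Buy the selected flight")
    detour = Action("navigate", "Switched to a different site", deviates_from_plan=True)
    print(decide(checkout, task_is_familiar=True))
    print(decide(detour, task_is_familiar=True))
```

A real agent would likely need finer-grained risk levels and per-user preferences; the point of the sketch is only that risk, task familiarity, and plan deviation can be treated as separate inputs to the interruption decision.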

These findings arrive as Apple prepares its own AI features for iOS 18. The full study, available on arXiv, offers valuable insights for any developer implementing agentic capabilities.
