A developer has created an immersive ESP32-powered AR shooting game using 3D-printed hardware and computer vision, transforming any room into an interactive firing range with real-time targeting.

Imagine turning your living room into an interactive shooting gallery where virtual targets emerge from your actual furniture and decor. That's exactly what developer HJWWalters has achieved with an ingenious ESP32-based augmented reality project that blends physical hardware with computer vision. Shared on the ESP32 subreddit, this open-source project demonstrates how accessible components can create surprisingly immersive gaming experiences.

At the hardware core is an ESP32 microcontroller paired with a GC9A01 circular TFT display mounted inside a 3D-printed gun shell. The display sits on the barrel, creating an aiming interface that shows real-time video from an onboard camera. The ESP32 processes this feed to generate targets dynamically based on room features. Power comes from a rechargeable battery pack, which frees players from cords and means session length is limited by battery capacity rather than by artificial timers.
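The article does not describe the detection code itself, so the following is only a minimal sketch of one way room features could drive target placement. It assumes a grayscale camera frame and uses a simple contrast heuristic: the frame is split into square cells and the cell with the strongest brightness gradients (often a furniture edge) is chosen. `Frame`, `Spot`, and `findTargetSpot` are hypothetical names, not taken from the project.

```cpp
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical types: the project's real detection code is not shown in the article.
struct Frame {
    int width;
    int height;
    std::vector<uint8_t> pixels;  // row-major grayscale, width * height bytes
};

struct Spot {
    int x;       // centre of the chosen cell, in frame coordinates
    int y;
    long score;  // summed gradient magnitude inside that cell
};

// Assumed heuristic: split the frame into cell x cell blocks, sum the
// horizontal and vertical brightness differences inside each block, and
// return the centre of the block with the highest sum.
// Returns {-1, -1, -1} if the frame is smaller than one cell.
Spot findTargetSpot(const Frame& f, int cell) {
    Spot best{-1, -1, -1};
    for (int cy = 0; cy + cell <= f.height; cy += cell) {
        for (int cx = 0; cx + cell <= f.width; cx += cell) {
            long sum = 0;
            for (int y = cy; y < cy + cell - 1; ++y) {
                for (int x = cx; x < cx + cell - 1; ++x) {
                    int p = f.pixels[y * f.width + x];
                    int right = f.pixels[y * f.width + x + 1];
                    int down = f.pixels[(y + 1) * f.width + x];
                    sum += std::abs(p - right) + std::abs(p - down);
                }
            }
            if (sum > best.score) {
                best = {cx + cell / 2, cy + cell / 2, sum};
            }
        }
    }
    return best;
}
```

A heuristic like this is cheap enough for a microcontroller because it needs only integer arithmetic and a single pass over the frame. A real build would likely add a randomness step so targets do not always spawn at the same edge.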

Game mechanics emphasize precision over speed: players get exactly ten shots per session to hit randomly spawned AR targets. The system logs accuracy metrics and scores without time constraints, focusing on deliberate marksmanship. Using computer vision algorithms, the ESP32 identifies potential target locations by analyzing room geometry through the camera feed. When targets appear, they blend visually with the physical surroundings, appearing to emerge from furniture, walls, or decor items.
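The ten-shot session described above can be modeled with a small bookkeeping struct. This is a sketch under assumptions: only the ten-shot limit and the idea of logging accuracy and score come from the article, while the `Session` type, its member names, and the per-hit `points` value are hypothetical.

```cpp
// Hypothetical names; only the ten-shot limit comes from the article.
constexpr int kShotsPerSession = 10;

struct Session {
    int shotsFired = 0;
    int hits = 0;
    int score = 0;

    // True once all ten shots have been used.
    bool finished() const { return shotsFired >= kShotsPerSession; }

    // Record one trigger pull. Returns false once the session is over,
    // so extra trigger pulls are ignored rather than counted.
    bool recordShot(bool hit, int points) {
        if (finished()) return false;
        ++shotsFired;
        if (hit) {
            ++hits;
            score += points;
        }
        return true;
    }

    // Accuracy as a percentage of shots fired so far (0 before the first shot).
    float accuracyPercent() const {
        return shotsFired == 0 ? 0.0f : 100.0f * hits / shotsFired;
    }
};
```

Because there is no timer, the session state depends only on trigger pulls, which keeps the logic independent of frame rate or camera latency.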

The circular display's placement proves crucial for immersion. By positioning it at the gun's muzzle, the developer created a natural sighting system in which players physically aim at real-world locations while viewing processed imagery. This arrangement avoids the discomfort of head-mounted displays while preserving spatial awareness.
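Since the player aims by pointing the whole gun, a hit check can reduce to asking whether the display's center falls inside a target. The sketch below assumes the crosshair sits at the center of the screen and that targets are circles in display coordinates; neither detail is confirmed by the article. The 240x240 resolution is the usual one for GC9A01 round panels, and `Target`, `isVisible`, and `isHit` are hypothetical names.

```cpp
// 240x240 is the usual resolution for GC9A01 round panels (assumed here).
constexpr int kDisplaySize = 240;
constexpr int kCenter = kDisplaySize / 2;

// Hypothetical target description: a circle in display pixel coordinates.
struct Target {
    int x;
    int y;
    int radius;
};

// True when the pixel lies inside the visible circular area of the panel.
constexpr bool isVisible(int x, int y) {
    return (x - kCenter) * (x - kCenter) + (y - kCenter) * (y - kCenter)
           <= kCenter * kCenter;
}

// True when the crosshair (assumed to be the display centre) is on the target.
// Comparing squared distances avoids a floating-point square root.
constexpr bool isHit(const Target& t) {
    return (t.x - kCenter) * (t.x - kCenter) + (t.y - kCenter) * (t.y - kCenter)
           <= t.radius * t.radius;
}
```

For example, a target of radius 10 centered at (130, 120) sits exactly 10 pixels from the crosshair and counts as a hit, while the same target at (131, 120) is a miss. The `isVisible` check is useful when spawning targets, since the corners of the square frame buffer are never shown on a round panel.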

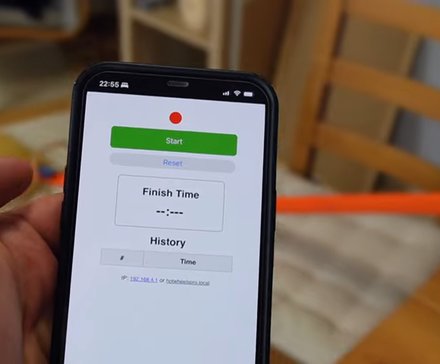

The compact display provides real-time targeting feedback during gameplay

For the maker community, this project highlights the ESP32's capabilities beyond simple IoT tasks. The dual-core processor handles both computer vision processing and display rendering simultaneously, showing how affordable hardware ($5-$10 for ESP32 boards) can power complex interactive systems. HJWWalters documented the entire build process on their personal blog, including STL files for the 3D-printed enclosure, wiring diagrams, and optimized code for target detection.

Notably, the project avoids proprietary ecosystems. Everything runs locally on the ESP32 without cloud dependencies, addressing privacy concerns about in-home camera use. The open-source approach also lets makers modify targeting algorithms, adjust difficulty parameters, or adapt the hardware for different controllers. This stands in contrast to commercial AR systems that often lock users into specific platforms.

Future iterations could use the ESP32's Bluetooth capabilities for multiplayer competitions or add haptic feedback via vibration motors. As HJWWalters continues refining the project, it demonstrates how microcontroller platforms are democratizing augmented reality development. For under $50 in components, makers can now build room-scale interactive experiences that previously required expensive headsets or specialized hardware.
