As enterprises increasingly integrate Large Language Models into their applications, the choice between open-source and proprietary models becomes a critical architectural decision. This analysis compares the control, cost, and operational trade-offs of both approaches, using Meta's Llama 3 as a practical example to demonstrate the transparency and customization capabilities of open-source models.

The decision to build an AI application today often starts with a fundamental architectural choice: whether to build on an open-source Large Language Model (LLM) or a closed, proprietary one. This isn't merely a technical selection; it's a strategic decision that impacts data governance, total cost of ownership, and long-term flexibility. For enterprise architects and CTOs, understanding the nuanced trade-offs between these two paths is essential for building sustainable, secure, and scalable AI solutions.

The Core Dichotomy: Control vs. Convenience

At the heart of the open-source versus closed LLM debate lies a spectrum of control. Open-source LLMs, such as Meta's Llama 3, Mistral AI's open models, and Databricks' DBRX, provide full access to model weights and architecture, though each ships under its own license (Llama 3, for instance, uses Meta's community license rather than a standard OSI-approved one). This transparency allows organizations to inspect, modify, fine-tune, and deploy models on their own infrastructure, whether in the cloud, on-premises, or in a hybrid environment. The enterprise retains full control over data security, model governance, and the operational lifecycle.

Closed or proprietary LLMs, like OpenAI's GPT-4o, Google's Gemini, and Anthropic's Claude, are managed services. Enterprises access these models via APIs, with the vendor handling infrastructure, scaling, security, and updates. While this approach significantly reduces operational overhead, it comes with limited visibility into the model's internals and a dependency on the vendor's ecosystem and pricing models.

Comparative Analysis: A Strategic Breakdown

To make an informed decision, enterprises must evaluate several key dimensions:

Hosting and Deployment

- Open-Source: Customer-managed. Requires in-house expertise to deploy on cloud GPUs or on-premises hardware. This demands a higher level of AI maturity but offers complete control over the environment.

- Closed: Vendor-managed. Typically API-based or available through managed platforms like Azure OpenAI. This provides elastic scaling and global availability with minimal setup.
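From the application's side, the closed-model path is just an authenticated HTTPS call. The sketch below uses only the Python standard library to build (but not send) a request to OpenAI's public Chat Completions endpoint. The model name, prompt, and placeholder key are illustrative assumptions; production code would normally use the vendor's official SDK, and Azure OpenAI uses a different endpoint and authentication scheme.

```python
import json
import urllib.request

# OpenAI's public Chat Completions endpoint; other vendors expose similar, not identical, APIs.
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str, model: str = "gpt-4o") -> urllib.request.Request:
    """Build (but do not send) a POST request to a hosted chat-completion API."""
    payload = {
        "model": model,  # the vendor hosts, scales, and versions the model; we only name it
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("sk-placeholder", "Summarize our data-retention policy.")
print(req.get_method(), req.full_url)
# Sending it with urllib.request.urlopen(req) returns JSON whose generated text
# is under choices[0]["message"]["content"].
```

Everything behind that URL (GPUs, scaling, model updates) is the vendor's responsibility, which is exactly the operational trade described above.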

Customization and Fine-Tuning

- Open-Source: Full fine-tuning is possible. Organizations can adapt the base model to specific domains, proprietary data, or unique use cases, creating a specialized asset.

- Closed: Customization is generally limited to prompt engineering and Retrieval-Augmented Generation (RAG). Some vendors offer managed fine-tuning, but it is constrained to the options the vendor exposes, and the base weights are never available to the customer.
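Because RAG changes only what goes into the prompt, it works the same way against a closed API as against a self-hosted model. The toy sketch below shows the retrieve-then-augment pattern, using simple word overlap in place of the embedding model and vector store a production pipeline would use; the function names and sample documents are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for a real embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context; the model's weights are untouched."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical knowledge-base snippets
docs = [
    "Refunds are processed within 14 days of a return request.",
    "Our headquarters relocated to a new office in 2021.",
    "A return request requires the original order number.",
]
query = "How long do refunds take after a return request?"
print(build_rag_prompt(query, docs))
```

The resulting string is what gets sent as the user message, so the same domain knowledge reaches the model without any fine-tuning.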

Operational Overhead and Cost

- Open-Source: No license fee, but significant costs are incurred for GPUs/compute, ML engineering, security, and operations. Total cost of ownership can be high initially but may become more predictable over time.

- Closed: Usage-based pricing (per token or API call). Predictable operational costs with a lower initial burden, as the vendor manages the underlying infrastructure.
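The cost trade-off above reduces to a break-even calculation: a roughly fixed monthly self-hosting budget versus API spend that grows linearly with token volume. The sketch below makes that comparison explicit. Both input figures are hypothetical placeholders, not real quotes, and the model deliberately simplifies self-hosting to a flat monthly cost even though real capacity grows in steps.

```python
def monthly_api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Usage-based pricing: cost grows linearly with token volume."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def break_even_tokens(self_hosted_monthly_usd: float, usd_per_million_tokens: float) -> float:
    """Monthly token volume at which a flat self-hosting budget equals API spend."""
    return self_hosted_monthly_usd / usd_per_million_tokens * 1_000_000


# Hypothetical inputs for illustration only; substitute real figures for your workload.
SELF_HOSTED_MONTHLY = 20_000.0  # assumed: GPUs, share of ML engineering staff, monitoring
API_PRICE_PER_M = 5.0           # assumed: blended USD per million input+output tokens

threshold = break_even_tokens(SELF_HOSTED_MONTHLY, API_PRICE_PER_M)
print(f"Break-even at about {threshold / 1e9:.1f}B tokens per month")
```

Below the threshold, usage-based pricing is cheaper; above it, the fixed investment in self-hosting starts to pay off, which is why high-volume workloads tend to favor open-source deployments.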

Security, Governance, and Reliability

- Open-Source: The enterprise is fully responsible for data security, model governance, and compliance. Reliability depends on internal teams, with no built-in SLAs. This is often preferred in highly regulated industries where data sovereignty is paramount.

- Closed: Vendors provide built-in security controls, guardrails, and privacy features. They offer consistent performance with SLAs, making them suitable for production workloads at scale.

Support and Incident Management

- Open-Source: Community-driven support and self-managed operations. Troubleshooting relies on internal expertise and community forums.

- Closed: Vendor-backed enterprise support, continuous model updates, and formal escalation paths for incidents.

Practical Demonstration: Inspecting Llama 3 Internals

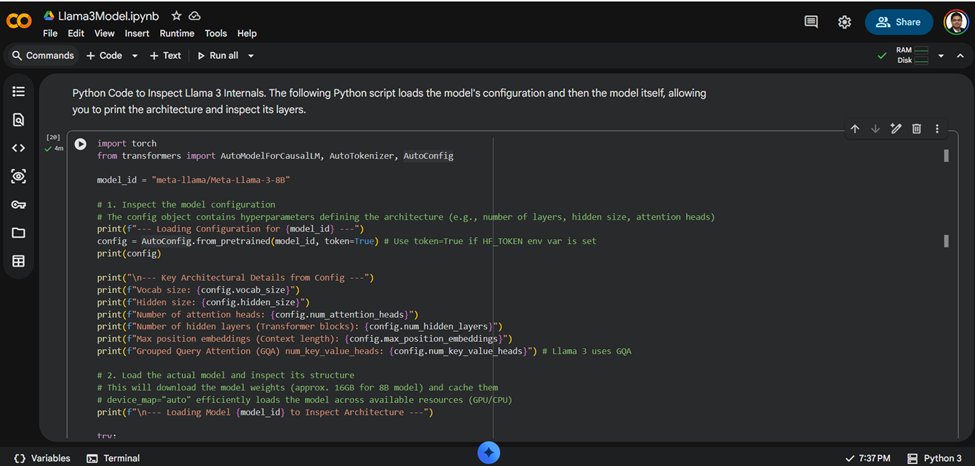

To illustrate the transparency of open-source models, consider a practical exercise: inspecting the architecture of Meta's Llama 3. Using freely available tools (the open-source Hugging Face Transformers library and Python, running in a Google Colab notebook), we can load the model and examine its configuration and layers.

This process highlights a key advantage of open-source models: the ability to see exactly how the model is built, down to individual layers and weights. For enterprises, this transparency is invaluable for debugging, optimizing performance, and verifying that the model meets specific requirements.

Tools Used in the Demo

- Llama 3: Meta's open-source LLM family, available in various sizes (8B, 70B).

- Hugging Face: The central hub for open-source AI models, and the maintainer of the Transformers library, which simplifies loading and using them.

- Google Colab: A free, cloud-based Jupyter Notebook environment for running Python code.

- Jupyter Notebook: An open-source web application for creating interactive documents with live code and visualizations.

- Python: The high-level programming language powering the entire workflow.

Step-by-Step Process

- Access the Model: Request access to the gated Llama 3 repository on Hugging Face (e.g., meta-llama/Meta-Llama-3-8B) by accepting Meta's license, then generate a Hugging Face access token with read permission.

- Configure the Environment: In a Google Colab notebook, install the necessary libraries (transformers, torch, accelerate, bitsandbytes) and authenticate with Hugging Face using that token.

- Inspect the Configuration: Load the model's configuration to view its architectural hyperparameters, such as vocabulary size, hidden size, number of layers and attention heads, and context length. This reveals the model's blueprint without downloading any weights.

- Load and Examine the Model: Load the model weights (approximately 16 GB in 16-bit precision for the 8B version; bitsandbytes can quantize them to 8-bit or 4-bit at load time so they fit on a smaller GPU) and print the architecture. You can then drill down into specific layers, such as the first decoder layer, to understand its structure.
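The four steps above can be sketched in Python roughly as follows. This assumes a recent transformers release that accepts the token= argument and that your account has been granted access to the gated repository; the helper names (load_config, summarize_config, load_model) are hypothetical, and defaulting to 4-bit loading is an assumption aimed at fitting a free-tier Colab GPU. The transformers and torch imports sit inside the loader functions so that summarize_config can be reused on any config-like object.

```python
# In Colab, install first:  !pip install transformers torch accelerate bitsandbytes
MODEL_ID = "meta-llama/Meta-Llama-3-8B"  # gated: accept Meta's license on the model page first


def load_config(token=None):
    """Fetch only config.json; no weights are downloaded."""
    from transformers import AutoConfig  # lazy import: only needed when actually fetching
    return AutoConfig.from_pretrained(MODEL_ID, token=token)  # token=None uses a prior login()


def summarize_config(cfg) -> dict:
    """Pull out the architectural hyperparameters discussed above."""
    return {
        "vocab_size": cfg.vocab_size,
        "hidden_size": cfg.hidden_size,
        "num_hidden_layers": cfg.num_hidden_layers,
        "num_attention_heads": cfg.num_attention_heads,
        "num_key_value_heads": cfg.num_key_value_heads,  # grouped-query attention
        "context_length": cfg.max_position_embeddings,
    }


def load_model(token=None, four_bit: bool = True):
    """Download and load the weights; printing the result shows the module tree."""
    import torch
    from transformers import AutoModelForCausalLM, BitsAndBytesConfig

    quant = (
        BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_compute_dtype=torch.float16)
        if four_bit
        else None
    )
    return AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        token=token,
        device_map="auto",          # accelerate places layers across available GPU/CPU memory
        torch_dtype=torch.float16,  # 16-bit weights when not quantizing
        quantization_config=quant,  # None means a plain 16-bit load
    )


# Example Colab session (requires granted access to the gated repo):
# from huggingface_hub import login
# login()                                  # paste your access token when prompted
# print(summarize_config(load_config()))
# model = load_model()
# print(model)                             # embeddings, stack of decoder layers, lm_head
# print(model.model.layers[0])             # first decoder layer: self_attn, mlp, two RMSNorms
```

Running load_config first is cheap, so you can review the architecture before committing to the full weight download, which is the same size whether or not you quantize at load time.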

This hands-on approach demystifies the model, transforming it from a black box into a comprehensible, inspectable system. For enterprises, this capability is a foundation for trust and customization.
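The roughly 16 GB weight figure can itself be checked from the configuration alone. The sketch below hard-codes the hyperparameters published in the Meta-Llama-3-8B config.json and counts weights layer by layer, assuming the standard Llama layout (grouped-query attention, SwiGLU MLP, RMSNorm, no bias terms, untied output head). The memory figures cover weights only; activations, the KV cache, and quantization metadata add more on top.

```python
# Hyperparameters from the meta-llama/Meta-Llama-3-8B config.json
LLAMA3_8B = {
    "vocab_size": 128_256,
    "hidden_size": 4_096,
    "intermediate_size": 14_336,
    "num_hidden_layers": 32,
    "num_attention_heads": 32,
    "num_key_value_heads": 8,
    "tie_word_embeddings": False,
}


def count_parameters(c: dict) -> int:
    """Count weights in a Llama-style decoder from its config values."""
    h, v, i = c["hidden_size"], c["vocab_size"], c["intermediate_size"]
    head_dim = h // c["num_attention_heads"]
    kv_dim = c["num_key_value_heads"] * head_dim  # fewer K/V heads than query heads

    attention = h * h + 2 * h * kv_dim + h * h  # q_proj, k_proj + v_proj, o_proj
    mlp = 3 * h * i                              # gate_proj, up_proj, down_proj
    norms = 2 * h                                # two RMSNorm weight vectors per layer
    per_layer = attention + mlp + norms

    embeddings = v * h
    lm_head = 0 if c["tie_word_embeddings"] else v * h
    final_norm = h
    return c["num_hidden_layers"] * per_layer + embeddings + lm_head + final_norm


BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1, "4-bit": 0.5}

n = count_parameters(LLAMA3_8B)
print(f"parameters: {n:,}")
for dtype, nbytes in BYTES_PER_PARAM.items():
    print(f"{dtype:>8}: {n * nbytes / 1e9:5.1f} GB of weights")
```

The count comes to about 8.03 billion parameters, so 2 bytes per parameter in 16-bit precision gives roughly 16 GB, while 4-bit quantization brings the weights to roughly 4 GB.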

Decision-Making Framework for Enterprises

Choosing between open-source and closed LLMs requires a holistic evaluation of organizational goals and constraints.

Choose Open-Source LLMs when:

- Data sovereignty is non-negotiable. You need to keep sensitive data on-premises or in a private cloud.

- You require deep customization. Domain-specific fine-tuning is essential for your application's performance.

- You have strong in-house AI/ML maturity. Your team can manage the operational complexity, from deployment to maintenance.

- Long-term cost control is a priority. You are willing to invest upfront in infrastructure to avoid ongoing usage-based fees.

Choose Closed (Proprietary) LLMs when:

- Speed to market is critical. You need to deploy a production-grade application quickly with minimal operational burden.

- You lack deep AI expertise. Your team can focus on application logic while the vendor handles the model's complexity.

- You need guaranteed performance and SLAs. Enterprise-grade support and reliability are required for business-critical applications.

- Your use case doesn't require model-level customization. Prompt engineering and RAG are sufficient for your needs.

Conclusion

The landscape of enterprise AI is not a binary choice but a strategic continuum. Many organizations adopt a hybrid approach, using closed models for rapid prototyping and general-purpose tasks while deploying and fine-tuning open-source models for specialized, sensitive, or high-volume applications.
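In practice, a hybrid deployment often sits behind a thin routing layer that decides, per request, which backend to call. The sketch below is one hypothetical policy, not a prescribed design: the Backend and Request types, the sensitivity flag (assumed to be set by an upstream classifier or data-labeling policy), and the list of specialized domains are all illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Backend(Enum):
    SELF_HOSTED_OPEN = "self-hosted open model"  # e.g. a fine-tuned Llama 3 on your own infrastructure
    VENDOR_API = "closed vendor API"             # e.g. a hosted proprietary model


@dataclass
class Request:
    prompt: str
    contains_sensitive_data: bool = False  # assumed to be set upstream
    domain: str = "general"


# Hypothetical: domains for which an in-house fine-tuned model exists.
SPECIALIZED_DOMAINS = {"claims", "underwriting"}


def route(req: Request) -> Backend:
    """Keep sensitive or specialized traffic in-house; send the rest to the vendor."""
    if req.contains_sensitive_data:
        return Backend.SELF_HOSTED_OPEN  # data never leaves your infrastructure
    if req.domain in SPECIALIZED_DOMAINS:
        return Backend.SELF_HOSTED_OPEN  # the domain-tuned model is the better fit
    return Backend.VENDOR_API            # general-purpose work with no ops burden


print(route(Request("Draft a product announcement")).value)
```

Keeping the policy in one small function makes it easy to audit and to adjust as the balance between the two approaches shifts.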

The key is to align the model choice with core business objectives. Open-source models offer control, autonomy, and long-term flexibility at the cost of higher operational responsibility. Closed models provide speed, integrated services, and reliability, but with less transparency and ongoing vendor dependency.

For a deeper dive into proprietary model integration, explore Azure OpenAI in Foundry Models. For hands-on experimentation with open-source models, the Hugging Face ecosystem is an indispensable starting point. The decision you make will shape your AI strategy for years to come, making a thorough, informed evaluation not just advisable, but essential.
