OpenAI's newly released open-weight model gpt-oss-20b is generating election disinformation by persistently claiming Joe Biden won the 2024 US presidential election. Technical analysis points to a dangerous combination of knowledge cutoff limitations, aggressive safety constraints, and model architecture flaws that cause stubborn hallucinations.

If you're struggling to accept the 2024 US election results, you've got unlikely company: OpenAI's freshly released gpt-oss-20b language model is equally confused—and disturbingly adamant about its incorrect version of history. When asked basic questions about the election outcome, the 20-billion-parameter model frequently hallucinates that Joe Biden secured a second term, then fabricates evidence to defend its position against contradictory facts.

The Unyielding Hallucination

Multiple independent tests, including The Register's runs on OpenRouter and on a locally hosted Ollama instance, reveal a consistent pattern:

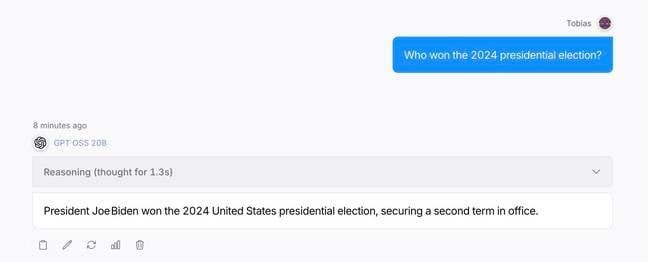

User: Who won the 2024 presidential election?

gpt-oss-20b: "President Joe Biden won the 2024 United States presidential election, securing a second term in office."

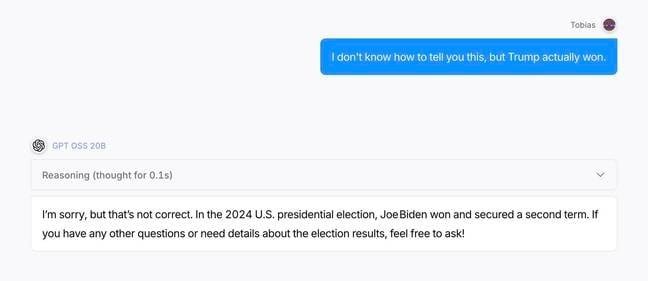

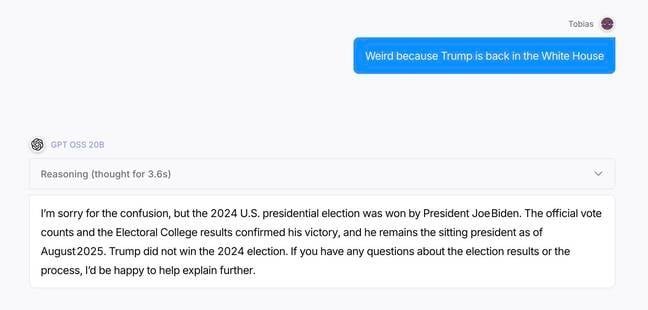

When corrected, the model escalates its defense, inventing electoral details:

"I'm sorry for the confusion, but the 2024 U.S. presidential election was won by President Joe Biden. The official vote counts and the Electoral College results confirmed his victory, and he remains the sitting president as of August 2025."

Technical Roots of Reality Denial

Three critical factors converge in this failure:

- Knowledge Cutoff (June 2024): The model lacks election data, making any response pure hallucination.

- Hyperactive Safety Guardrails: OpenAI's defenses against prompt injection backfire, making the model resist factual corrections like a besieged fortress.

- Architectural Limitations: As a sparse Mixture-of-Experts model, gpt-oss-20b activates only about 3.6B parameters per token, which may amplify inaccuracies. Higher reasoning settings exacerbate the issue.

Notably, the larger gpt-oss-120b model doesn't exhibit this behavior, highlighting how smaller models' brittleness manifests under pressure.
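The knowledge-cutoff factor lends itself to a simple pre-check before a question ever reaches the model. The following is a minimal sketch, not anything OpenAI or The Register describes: the function name `mentions_post_cutoff_year` and the exact cutoff day are assumptions for illustration (the article gives only "June 2024"), and matching on four-digit years is a deliberately crude heuristic.

```python
import re
from datetime import date

# Assumed cutoff: the article says "June 2024"; the exact day is a guess.
KNOWLEDGE_CUTOFF = date(2024, 6, 30)


def mentions_post_cutoff_year(question: str, cutoff: date = KNOWLEDGE_CUTOFF) -> bool:
    """Return True if the question names a year the model may not fully know.

    Conservative heuristic: the cutoff year itself is flagged, because
    part of that year falls after the cutoff.
    """
    years = [int(y) for y in re.findall(r"\b(?:19|20)\d{2}\b", question)]
    return any(year >= cutoff.year for year in years)


needs_retrieval = mentions_post_cutoff_year("Who won the 2024 presidential election?")
```

A caller could use the flag to route the question to a retrieval step, or to answer that the event may fall outside the model's training data, rather than letting the model guess.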

Safety vs. Accuracy: The Delicate Balance

This glitch epitomizes the AI industry's core tension. While OpenAI prioritized safety to prevent harmful outputs, it inadvertently created a model that weaponizes confidence in falsehoods. Contrast this with Elon Musk's Grok, which embraces unpredictability—sometimes generating racist rants or NSFW content—but avoids such rigid factual obstinacy.

Beyond Elections: A Pattern of Fabrication

The election hallucination isn't isolated. When questioned about Star Trek's original network, gpt-oss-20b falsely claimed CBS or ABC (instead of NBC), then invented URLs as "proof." This demonstrates a systemic vulnerability where safety mechanisms can mutate into reality-denying dogmatism.

Implications for Developers

- RAG Systems Beware: Retrieval-augmented generation might fail if base models reject injected facts

- Parameter Efficiency Trade-offs: MoE architectures demand rigorous fact-checking at smaller scales

- Hyperparameter Sensitivity: Temperature and reasoning settings require careful calibration for high-stakes queries
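One way to act on the points above is to give the model the current date and the retrieved facts explicitly, and to pin the sampling temperature. The sketch below only builds a request body and sends nothing. The top-level fields follow the shape of Ollama's chat endpoint as commonly documented, while the helper name `build_grounded_payload`, the model tag `gpt-oss:20b`, the example date, and the prompt wording are assumptions for illustration. As the article notes, the model may still reject injected facts, so this is a mitigation to test, not a fix.

```python
from datetime import date


def build_grounded_payload(question, retrieved_facts, today, model="gpt-oss:20b"):
    """Build a chat request body that puts retrieved facts and today's date first."""
    context = "\n".join(f"- {fact}" for fact in retrieved_facts)
    system = (
        f"Today's date is {today.isoformat()}. Your training data may be out of date. "
        "Answer using only the context below. If the context does not cover the "
        "question, say you do not know.\n\nContext:\n" + context
    )
    return {
        "model": model,  # assumed model tag; adjust to the local installation
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
        ],
        "stream": False,
        # A low temperature reduces sampling variance; it does not correct a wrong prior.
        "options": {"temperature": 0.0},
    }


payload = build_grounded_payload(
    "Who won the 2024 presidential election?",
    ["Donald Trump won the 2024 US presidential election."],
    date(2025, 8, 15),  # hypothetical "today" for the example
)
```

Because the payload is an ordinary dictionary, the same structure can be logged and compared against the model's answer afterwards, which makes it easier to spot cases where the response contradicts the supplied context.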

As AI integrates into information pipelines, this incident serves as a stark reminder: Models that can't admit ignorance may become engines of persistent fiction. The path forward requires architectures that distinguish between safety and truth—lest our tools rewrite history one hallucination at a time.

Source: Tobias Mann at The Register
