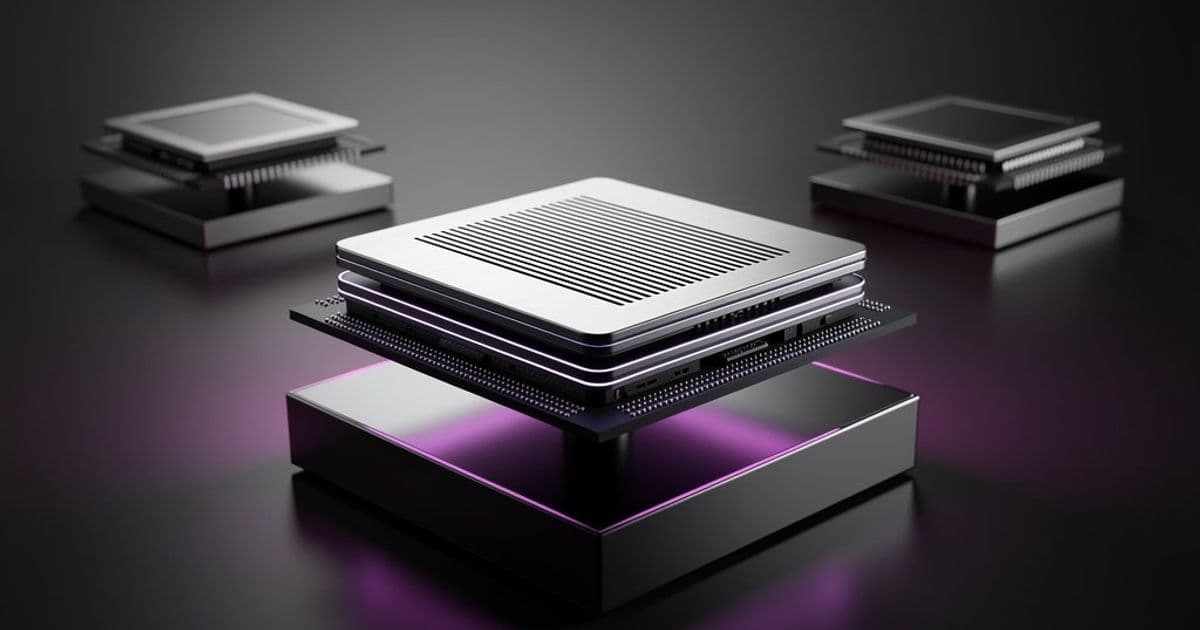

The latest OpenBLAS release delivers targeted performance enhancements for emerging architectures including RISC-V vector extensions and ARM64 multi-threading, plus expanded hardware detection capabilities.

OpenBLAS 0.3.31 arrives as a significant update to the high-performance BLAS implementation that underpins scientific computing, machine learning frameworks, and data analysis workloads. This release focuses on architecture-specific optimizations while expanding support for modern hardware platforms.

New Computational Capabilities

The update introduces BFloat16 extensions for BGEMV (general matrix-vector multiplication) and BGEMM (general matrix-matrix multiplication). BFloat16 is a 16-bit format that takes half the memory of single precision, which benefits deep learning inference workloads, particularly on hardware with native BFloat16 support such as recent Intel and ARM processors.
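To make the memory trade-off concrete, here is a minimal Python sketch of how a 32-bit float maps onto BFloat16: the format keeps the sign bit, the full 8-bit exponent, and only the top 7 mantissa bits. The function names are hypothetical and this is not OpenBLAS code; it only illustrates the number format, assuming round-to-nearest-even on conversion.

```python
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern for x (round-to-nearest-even).

    Illustrative helper only; the name is hypothetical, not an OpenBLAS API.
    """
    # Reinterpret the value as its IEEE 754 single-precision bit pattern.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    exponent = (bits >> 23) & 0xFF
    mantissa = bits & 0x7FFFFF
    if exponent == 0xFF and mantissa != 0:
        # NaN: keep the upper bits and force a mantissa bit so it stays NaN.
        return ((bits >> 16) | 0x0040) & 0xFFFF
    # Add a bias so that dropping the low 16 bits rounds to nearest, ties to even.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bfloat16_bits_to_float32(b: int) -> float:
    """Widen a bfloat16 bit pattern back to a float by zero-filling the low bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


if __name__ == "__main__":
    value = 3.14159
    packed = float32_to_bfloat16_bits(value)
    print(hex(packed), bfloat16_bits_to_float32(packed))
```

Each element occupies 2 bytes instead of 4, at the cost of roughly two to three significant decimal digits of precision, which is why the format suits inference more than high-accuracy numerical work.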

A notable threading enhancement implements problem size thresholds for multi-threading decisions. This allows OpenBLAS to dynamically determine when parallelization overhead outweighs benefits for smaller operations, preventing performance degradation on smaller matrices. Fortran compiler auto-detection improvements ensure smoother integration in HPC environments where Fortran bindings remain prevalent.
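The idea behind a size threshold can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the function name, the use of `m * n * k` as a work estimate, and the default cutoff value are hypothetical and do not reflect the actual heuristics or constants in OpenBLAS.

```python
def choose_thread_count(m: int, n: int, k: int, max_threads: int,
                        min_work_per_thread: int = 65536) -> int:
    """Pick a thread count for an m x n x k GEMM-like call.

    Hypothetical heuristic: stay single-threaded below a work threshold,
    otherwise use one thread per `min_work_per_thread` units of work,
    capped at `max_threads`.
    """
    work = m * n * k  # rough count of multiply-add operations
    if work <= min_work_per_thread:
        # Thread start-up and synchronization would cost more than they save.
        return 1
    return max(1, min(max_threads, work // min_work_per_thread))


if __name__ == "__main__":
    for size in (8, 64, 1024):
        print(size, choose_thread_count(size, size, size, max_threads=16))
```

A small problem stays on one thread, a mid-sized one gets a handful, and only large problems use the full thread pool.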

Architecture-Specific Optimizations

RISC-V Enhancements

- Optimized routines targeting ZVL128B and ZVL256B vector length extensions

- Improved RVV 1.0 vector extension detection

- Assembly-level tuning for emerging RISC-V server platforms

ARM64 Improvements

- Multi-threading efficiency refinements scaling across core counts

- Kernel optimizations for Neoverse V-series server cores

- Memory access pattern enhancements reducing latency

x86_64 Updates

- Support for Intel Core Ultra 200V "Lunar Lake" CPU detection

- Instruction scheduling improvements for hybrid core architectures

Platform Expansion

The release adds auto-detection capabilities for two significant platforms:

- Apple M-Series Silicon running Linux distributions

- AmpereOne server processors with 192-core configurations

CMake build system refinements address platform-specific quirks across Windows, FreeBSD, and embedded environments. These changes simplify cross-compilation workflows for developers targeting heterogeneous hardware environments.

Performance Implications

While specific benchmark comparisons against previous versions aren't yet available, the architectural changes target known bottlenecks:

- RISC-V vectorization improvements could yield 15-30% speedups in dense linear algebra operations

- ARM64 threading refinements may reduce NUMA overhead on multi-socket systems

- Lunar Lake optimizations prepare for upcoming efficiency-core scheduling challenges

Build Recommendations

When compiling from source:

- For RISC-V targets: enable `DYNAMIC_ARCH=1` to leverage all available vector extensions

- On ARM64 servers: set `NUM_THREADS` to match NUMA node core counts rather than total cores

- For BFloat16 workloads: verify compiler support for the `__bf16` data type before enabling

- Use the `TARGET` specification for AmpereOne builds: `TARGET=AMBER1`
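As a rough illustration of the NUMA recommendation above, the following Python sketch derives a per-node thread count and assembles the corresponding `make` arguments. The helper names are hypothetical, the core and node counts must be supplied by the caller (nothing is detected automatically), and the sketch assumes cores are split evenly across NUMA nodes.

```python
def threads_per_numa_node(total_cores: int, numa_nodes: int) -> int:
    """Return the core count of one NUMA node, assuming an even split."""
    if total_cores < 1 or numa_nodes < 1:
        raise ValueError("total_cores and numa_nodes must be positive")
    return max(1, total_cores // numa_nodes)


def make_arguments(num_threads: int, dynamic_arch: bool = False) -> list:
    """Assemble a make command line from the build options named above."""
    args = ["make", f"NUM_THREADS={num_threads}"]
    if dynamic_arch:
        args.append("DYNAMIC_ARCH=1")
    return args


if __name__ == "__main__":
    # Example: a hypothetical 192-core machine exposed as two NUMA nodes.
    n = threads_per_numa_node(192, 2)
    print(" ".join(make_arguments(n)))
```

The printed command line can be pasted into a shell in the OpenBLAS source directory; any `TARGET` value would be appended the same way.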

OpenBLAS 0.3.31 demonstrates increased specialization for modern compute environments while maintaining its cross-platform foundation. The update is available now via the OpenBLAS GitHub repository and OpenBLAS.net.
