A data engineer's encounter with 20 conflicting definitions of 'monthly active users' exposes how data lakes became unusable swamps. Matterbeam proposes returning to the original schema-on-read vision with immutability, schema inference, and replay to restore flexibility while enabling trustworthy analysis.

Reclaiming the Data Lake Vision: Beyond the Swamp

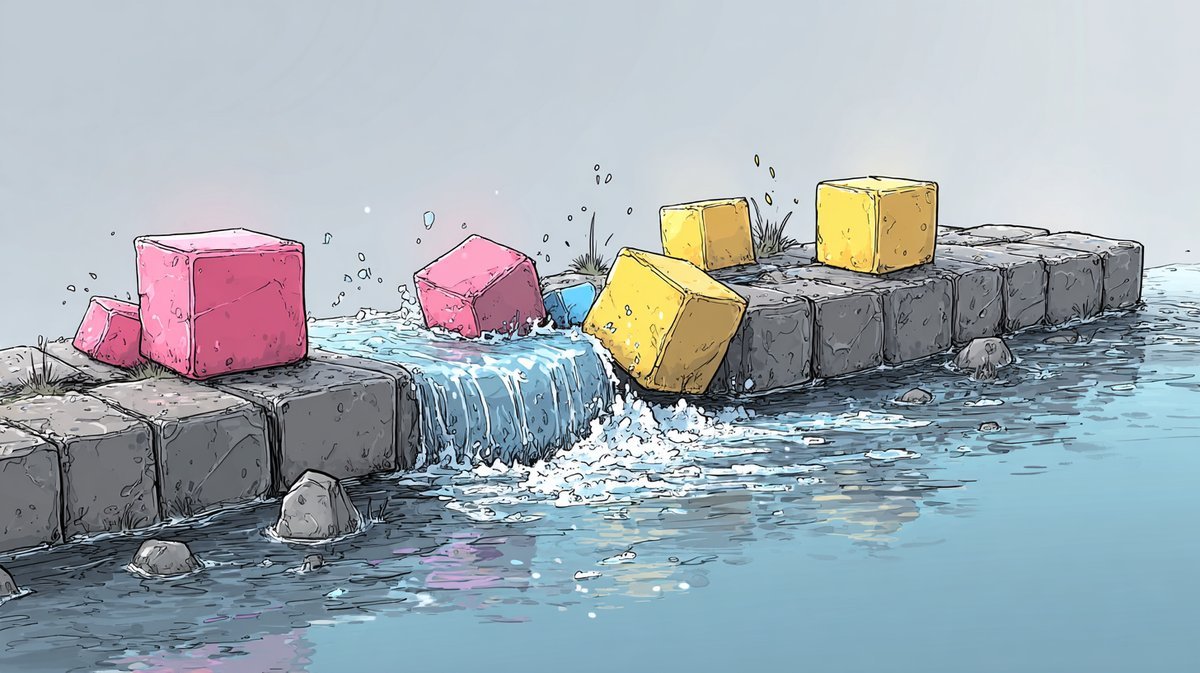

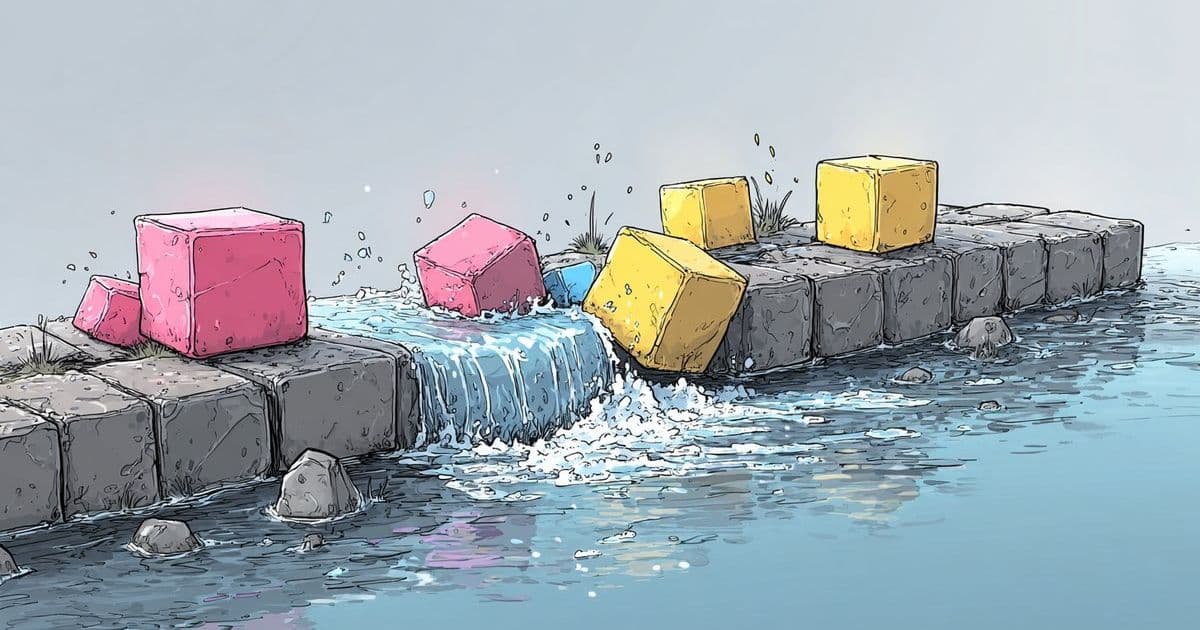

In 2014, after his startup was acquired, the author joined a company with a seemingly advanced data infrastructure. When attempting to analyze monthly active users (MAU), he uncovered chaos: at least 20 datasets with conflicting schemas, inconsistent dates, and irreproducible calculations. "Many input data sources were ephemeral, long gone by the time I wanted to understand and verify computations," he recalls. This experience exemplifies James Dixon's warning: without governance, data lakes become swamps—repositories where information exists but remains untrustworthy and unusable.

Dixon's original 2010 "data lake" concept promised radical flexibility: store raw data cheaply and apply a schema only when the data is read. But the industry's response to the swamp problem, forcing lakes into database-like structures ("lakehouses"), recreated the very problems lakes were meant to solve. By enforcing schemas at write time and centralizing everything into monolithic systems, organizations sacrificed flexibility while building "half-built databases that try to do everything, but nothing best of class."
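
The difference between the two approaches can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Matterbeam's code: `write_with_schema`, `write_raw`, `read_with_schema`, and the sample events are invented for the example, and JSON strings stand in for raw files. A schema-on-write store validates and projects each record at ingestion, so a field that appears later is discarded and a renamed field is rejected outright. A schema-on-read store keeps the raw input and lets each reader choose its own projection.

```python
import json

# Hypothetical fixed schema for a schema-on-write store: field -> required type.
WRITE_SCHEMA = {"user_id": str, "ts": str}


def write_with_schema(store: list, raw: str) -> bool:
    """Schema-on-write: validate and project a record before storing it.

    Records missing a required field are rejected; extra fields are discarded.
    """
    record = json.loads(raw)
    if not all(isinstance(record.get(k), t) for k, t in WRITE_SCHEMA.items()):
        return False
    store.append({k: record[k] for k in WRITE_SCHEMA})
    return True


def write_raw(store: list, raw: str) -> None:
    """Schema-on-read: keep the raw input exactly as it arrived."""
    store.append(raw)


def read_with_schema(store: list, fields: list) -> list:
    """Apply a reader-chosen projection at read time; absent fields become None."""
    rows = []
    for raw in store:
        record = json.loads(raw)
        rows.append({f: record.get(f) for f in fields})
    return rows


events = [
    '{"user_id": "u1", "ts": "2014-03-01"}',
    '{"user_id": "u2", "ts": "2014-03-02", "device": "ios"}',  # new field appears later
    '{"user": "u3", "ts": "2014-03-03"}',  # producer renamed a field
]

strict_store, raw_store = [], []
accepted = [write_with_schema(strict_store, e) for e in events]
for e in events:
    write_raw(raw_store, e)

print(accepted)  # [True, True, False]
# The raw store still holds "device" and the renamed record for any later reader.
print(read_with_schema(raw_store, ["user_id", "device"]))
# [{'user_id': 'u1', 'device': None}, {'user_id': 'u2', 'device': 'ios'}, {'user_id': None, 'device': None}]
```

The strict store has already lost information by the time anyone asks a new question; the raw store defers that decision to the reader.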

Matterbeam's architecture returns to the schema-on-read principle and addresses the operational pitfalls that turned lakes into swamps through three interconnected pillars:

- Intermediate Structure with Schema Inference: Instead of demanding predefined schemas, the system continuously infers structure from immutable raw data. This intermediate format, optimized for transformation rather than querying, preserves the fidelity of the original data while enabling materialization into purpose-built structures such as SQL tables, event streams, or ML datasets.

- Immutability as Foundation: Because Matterbeam never overwrites or compacts data, the historical record stays accurate. This makes it possible to track schema evolution reliably and guarantees that materialized views remain reproducible.

- Replay as First-Class Citizen: When business needs change, teams regenerate materializations from the raw log. "Replay lets you regenerate [views] because the source remains intact," so views can be refined iteratively without data loss.
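
The three pillars can be sketched together under a simple in-memory model. `ImmutableLog`, `infer_schema`, and `materialize` are hypothetical names, not Matterbeam's API, and the inferred "intermediate structure" is reduced here to a map from field name to the set of observed value types plus the log offset where the field first appeared. Immutability is enforced only by convention: the log exposes append and read, never update or delete.

```python
import json


class ImmutableLog:
    """Hypothetical append-only log: records can be added but never changed or removed."""

    def __init__(self):
        self._entries = []  # raw strings, indexed by offset

    def append(self, raw: str) -> int:
        """Store a raw record and return its offset."""
        self._entries.append(raw)
        return len(self._entries) - 1

    def read(self, start: int = 0):
        """Yield (offset, raw) pairs from `start` onward."""
        for offset in range(start, len(self._entries)):
            yield offset, self._entries[offset]


def infer_schema(log: ImmutableLog) -> dict:
    """Infer field -> {"types": observed type names, "first_seen": offset} from the raw log."""
    schema = {}
    for offset, raw in log.read():
        for field, value in json.loads(raw).items():
            entry = schema.setdefault(field, {"types": set(), "first_seen": offset})
            entry["types"].add(type(value).__name__)
    return schema


def materialize(log: ImmutableLog, transform) -> list:
    """Build a derived view by replaying the whole log through `transform`.

    `transform` returns a row, or None to drop the record. Each record is parsed
    fresh, so the transform can never alter what is stored in the log.
    """
    view = []
    for _, raw in log.read():
        row = transform(json.loads(raw))
        if row is not None:
            view.append(row)
    return view


log = ImmutableLog()
log.append('{"user_id": "u1", "amount": 10}')
log.append('{"user_id": "u2", "amount": "12.50"}')  # producer switched to strings
log.append('{"user_id": "u1", "amount": 7, "currency": "EUR"}')

schema = infer_schema(log)
# schema["amount"]["types"] == {"int", "str"}; schema["currency"]["first_seen"] == 2

# First materialization: keep only integer amounts.
v1 = materialize(log, lambda r: r if isinstance(r["amount"], int) else None)
# Refined rule, replayed from offset 0: normalize every amount to float.
v2 = materialize(log, lambda r: {**r, "amount": float(r["amount"])})
```

Because `materialize` always reads from the start of the log, changing the transform and rerunning it is the replay step: `v1` and `v2` are both reproducible from the same untouched log, and the inferred schema records that `amount` drifted from integers to strings.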

Together, these principles form a "materialize, learn, refine, replay" feedback loop. Each team can build the views it needs independently, while the system accommodates multiple concurrent "truths," whether for real-time analytics, compliance, or machine learning. By decoupling storage from consumption, Matterbeam revives the lake's core promise: store everything now, decide later, and then decide again. As workloads shift, organizations can adopt specialized databases without sacrificing historical context or locking into monolithic architectures. The swamp drains, leaving an adaptable reservoir where time becomes an asset rather than a constraint.
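
Applied to the opening MAU anecdote, the loop might look like the sketch below. The event tuples and the three activity definitions are hypothetical; the point is that each definition is a separate, named materialization over the same immutable events, so competing "truths" can coexist and each remains reproducible.

```python
from datetime import date

# Hypothetical raw events: (user_id, event_type, day). Never modified after ingestion.
EVENTS = (
    ("u1", "login", date(2014, 3, 2)),
    ("u2", "page_view", date(2014, 3, 5)),
    ("u2", "login", date(2014, 2, 27)),
    ("u3", "login", date(2014, 3, 30)),
    ("u4", "api_call", date(2014, 3, 14)),
)


def mau(events, year, month, counts_as_active):
    """Count distinct users with at least one qualifying event in the given calendar month."""
    return len({
        user
        for user, kind, day in events
        if day.year == year and day.month == month and counts_as_active(kind)
    })


# Two definitions coexist as separate named materializations over the same events.
DEFINITIONS = {
    "mau_any_event": lambda kind: True,
    "mau_login_only": lambda kind: kind == "login",
}
views = {name: mau(EVENTS, 2014, 3, rule) for name, rule in DEFINITIONS.items()}

# Refine: a later team excludes machine traffic and replays over the same events.
DEFINITIONS["mau_human_only"] = lambda kind: kind != "api_call"
views["mau_human_only"] = mau(EVENTS, 2014, 3, DEFINITIONS["mau_human_only"])

print(views)  # {'mau_any_event': 4, 'mau_login_only': 2, 'mau_human_only': 3}
```

Adding `mau_human_only` later does not change the earlier numbers, because neither the events nor the earlier definitions were overwritten; each figure can be traced back to a named rule and the same source data.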

Source: Based on "We Lost the Thread on the Data Lake" by Matterbeam.
