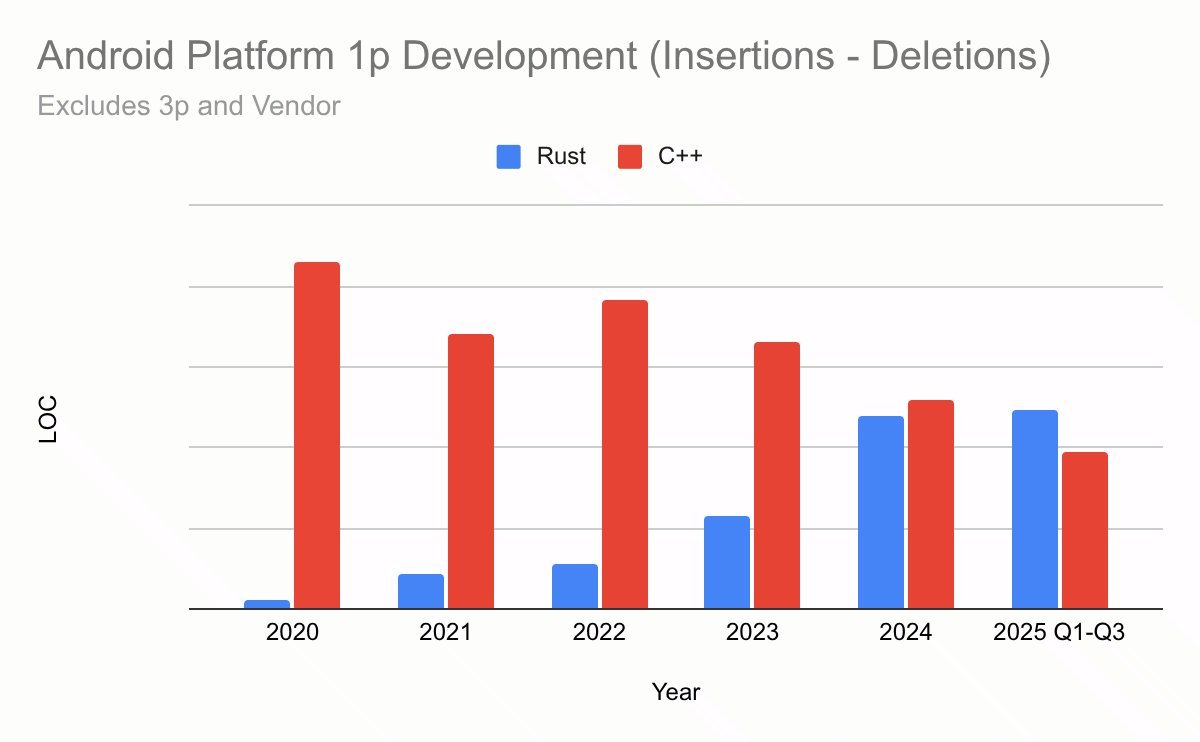

New Android data from 2025 confirms what many teams have suspected: Rust isn’t only shrinking the memory-unsafe attack surface; it’s quietly outpacing C++ in delivery speed and stability. By tying language choice to DORA metrics, kernel drivers, firmware, and real-world CVEs, Google is turning “secure by default” from a slogan into measurable engineering leverage.

Rust Didn’t Just Save Android’s Security—It Made Shipping Faster

When Google first announced Rust for Android, the headline story was obvious: memory safety. Less obvious—and more strategically important in 2025’s data—is that Rust is now the faster way to ship stable, production Android code.

In fresh numbers released by the Android team, memory safety vulnerabilities have dropped below 20% of all Android vulnerabilities for the first time. But the deeper story is operational: Rust changes roll back 4x less often than comparable C++ changes and spend roughly 25% less time stuck in code review. For a platform with billions of devices and a 90-day patch SLA, that isn’t a language trend; it’s an engineering transformation.

Source: Google Security Blog – “Rust in Android: Move Fast & Fix Things” (Nov 2025).

Source URL: https://security.googleblog.com/2025/11/rust-in-android-move-fast-fix-things.html

Security by Construction, With Actual Receipts

Android’s memory safety strategy has shifted from mitigation-heavy damage control to prevention embedded in the language layer. In 2025’s data set—spanning first-party and third-party Android platform code in C, C++, Java, Kotlin, and Rust—memory safety issues are now a minority class.

Why it matters for practitioners:

- Google estimates more than 1000x lower memory safety vulnerability density in Android Rust code vs. historical C/C++.

- That estimate is grounded in live code: ~5M lines of Rust in the platform and one would-be memory safety bug (caught pre-release) give ~0.2 vulnerabilities per million lines of code (MLOC), against a historical ~1000 per MLOC in C/C++.

- With fewer exploitable primitives, every other defense-in-depth control—from sandboxing to hardened allocators—gets strictly more effective.
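The density figures above are simple arithmetic, and it can help to see them spelled out. The sketch below only reuses the numbers quoted in this article (about 5M lines of Rust, one near-miss, about 1000 per MLOC for C/C++); the function name `density_per_mloc` is made up for illustration.

```rust
/// Vulnerabilities per million lines of code (MLOC).
fn density_per_mloc(vulns: f64, lines: f64) -> f64 {
    vulns / (lines / 1_000_000.0)
}

fn main() {
    // Figures quoted in the article: ~5M lines of Rust, one near-miss.
    let rust = density_per_mloc(1.0, 5_000_000.0);
    // Historical C/C++ density quoted in the article, in vulns per MLOC.
    let c_cpp = 1000.0;

    println!("Rust density: {rust} per MLOC");
    println!("Reduction factor: {}x", c_cpp / rust);
}
```

Taken at face value, the quoted figures work out to a factor nearer 5000 than 1000, so the 1000x headline reads as a conservative rounding. With a single data point on the Rust side, the exact ratio should be treated as an order-of-magnitude estimate.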

If you model security as an adversary’s cost function, Rust isn’t just shaving off a few bugs; it is collapsing an entire category of reliable exploitation paths.

The DORA Turn: When the Safe Path Becomes the Fast Path

The more controversial claim from Android’s data is not that Rust is safer, but that it’s operationally better for shipping software.

To make that case credibly, the Android team restricted analysis to:

- First-party Android platform engineers (often contributing in both C++ and Rust).

- Similar-sized changes (per Gerrit definitions).

- Systems languages only (C/C++ vs Rust), excluding Java/Kotlin.

Using the DORA framework (throughput + stability), several consistent patterns emerge:

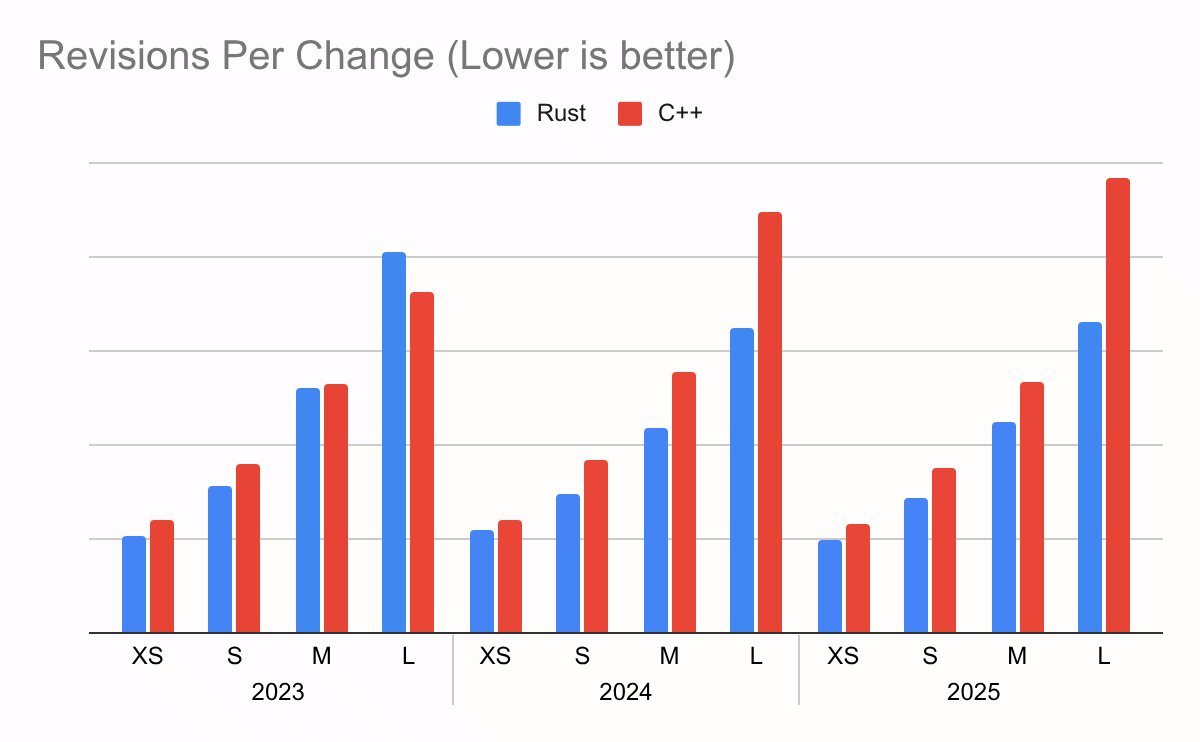

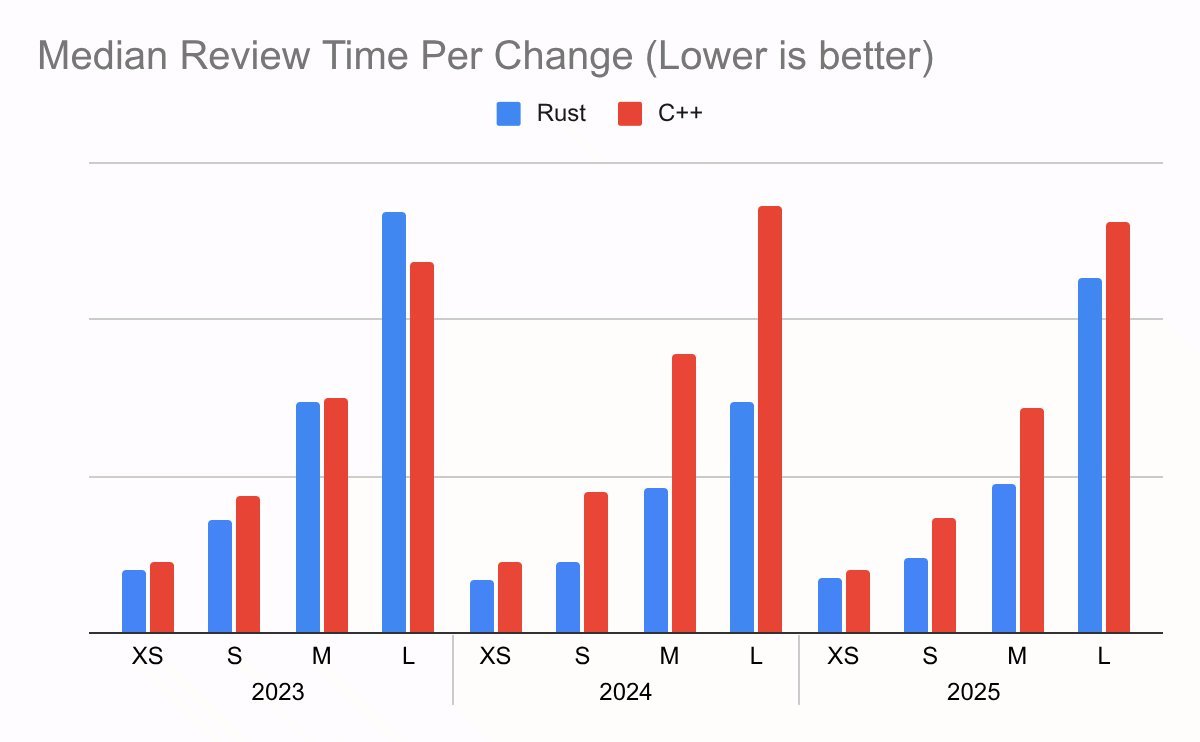

Fewer Revisions, Faster Reviews

Code review is where velocity quietly dies in large orgs. Rust changes:

- Require ~20% fewer revisions than similar C++ changes.

- Spend ~25% less time in review, with improvements strengthening as Rust experience grows inside the team.

The plausible drivers will sound familiar to anyone who has tried to review complex C++ safely:

- The compiler and type system absorb entire classes of arguments that would otherwise be litigated in review.

- Ownership and borrowing semantics make aliasing and lifetime correctness explicit instead of implicit folklore.

- A more opinionated language surface leads to more uniform patterns and simpler reasoning.
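As a small illustration of the second point, here is a sketch of how ownership and borrowing show up in function signatures. It is not Android code; `longest_line` and `consume` are hypothetical helpers. The commented-out lines are the ones the compiler would reject, which is exactly the kind of question a C++ reviewer would otherwise have to settle by reading the code.

```rust
/// Returns the longest line. The signature tells the reviewer that the
/// result borrows from `text` and cannot outlive it.
fn longest_line(text: &str) -> &str {
    text.lines().max_by_key(|l| l.len()).unwrap_or("")
}

/// Takes ownership: the caller can no longer touch `buf` afterwards.
fn consume(buf: Vec<u8>) -> usize {
    buf.len()
}

fn main() {
    let text = String::from("short\na much longer line\nmid");
    let longest = longest_line(&text);
    // drop(text); // would not compile: `text` is still borrowed by `longest`
    println!("{longest}");

    let buf = vec![1u8, 2, 3];
    let n = consume(buf);
    // println!("{}", buf.len()); // would not compile: `buf` was moved
    println!("{n}");
}
```

In the C++ equivalent, both commented-out lines would compile, and whether they are bugs would depend on conventions that live outside the type system.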

4x Fewer Rollbacks: Stability as a Force Multiplier

The sharpest signal is rollback rate for medium and large changes:

- Rust changes are rolled back at about one-quarter the rate of C++ changes.
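For teams that want to track the same signal on their own codebase, a rollback rate is easy to compute once changes are tagged by language and size. The sketch below is an assumption about how such a comparison might be set up, not Google’s methodology: the `Change` fields, the `Size` buckets, and the sample records in `main` are all invented for illustration.

```rust
#[derive(Clone, Copy, PartialEq, Debug)]
enum Lang {
    Cpp,
    Rust,
}

#[derive(Clone, Copy, PartialEq, Debug)]
enum Size {
    Small,
    Medium,
    Large,
}

/// One landed change. All fields are hypothetical, not Gerrit's schema.
struct Change {
    lang: Lang,
    size: Size,
    rolled_back: bool,
}

/// Fraction of medium and large changes in `lang` that were rolled back.
/// Returns None when no change matches, so callers never divide by zero.
fn rollback_rate(changes: &[Change], lang: Lang) -> Option<f64> {
    let mut total = 0u32;
    let mut rolled = 0u32;
    for c in changes
        .iter()
        .filter(|c| c.lang == lang && c.size != Size::Small)
    {
        total += 1;
        if c.rolled_back {
            rolled += 1;
        }
    }
    if total == 0 {
        None
    } else {
        Some(f64::from(rolled) / f64::from(total))
    }
}

fn main() {
    // Made-up records purely to exercise the function.
    let changes = [
        Change { lang: Lang::Cpp, size: Size::Medium, rolled_back: true },
        Change { lang: Lang::Cpp, size: Size::Large, rolled_back: false },
        Change { lang: Lang::Rust, size: Size::Medium, rolled_back: false },
        Change { lang: Lang::Rust, size: Size::Small, rolled_back: true }, // ignored: small
    ];
    for lang in [Lang::Cpp, Lang::Rust] {
        println!("{lang:?}: {:?}", rollback_rate(&changes, lang));
    }
}
```

Comparing like with like matters more than the arithmetic: the Android analysis restricted itself to similar-sized changes from the same pool of engineers, and a naive comparison without that filtering could easily mislead.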

For a platform like Android, rollbacks are not a mere embarrassment metric; they are organizationally expensive:

- Build respins and emergency patches.

- Cascading delays for dependent teams.

- Postmortems that introduce new gates, checks, and overhead.

A 4x reduction is not just “Rust is nice”—it rewires the cost structure of platform evolution. Safer-by-default code means fewer catastrophic reversals, which loops back into faster delivery.

In other words: Rust is winning on DORA, not just on CVEs.

Rust Everywhere: Kernel, Firmware, and Security-Critical Apps

Rather than treating Rust as a boutique experiment, Android is wiring it into every tier where memory-unsafe code used to be “inevitable.”

Kernel: Rust Lands in Production

- Android’s 6.12 Linux kernel ships with Rust support enabled.

- First production Rust driver is in; more are coming.

- Active collaboration with Arm and Collabora on a Rust-based kernel-mode GPU driver.

Bringing Rust into the kernel isn’t cosmetic. Kernel bugs are high-impact and historically memory-unsafe; shifting new surface area to Rust directly suppresses one of attackers’ favorite bug classes.

Firmware: Where Safety Has Been the Weakest

Firmware is the worst possible combination for security engineers: high privilege, tight constraints, limited coverage from mitigations, and historically a C/C++ monoculture.

Google reports:

- Rust has been deployed in firmware for years internally.

- Public Rust firmware training and sample code are available.

- Collaboration with Arm on Rusted Firmware-A, aimed squarely at hardening a notoriously soft layer.

This is where Rust’s upside is most under-discussed: the further down the stack you go, the more expensive and brittle band-aid mitigations become. Language-level guarantees are one of the few scalable levers.

First-Party Apps & Ecosystem Protocols

Rust is also now backing user-facing, security-relevant components:

- Nearby Presence: The Bluetooth-based secure discovery protocol implementation runs in Rust inside Google Play Services.

- MLS for RCS: The upcoming secure messaging stack in Google Messages is implemented in Rust.

- Chromium: Key parsers (PNG, JSON, web fonts) reimplemented in Rust, aligning with Chromium’s Rule of 2 and shrinking exposure to malicious web content.

For developers, these are strong ecosystem signals: Rust is no longer a niche infra choice; it’s integral to protocols, parsers, and paths historically rich in memory-unsafe bugs.
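To make the parser point concrete, here is a minimal sketch of why parsing untrusted bytes is a natural fit for safe Rust. The record format (a one-byte length followed by that many payload bytes) and the function `parse_record` are hypothetical and have nothing to do with Chromium’s actual parsers. The point is that a lying length field produces `None` rather than an out-of-bounds read.

```rust
/// Parses one length-prefixed record: a 1-byte length followed by that many
/// payload bytes. Returns the payload and the remaining input, or None if
/// the input is empty or truncated.
fn parse_record(input: &[u8]) -> Option<(&[u8], &[u8])> {
    let (&len, rest) = input.split_first()?;
    let len = usize::from(len);
    if rest.len() < len {
        return None; // declared length exceeds the available bytes
    }
    Some(rest.split_at(len))
}

fn main() {
    // Well-formed: length 2, payload [0xAA, 0xBB], one trailing byte.
    println!("{:?}", parse_record(&[2, 0xAA, 0xBB, 0xCC]));
    // Malformed: claims 5 payload bytes but only 2 follow.
    println!("{:?}", parse_record(&[5, 1, 2]));
}
```

Even if the explicit length check were forgotten, `split_at` would panic instead of reading past the buffer, so the failure mode is a crash rather than memory corruption.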

The Near-Miss That Proved the Stack Works

Just as the narrative risked turning into Rust triumphalism, Android’s security team hit a valuable tripwire: a would-be Rust-based memory safety vulnerability in CrabbyAVIF, tracked as CVE-2025-48530.

Crucially:

- The bug was caught before it hit a public release.

- Exploitation was blocked by Android’s Scudo hardened allocator, which uses guard pages to turn an overflow into a deterministic crash.

Two lessons matter for engineers designing their own stacks:

- Rust is not magic. Unsafe Rust (and FFI) can absolutely reintroduce memory hazards.

- Defense-in-depth still pays off. Hardened allocators, crash observability, and principled postmortems are mandatory, even with a memory-safe default.

Android’s follow-up actions are the more interesting part:

- Fixed crash reporting blind spots so Scudo guard page overflows show up clearly, reducing triage latency.

- Treated the near-miss with full CVE rigor, reinforcing partner pressure to adopt Scudo.

This is what a modern secure stack looks like: language guarantees + hardened runtime + enforced observability, all treated as first-class engineering constraints, not afterthoughts.

Unsafe Rust: The 4% That Keeps People Up at Night

Roughly 4% of Android’s Rust code lives in unsafe {} blocks. This is the surface area critics point to when arguing that Rust’s safety story is oversold.

Empirical data from Android suggests otherwise:

- Even if you pessimistically assume a line of unsafe Rust is as risky as a line of C/C++, the total effective risk remains far lower because unsafe is constrained, localized, and heavily scrutinized.

- unsafe does not disable most of Rust’s checks. Borrowing, lifetimes, and many invariants still apply around unsafe islands.

- Common practices (encapsulating unsafe in safe abstractions, documenting invariants, centralizing FFI) make it easier to audit than sprawling, implicit C++ footguns.
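The pessimistic assumption in the first bullet can be turned into a back-of-envelope calculation. This is an illustration built from figures quoted in this article (about 5M lines, about 4% unsafe, about 1000 vulnerabilities per MLOC for C/C++), not a calculation published by Google. It also makes the simplifying assumption that safe Rust contributes zero memory safety bugs, and `expected_vulns` is a made-up helper name.

```rust
/// Expected memory safety vulnerabilities if only the unsafe fraction of a
/// codebase carries C/C++-level risk and the safe remainder is counted as
/// zero (a simplifying assumption).
fn expected_vulns(total_lines: f64, unsafe_fraction: f64, density_per_mloc: f64) -> f64 {
    total_lines * unsafe_fraction / 1_000_000.0 * density_per_mloc
}

fn main() {
    let lines = 5_000_000.0; // ~5M lines of Rust, as quoted in the article
    let c_cpp_density = 1000.0; // historical C/C++ vulns per MLOC

    // Pessimistic case: every unsafe line is as risky as a C/C++ line.
    let rust_pessimistic = expected_vulns(lines, 0.04, c_cpp_density);
    // Baseline: the same amount of code written entirely in C/C++.
    let all_c_cpp = expected_vulns(lines, 1.0, c_cpp_density);

    println!("pessimistic Rust estimate: {rust_pessimistic}");
    println!("all-C/C++ baseline: {all_c_cpp}");
}
```

Under these assumptions the pessimistic model predicts roughly 200 vulnerabilities against a 5000 baseline, a 25x reduction. The one observed near-miss sits far below even that, which supports the claim that unsafe Rust is considerably less risky per line than C/C++.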

Google is doubling down on this discipline with:

- A new deep-dive unsafe Rust training module for Android engineers.

- Explicit guidance on soundness, undefined behavior, safety comments, and encapsulation patterns.

For teams considering Rust at scale, this is the actionable pattern: treat unsafe as a scarce, review-intensive resource, not a casual escape hatch.
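A minimal sketch of that pattern follows: the unsafe operation is wrapped in a type whose constructor enforces the invariant, and a safety comment records why the operation is sound. The type `NonEmptyBytes` is invented for illustration and deliberately contrived (plain indexing would do here); it is not taken from Android’s training material.

```rust
/// A byte buffer that is guaranteed to be non-empty.
pub struct NonEmptyBytes {
    // Invariant: `data` always holds at least one byte.
    // The field is private, so only this module can break the invariant.
    data: Vec<u8>,
}

impl NonEmptyBytes {
    /// The only constructor; it enforces the invariant.
    pub fn new(data: Vec<u8>) -> Option<Self> {
        if data.is_empty() {
            None
        } else {
            Some(Self { data })
        }
    }

    /// Returns the first byte without a bounds check.
    pub fn first(&self) -> u8 {
        // SAFETY: `new` rejects empty vectors and no method removes
        // elements, so index 0 is always in bounds.
        unsafe { *self.data.get_unchecked(0) }
    }
}

fn main() {
    match NonEmptyBytes::new(vec![7, 8, 9]) {
        Some(bytes) => println!("first byte: {}", bytes.first()),
        None => println!("empty input rejected"),
    }
}
```

A reviewer only has to audit this one module to trust `first`: callers cannot construct an empty `NonEmptyBytes`, so the unsafe block never leaks its precondition into the rest of the codebase.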

When Moving Fast Finally Aligns With Not Breaking Things

For decades, security-conscious engineering teams lived with a grim trade-off: either ship fast and rely on mitigations, or slow down under the weight of audits, sandboxing, and hardening. Android’s 2025 Rust data marks a tangible break from that equation.

What this means if you’re leading a platform, product, or infra stack:

- Language choice is now a core security and DevOps decision, not a stylistic one. The Android data gives you numbers to take into architecture reviews and budget meetings.

- Adopting Rust piecemeal—parsers, drivers, firmware, protocol stacks—is already yielding measurable wins in rollback rates and vulnerability density at global scale.

- Defense-in-depth doesn’t go away. Hardened allocators, sandboxing, fuzzing, and strong review cultures remain essential. Rust amplifies their value instead of trying to replace them.

The most consequential shift is philosophical: Android is no longer trying to “move fast and then clean up.” With Rust, hardening, and disciplined unsafe use, the team is demonstrating that you can move faster because you made it harder to break things in the first place.

For the rest of the industry, the question is no longer whether Rust is ready for serious systems work. The question is how long you’re willing to keep subsidizing preventable classes of bugs—with your engineers’ time, your users’ safety, and your incident budget—when a better set of defaults is now battle-tested at Android scale.
