Neural Concept achieved state-of-the-art accuracy on MIT's DrivAerNet++ aerodynamic benchmark by processing 39 TB of CFD data on Azure HPC infrastructure in just one week. The geometry-native platform outperformed all competing methods on surface pressure, wall shear stress, volumetric velocity, and drag coefficient predictions, and ran the benchmark as a production-ready workflow. Customers using the platform report 30% shorter design cycles and $20M in savings on a 100,000-unit vehicle program.

The automotive industry's pursuit of aerodynamic efficiency has reached a critical inflection point where traditional computational fluid dynamics workflows can no longer keep pace with development timelines. External aerodynamics directly impacts performance, energy efficiency, and development costs—small drag reductions translate into measurable fuel savings or extended EV range. As manufacturers accelerate development cycles, engineering teams increasingly require data-driven methods that can augment or replace traditional CFD workflows without sacrificing accuracy.

MIT's DrivAerNet++ dataset represents the largest open multimodal benchmark for automotive aerodynamics, offering 8,000 vehicle geometries across fastback, notchback, and estate-back variants with 39 TB of high-fidelity CFD outputs including surface pressure, wall shear stress, volumetric flow fields, and drag coefficients. Processing this scale of physics-based simulation data requires infrastructure that can handle both massive ingestion and computationally intensive model training while maintaining reproducibility for industrial deployment.

Neural Concept's Geometry-Native Approach

Neural Concept's platform uses a geometry-native Geometric Regressor designed to handle engineering data types directly, rather than converting them into generic formats. This approach preserves spatial relationships and physical constraints inherent in 3D designs. The platform executed the full benchmark on Azure HPC infrastructure under transparent, scalable conditions to demonstrate industrial readiness.

The results established new performance records across all measured physical quantities:

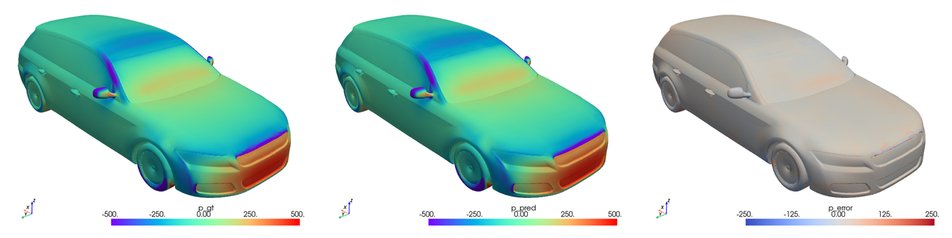

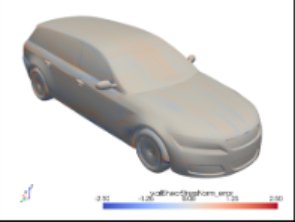

Surface Pressure Prediction: The Geometric Regressor achieved an MSE of 3.98×10⁻² and an MAE of 1.08×10⁻¹, ahead of the next-best method, GAOT (May 2025), which recorded an MSE of 4.94×10⁻² and an MAE of 1.10×10⁻¹. That is roughly 19% lower MSE, with a smaller margin on MAE. The 2024 baseline RegDGCNN showed much higher error, with an MSE of 8.29×10⁻².

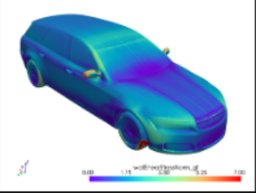

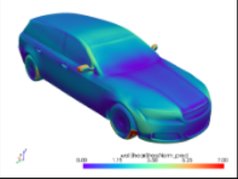

Wall Shear Stress: The model achieved an MSE of 7.80×10⁻² and an MAE of 1.44×10⁻¹, again leading all competitors (the next-best method, GAOT, recorded an MSE of 8.74×10⁻²). This accuracy is critical for detecting flow attachment and separation, which directly affect drag and vehicle stability.
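
To make the link between wall shear stress and separation concrete: in a simplified two-dimensional view, the near-wall flow separates where the streamwise component of wall shear stress falls to zero and reverses sign. The sketch below applies that textbook criterion to a predicted field. It is an illustration only, not Neural Concept's post-processing; the input layout (ordered `(x, tau_x)` pairs) and the helper names `reversed_flow_mask` and `separation_points` are hypothetical, and separation on a real 3D vehicle body needs more than a sign check.

```python
from typing import List, Tuple


def reversed_flow_mask(tau_x: List[float], tol: float = 0.0) -> List[bool]:
    """True where the streamwise wall shear stress is at or below `tol`,
    i.e. where the near-wall flow is stalled or moving upstream."""
    return [t <= tol for t in tau_x]


def separation_points(samples: List[Tuple[float, float]]) -> List[float]:
    """Estimate x-locations where tau_x changes from positive to non-positive.

    `samples` are (x, tau_x) pairs ordered along the streamwise direction;
    each crossing is located by linear interpolation between neighbours.
    Reattachment (non-positive back to positive) is deliberately ignored.
    """
    points: List[float] = []
    for (x0, t0), (x1, t1) in zip(samples, samples[1:]):
        if t0 > 0.0 and t1 <= 0.0:
            points.append(x0 + (x1 - x0) * t0 / (t0 - t1))
    return points
```

For example, `separation_points([(0.0, 2.0), (1.0, 1.0), (2.0, -1.0), (3.0, -0.5), (4.0, 0.5)])` returns `[1.5]`: the sign change between x = 1 and x = 2 is interpolated to x = 1.5, while the return to positive shear at x = 4 (reattachment) is not counted.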

Volumetric Velocity Field: Perhaps most impressively, the platform reduced prediction error by more than 50% compared to the previous best method (TripNet, March 2025), achieving MSE of 3.11 versus 6.71. This level of accuracy captures full flow structure for wake stability analysis, enabling engineers to understand complex 3D flow patterns without running full CFD simulations.
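
The relative improvements quoted in this section can be checked directly against the benchmark tables. The short sketch below computes the MSE reduction relative to the next-best method for each field quantity; within each pair both values share the same scale factor, so the factor cancels. The helper name `relative_reduction` is illustrative.

```python
def relative_reduction(new: float, baseline: float) -> float:
    """Fractional error reduction of `new` relative to `baseline` (0.25 means 25% lower)."""
    return 1.0 - new / baseline


# (quantity, Neural Concept MSE, next-best MSE), copied from the result tables.
comparisons = [
    ("surface pressure vs GAOT", 3.98, 4.94),
    ("wall shear stress vs GAOT", 7.80, 8.74),
    ("volumetric velocity vs TripNet", 3.11, 6.71),
]

for name, ours, next_best in comparisons:
    print(f"{name}: {relative_reduction(ours, next_best):.1%} lower MSE")
# surface pressure vs GAOT: 19.4% lower MSE
# wall shear stress vs GAOT: 10.8% lower MSE
# volumetric velocity vs TripNet: 53.7% lower MSE
```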

Drag Coefficient (Cd): The model achieved R² of 0.978 on the test set, with MSE of 0.8×10⁻⁵ and maximum absolute error of 1.13×10⁻². This accuracy is sufficient for early design screening, allowing engineers to rank design variants confidently and identify meaningful improvements without running full simulations for every change.
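
For reference, the four drag metrics above follow standard definitions. The sketch below computes them for lists of true and predicted Cd values using only the Python standard library. It assumes R² is measured against the mean of the test-set targets; the benchmark's own evaluation script may differ in details such as normalization, and `regression_metrics` is a hypothetical helper name.

```python
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """MSE, MAE, maximum absolute error and R² for scalar targets such as Cd."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equal length")
    errors = [p - t for t, p in zip(y_true, y_pred)]
    sse = sum(e * e for e in errors)              # sum of squared errors
    mean_t = sum(y_true) / n
    sst = sum((t - mean_t) ** 2 for t in y_true)  # total variance around the mean
    return {
        "mse": sse / n,
        "mae": sum(abs(e) for e in errors) / n,
        "max_ae": max(abs(e) for e in errors),
        # R² = 1 - SSE/SST; undefined when all targets are identical.
        "r2": 1.0 - sse / sst if sst > 0 else float("nan"),
    }


# Four toy Cd values, made up for illustration (not benchmark data).
metrics = regression_metrics([0.25, 0.27, 0.29, 0.31], [0.26, 0.27, 0.28, 0.31])
print(metrics)
```

On these toy values the MSE is about 5×10⁻⁵ and R² about 0.9. Because R² divides by the spread of the test-set targets, it is best read alongside the absolute error metrics.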

Azure HPC Infrastructure: Scaling from Raw Data to Production

The result was not purely algorithmic; it also required cloud infrastructure that could scale on demand. The entire pipeline runs natively on Microsoft Azure and scales within minutes to handle new industrial datasets containing thousands of geometries, without complex capacity planning.

Data Ingestion at Scale

The DrivAerNet++ dataset's 39 TB of raw simulation data required conversion into Neural Concept's native format. Using Azure's parallel computing capabilities, the team deployed a conversion task with 128 workers, each allocated 5 GB of RAM. This parallelization completed the entire conversion in approximately one hour and produced a compact 3 TB dataset optimized for the platform, roughly 13 times smaller than the raw data.
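
A back-of-the-envelope check puts these conversion figures in perspective. The sketch assumes decimal units (1 TB = 10¹² bytes), a wall-clock time of exactly one hour, and perfectly even load across the 128 workers; the post states none of these precisely.

```python
TB = 10**12  # decimal terabyte (assumption)
GB = 10**9
MB = 10**6

raw_bytes = 39 * TB          # DrivAerNet++ raw CFD outputs
converted_bytes = 3 * TB     # size after conversion to the platform format
workers = 128
ram_per_worker = 5 * GB
wall_clock_s = 3600          # "approximately one hour", taken as exactly 1 h

aggregate_rate = raw_bytes / wall_clock_s   # bytes/s across all workers
per_worker_rate = aggregate_rate / workers  # assumes perfectly even load
size_reduction = raw_bytes / converted_bytes
total_ram = workers * ram_per_worker

print(f"aggregate throughput: {aggregate_rate / GB:.1f} GB/s")   # 10.8 GB/s
print(f"per-worker rate:      {per_worker_rate / MB:.0f} MB/s")  # 85 MB/s
print(f"size reduction:       {size_reduction:.0f}x")            # 13x
print(f"aggregate RAM:        {total_ram / GB:.0f} GB")          # 640 GB
```

A per-worker rate in the tens of MB/s is modest, which is why spreading the job over many small workers, rather than a few large ones, finishes in about an hour.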

This conversion efficiency matters for industrial applications, where engineering teams cannot afford weeks of data preparation. Ingesting and formatting 39 TB of complex physics data in under two hours demonstrates that cloud-native workflows can handle real-world scale.

Pre-Processing Pipeline

Pre-processing required both large-scale parallelization and domain-specific best practices for external aerodynamics. The pipeline consists of two stages:

- Mesh Repair and Feature Pre-computation: Repairing car meshes and computing geometric features needed for training

- Volumetric Domain Filtering: Re-sampling points to follow spatial distributions optimized for deep learning training
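
The post does not say which spatial distribution the filtering step targets. A common choice in external aerodynamics is to concentrate samples near the body, where gradients are steepest. The sketch below assumes a precomputed distance-to-surface value for every volume point and a hypothetical exponential weighting controlled by `length_scale`; it shows weighted sampling without replacement via an exponential race (equivalent to the Efraimidis-Spirakis keyed method). It is a generic illustration, not Neural Concept's filter, and `near_surface_resample` is a made-up name.

```python
import heapq
import math
import random
from typing import List, Sequence


def near_surface_resample(distances: Sequence[float], n_samples: int,
                          length_scale: float = 1.0, seed: int = 0) -> List[int]:
    """Choose n_samples distinct point indices, favouring points near the body.

    Point i gets weight w_i = exp(-d_i / length_scale), where d_i is its
    distance to the vehicle surface. Sampling without replacement uses an
    exponential race: each point draws E_i ~ Exp(1), arrives at T_i = E_i / w_i,
    and the n_samples earliest arrivals win. Times are compared in log space,
    log T_i = log E_i + d_i / length_scale, so tiny weights never underflow.
    """
    if not 0 <= n_samples <= len(distances):
        raise ValueError("n_samples must be between 0 and len(distances)")
    rng = random.Random(seed)
    keyed = []
    for i, d in enumerate(distances):
        e = rng.expovariate(1.0)
        log_t = (math.log(e) if e > 0.0 else -math.inf) + d / length_scale
        keyed.append((log_t, i))
    return [i for _, i in heapq.nsmallest(n_samples, keyed)]


# Toy volume: 10,000 points spread evenly from 0 to 10 length units off the surface.
dists = [i * 0.001 for i in range(10_000)]
picked = near_surface_resample(dists, n_samples=1_000)
print(sum(dists[i] for i in picked) / len(picked))  # well below the uniform mean of ~5
```

Working in log space keeps the ranking exact even for points whose weight would round to zero, and a fixed `seed` makes the resampled dataset reproducible across runs.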

The first stage, being the most computationally intensive, was parallelized across 256 independent workers with 6 GB of RAM each. Peak memory usage reached approximately 1.5 TB, consistent with that aggregate allocation (256 × 6 GB ≈ 1.5 TB). Both stages completed in 1 to 3 hours on the full dataset.
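
The post does not describe how the 8,000 geometries were distributed across the 256 workers. One simple assumption is contiguous, balanced sharding, sketched below; the `shard` helper and the geometry IDs are hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def shard(items: Sequence[T], n_workers: int) -> List[List[T]]:
    """Split items into n_workers contiguous shards whose sizes differ by at most one."""
    if n_workers <= 0:
        raise ValueError("n_workers must be positive")
    base, extra = divmod(len(items), n_workers)
    shards: List[List[T]] = []
    start = 0
    for w in range(n_workers):
        size = base + (1 if w < extra else 0)  # first `extra` shards take one more
        shards.append(list(items[start:start + size]))
        start += size
    return shards


geometry_ids = [f"design_{i:04d}" for i in range(8_000)]  # hypothetical IDs
shards = shard(geometry_ids, 256)
print(len(shards), max(map(len, shards)), min(map(len, shards)))  # 256 32 31

requested_gb = 256 * 6  # workers x GB of RAM per worker
print(requested_gb)  # 1536, i.e. about 1.5 TB, in line with the reported peak
```

With 8,000 designs and 256 workers, 64 workers handle 32 designs and the rest handle 31, so no single worker becomes a straggler.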

Model Training

Training was performed on an Azure Standard_NC96ads_A100_v4 node with four A100 GPUs (80 GB memory each). The model trained for approximately 24 hours, about 96 GPU-hours in total, with the best model obtained after 16 hours. That is a modest compute budget given the dataset scale and model complexity.
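
The best model appeared after 16 of the roughly 24 training hours. A common way to capture this is to keep the checkpoint with the lowest validation loss rather than the last one. The post does not describe Neural Concept's selection rule, so treat the sketch below as an assumption; the `Checkpoint` class and the loss values are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class Checkpoint:
    hours: float     # training wall-clock time when the checkpoint was saved
    val_loss: float  # validation loss measured at that checkpoint


def best_checkpoint(history: Iterable[Tuple[float, float]]) -> Optional[Checkpoint]:
    """Return the checkpoint with the lowest validation loss, or None if empty."""
    best: Optional[Checkpoint] = None
    for hours, val_loss in history:
        if best is None or val_loss < best.val_loss:
            best = Checkpoint(hours, val_loss)
    return best


# Made-up validation curve for illustration; not the actual training log.
history = [(4, 0.30), (8, 0.21), (12, 0.17), (16, 0.15), (20, 0.16), (24, 0.155)]
best = best_checkpoint(history)
print(best)  # Checkpoint(hours=16, val_loss=0.15)

gpus = 4
print(gpus * 24, "GPU-hours for the full run;", gpus * best.hours, "to reach the best checkpoint")
# 96 GPU-hours for the full run; 64 to reach the best checkpoint
```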

The final model is compact enough for real-time predictions on a single 16 GB GPU, making it practical for industrial deployment. Efficient training combined with lightweight deployment keeps both development and operational costs in check.

From Benchmark Accuracy to Industrial Impact

Model accuracy alone doesn't guarantee industrial impact. Transformative gains emerge only when high-performing models are deployed into maintainable, repeatable workflows across organizations.

Customers using Neural Concept's platform have achieved:

- 30% shorter design cycles

- $20M in savings on a 100,000-unit vehicle program

These outcomes come from systematically changing how design work is done, enabling better and faster data-driven decisions.

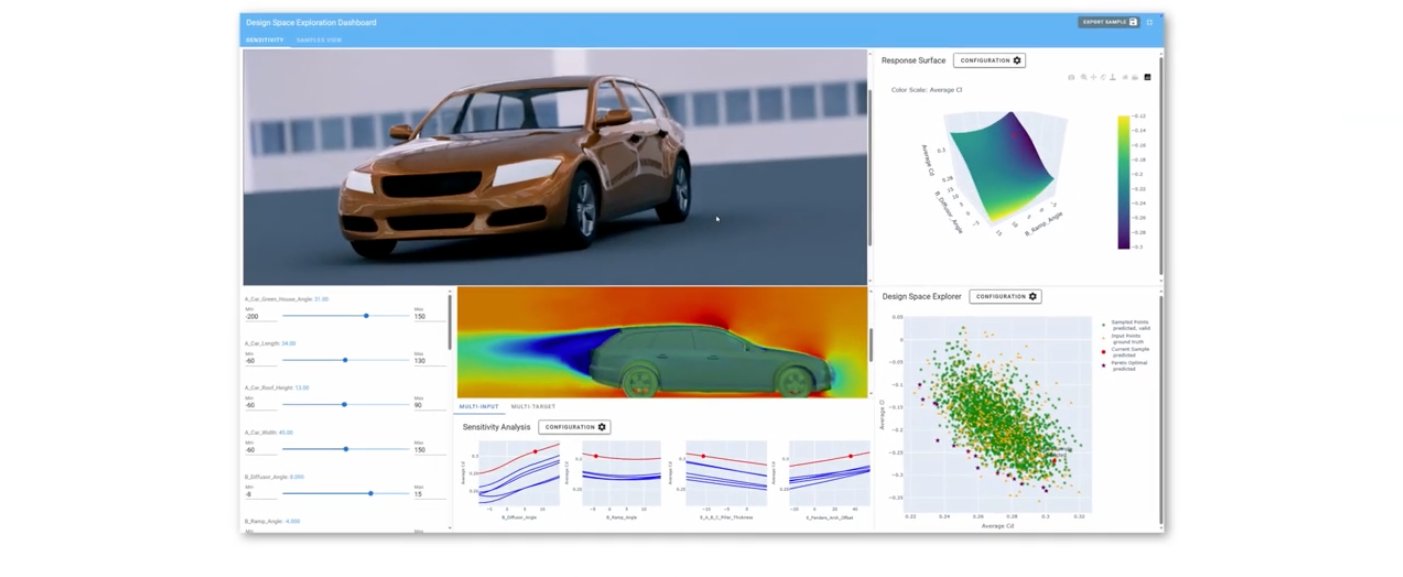

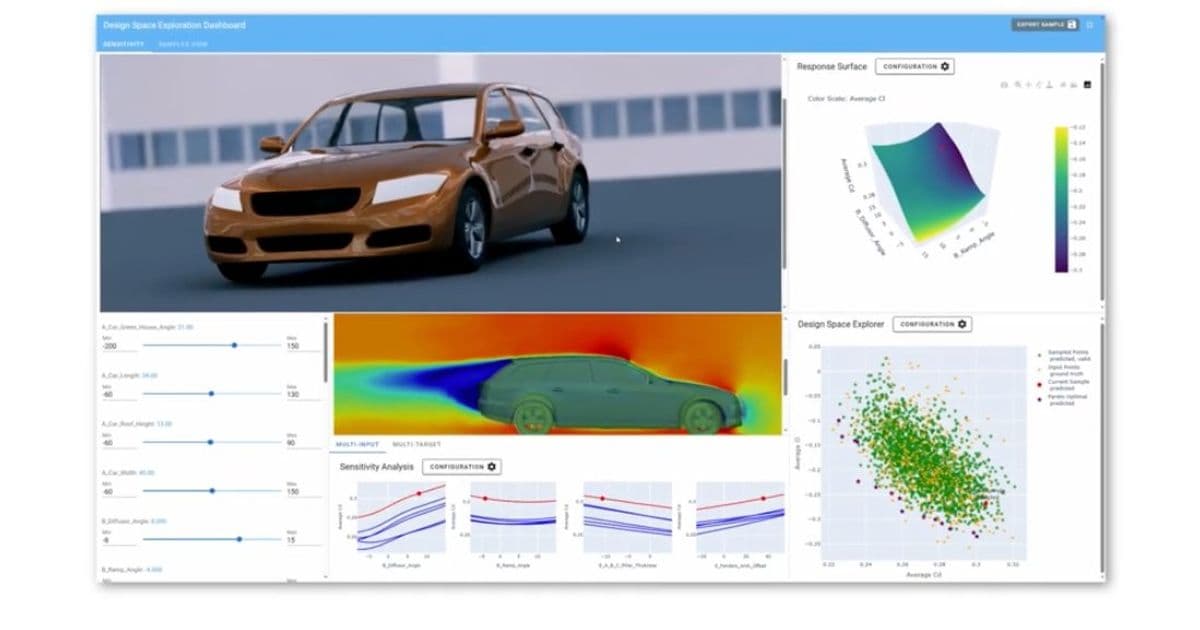

Design Lab: Real-Time Collaboration

The Design Lab interface sits at the core of this transformation. Within Neural Concept's ecosystem, validated geometry and physics models are deployed directly into this collaborative environment, where aerodynamicists and designers evaluate concepts in real time.

AI copilots provide:

- Instant performance feedback

- Geometry-aware improvement suggestions

- Live KPI updates

This effectively reconnects aerodynamic analysis with the pace of modern vehicle design, eliminating the traditional lag between simulation and design iteration.

Technical Deep Dive: Why This Architecture Works

The Geometric Regressor's success stems from its native handling of engineering data types. Traditional approaches often convert 3D geometry into voxels or point clouds, losing critical spatial relationships. Neural Concept's geometry-native approach preserves:

- Surface topology: Maintaining connectivity and curvature information

- Physical constraints: Respecting manufacturing and assembly requirements

- Multi-scale features: Capturing both fine details and overall shape characteristics
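
The post does not detail the Geometric Regressor's internals. As a generic illustration of what mesh connectivity adds, the sketch below derives vertex neighborhoods and open boundary edges from a triangle mesh. A raw point cloud carries neither; boundary edges (edges used by only one triangle) are also the kind of defect a mesh-repair step might look for. The function names are hypothetical, and this is not Neural Concept's code.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triangle = Tuple[int, int, int]
Edge = Tuple[int, int]


def _edges(face: Triangle) -> List[Edge]:
    """The three undirected edges of a triangle, stored as (low, high) vertex pairs."""
    a, b, c = face
    return [(min(u, v), max(u, v)) for u, v in ((a, b), (b, c), (c, a))]


def vertex_neighbors(faces: List[Triangle]) -> Dict[int, Set[int]]:
    """Vertex adjacency implied by mesh edges (absent from a bare point cloud)."""
    nbrs: Dict[int, Set[int]] = defaultdict(set)
    for face in faces:
        for u, v in _edges(face):
            nbrs[u].add(v)
            nbrs[v].add(u)
    return dict(nbrs)


def boundary_edges(faces: List[Triangle]) -> Set[Edge]:
    """Edges used by exactly one triangle: open borders or holes in the surface."""
    count: Dict[Edge, int] = defaultdict(int)
    for face in faces:
        for e in _edges(face):
            count[e] += 1
    return {e for e, n in count.items() if n == 1}


# A unit square split into two triangles sharing the diagonal 0-2.
square = [(0, 1, 2), (0, 2, 3)]
print(vertex_neighbors(square)[0])     # {1, 2, 3}
print(sorted(boundary_edges(square)))  # [(0, 1), (0, 3), (1, 2), (2, 3)]
```

On a watertight car body, `boundary_edges` should return an empty set; any edges it does return point to holes that a repair step would need to close.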

The architecture also leverages the full richness of DrivAerNet++'s multimodal data. Rather than training separate models for pressure, shear stress, velocity, and drag, the platform uses a unified approach that learns shared representations across physical quantities. This multi-task learning improves generalization and reduces training requirements.
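
The post says the platform learns shared representations across pressure, shear stress, velocity, and drag instead of training separate models, but it does not publish the loss. A common multi-task formulation is a weighted sum of per-quantity losses. The sketch below shows that general technique in plain Python with hypothetical quantity names, toy values, and made-up weights; it is not Neural Concept's training objective.

```python
from typing import Dict, Mapping, Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length sequences."""
    if len(pred) != len(target) or not target:
        raise ValueError("pred and target must be non-empty and equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def multitask_loss(preds: Mapping[str, Sequence[float]],
                   targets: Mapping[str, Sequence[float]],
                   weights: Mapping[str, float]) -> Dict[str, float]:
    """Per-quantity MSE plus their weighted sum under the key "total".

    In a training framework, minimising the total sends gradient signal from
    every physical quantity into the shared part of the model at once.
    """
    per_task = {name: mse(preds[name], targets[name]) for name in weights}
    per_task["total"] = sum(weights[name] * per_task[name] for name in weights)
    return per_task


# Hypothetical outputs of one shared model for a single design (toy numbers).
preds = {"pressure": [0.1, 0.2], "wall_shear": [0.5], "cd": [0.30]}
targets = {"pressure": [0.0, 0.2], "wall_shear": [0.4], "cd": [0.28]}
weights = {"pressure": 1.0, "wall_shear": 1.0, "cd": 10.0}  # assumed weights
losses = multitask_loss(preds, targets, weights)
print(round(losses["total"], 6))  # 0.019
```

The weights matter because the quantities sit on very different numeric scales; without balancing, one quantity can dominate the total and starve the others of gradient signal.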

Trade-offs and Considerations

While the results are impressive, several trade-offs deserve consideration:

Data Requirements: The approach requires substantial training data (8,000 designs in this case). For novel vehicle configurations outside the training distribution, accuracy may decrease, requiring additional training or fine-tuning.

Computational Cost: While inference is lightweight, training on 4×A100 GPUs for 24 hours represents significant compute cost. However, this is amortized across many design iterations and vehicle programs.
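
The amortization argument is simple arithmetic. The sketch below spreads the one-off training cost (4 GPUs × 24 hours ≈ 96 GPU-hours, per the training figures reported earlier) over a number of design evaluations. The price per GPU-hour and the per-evaluation inference cost are left as parameters because the post gives neither, and the function name is hypothetical.

```python
def amortized_cost_per_evaluation(train_gpu_hours: float,
                                  gpu_hour_price: float,
                                  n_evaluations: int,
                                  inference_cost_per_eval: float = 0.0) -> float:
    """One-off training cost spread over n evaluations, plus marginal inference cost."""
    if n_evaluations <= 0:
        raise ValueError("n_evaluations must be positive")
    return train_gpu_hours * gpu_hour_price / n_evaluations + inference_cost_per_eval


TRAIN_GPU_HOURS = 4 * 24  # four A100 GPUs for about 24 hours, as reported

# A price of 1.0 expresses cost in GPU-hours, so no real price is assumed.
for n in (100, 1_000, 10_000):
    print(n, amortized_cost_per_evaluation(TRAIN_GPU_HOURS, 1.0, n))
```

Going from 100 to 10,000 evaluations shrinks the training share from 0.96 to 0.0096 GPU-hours per evaluation, so at scale the marginal inference cost dominates.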

Physical Validation: The model predicts CFD outputs, not physical measurements. Validation against wind tunnel data or real-world measurements remains necessary for production deployment.

Interpretability: While the model provides accurate predictions, understanding why specific flow patterns emerge still requires domain expertise and potentially additional analysis tools.

Infrastructure Implications for Multi-Cloud Strategies

This benchmark demonstrates several principles relevant to broader cloud infrastructure strategies:

Dynamic Scaling: The ability to provision 256 workers for data processing and scale down to single-GPU inference demonstrates cloud elasticity that on-premises infrastructure cannot match economically.

Reproducibility: Azure's consistent infrastructure and Neural Concept's platform ensure results are reproducible across teams and time periods, critical for industrial engineering workflows.

Cost Optimization: The separation of training (one-time, high cost) from inference (ongoing, low cost) enables economic models where expensive training runs are shared across many users and projects.

Data Gravity: Processing 39 TB locally would require substantial storage and compute investment. Cloud infrastructure transforms this from a capital expenditure problem into an operational expense.

Looking Ahead: CES 2026 and Beyond

Neural Concept and Microsoft will showcase these capabilities at CES 2026, demonstrating how AI-native aerodynamic workflows reshape vehicle development—from real-time design exploration to enterprise-scale deployment. The Microsoft booth will feature DrivAerNet++ running on Azure HPC, allowing visitors to experience the platform's capabilities firsthand.

This benchmark represents more than a technical achievement; it validates a production-ready pathway from massive physics datasets to deployable AI models that deliver measurable business value. As automotive manufacturers face increasing pressure to accelerate development while improving efficiency, such infrastructure-native AI platforms may become essential competitive tools.

The convergence of geometry-native AI, scalable cloud infrastructure, and domain-specific workflows points toward a future where engineering intelligence becomes as accessible as traditional CAD tools—fundamentally changing how vehicles are designed, optimized, and brought to market.

Detailed Quantitative Results

1. Surface Field Predictions: Pressure and Wall Shear Stress

Surface Pressure

| Rank | Deep Learning Model | MSE (×10⁻², lower = better) | MAE (×10⁻¹, lower = better) |
|---|---|---|---|
| #1 | Neural Concept | 3.98 | 1.08 |
| #2 | GAOT (May 2025) | 4.94 | 1.10 |
| #3 | FIGConvNet (February 2025) | 4.99 | 1.22 |
| #4 | TripNet (March 2025) | 5.14 | 1.25 |
| #5 | RegDGCNN (June 2024) | 8.29 | 1.61 |

Wall Shear Stress

| Rank | Deep Learning Model | MSE (×10⁻², lower = better) | MAE (×10⁻¹, lower = better) |
|---|---|---|---|
| #1 | Neural Concept | 7.80 | 1.44 |
| #2 | GAOT (May 2025) | 8.74 | 1.57 |
| #3 | TripNet (March 2025) | 9.52 | 2.15 |
| #4 | FIGConvNet (February 2025) | 9.86 | 2.22 |
| #5 | RegDGCNN (June 2024) | 13.82 | 3.64 |

2. Volumetric Predictions: Velocity

| Rank | Deep Learning Model | MSE (lower = better) | MAE (×10⁻¹, lower = better) |
|---|---|---|---|
| #1 | Neural Concept | 3.11 | 9.22 |
| #2 | TripNet (March 2025) | 6.71 | 15.2 |

3. Scalar Predictions: Drag Coefficient

| Rank | Deep Learning Model | MSE (×10⁻⁵, lower = better) | MAE (×10⁻³, lower = better) | Max AE (×10⁻², lower = better) | R² (higher = better) |
|---|---|---|---|---|---|
| #1 | Neural Concept | 0.8 | 2.22 | 1.13 | 0.978 |
| #2 | TripNet | 9.1 | 7.19 | 7.70 | 0.957 |
| #3 | PointNet | 14.9 | 9.60 | 12.45 | 0.643 |
| #4 | RegDGCNN | 14.2 | 9.31 | 12.79 | 0.641 |
| #5 | GCNN | 17.1 | 10.43 | 15.03 | 0.596 |


Credits

Microsoft: Hugo Meiland (Principal Program Manager), Guy Bursell (Director Business Strategy, Manufacturing), Fernando Aznar Cornejo (Product Marketing Manager), Dr. Lukasz Miroslaw (Sr. Industry Advisor)

Neural Concept: Theophile Allard (CTO), Benoit Guillard (Senior ML Research Scientist), Alexander Gorgin (Product Marketing Engineer), Konstantinos Samaras-Tsakiris (Software Engineer)
