Azure's journey to deploy RDMA at regional scale required solving complex congestion control challenges across three generations of RDMA NICs. This article examines how Data Center Quantized Congestion Notification (DCQCN) evolved from a theoretical protocol into a production-ready system that now carries 85% of Azure's storage traffic.

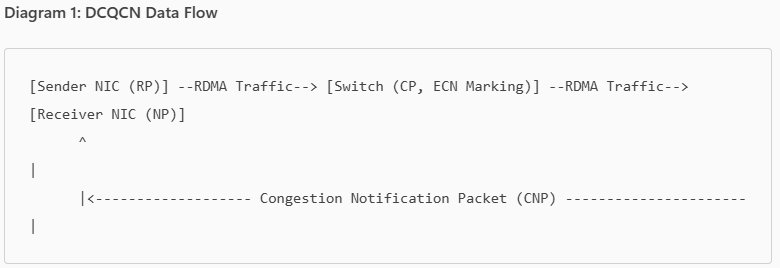

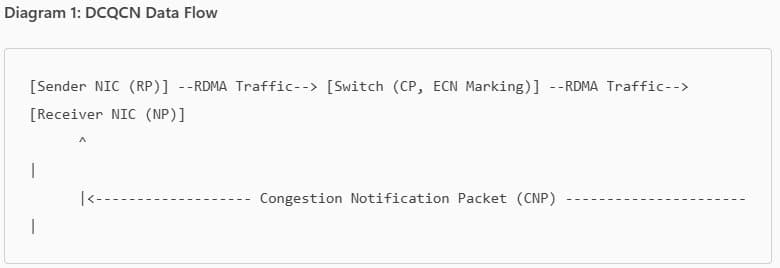

Cloud storage workloads push data center networks to their limits. Remote Direct Memory Access (RDMA) offers near line-rate performance by bypassing the kernel network stack, but scaling it across large, heterogeneous data center regions introduces congestion control challenges that traditional TCP mechanisms cannot address. Microsoft Azure's deployment of DCQCN across its global infrastructure provides a blueprint for solving these problems at scale.

The RDMA Scaling Problem

RDMA's promise is straightforward: offload network operations to NIC hardware, eliminate CPU overhead from data movement, and achieve zero-copy transfers between machines. Azure adopted RDMA for its storage frontend traffic to reduce CPU utilization and improve latency. However, as deployment expanded from single clusters to regional scale, three critical challenges emerged.

First, hardware heterogeneity became unavoidable. Cloud infrastructure evolves incrementally: new server generations arrive every 12-18 months, each bringing updated NICs and switches. Azure's production environment currently contains three distinct generations of commodity RDMA NICs (Gen1, Gen2, and Gen3), each with a different DCQCN implementation. As a result, flows between NICs of different generations can suffer degraded performance.

Second, regional networking introduces variable latency. While intra-rack RTTs are measured in microseconds, cross-datacenter RTTs can reach the millisecond range. Traditional congestion control algorithms that rely on packet loss as a signal perform poorly over long-haul links with high bandwidth-delay products.
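To make the bandwidth-delay product concrete, here is a minimal sketch of the arithmetic. The link speed and RTT values are illustrative assumptions, not Azure measurements, and `bdp_bytes` is a hypothetical helper name.

```python
def bdp_bytes(link_gbps: float, rtt_us: float) -> float:
    """Bandwidth-delay product in bytes for a rate in Gb/s and an RTT in microseconds."""
    bits_per_us = link_gbps * 1e3  # 1 Gb/s = 1e9 bit/s = 1e3 bits per microsecond
    return bits_per_us * rtt_us / 8


# Illustrative values only (assumed, not taken from Azure's deployment).
intra_rack = bdp_bytes(link_gbps=100, rtt_us=10)    # short path inside a rack
cross_dc = bdp_bytes(link_gbps=100, rtt_us=2000)    # long path between datacenters

print(f"intra-rack BDP: {intra_rack / 1e3:.0f} KB")
print(f"cross-DC BDP:   {cross_dc / 1e6:.0f} MB")
```

Under these assumed numbers the long-haul flow keeps 200 times more data in flight than the intra-rack flow, so a sender that waits for a loss to learn about congestion has already committed far more traffic to the network.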

Third, RDMA traffic patterns are inherently bursty and prone to incast congestion. Storage operations often involve multiple parallel reads or writes converging on a single target, overwhelming switch buffers and causing packet loss. With RDMA's reliance on reliable transport, any loss triggers retransmissions that cascade into latency spikes.
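A rough back-of-the-envelope sketch shows why incast is so punishing. It assumes every sender transmits at full line rate into one egress port with a dedicated buffer, which is a deliberate simplification; the buffer size, sender count, and the helper name `time_to_fill_us` are illustrative assumptions.

```python
def time_to_fill_us(buffer_bytes: float, senders: int, link_gbps: float) -> float:
    """Microseconds until an egress buffer overflows when `senders` flows,
    each at line rate, converge on one port draining at the same line rate."""
    excess_gbps = (senders - 1) * link_gbps  # arrival rate minus drain rate
    if excess_gbps <= 0:
        return float("inf")  # a single sender never builds a queue
    return buffer_bytes * 8 / (excess_gbps * 1e3)  # 1 Gb/s = 1e3 bits per microsecond


# Assumed example: 1 MB of buffer, 9 senders, 100 Gb/s links.
print(time_to_fill_us(buffer_bytes=1e6, senders=9, link_gbps=100))
```

With these assumed values the buffer fills in about 10 microseconds, which is why congestion has to be signaled before the queue overflows rather than after.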

DCQCN Architecture

DCQCN addresses these challenges through explicit congestion notification rather than loss-based signals. The protocol coordinates three entities:

Reaction Point (RP) sits at the sender NIC. It adjusts transmission rates based on feedback, implementing additive increase and multiplicative decrease (AIMD) behavior.

Congestion Point (CP) resides in switches. When queue depths exceed configured thresholds, switches mark packets with Explicit Congestion Notification (ECN). This marking is probabilistic—switches don't mark every packet, preventing synchronized rate reductions across all flows.

Notification Point (NP) operates at the receiver NIC. Upon detecting ECN-marked packets, it generates Congestion Notification Packets (CNPs) back to the sender.

This feedback loop creates a control system: the sender reduces its rate when receiving CNPs, increases it gradually when no feedback arrives, and the network signals congestion before buffers overflow.
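The sender side of this loop can be sketched in a few lines. This is a simplified model of the reaction point based on the update rules in the published DCQCN design (cut the rate on a CNP, then recover toward a target rate); it omits the byte counter and the hyper-increase phase, and the parameter values are assumptions, not Azure's production settings.

```python
from dataclasses import dataclass


@dataclass
class ReactionPoint:
    """Simplified DCQCN sender state. All defaults are assumed values."""
    line_rate: float                 # Gb/s
    g: float = 1 / 256               # gain used to update alpha
    rate_ai: float = 0.04            # additive-increase step in Gb/s
    fast_recovery_stages: int = 5    # timer ticks spent in fast recovery
    current: float = 0.0             # current sending rate
    target: float = 0.0              # rate to recover toward
    alpha: float = 1.0               # running estimate of congestion severity
    stage: int = 0                   # timer ticks since the last CNP

    def __post_init__(self) -> None:
        self.current = self.target = self.line_rate

    def on_cnp(self) -> None:
        """Multiplicative decrease when a CNP arrives."""
        self.target = self.current
        self.current *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g
        self.stage = 0

    def on_alpha_timer(self) -> None:
        """Decay alpha when no CNP arrived during the alpha-update window."""
        self.alpha *= 1 - self.g

    def on_increase_timer(self) -> None:
        """Fast recovery first, then additive increase of the target rate."""
        self.stage += 1
        if self.stage > self.fast_recovery_stages:
            self.target = min(self.target + self.rate_ai, self.line_rate)
        self.current = (self.target + self.current) / 2


rp = ReactionPoint(line_rate=100.0)
rp.on_cnp()                  # congestion feedback: rate is cut
for _ in range(3):
    rp.on_increase_timer()   # quiet period: rate climbs back toward the target
print(round(rp.current, 2))
```

The design choice worth noticing is that recovery halves the gap to the previous rate on every timer tick, so a flow that was cut by mistake regains most of its bandwidth quickly, while sustained CNPs keep pushing the rate down.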

Hardware Interoperability Challenges

Azure's real-world deployment revealed that DCQCN implementations vary significantly across NIC generations:

Gen1 NICs implement DCQCN in firmware. They perform CNP coalescing at the receiver (NP side) and use burst-based rate limiting. These NICs were designed when DCQCN was still being standardized, resulting in implementation quirks.

Gen2 and Gen3 NICs moved DCQCN to hardware. They implement CNP coalescing at the sender (RP side) and use per-packet rate limiting. This design improves performance but breaks compatibility with Gen1.

The interaction problems are subtle but severe:

When a Gen2/Gen3 sender transmits to a Gen1 receiver, the sender's per-packet rate limiting produces a traffic pattern that causes excessive cache misses on the Gen1 NIC, slowing its receive pipeline.

When a Gen1 sender transmits to Gen2/Gen3 receivers, the receivers do not coalesce CNPs on the NP side, so the Gen1 sender sees more CNPs than its implementation expects. It reduces its rate too often and underutilizes the network.

Azure's engineers solved this through three targeted fixes:

Move CNP coalescing to NP side for Gen2/Gen3: This aligns the notification mechanism with Gen1's expectations.

Implement per-QP CNP rate limiting: Matching Gen1's timer-based approach prevents feedback storms.

Enable per-burst rate limiting on Gen2/Gen3: This reduces cache pressure on older receivers.
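The NP-side coalescing and per-QP rate limiting described above can be sketched as a minimum-interval filter: at most one CNP per queue pair per interval, however many ECN-marked packets arrive. The class name, method name, and the 50 microsecond interval are hypothetical; the source does not state the value of Gen1's timer.

```python
from typing import Dict


class NotificationPoint:
    """Receiver-side CNP generation with per-QP coalescing (illustrative sketch)."""

    def __init__(self, min_cnp_interval_us: float) -> None:
        self.min_interval_us = min_cnp_interval_us
        self.last_cnp_us: Dict[int, float] = {}  # QP number -> time of last CNP sent

    def on_packet(self, qp: int, now_us: float, ecn_marked: bool) -> bool:
        """Return True if a CNP should be sent for this packet."""
        if not ecn_marked:
            return False
        last = self.last_cnp_us.get(qp)
        if last is not None and now_us - last < self.min_interval_us:
            return False  # coalesced into the CNP already sent for this QP
        self.last_cnp_us[qp] = now_us
        return True


np_side = NotificationPoint(min_cnp_interval_us=50.0)  # assumed interval
arrivals_us = (0, 10, 49, 50, 60)
sent = [np_side.on_packet(qp=1, now_us=t, ecn_marked=True) for t in arrivals_us]
print(sent)  # [True, False, False, True, False]
```

Keeping the state per QP means a congested flow cannot suppress feedback for an unrelated flow on the same NIC, while the sender still sees a bounded CNP rate regardless of which NIC generation is on the other end.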

Tuning for Regional Networks

DCQCN's rate adjustment algorithm is RTT-fair: its rate-adjustment rules do not depend on round-trip time, so flows with long RTTs are not systematically disadvantaged against flows with short RTTs. This property makes it suitable for Azure's regional networks, where RTTs vary dramatically.

However, achieving optimal performance requires careful tuning:

Sparse ECN marking prevents premature congestion signals. Azure uses a wide ECN marking range (a large gap K_max - K_min between the two marking thresholds) and a low maximum marking probability (P_max) to accommodate flows with large RTTs. This ensures that long-haul flows aren't penalized for short bursts.

Joint buffer and DCQCN tuning avoids the classic problem of mismatched parameters. If switch buffer thresholds are too low, DCQCN receives false congestion signals. If too high, congestion isn't detected early enough. Azure tunes these parameters together, considering the full traffic mix.

Global parameter settings constrain the solution space. Azure's NICs support only global DCQCN settings, meaning parameters must work well across all traffic types, RTTs, and hardware generations. This requires conservative defaults that perform adequately in worst-case scenarios.

Production Results

The impact of DCQCN on Azure's infrastructure is measurable:

Performance: RDMA traffic runs at line rate with near-zero packet loss. Storage operations achieve consistent low latency even under heavy load.

CPU efficiency: By offloading network processing to NICs, Azure freed CPU cores that can be repurposed for customer VMs or application logic. Compared to TCP, RDMA reduces CPU utilization by up to 34.5% for storage frontend traffic.

Latency improvements: Large I/O requests (1 MB) see latency reductions of up to 23.8% for reads and 15.6% for writes.

Scale: As of November 2025, approximately 85% of Azure's traffic uses RDMA, supported in all public regions.

Lessons for Cloud Infrastructure

Azure's DCQCN deployment offers several insights for organizations scaling RDMA:

Hardware evolution requires protocol flexibility. The differences between Gen1, Gen2, and Gen3 NICs demonstrate that protocol implementations will diverge. Building interoperability layers and migration paths is essential.

Congestion control must be co-designed with buffer management. DCQCN parameters and switch buffer thresholds are interdependent. Optimizing one without considering the other leads to suboptimal performance.

Global parameter constraints demand conservative design. When hardware only supports global settings, parameters must be robust across diverse conditions. This often means sacrificing peak performance for consistent behavior.

Explicit signals beat implicit inference. ECN-based congestion notification provides clear, actionable feedback compared to loss-based approaches, especially in high-speed networks where loss is catastrophic.

Conclusion

DCQCN has evolved from an academic concept into a production-hardened protocol that underpins Azure's high-performance storage infrastructure. By combining explicit congestion notification with dynamic rate adjustments, it enables reliable RDMA deployment across heterogeneous hardware and variable network conditions.

The protocol's success lies not in theoretical elegance but in pragmatic engineering: addressing real-world interoperability issues, tuning for specific network characteristics, and accepting that production systems require compromise. As cloud providers continue pushing the boundaries of network performance, DCQCN provides a proven foundation for scaling RDMA at regional and global scales.

For organizations considering RDMA adoption, Azure's experience demonstrates that success requires more than fast NICs. It demands a holistic approach to congestion control, hardware compatibility, and parameter tuning.

This article is based on Microsoft Azure's published technical documentation and community posts about their RDMA infrastructure. For more details on DCQCN implementation and tuning, refer to Azure's official networking documentation.

Microsoft Azure Networking Blog

Data Center Quantized Congestion Notification (DCQCN) - IEEE Paper
