StepFun has open-sourced Step 3.5 Flash, an agent-first foundation model delivering up to 350 TPS inference, 256K context support, and performance approaching closed-source models in reasoning and agent tasks.

On February 2, 2026, Chinese AI company StepFun officially released Step 3.5 Flash, its latest and most powerful open-source foundation model, positioning it as “born for agents.” The company says the model delivers strong reasoning capabilities, high stability, and performance optimized specifically for Agent-based workflows.

Key highlights include:

- Faster: Inference speeds of up to 350 tokens per second for single-request coding tasks

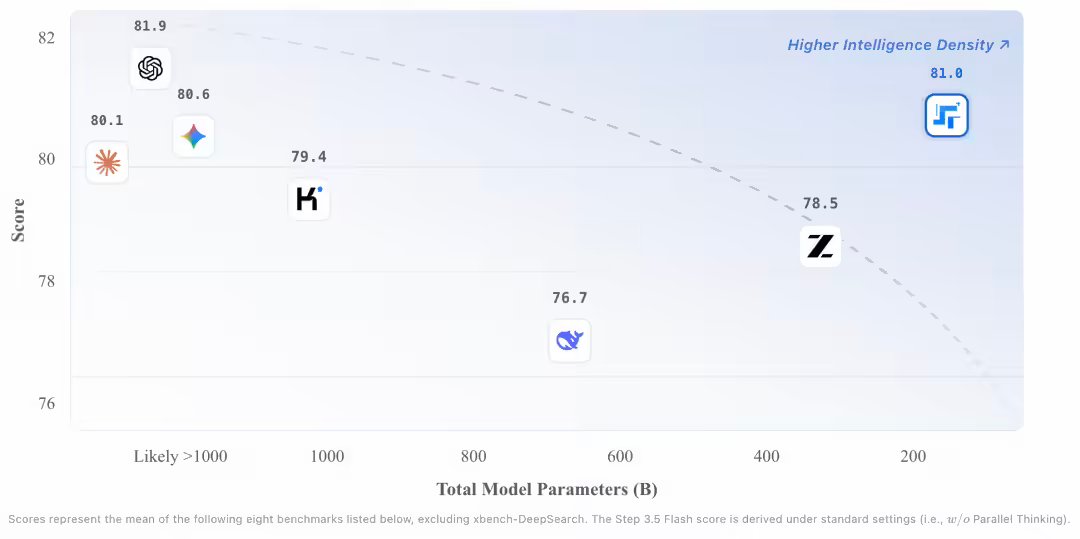

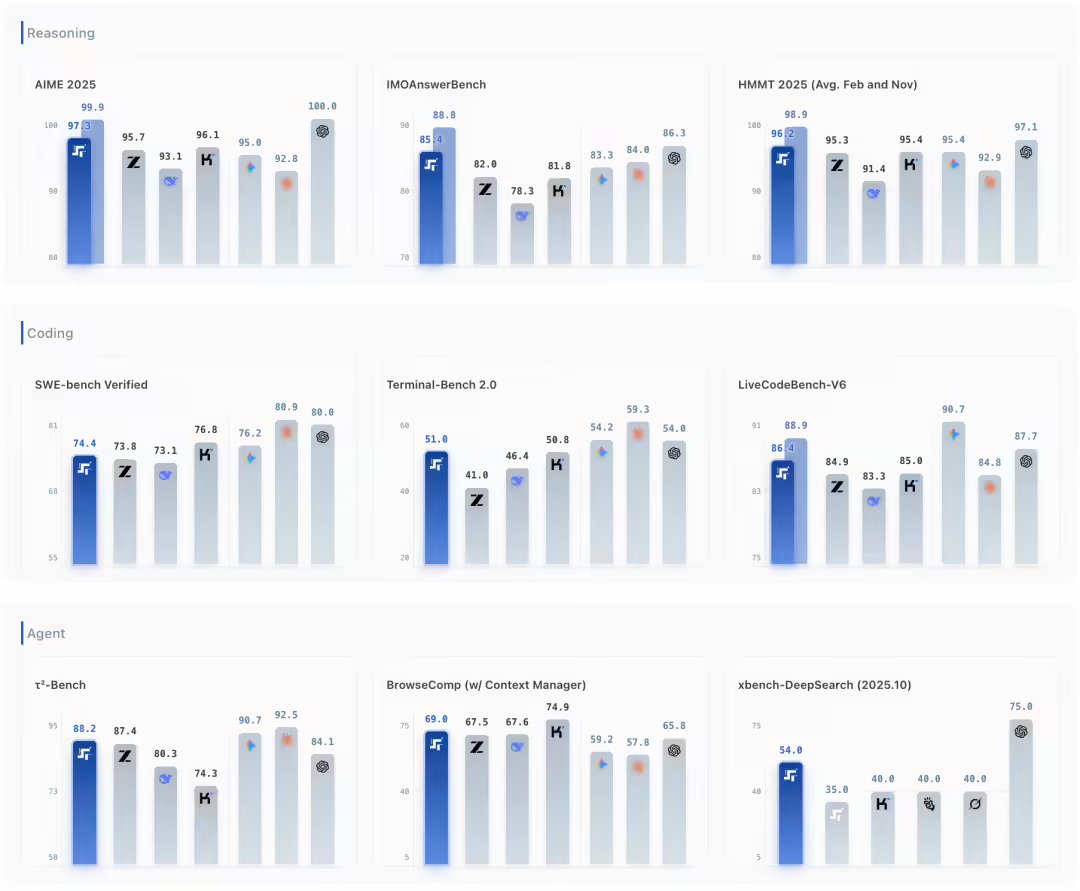

- Stronger: Performance in Agent scenarios and mathematical reasoning comparable to leading closed-source models

- More stable: Capable of handling complex, long-horizon, multi-step tasks

According to StepFun, the next generation of foundation models must not only be “smarter,” but also trustworthy, responsive, and cost-efficient. To achieve this balance, Step 3.5 Flash adopts several architectural innovations:

Sparse Mixture-of-Experts (MoE): Each token activates only around 11 billion parameters, out of a total 196 billion, significantly reducing compute costs

MTP-3 (Multi-Token Prediction): The model predicts three tokens per step, effectively doubling inference efficiency

Hybrid Attention Architecture (SWA + Full Attention): A 3:1 sliding-window-to-global attention mix allows the model to focus on key segments in long texts, enabling efficient processing of up to 256K context length with lower computational overhead

Step 3.5 Flash is now fully available, and StepFun also revealed that training for the Step 4 model has already begun. The company invited developers and researchers to participate in the model's open development and ecosystem co-creation.

With its emphasis on Agent intelligence, long-context reasoning, and inference efficiency, Step 3.5 Flash signals StepFun's ambition to establish a competitive open-source foundation for next-generation AI agent systems.

Technical Deep Dive: What Makes Step 3.5 Flash Different

The 350 tokens per second (TPS) figure stands out because many open-source models struggle to match the throughput of proprietary solutions. Note that it is a peak number quoted for single-request coding tasks, not a sustained rate across all workloads. It suggests StepFun has made significant optimizations in its inference pipeline, likely combining hardware acceleration with architectural choices that minimize computational overhead.
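To put the throughput figure in user-facing terms, the short sketch below converts a decode rate into wall-clock time for a response. The 2,000-token response length and the 50 TPS comparison rate are arbitrary assumptions chosen for illustration, and the calculation ignores prefill, queueing, and network latency.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Time to stream output_tokens at a steady decode rate (prefill and queueing ignored)."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


# 350 TPS is the peak single-request figure quoted in the announcement;
# 50 TPS is an arbitrary slower rate used only for comparison.
for tps in (350.0, 50.0):
    seconds = generation_seconds(2000, tps)
    print(f"{tps:>5.0f} TPS -> {seconds:.1f} s for 2,000 tokens")
```

For an agent that chains many model calls, this per-call difference compounds across every step of a task.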

The MoE Architecture Advantage

The Sparse Mixture-of-Experts approach addresses a central scaling problem: total parameter count can grow without a matching rise in per-token compute. By activating only about 11B of its 196B parameters per token (roughly 5.6%), Step 3.5 Flash can maintain competitive performance while requiring far less computation during inference than a dense model of similar total size.
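The sketch below illustrates the general idea of sparse routing: a router scores every expert, and only the top few run for a given token. It is a generic top-k router, not StepFun's implementation. The expert count (16) and `k` (2) are hypothetical values; only the 11B and 196B figures come from the announcement.

```python
import math
import random

TOTAL_PARAMS_B = 196   # from the announcement
ACTIVE_PARAMS_B = 11   # from the announcement

active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B


def top_k_route(router_logits: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalise their weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    peak = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(router_logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: value / total for i, value in exps.items()}


random.seed(0)
NUM_EXPERTS, TOP_K = 16, 2  # hypothetical; the real configuration is not stated here
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
weights = top_k_route(logits, TOP_K)

print(f"active fraction: {active_fraction:.1%}")
print(f"experts used for this token: {sorted(weights)} of {NUM_EXPERTS}")
```

Only the selected experts' feed-forward blocks would run for that token, which is where the compute savings come from.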

Multi-Token Prediction: Doubling the Speed

The MTP-3 mechanism is particularly interesting from an engineering perspective. Traditional autoregressive models predict one token at a time, creating a sequential bottleneck. Predicting three tokens per step puts the theoretical ceiling at roughly 3x throughput, but the realized gain is closer to 2x once the additional computational cost of multi-token prediction is accounted for, which is consistent with the doubling StepFun cites.
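One simplified way to see how a 3x ceiling can shrink toward 2x is the back-of-envelope model below. It is an assumption made for illustration, not StepFun's published analysis: the first token of each step is always kept, each later token is kept only if the earlier ones were (with an independent probability `accept_prob`), and each multi-token step costs `1 + step_overhead` ordinary steps. Both parameters are hypothetical.

```python
def expected_tokens_per_step(accept_prob: float, tokens_per_step: int = 3) -> float:
    """Expected tokens kept per decoding step.

    Assumes the first token is always kept and each later token is kept only
    if all earlier ones were, each with independent probability accept_prob.
    """
    if not 0.0 <= accept_prob <= 1.0:
        raise ValueError("accept_prob must be in [0, 1]")
    return sum(accept_prob ** i for i in range(tokens_per_step))


def effective_speedup(accept_prob: float, step_overhead: float, tokens_per_step: int = 3) -> float:
    """Speedup over one-token decoding when each step costs (1 + step_overhead) baseline steps."""
    return expected_tokens_per_step(accept_prob, tokens_per_step) / (1.0 + step_overhead)


print(effective_speedup(1.0, 0.0))  # ideal ceiling: 3.0
print(effective_speedup(1.0, 0.5))  # every token kept, 50% costlier steps: 2.0
print(round(effective_speedup(0.8, 0.2), 2))  # a mix of rejected tokens and overhead
```

Under this model, either rejected predictions or per-step overhead (or both) can account for the gap between the ceiling and the observed gain.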

Long Context Processing

The 256K context window is becoming increasingly important as AI agents need to process longer documents, conversations, and codebases. The hybrid attention architecture combines sliding-window attention (SWA), which restricts each token to a nearby span of the sequence, with full attention, which can reach any earlier position. With a 3:1 sliding-window-to-full mix, most of the attention work stays local and inexpensive, while the full-attention portion preserves the global dependencies that long-context tasks depend on.
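The rough estimate below shows why such a mix lowers long-context cost. It assumes the 3:1 ratio is applied per layer (three sliding-window layers, then one full-attention layer), which the announcement does not spell out. The layer count (48), the window size (4,096 tokens), and treating 256K as 262,144 tokens are all hypothetical values for illustration.

```python
def layer_pattern(num_layers: int, swa_per_full: int = 3) -> list[str]:
    """Repeat swa_per_full sliding-window layers followed by one full-attention layer."""
    return ["full" if (i + 1) % (swa_per_full + 1) == 0 else "swa" for i in range(num_layers)]


def cached_positions(pattern: list[str], context_len: int, window: int) -> int:
    """Total token positions held in the KV cache, summed over all layers."""
    return sum(context_len if kind == "full" else min(window, context_len) for kind in pattern)


CONTEXT = 256 * 1024  # 256K context from the announcement, taken here as 262,144 tokens
NUM_LAYERS = 48       # hypothetical layer count
WINDOW = 4096         # hypothetical sliding-window size

pattern = layer_pattern(NUM_LAYERS)
hybrid = cached_positions(pattern, CONTEXT, WINDOW)
dense = cached_positions(["full"] * NUM_LAYERS, CONTEXT, WINDOW)

print(pattern[:8])
print(f"KV cache positions vs. all-full attention: {hybrid / dense:.1%}")
```

With these assumed numbers, the sliding-window layers contribute very little at full context, so the cache is dominated by the one-in-four full-attention layers.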

Agent-First Design Philosophy

StepFun's explicit focus on agent workflows represents a shift in how foundation models are being developed. Rather than creating general-purpose models and retrofitting them for agent use cases, Step 3.5 Flash appears to have been designed from the ground up with agent requirements in mind.

This agent-first approach likely influenced several design decisions:

- The emphasis on stable, long-horizon task completion

- The focus on inference efficiency for real-time agent responses

- The optimization for reasoning tasks common in agent workflows

- The support for large context windows to maintain conversation and task state

Open Source Strategy and Ecosystem Building

By open-sourcing Step 3.5 Flash, StepFun is positioning itself in the growing ecosystem of open foundation models. This strategy offers several advantages:

Community Development: Open sourcing allows the broader developer community to contribute improvements, find bugs, and create specialized adaptations

Trust and Transparency: Organizations can audit the model's behavior and ensure it meets their requirements

Ecosystem Lock-in: As developers build on the model, they become invested in the StepFun ecosystem

Competitive Positioning: Open source serves as a counterweight to closed models from companies like OpenAI and Anthropic

Performance Claims and Real-World Implications

The claim that Step 3.5 Flash approaches closed-source models in reasoning and agent tasks is significant. If independently verified, it would mark a major milestone for open-source AI, demonstrating that the performance gap between open and closed models is narrowing.

However, such claims require careful evaluation. The specific benchmarks and comparison methodologies matter significantly. Users should look for:

- Independent third-party evaluations

- Reproducible benchmark results

- Performance across diverse task types

- Real-world deployment experiences
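Speed claims in particular are straightforward to check independently. The sketch below times any token stream and reports time to first token and decode throughput. `fake_stream` is a hypothetical stand-in that sleeps between tokens; in practice it would be replaced by a streaming call to whichever serving stack hosts the model, and no real client API is assumed here.

```python
import time
from typing import Callable, Iterable


def measure_tps(stream_tokens: Callable[[str], Iterable[str]], prompt: str) -> dict[str, float]:
    """Time a token stream and report time-to-first-token and decode throughput."""
    start = time.perf_counter()
    first = None
    count = 0
    for _ in stream_tokens(prompt):
        count += 1
        if first is None:
            first = time.perf_counter()  # arrival of the first token
    end = time.perf_counter()
    if first is None:
        raise ValueError("stream produced no tokens")
    decode_time = end - first
    return {
        "tokens": float(count),
        "ttft_s": first - start,
        # Tokens after the first, divided by the time spent producing them.
        "decode_tps": (count - 1) / decode_time if decode_time > 0 else float("inf"),
    }


def fake_stream(prompt: str) -> Iterable[str]:
    """Hypothetical stand-in for a real client: yields 50 tokens about 2 ms apart."""
    for i in range(50):
        time.sleep(0.002)
        yield f"tok{i}"


print(measure_tps(fake_stream, "Write a quicksort in Python."))
```

Separating time to first token from decode throughput matters because a single headline TPS number can hide slow prefill on long prompts.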

Looking Forward: The Step 4 Announcement

The announcement that Step 4 training has already begun suggests StepFun is operating on an aggressive development timeline. This rapid iteration cycle is characteristic of the current AI landscape, where companies are racing to maintain competitive advantages.

For developers and organizations considering Step 3.5 Flash, this raises questions about the model's longevity and whether investing in the current version makes sense given the impending next generation.

Practical Considerations for Adoption

Organizations considering adopting Step 3.5 Flash should evaluate several factors:

Infrastructure Requirements: While the MoE architecture reduces compute costs, the model still requires significant GPU resources for optimal performance

Integration Effort: Agent workflows often require careful prompt engineering and system design beyond just the model itself

Support and Maintenance: Open source models require organizations to handle their own support, updates, and troubleshooting

Licensing and Compliance: Understanding the open-source license terms and any usage restrictions is crucial
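On the infrastructure point, a quick estimate helps explain why sparse activation does not remove the need for substantial hardware: compute per token scales with the roughly 11B active parameters, but in a typical MoE deployment all 196B weights still have to be stored. The sketch below covers weight storage only. The numeric formats listed are common choices, not a statement of which ones Step 3.5 Flash supports, and KV cache and activation memory are excluded.

```python
TOTAL_PARAMS = 196e9  # total parameter count from the announcement

# Common weight formats; which of these the model supports is an assumption.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(total_params: float, bytes_per_param: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes); excludes KV cache and activations."""
    return total_params * bytes_per_param / 1e9


for name, nbytes in BYTES_PER_PARAM.items():
    print(f"{name}: ~{weight_memory_gb(TOTAL_PARAMS, nbytes):.0f} GB of weights")
```

At 16-bit precision this comes to roughly 392 GB before any cache or runtime overhead, which is more than a single current accelerator typically holds.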

Conclusion

StepFun's Step 3.5 Flash represents a significant contribution to the open-source AI landscape, particularly for agent-focused applications. The combination of high inference speed, large context support, and agent-optimized design addresses many practical requirements for deploying AI agents in production environments.

The model's success will ultimately depend on real-world performance and adoption. As the community begins to experiment with and deploy Step 3.5 Flash, we'll gain a clearer picture of how it compares to both other open-source models and proprietary alternatives.

For now, StepFun has positioned itself as a serious player in the foundation model space, with ambitions that extend beyond just creating another large language model. Their focus on agent intelligence and open development suggests a long-term strategy aimed at shaping the future of AI agent systems.
