Data replication isn't the enemy of a single source of truth; done well, it is an enabler. This deep dive explores how strategic replication supports scalable, cost-optimized analytics and AI architectures while maintaining data integrity.

In the age of AI and cloud computing, the relationship between data replication and the single source of truth (SSOT) has become one of the most misunderstood concepts in modern data architecture. The conventional wisdom suggests that replication creates multiple sources of truth, leading to data inconsistencies and governance nightmares. However, this perspective misses the strategic value that well-designed replication brings to enterprise analytics and AI initiatives.

The Single Source of Truth Myth

The concept of a single source of truth has been a cornerstone of data architecture for decades. In regulated industries like healthcare, maintaining a compliant SSOT is not just best practice; it is often a legal requirement. The challenge arises when organizations try to scale their analytics and AI capabilities without giving up that SSOT.

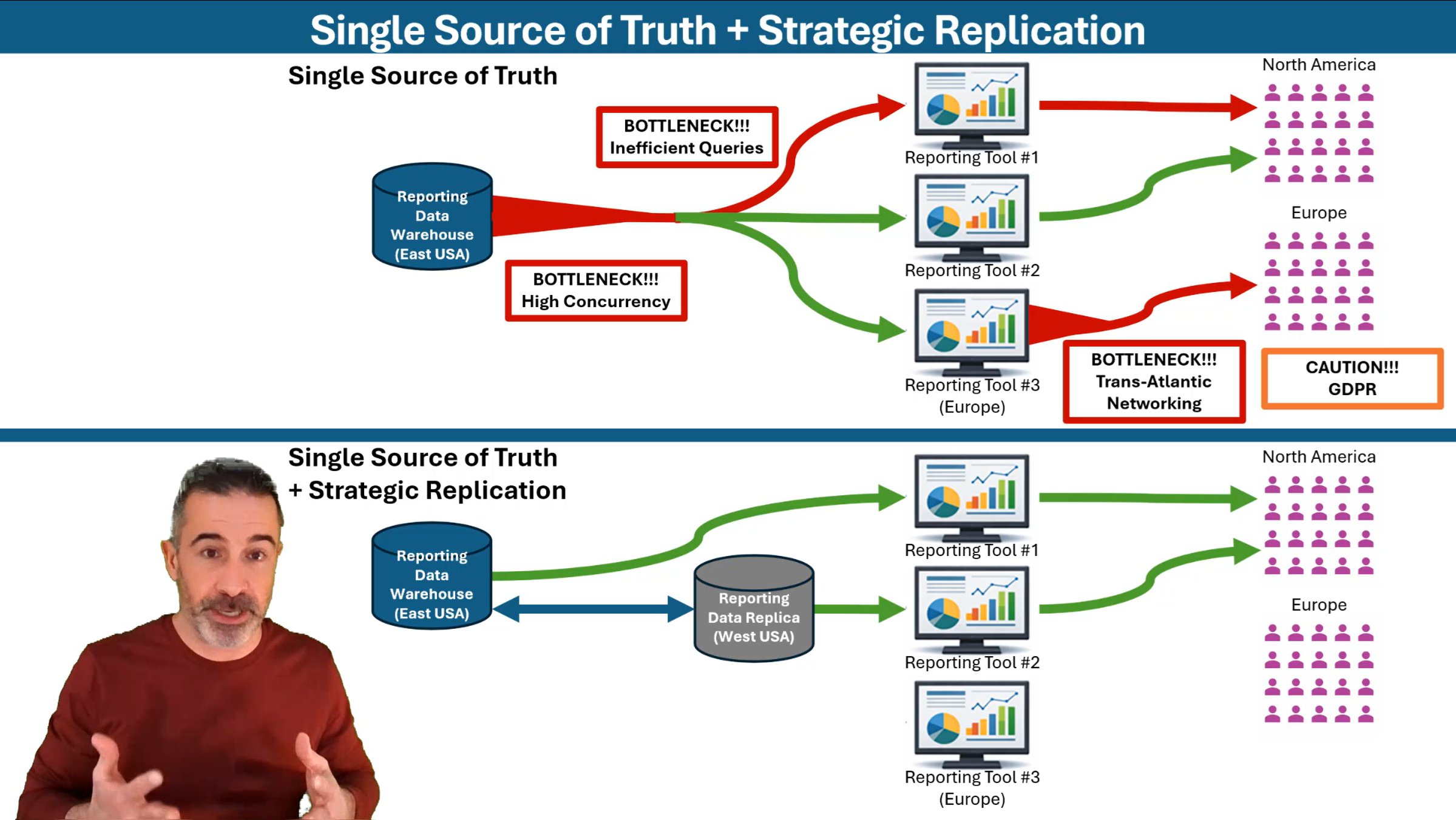

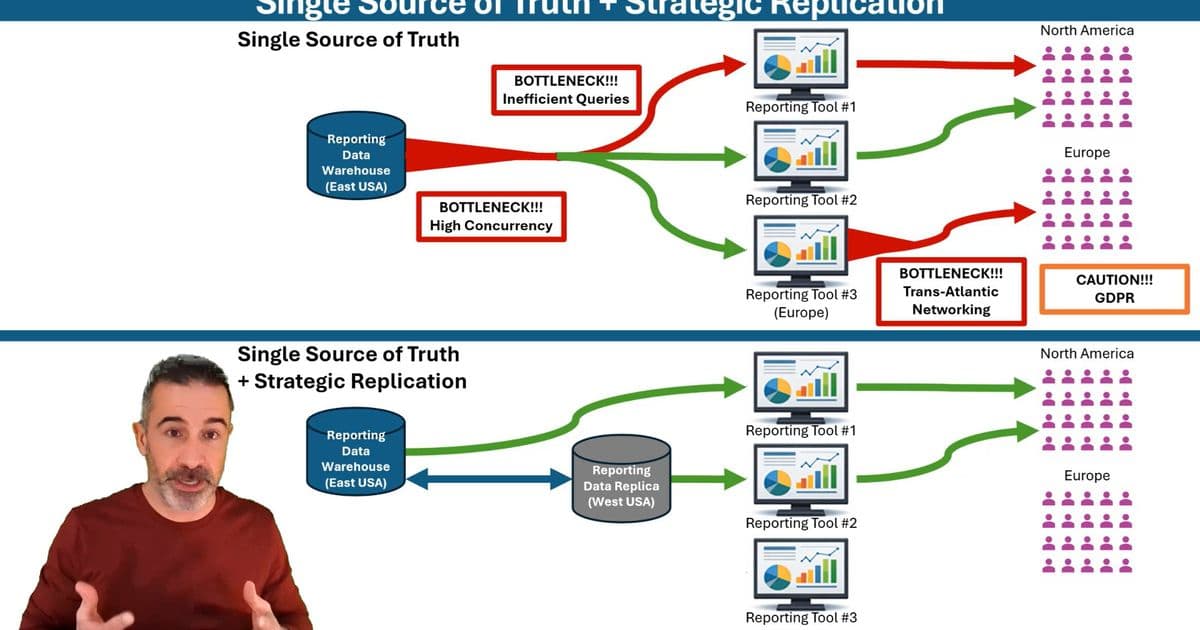

Traditional approaches often force a choice between maintaining data integrity and achieving the performance, scalability, and cost optimization required for modern analytics workloads. This false dichotomy has led many organizations to either compromise their SSOT principles or accept suboptimal performance and excessive costs.

Strategic Replication: A Complementary Approach

Strategic data replication represents a paradigm shift in how we think about data architecture. Rather than viewing replication as a threat to data integrity, it can be positioned as a complementary mechanism that enhances the SSOT architecture while addressing the practical challenges of modern analytics and AI workloads.

Consider the biological analogy of DNA replication. In living organisms, DNA replication doesn't create multiple sources of truth. It creates high-fidelity copies, checked by proofreading and repair mechanisms, that enable growth, repair, and adaptation while preserving the original genetic code. Similarly, strategic data replication creates verified copies that let analytics and AI workloads scale without compromising the integrity of the source system.

The Cryptocurrency Parallel

Another illuminating analogy comes from the world of cryptocurrency. Bitcoin's blockchain maintains a single source of truth across a distributed network through replication. Full nodes each download and independently validate the chain, and the consensus rules settle which version is authoritative: when short-lived forks occur, nodes converge on the valid chain with the most accumulated proof of work. Replication provides availability and resilience, while the hash-linked blocks keep the shared history verifiable.

This parallel demonstrates how replication can enhance rather than compromise data integrity when implemented with the right architectural principles and governance mechanisms.
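The verifiability mentioned above can be illustrated with a toy hash chain. This is a simplified sketch, not Bitcoin's actual block format: there is no proof of work, no Merkle tree, and the names `block_hash`, `build_chain`, and `is_valid` are hypothetical. It only shows why a replica of a hash-linked log can be checked without trusting whoever holds it.

```python
import hashlib

GENESIS = "0" * 64  # placeholder "previous hash" for the first block (assumption)


def block_hash(prev_hash: str, payload: str) -> str:
    """Hash a block's payload together with the hash of the previous block."""
    return hashlib.sha256(f"{prev_hash}|{payload}".encode("utf-8")).hexdigest()


def build_chain(payloads: list[str]) -> list[dict]:
    """Build a hash-linked list of blocks from the given payloads."""
    chain, prev = [], GENESIS
    for payload in payloads:
        current = block_hash(prev, payload)
        chain.append({"prev": prev, "payload": payload, "hash": current})
        prev = current
    return chain


def is_valid(chain: list[dict]) -> bool:
    """Recompute every link; any edited payload or broken link fails the check."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or block["hash"] != block_hash(prev, block["payload"]):
            return False
        prev = block["hash"]
    return True


source_chain = build_chain(["tx-1", "tx-2", "tx-3"])

# A faithful replica validates; a replica with an edited payload does not.
replica = [dict(block) for block in source_chain]
tampered = [dict(block) for block in source_chain]
tampered[1]["payload"] = "tx-2-edited"
```

Any holder of a copy can run `is_valid` locally, which is the property that lets replication and a single verifiable history coexist.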

Architectural Benefits of Strategic Replication

When implemented correctly, strategic data replication offers several key benefits for analytics and AI architectures:

Scalability and Performance

Modern analytics and AI workloads often require high query concurrency and global user access. A single database system, however powerful, eventually struggles to serve thousands of concurrent queries at acceptable latency, especially when it also handles transactional writes. Strategic replication lets organizations distribute read-heavy query workloads across multiple systems, which can substantially improve performance and user experience.
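One way to picture this distribution is a small router that keeps writes on the source system and spreads reads across replicas. The sketch below is illustrative only: the `ReadRouter` class, the round-robin policy, and the system names are assumptions, and a real deployment would typically rely on the load-balancing features of its database or platform.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Iterator


@dataclass
class ReadRouter:
    """Send writes to the source system and rotate reads across replicas."""

    source: str
    replicas: list[str]
    _next_replica: Iterator[str] = field(init=False, repr=False)

    def __post_init__(self) -> None:
        # Fall back to the source when no replica is configured.
        self._next_replica = cycle(self.replicas or [self.source])

    def route(self, is_write: bool) -> str:
        """Return the name of the system that should handle the request."""
        if is_write:
            return self.source
        return next(self._next_replica)


# Hypothetical system names, for illustration only.
router = ReadRouter(source="ssot-primary", replicas=["replica-a", "replica-b"])
read_targets = [router.route(is_write=False) for _ in range(4)]
write_target = router.route(is_write=True)
```

Because every write still lands on `ssot-primary`, the source system remains the only place where data changes; the replicas only absorb read traffic.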

Cost Optimization

Different analytics and AI workloads have different performance and cost requirements. Strategic replication enables organizations to optimize their infrastructure costs by placing data in the most cost-effective systems for each workload. For example, historical data for trend analysis might reside in lower-cost storage systems, while recent data for operational reporting might be in high-performance systems.
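The hot/cold split in that example can be expressed as a simple placement rule. This is a minimal sketch under stated assumptions: the 90-day window, the tier names, and the `choose_tier` function are hypothetical, and real cutoffs depend on each workload's access patterns and pricing.

```python
from datetime import date, timedelta

# Assumed cutoff: records newer than this stay on the high-performance tier.
HOT_WINDOW = timedelta(days=90)


def choose_tier(record_date: date, today: date) -> str:
    """Place recent records on the 'hot' tier and older ones on 'cold' storage."""
    return "hot" if today - record_date <= HOT_WINDOW else "cold"


today = date(2024, 6, 1)
recent_tier = choose_tier(date(2024, 5, 1), today)      # 31 days old
historical_tier = choose_tier(date(2023, 1, 1), today)  # well past the window
```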

Technology Evolution and Flexibility

As AI and analytics technologies rapidly evolve, organizations need the flexibility to adopt new tools and platforms without disrupting their core data infrastructure. Strategic replication provides this flexibility by allowing organizations to experiment with new technologies using replicated data while maintaining their SSOT.

Addressing Historical Challenges

The concerns about spreadmarts and shadow IT are valid and stem from historical approaches to data replication. However, strategic replication addresses these concerns through proper governance and architectural design:

Governance and Control

Unlike historical approaches where departments created their own data copies without oversight, strategic replication operates within a governed framework. Clear policies define what data can be replicated, how it should be used, and who is responsible for maintaining data quality and compliance.
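Such policies become easier to enforce when they are machine-readable. The sketch below shows one possible shape for a policy registry that a replication pipeline could consult before creating a copy. Every name in it (`ReplicationPolicy`, `POLICIES`, `may_replicate`, the datasets, targets, and owners) is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ReplicationPolicy:
    """What may be replicated, where, and who is accountable for it."""

    dataset: str
    allowed_targets: frozenset[str]
    owner: str


# Hypothetical registry; in practice this might live in a data catalog.
POLICIES = {
    "sales_orders": ReplicationPolicy(
        dataset="sales_orders",
        allowed_targets=frozenset({"analytics-warehouse", "ml-feature-store"}),
        owner="finance-data-team",
    ),
    "patient_records": ReplicationPolicy(
        dataset="patient_records",
        allowed_targets=frozenset({"analytics-warehouse"}),
        owner="clinical-data-team",
    ),
}


def may_replicate(dataset: str, target: str) -> bool:
    """Allow replication only for registered datasets and approved targets."""
    policy: Optional[ReplicationPolicy] = POLICIES.get(dataset)
    return policy is not None and target in policy.allowed_targets
```

Denying by default for unregistered datasets is the design choice that separates governed replication from the ad hoc copies described above.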

Consistent Metrics and Definitions

One of the primary concerns with multiple data copies is the potential for inconsistent metrics and definitions. Strategic replication addresses this by ensuring that all replicated data maintains the same definitions, calculations, and business rules as the source system. This consistency is enforced through automated processes and governance controls.
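One example of such an automated control is a reconciliation check that compares a fingerprint of the source data with a fingerprint of the replica. The sketch below is an assumption about how this could look, not a prescribed method: the `fingerprint` function and the sample rows are hypothetical, and it hashes whole rows in memory, which only suits small tables or per-partition checks.

```python
import hashlib
import json


def fingerprint(rows: list[dict]) -> str:
    """Order-independent SHA-256 fingerprint of a set of rows."""
    # Serialize each row with sorted keys, then sort rows so order is irrelevant.
    canonical = sorted(json.dumps(row, sort_keys=True) for row in rows)
    digest = hashlib.sha256()
    for line in canonical:
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


source_rows = [
    {"order_id": 1, "net_revenue": 120.0},
    {"order_id": 2, "net_revenue": 80.5},
]
# Same rows in a different order: the replica is consistent with the source.
replica_rows = [
    {"net_revenue": 80.5, "order_id": 2},
    {"net_revenue": 120.0, "order_id": 1},
]
# A replica where a calculation drifted: the check should flag it.
drifted_rows = [
    {"order_id": 1, "net_revenue": 120.0},
    {"order_id": 2, "net_revenue": 85.0},
]

replica_matches = fingerprint(source_rows) == fingerprint(replica_rows)
drift_detected = fingerprint(source_rows) != fingerprint(drifted_rows)
```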

Innovation Without Compromise

Strategic replication enables innovation by providing the flexibility to experiment with new analytics and AI tools without compromising the integrity of the core data systems. Organizations can support both corporate reporting requirements and self-service analytics needs while maintaining data consistency and compliance.

Planning for the Agentic AI Future

As we move toward a future of agentic AI, the importance of strategic data replication is likely to increase. AI agents need access to data that is both current and fast to query, and they often process information in real time or near real time. Strategic replication provides an architectural foundation for these requirements while preserving the governance and compliance standards that organizations require.

Implementation Considerations

Successfully implementing strategic data replication requires careful planning and consideration of several factors:

Data Freshness Requirements

Different analytics and AI workloads have different requirements for data freshness. Understanding these requirements is crucial for designing an effective replication strategy that balances performance, cost, and data currency.
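Freshness requirements can be made explicit by recording a maximum acceptable lag per workload and checking replicas against it. The following is a minimal sketch: the workload names, the thresholds in `FRESHNESS_SLA`, and the `is_fresh_enough` function are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical maximum replication lag each workload can tolerate.
FRESHNESS_SLA = {
    "operational_dashboard": timedelta(minutes=5),
    "trend_analysis": timedelta(hours=24),
}


def is_fresh_enough(workload: str, last_synced: datetime, now: datetime) -> bool:
    """Return True when the replica's lag is within the workload's tolerance."""
    return now - last_synced <= FRESHNESS_SLA[workload]


now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
last_synced = now - timedelta(minutes=30)

# The same 30-minute lag is fine for trend analysis but too stale for operations.
dashboard_ok = is_fresh_enough("operational_dashboard", last_synced, now)
trends_ok = is_fresh_enough("trend_analysis", last_synced, now)
```

Stating the tolerance per workload makes the trade-off visible: tighter limits usually mean more frequent or continuous replication, which costs more.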

Compliance and Security

In regulated industries, replication must maintain all compliance and security requirements of the source system. This includes data encryption, access controls, audit trails, and other governance mechanisms.

Change Management

Implementing strategic replication often requires changes to existing processes and workflows. Successful implementation requires careful change management to ensure that all stakeholders understand and embrace the new approach.

The Future of Data Architecture

Strategic data replication represents a mature evolution in data architecture thinking. It acknowledges the practical realities of modern analytics and AI workloads while maintaining the principles of data integrity and governance that are essential for enterprise success.

As organizations continue to invest in analytics and AI capabilities, the ability to scale these initiatives while maintaining data integrity will become increasingly critical. Strategic data replication provides the architectural foundation to achieve this balance, enabling organizations to realize the full potential of their data assets while maintaining the governance and compliance standards that modern business requires.

The video referenced in this article provides a comprehensive exploration of these concepts, including detailed examples and practical guidance for implementing strategic data replication in your organization. Whether you work with Microsoft technologies or other cloud platforms, the principles and approaches discussed are applicable across the modern data landscape.
