Suspicions that Taylor Swift's album promos were AI-generated have sparked a fierce fan revolt under #SwiftiesAgainstAI, with fans citing visual anomalies and ethical concerns. An AI-detection expert judged the videos "highly likely" to be AI-generated and said tools such as Google's Veo could produce such clips. The episode has fueled debate over artistic integrity and AI's role in media, and it underscores a growing clash between technology and the creative industries.

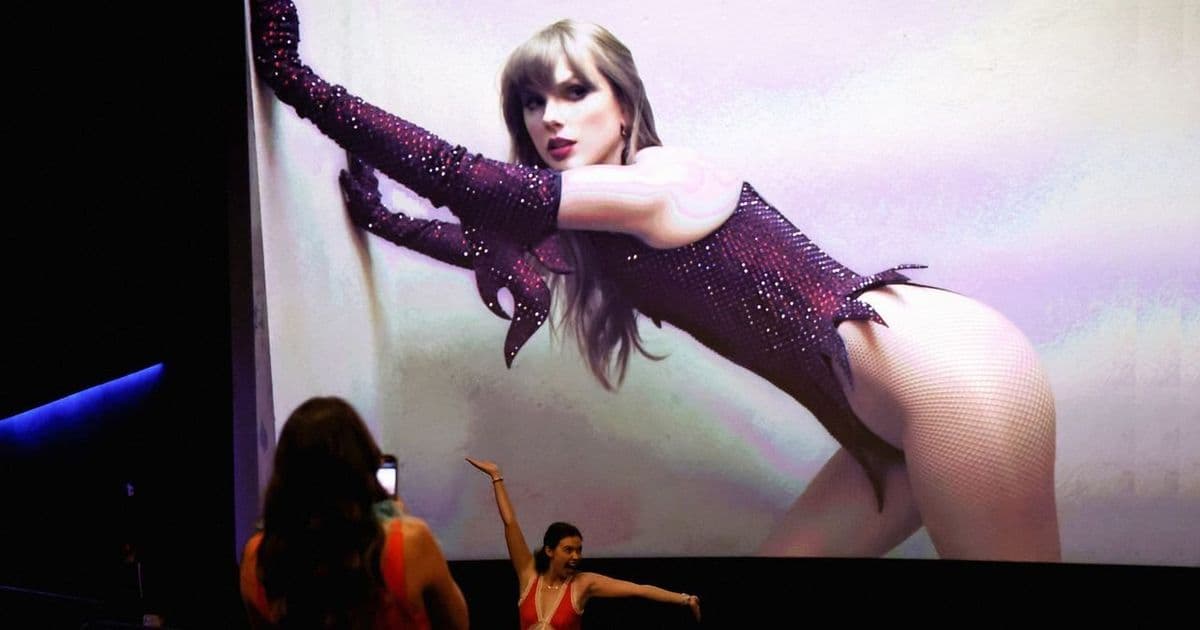

It started with a bartender's hand phasing through a napkin. Then a disappearing coat hanger. A carousel horse with two heads. These weren't abstract art choices—they were the jarring inconsistencies that Taylor Swift's eagle-eyed fans flagged as evidence of AI-generated content in promo videos for her new album, The Life of a Showgirl. Shared widely on social media, the clips were part of a Google-partnered scavenger hunt to unlock the lyric video for the lead single "The Fate of Ophelia." But instead of excitement, they ignited a firestorm of criticism from Swifties, who accused the pop icon of betraying her own stance on artistic authenticity.

Marcela Lobo, a graphic designer and longtime Swift fan, was among the first to voice alarm. "The first sign that it was AI was that it didn’t look great," she told WIRED. "It was wonky, the shadows didn’t match, the windows and the painted piano, it looked like shit, basically." Like many in the #SwiftiesAgainstAI movement, Lobo saw the visual glitches—garbled text, mismatched lighting, and surreal distortions—as hallmarks of generative AI. Her post comparing the promos to Swift's meticulously crafted 2017 "Look What You Made Me Do" lyric video, designed by human artists, went viral, resonating with fans who fear AI's encroachment on creative jobs.

AI detection experts quickly weighed in. Ben Colman, CEO of Reality Defender, analyzed the videos and found them "highly likely" to be AI-generated, pointing to nonsensical text and inconsistent physics as telltale flaws. He explained that tools such as OpenAI's Sora or Google's Veo 3, which can create short AI videos from photos, could produce such content in minutes with a good prompt. "Diffusion models are advancing rapidly, making it easier than ever to deepfake or generate synthetic media," Colman noted. He also pointed out that these systems are often trained on copyrighted data without consent, a legal battleground for artists.

The backlash wasn't just about poor quality; it tapped into deeper ethical concerns. Ellie Schnitt, a prominent Swiftie with more than 500,000 followers on X, led calls for an apology, citing Swift's past condemnation of AI misuse. "You know firsthand the harm AI images can cause. You know better, so do better," Schnitt wrote in a pointed post, referencing Swift's 2024 Instagram statement about AI-generated images that falsely showed her endorsing Donald Trump. Schnitt also pointed to broader concerns. "We are very much losing this battle against common sense when it comes to using generative AI," she told WIRED, citing the environmental cost of AI's heavy energy demands and its erosion of critical thinking.

This incident reflects a widening rift in tech and entertainment. Generative AI has surged in advertising, with companies like Google promoting tools such as Veo for quick content creation. Yet, as a Pew Research survey revealed, nearly half of consumers reject AI-made art, with younger audiences particularly hostile. Swift's silence—and Google's non-response—left fans speculating: Was this a misguided partnership or a test of AI's limits? The videos were swiftly pulled from YouTube, and X restricted searches for "Taylor Swift AI," echoing past measures against nonconsensual deepfakes.

For developers and AI researchers, the #SwiftiesAgainstAI movement is a case study in public pushback. It shows how audiences already wary of AI's ethical pitfalls can mobilize when synthetic media enters cultural spaces they cherish. As Lobo put it, "AI disregards the art and turns it into a product," a sentiment that could slow adoption if creators and corporations ignore the human cost. In an era when AI promises efficiency, the backlash is a stark reminder that audiences still prize authenticity.

Source: Based on reporting from WIRED.