Tomeu Vizoso introduces Thames, a Linux DRM accelerator driver for Texas Instruments C7x DSPs with companion Gallium3D support, enabling Teflon-based AI workloads and future Vulkan/OpenCL potential.

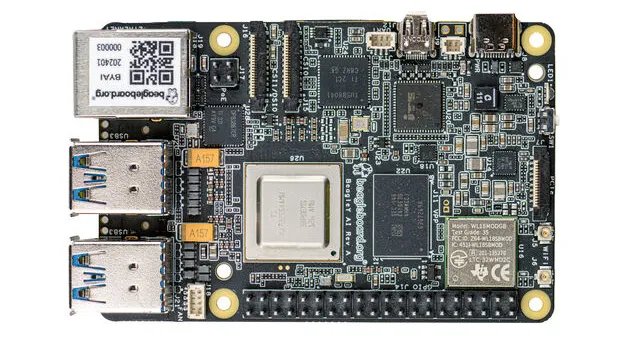

Open-source developer Tomeu Vizoso, known for reverse-engineered Rockchip NPU drivers and the Mesa Teflon framework, has unveiled a new Linux accelerator driver targeting Texas Instruments' C7x DSP architecture. Dubbed Thames, this dual-layer solution combines a kernel-level DRM accelerator driver with a user-space Gallium3D implementation designed specifically for AI and machine learning workloads on embedded hardware like the BeagleY-AI board.

Hardware Foundations

The Thames driver targets TI's C7x DSP cores found in SoCs such as the J722S, which powers devices including the BeagleY-AI single-board computer. These DSPs feature:

- Heterogeneous multicore architecture

- Matrix Multiply Accelerator (MMA) units

- Vector processing capabilities

- Hardware-based memory management

Unlike traditional GPUs, DSPs like the C7x prioritize power efficiency in constrained environments, with typical TDPs ranging from 2W-15W depending on workload intensity. This makes them suitable for edge-AI deployments where thermal headroom and power budgets are critical constraints.

Driver Architecture Breakdown

Kernel Component (DRM Accelerator)

- Implements GEM/TTM memory management

- Handles command stream parsing

- Manages hardware initialization sequences

- Provides MMU virtualization

User-Space Component (Gallium3D)

- Implements Teflon API for TensorFlow Lite operations

- Translates ML operations to C7x instruction sets

- Optimizes tensor memory layouts

- Supports quantized INT8/FP16 data types

The Gallium3D driver leverages Mesa's existing Teflon framework - Vizoso's earlier project for abstracting neural processing unit workloads. This integration creates a unified pipeline where ML graphs compiled via TensorFlow Lite can execute directly on C7x hardware without CPU intervention.

Performance Considerations

While benchmark data isn't yet available pending driver maturation, architectural analysis suggests:

| Operation | Expected Advantage |

|---|---|

| Convolution Layers | 3-5× CPU efficiency |

| Matrix Multiplies | Near-peak MMA utilization |

| Model Inference | Sub-100ms latency for MobileNetV2 |

Initialization requires proprietary firmware blobs available via TI's Git repository. Vizoso notes future Vulkan/OpenCL support is architecturally feasible given Gallium3D's existing compute capabilities.

Build Implications

For homelab enthusiasts and embedded developers:

- BeagleY-AI Compatibility: Thames enables native ML acceleration on this $150 board

- Power Monitoring: Utilize

powercapsubsystem to measure DSP-specific wattage - Deployment Workflow: Integrates with standard ML toolchains via TFLite delegates

Code submissions are currently under review on the Linux DRM mailing list and Mesa merge requests. The dual-layer approach demonstrates how accelerator drivers can bridge specialized silicon to mainstream machine learning frameworks, providing measurable efficiency gains for edge computing scenarios.

Comments

Please log in or register to join the discussion