New research reveals AI coding tools increase individual task completion but fail to improve overall delivery speed—and may even slow down experienced developers while introducing security risks.

The promise of AI coding assistants seemed straightforward: boost developer productivity and accelerate software delivery. Yet mounting evidence suggests these tools are creating a paradoxical effect where developers complete more tasks while overall delivery metrics stagnate or decline. Recent studies highlight this disconnect:

- Teams using AI completed 21% more tasks, yet company-wide delivery metrics showed no improvement (Index.dev, 2025)

- Experienced developers were 19% slower when using AI assistants—though they believed they were faster (METR, 2025)

- 48% of AI-generated code contains security vulnerabilities (Apiiro, 2024)

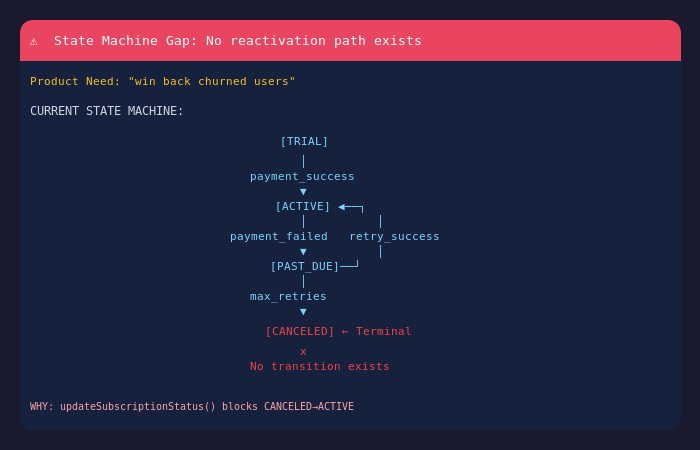

To understand this paradox, we must examine software development's core challenge: reducing ambiguity. As noted in a recent r/ExperiencedDevs discussion: "A developer's job is to translate business needs into precise machine-executable logic. The coding itself is the easy part." This distinction becomes critical because AI assistants require crystal-clear requirements to function effectively, and such requirements are rarely present in real-world development.

AI tools struggle particularly with edge cases and product gaps that human developers would naturally escalate. Instead of flagging ambiguities, AI assistants bury requirement gaps within hundreds of lines of generated code. This leads to:

- Increased code review overhead (Index.dev, 2025)

- Security vulnerability patching becoming a firefighting exercise (Apiiro, 2024)

- Technical debt accumulating faster than teams can address it

Counterintuitively, the very tools meant to reduce ambiguity often increase it. One developer explains: "They produce legitimate-looking code, and if no one has thought through the assumptions and edge cases, it gets shipped. You're shifting the feedback cycle later in the process where problems are harder to fix."

Not all experiences are negative. Some senior engineers report transformative results—like a Google principal engineer claiming AI "generated what we built last year in an hour" or Claude Code creator Boris Cherny having AI "write around 200 PRs" in a month. These successes highlight an important distinction: seasoned developers with technical depth and organizational autonomy can effectively supervise AI as "product engineers," focusing on architecture while delegating implementation.

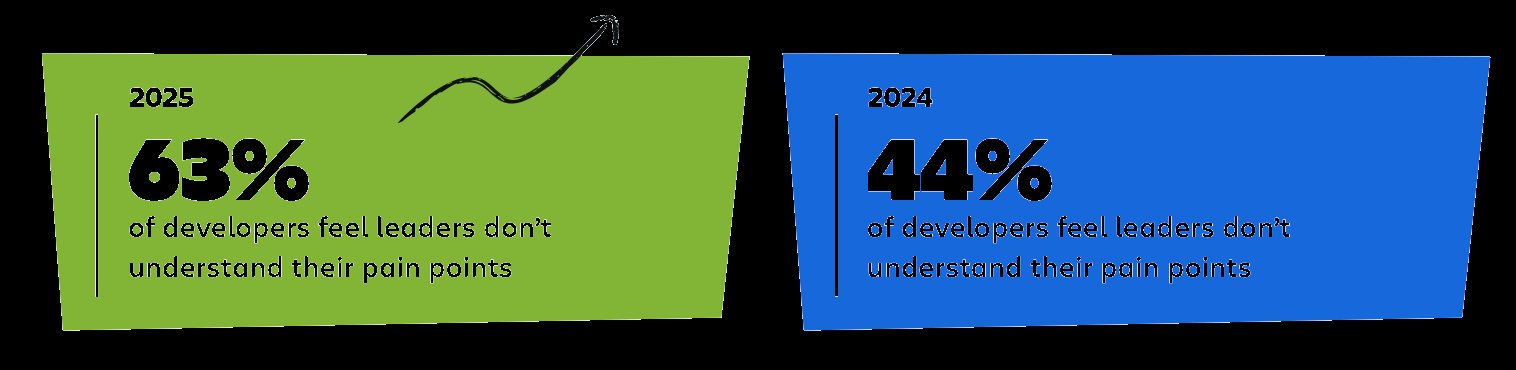

However, for junior and mid-level engineers—particularly in regulated industries like finance, healthcare, and government—the reality differs. These developers operate with less autonomy while facing increased pressure to deliver faster. The result is a widening gap between developers and product owners, exacerbated by organizational layers that dilute product context.

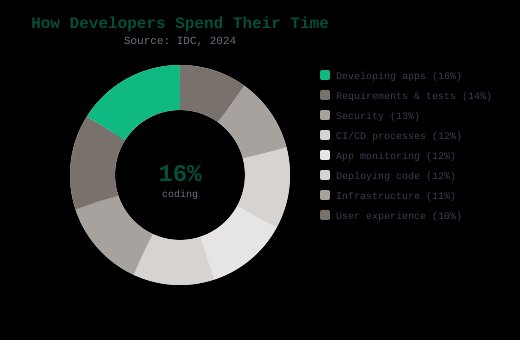

The core issue may be our approach. Rather than focusing solely on automating code writing, we should address why developers spend just 16% of their time actually coding (IDC, 2024). The remaining time goes to security reviews, deployments, and, critically, requirements clarification. Atlassian's 2025 study found that AI saves developers 10 hours a week, but nearly all of those gains are lost to inefficiencies elsewhere in the development lifecycle.
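
A back-of-the-envelope estimate shows why speeding up coding alone has limited reach. The sketch below applies Amdahl's law under a simplifying assumption: AI accelerates only the 16% of time spent coding and leaves everything else untouched. The function name and the speedup factors are illustrative and do not come from the cited studies.

```python
def overall_speedup(coding_fraction: float, coding_speedup: float) -> float:
    """Amdahl's-law estimate of end-to-end speedup.

    coding_fraction: share of total time spent coding (0.16 per IDC, 2024).
    coding_speedup: hypothetical factor by which AI accelerates coding only.
    """
    if not 0.0 <= coding_fraction <= 1.0:
        raise ValueError("coding_fraction must be between 0 and 1")
    if coding_speedup <= 0:
        raise ValueError("coding_speedup must be positive")
    remaining = 1.0 - coding_fraction            # time AI does not touch
    accelerated = coding_fraction / coding_speedup
    return 1.0 / (remaining + accelerated)


if __name__ == "__main__":
    for factor in (2, 10, 1000):  # illustrative speedups, not measured values
        print(f"{factor}x faster coding -> {overall_speedup(0.16, factor):.2f}x overall")
```

Under these assumptions, even an arbitrarily large coding speedup caps the end-to-end gain at roughly 1.19x, since 1 / (1 - 0.16) ≈ 1.19.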

Research suggests the solution lies upstream. When surveyed about their biggest pain points, developers overwhelmingly cited:

- Reducing ambiguity before implementation begins

- Understanding how features affect existing systems

Specifically, engineers want clearer visibility into:

- State machine gaps (unhandled interaction sequences)

- Data flow inconsistencies

- Downstream service impacts
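
To make the first item concrete, here is a minimal sketch of how a state machine gap could be surfaced mechanically. The checkout states, events, and transition table are hypothetical and invented only for illustration. The idea is to enumerate every (state, event) pair and report those with no defined transition.

```python
from itertools import product

# Hypothetical checkout flow; states, events, and transitions are illustrative.
STATES = {"cart", "payment_pending", "paid", "cancelled"}
EVENTS = {"submit_payment", "payment_confirmed", "cancel"}

TRANSITIONS = {
    ("cart", "submit_payment"): "payment_pending",
    ("cart", "cancel"): "cancelled",
    ("payment_pending", "payment_confirmed"): "paid",
    ("payment_pending", "cancel"): "cancelled",
}

TERMINAL_STATES = {"paid", "cancelled"}  # assumed to accept no further events


def find_gaps(states, events, transitions, terminal_states):
    """Return sorted (state, event) pairs that have no defined transition."""
    return sorted(
        (state, event)
        for state, event in product(states - terminal_states, events)
        if (state, event) not in transitions
    )


if __name__ == "__main__":
    for state, event in find_gaps(STATES, EVENTS, TRANSITIONS, TERMINAL_STATES):
        print(f"unhandled: event '{event}' in state '{state}'")
```

Each reported pair is a question to raise with the product owner before implementation, for example what should happen if a payment is submitted a second time while one is already pending.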

This aligns with decades of SDLC research showing the costliest defects emerge from misalignment between requirements and architecture. Recent advances in LLMs offer hope here: while generating flawless code from vague prompts remains challenging, these models excel at analyzing existing systems to predict how changes might impact them.
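
The kind of impact question described here can be illustrated without any model at all. The sketch below is a plain breadth-first traversal over a dependency map, not an LLM-based technique, and the service names and the map itself are hypothetical. It shows the shape of the answer engineers are asking for: given a change to one service, which others are transitively affected.

```python
from collections import deque

# Hypothetical dependency map: each key is consumed by the services in its list.
DEPENDENTS = {
    "user-service": ["billing-service", "notification-service"],
    "billing-service": ["reporting-service"],
    "notification-service": [],
    "reporting-service": [],
}


def downstream_impact(changed, dependents):
    """Breadth-first walk returning every service transitively affected by a change."""
    affected = []
    seen = {changed}
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for service in dependents.get(current, []):
            if service not in seen:  # guards against cycles and duplicates
                seen.add(service)
                affected.append(service)
                queue.append(service)
    return affected


if __name__ == "__main__":
    print(downstream_impact("user-service", DEPENDENTS))
```

In practice the hard part is building an accurate dependency map in the first place, which is where the analysis of existing systems mentioned above would come in.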

Developers express openness to tools that surface engineering context during product discussions—such as real-time impact visualizations or requirement-compliance bots—provided they maintain control over when such tools activate. As one engineer noted: "It's not longer meetings we mind—it's meetings where we can't properly articulate blockers."

The path forward may require shifting from AI that writes code to AI that clarifies context. As the industry moves beyond lab benchmarks, efforts such as Bicameral's developer survey aim to identify which missing contexts cause the most pain. The goal is not to replace developers but to augment their unique ability to navigate uncertainty, provided we design technology around that strength.

References

- Index.dev. (2025). AI Coding Assistant ROI: Real Productivity Data

- METR. (2025). Measuring AI’s Ability to Complete Long Tasks

- Apiiro. (2024). 4x Velocity, 10x Vulnerabilities: AI Coding Assistants Are Shipping More Risks

- IDC. (2024). How Do Software Developers Spend Their Time?

- Atlassian. (2025). State of Developer Experience Report

- Rios, N., et al. (2024). Technical Debt: A Systematic Literature Review
