Social media has shifted from simple chronological feeds to addictive algorithms optimized for maximum engagement, often at the cost of user well-being. Micael Widell argues that platforms now prioritize ragebait and brainrot content, trapping users in cycles of dissatisfaction—but advances in AI could soon enable algorithms that measure and promote genuine happiness instead.

The Algorithmic Trap: How Social Media's Engagement Obsession Fuels User Despair—and Why AI Might Be the Antidote

Remember when social media felt simple? Back in the early 2010s, platforms like Instagram offered a straightforward experience: follow accounts you liked, see their posts in chronological order, and scroll until you'd caught up—often in just minutes. As photographer and tech observer Micael Widell recently highlighted, this era was "delightful" but short-lived. The rise of algorithmic feeds transformed social media into an endless vortex of content, engineered to maximize user time-on-app for ad revenue. Fast forward to 2025, and the result is a landscape dominated by addictive, often toxic experiences that leave users feeling empty and agitated.

From Chronological Bliss to Algorithmic Addiction

In Widell's analysis, the pivot from chronological feeds wasn't accidental—it was a calculated business move. Early social media's finite feeds capped engagement, limiting monetization potential. The solution? Algorithms designed to exploit human psychology by serving content that triggers strong reactions. Widell notes:

"If I read something that makes me angry or provoked, I am very likely to stop my scrolling there, and maybe even make an angry comment. I am also more likely to return to the app to check if my angry comment got a response. And all of this makes me spend a lot more time and as a result I look at more ads."

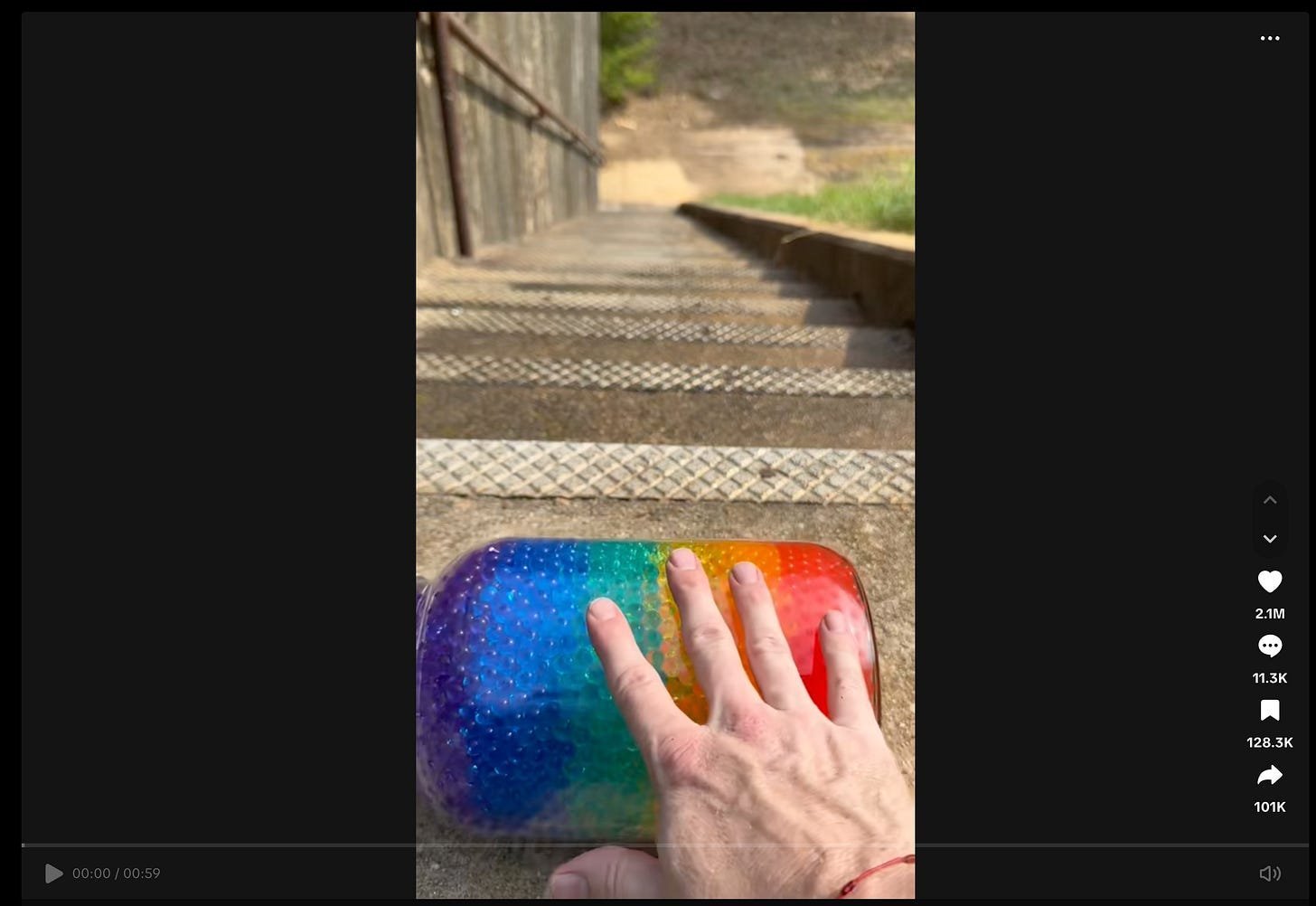

This optimization loop has turned platforms like X (formerly Twitter) and Threads into hotbeds of ragebait (content engineered to provoke outrage) and "brainrot," the mindless, low-effort media that offers fleeting distraction but no lasting satisfaction. Widell describes how this content creates a vicious cycle: algorithms can easily measure clicks and time spent, but they have no comparable signal for the user's emotional aftermath, such as emptiness or regret.

Caption: Widell's illustration of "brainrot" content, symbolizing the hollow engagement that dominates modern feeds.

The Measurement Problem: Why Algorithms Can't See Your Happiness

For developers, the core issue is one of metrics. Current algorithms thrive on quantifiable signals—clicks, shares, dwell time—but ignore qualitative user states. As Widell points out, there's no malice in this; it's simply that happiness and fulfillment are devilishly hard to measure at scale. Engagement-driven models inadvertently reward negativity because anger and curiosity (e.g., checking for replies to incendiary comments) generate more data points than contentment. This has profound implications for tech ethics:

- Cybersecurity and mental health: Platforms become vectors for emotional manipulation, with algorithms amplifying divisive or addictive content that can erode user well-being.

- AI's blind spot: Machine learning models, trained on engagement data, lack the nuance to distinguish between positive and harmful virality, perpetuating a cycle of dissatisfaction.

Yet Widell remains optimistic. He believes the next breakthrough in social media lies in AI advancements that could finally capture and optimize for user happiness. Imagine algorithms that analyze sentiment, biometric feedback, or longitudinal behavior to prioritize content that leaves users feeling enriched—not drained.
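To make the measurement problem concrete, here is a minimal Python sketch. It is not taken from Widell's article or from any platform's real ranking code. It assumes a hypothetical per-post `reported_satisfaction` signal in the range 0 to 1 (for example, from an occasional in-app survey), and the `Post` fields, the weights, both scoring functions, and the sample feed are all invented for illustration. The point is only to show how an engagement-only score can put ragebait first, while scaling that same score by a satisfaction factor reorders the feed.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Post:
    title: str
    predicted_dwell_seconds: float  # engagement proxy, easy to log
    predicted_comment_rate: float   # engagement proxy, easy to log
    reported_satisfaction: float    # HYPOTHETICAL survey signal in [0, 1]


def engagement_score(post: Post) -> float:
    """Score a post from engagement proxies only (illustrative weights)."""
    return post.predicted_dwell_seconds + 100.0 * post.predicted_comment_rate


def blended_score(post: Post, satisfaction_weight: float = 0.8) -> float:
    """Scale the engagement score by a satisfaction factor.

    With satisfaction_weight = 0 this reduces to engagement_score;
    with satisfaction_weight = 1 a post with zero satisfaction scores zero.
    """
    factor = (1.0 - satisfaction_weight) + satisfaction_weight * post.reported_satisfaction
    return engagement_score(post) * factor


def rank(posts: List[Post], score: Callable[[Post], float]) -> List[Post]:
    """Return posts ordered from highest to lowest score."""
    return sorted(posts, key=score, reverse=True)


# Made-up sample data, chosen only to illustrate the contrast.
EXAMPLE_FEED = [
    Post("ragebait thread", 90.0, 0.30, 0.1),
    Post("brainrot clip", 75.0, 0.02, 0.2),
    Post("niche tutorial", 60.0, 0.05, 0.9),
]

if __name__ == "__main__":
    print("Engagement only:", [p.title for p in rank(EXAMPLE_FEED, engagement_score)])
    print("Blended:        ", [p.title for p in rank(EXAMPLE_FEED, blended_score)])
```

In this toy feed the engagement-only ranking leads with the ragebait thread, while the blended ranking leads with the niche tutorial. The hard part, as Widell notes, is not the arithmetic but obtaining a trustworthy satisfaction signal at scale; the sketch simply assumes one exists.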

YouTube: A Beacon of Hope in the Algorithmic Wasteland

Amid the gloom, Widell singles out YouTube as a rare success story. Unlike algorithm-heavy peers, YouTube excels at helping users discover niche, meaningful content through search and subscriptions, minimizing reliance on ragebait. Its exception? YouTube Shorts, which mimic the addictive patterns of TikTok. This contrast underscores a key insight: platforms that empower user choice over algorithmic enforcement foster healthier engagement. For developers, it's a blueprint—leveraging AI for discovery without sacrificing agency.

The Happiness Imperative: Why the Future Belongs to Joyful Algorithms

Widell envisions a near future where "whoever cracks the 'user happiness' problem will quickly become the largest social media platform." With AI progressing rapidly (think multimodal models that interpret emotional cues, or reinforcement learning that rewards positive outcomes), this isn't sci-fi. It's an urgent call to action for tech leaders: build algorithms that treat user well-being as the ultimate KPI. After all, as Widell quips, given the choice between equally addictive drugs, one fun and one dreadful, the better option is obvious. The race is on to make social media delightful again, and for once, technology might be the solution, not the problem.

Source: Adapted from Micael Widell's Substack article, "The Future of Social Media Feeds".
