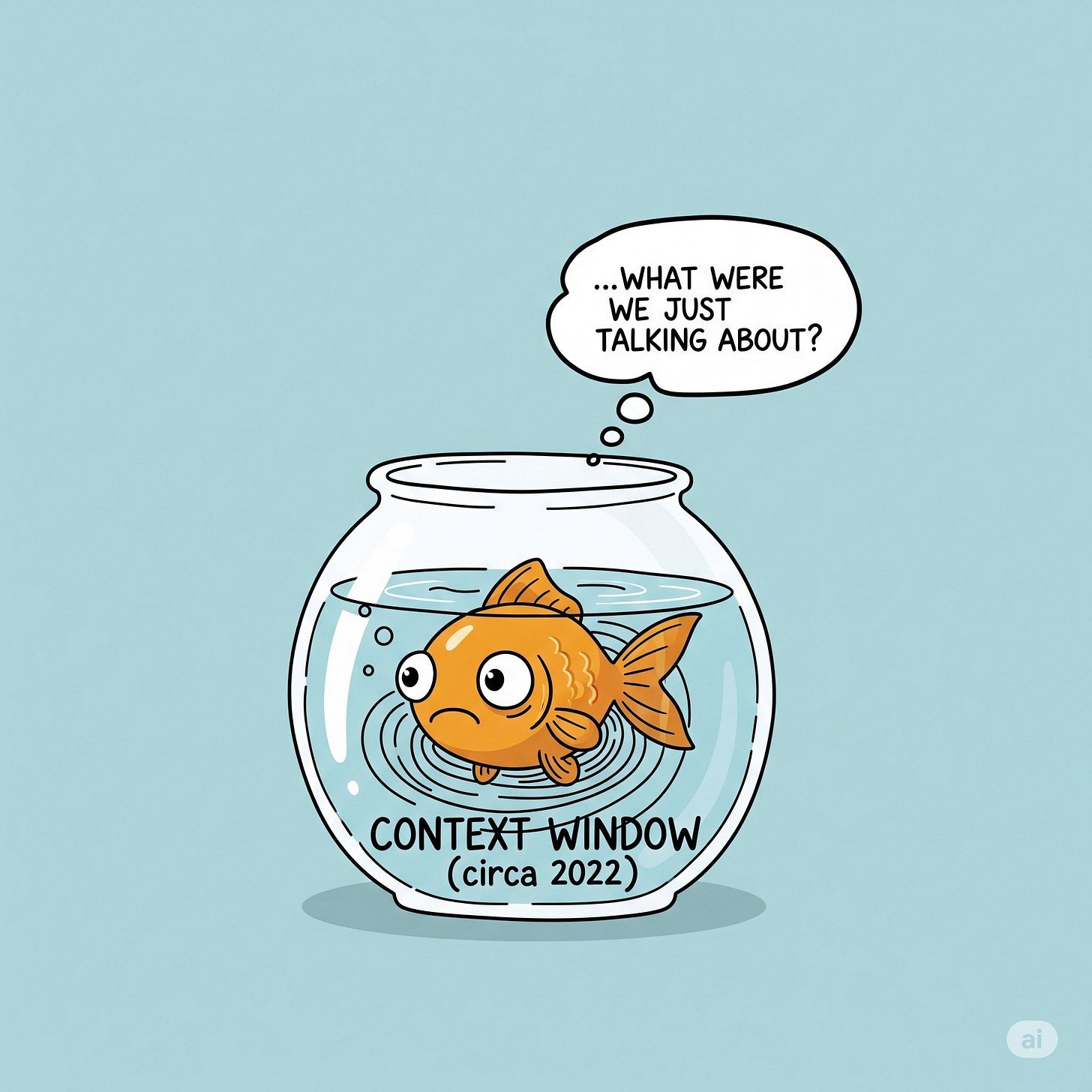

AI has evolved from goldfish-like memory constraints to elephant-scale context windows, yet faces new challenges with 'context rot' in lengthy interactions. The emergence of multimodal systems now enables AI to see, hear, and interpret real-world situations, fundamentally transforming how we collaborate with machines.

Remember explaining an inside joke to someone unfamiliar with the backstory? That awkward experience mirrors how humans interacted with early AI systems: brilliant minds trapped in goldfish-sized memory bowls. Today's AI context windows have grown by orders of magnitude, but this breakthrough introduces surprising new complexities at the frontier of human-machine collaboration.

The Goldfish Era: Memory Constraints That Shaped Early AI

Early large language models operated with severely limited context windows, typically around 1,000 to 2,000 tokens (roughly 750 to 1,500 words). Like a goldfish in a tiny bowl, these systems couldn't retain information beyond a few paragraphs. Developers constantly repeated key details as earlier context "spilled out" of the AI's working memory. This constraint fundamentally shaped interaction patterns:

- Conversations required tedious repetition

- Complex analysis was fragmented

- The AI couldn't build understanding across documents
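To make the "spilling out" concrete, here is a minimal sketch of a fixed-size context buffer. It assumes whitespace-separated words stand in for tokens, which real tokenizers do not do, and the names `RollingContext` and `words_to_tokens` are hypothetical, chosen only for illustration.

```python
from collections import deque


def words_to_tokens(word_count: int, tokens_per_word: float = 4 / 3) -> int:
    """Rough estimate based on the ~750 words per 1,000 tokens rule of thumb."""
    return round(word_count * tokens_per_word)


class RollingContext:
    """Keeps only the most recent `max_tokens` tokens, like a small context window."""

    def __init__(self, max_tokens: int) -> None:
        # A deque with maxlen silently discards the oldest items when full.
        self.tokens = deque(maxlen=max_tokens)

    def add(self, text: str) -> None:
        # Assumption: one whitespace-separated word counts as one token.
        self.tokens.extend(text.split())

    def visible(self) -> str:
        """Return what the model could still 'see'."""
        return " ".join(self.tokens)


ctx = RollingContext(max_tokens=8)
ctx.add("My name is Dave and I ordered coffee")
ctx.add("What is my name")
print(ctx.visible())  # the earliest words, including "Dave", are gone
```

With an 8-token window, the second message pushes the start of the first one out, so the name has to be repeated before the question can be answered.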

The Elephant Transformation: When Context Became an Ocean

The quantum leap to 200,000-1,000,000 token windows (Claude 3.5 Sonnet and Gemini 1.5 Pro respectively) transformed AI from goldfish to elephant. Suddenly, entire technical manuals, financial reports, or codebases could be processed in single interactions. This enables:

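```python
# Example of new analysis capabilities
# (illustrative pseudocode: load_pdf and ai_analyze are hypothetical helpers, not a real API)
report = load_pdf("Q3_financials.pdf")
query = "Identify primary revenue drivers in European markets"
analysis = ai_analyze(report, query)  # Processes a 200+ page document in a single request
```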

The implications are profound: a first pass of legal contract review can shrink from hours to seconds, developers can troubleshoot entire code repositories through conversation, and research synthesis can happen at a much larger scale.

The Muddy Middle Paradox: When More Isn't Always Better

Surprisingly, massive context introduces new challenges. Research shows that model accuracy degrades on lengthy inputs, an effect this article calls the "muddy middle" and the research literature usually calls "lost in the middle." Like humans skimming a 500-page photo album, AI attention falters midway through massive inputs:

"Performance peaks at the beginning and end of long contexts, but crucial details buried in the middle often get overlooked," explains Dr. Elena Torres, NLP researcher at Stanford. "It's not a memory limit—it's an attention allocation challenge."

This phenomenon has forced engineers to develop sophisticated attention mechanisms and hierarchical processing techniques to combat information decay.
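One simple mitigation works at the prompt level rather than inside the model: order the input so the most important passages sit at the beginning and end, where performance peaks. The sketch below is an illustration under that assumption, not a technique the article attributes to any specific system; `reorder_for_edges` is a hypothetical helper and expects passages already ranked by relevance.

```python
def reorder_for_edges(chunks_by_relevance: list[str]) -> list[str]:
    """Place the most relevant chunks at the edges and the least relevant in the middle.

    `chunks_by_relevance` must be sorted with the most relevant chunk first.
    """
    front, back = [], []
    for i, chunk in enumerate(chunks_by_relevance):
        # Alternate: ranks 1, 3, 5... fill the front; ranks 2, 4, 6... fill the back.
        if i % 2 == 0:
            front.append(chunk)
        else:
            back.append(chunk)
    # Reverse the back half so the second most relevant chunk ends up last.
    return front + back[::-1]


ranked = ["A", "B", "C", "D", "E"]  # "A" is most relevant, "E" least
print(reorder_for_edges(ranked))  # ['A', 'C', 'E', 'D', 'B']
```

The least relevant passage ("E") lands in the middle, which is the position the model is most likely to overlook anyway.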

The Multimodal Leap: AI Grows Eyes and Ears

The latest evolution transcends text. Multimodal systems like GPT-4o and Gemini process visual, auditory, and textual context simultaneously:

- Visual context: Analyzing fridge contents to suggest recipes

- Audio context: Generating meeting minutes from raw recordings

- Spatial context: Diagnosing golf swings via video analysis

This represents a fundamental shift from linguistic to situational understanding. As Google's AI lead noted: "When an AI sees your wilting plant rather than just reading a description, it moves from processing data to interpreting reality."

The Road to Contextual Mastery

We stand at an inflection point where context isn't just expanding—it's layering. The next frontier involves:

- Retrieval-Augmented Generation (RAG) systems that dynamically pull relevant knowledge

- Attention optimization to solve the "muddy middle" problem

- Cross-modal understanding where sight, sound and text create holistic context
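The retrieval step in RAG can be sketched in a few lines. This toy version assumes bag-of-words cosine similarity in place of the learned embeddings and vector stores that production systems typically use, and `retrieve` and `build_prompt` are hypothetical names. It only shows the idea of pulling the relevant passage into the prompt instead of sending everything.

```python
import math
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a stand-in for a real embedding."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Assemble a prompt containing only the retrieved context."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


docs = [
    "European revenue grew on strong software subscriptions",
    "The office coffee machine was replaced in March",
    "Hiring slowed in the third quarter",
]
print(build_prompt("What drove European revenue", docs))
```

Only the revenue passage reaches the model here, which keeps the prompt short and sidesteps the long-context problems described earlier.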

These advances promise AI that doesn't just recall information but understands why Dave's "computron" coffee order was hilarious—and maybe even laughs with you.

Source: The Product Brew
