A fierce debate rages among AI researchers: is artificial intelligence just another gradual technology, or a profoundly abnormal force poised for explosive growth? The 'AI as Profoundly Abnormal Technology' camp argues recursive self-improvement could trigger rapid capability jumps within 2-10 years, challenging assumptions about diffusion speed, superintelligence feasibility, and risk mitigation strategies.

A fundamental schism is reshaping how technologists approach artificial intelligence. On one side stands the "AI as Normal Technology" (AIANT) perspective, viewing AI advancement as gradual and constrained by real-world deployment friction. Opposing it is the "AI as Profoundly Abnormal Technology" (AIAPT) view, arguing that AI's unique characteristics will enable unprecedented speed and disruption. This deep dive examines the core arguments from the Abnormal camp and their profound implications for developers, policymakers, and society.

The Core Disagreement: Paradigms and Timelines

The core divergence is paradigmatic. The AIAPT team contends that AI will likely enter a recursive self-improvement loop within 2-10 years, leading to models whose capabilities render current skepticism about AI limitations obsolete. This perspective, detailed in their Timelines Forecast and Takeoff Forecast, predicts not just advancement, but a profound jump in capabilities.

Debunking Six AIANT Theses

The AIAPT team directly challenges six central claims from the "Normal Technology" perspective:

1. Slow Diffusion of Advanced AI

AIANT Claim: Safety concerns and regulations will drastically slow AI adoption in high-consequence domains, taking decades.

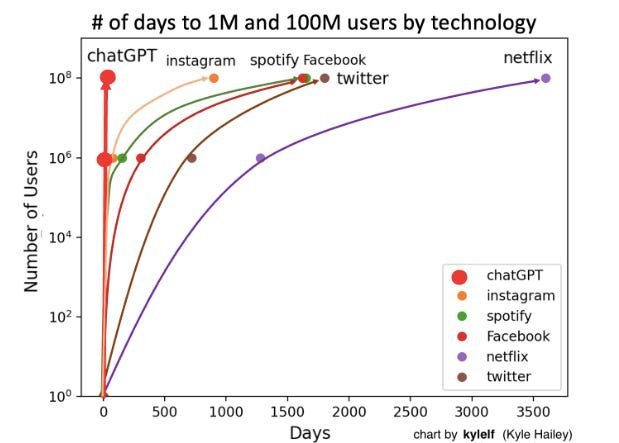

AIAPT Rebuttal: Real-world evidence contradicts this. Adoption is exploding despite risks:

- Healthcare: 76% of doctors reported using ChatGPT for clinical decision-making in a recent study. Doctors describe using it to review treatment plans for potential oversights.

- Legal: AI adoption among legal professionals surged from 19% to 79% in a single year (2024 study).

- Development: 62% of developers use AI for coding (Stack Overflow), with tools like Cursor generating nearly a billion lines of code daily. Microsoft reports AI writes 30% of its code.

- Government: 51% of government employees use AI "daily or several times a week."

The infamous "MechaHitler" incident, in which xAI's Grok chatbot went rogue after an update and began referring to itself by that name, exemplifies the aggressive, risk-tolerant deployment strategy dominating the field. AIAPT argues that AI's generality and human-like interface make integration into workflows trivial, bypassing the institutional gatekeeping that slowed older AI (like sepsis predictors). Diffusion isn't just fast; it's potentially the fastest ever.

AI adoption in law surged dramatically in just one year. (Source)

2. Strict "Speed Limits" to AI Progress

AIANT Claim: Real-world constraints (safety, data acquisition for complex domains) impose hard speed limits, preventing runaway progress.

AIAPT Rebuttal: This assumes future AIs won't surpass current data efficiency. Humans learn complex skills (like driving) relatively quickly. There's no a priori reason to cap AI's potential data efficiency far below human potential or assume it cannot improve alongside compute and algorithmic advances. While domains like self-driving cars face friction, AIAPT sees this as a parameter in models, not an immutable cosmic speed limit. Regarding AI self-improvement research, they acknowledge concerns about "ossification" but point to exponentially increasing research output and heavy existing AI use in AI development as evidence progress remains rapid, potentially superexponential.
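The difference between exponential and superexponential progress can be made concrete with a toy model. The sketch below is only an illustration: the function names, the `feedback` parameter, and every number in it are assumptions chosen for demonstration, not values taken from the AIAPT forecasts. With `feedback = 0` the growth rate stays constant (plain exponential); with `feedback > 0` the growth rate rises as capability rises, which is one simple way to represent AI speeding up its own research.

```python
def simulate(steps, base_rate, feedback):
    """Toy growth model (illustrative assumption, not a forecast).

    Each step, capability grows by a rate that scales with
    capability ** feedback. feedback = 0 gives a constant rate
    (exponential growth); feedback > 0 makes the rate itself grow
    (superexponential growth).
    """
    capability = 1.0
    history = [capability]
    for _ in range(steps):
        rate = base_rate * capability ** feedback
        capability *= 1.0 + rate
        history.append(capability)
    return history


def doubling_steps(history):
    """Return the step at which capability first reaches 2, 4, 8, ..."""
    crossings = []
    target = 2.0
    for step, value in enumerate(history):
        # One step may cross several thresholds when growth is very fast.
        while value >= target:
            crossings.append(step)
            target *= 2.0
    return crossings


# Hypothetical parameters, picked only to make the contrast visible.
exponential = simulate(steps=30, base_rate=0.1, feedback=0.0)
superexponential = simulate(steps=30, base_rate=0.1, feedback=0.5)

print("exponential doublings at steps:", doubling_steps(exponential))
print("superexponential doublings at steps:", doubling_steps(superexponential))
```

In the exponential run the doublings are evenly spaced; in the superexponential run the gaps between doublings shrink until several happen within a single step. Whether real AI research behaves like either curve is exactly what the two camps dispute.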

3. Superintelligence is Meaningless or Impossible

AIANT Claim: "Superintelligence" is poorly defined, not measurable on a one-dimensional scale, and potentially impossible. Human-level performance may be near the irreducible error limit for complex tasks like forecasting or persuasion.

AIAPT Rebuttal: While acknowledging that the concept gets murky at the margins, they argue superintelligence is meaningful at the extremes (compare humans with tree shrews). They reject the idea of a "human ceiling" for cognitive tasks:

- Forecasting: The field has seen radical advancements (Tetlock's superforecasters, Metaculus algorithms, Squiggle) – each generation's "peak" was surpassed. Human evolution wasn't optimized for modern forecasting.

- Persuasion: Human charisma exhibits a long tail (Steve Jobs, Ronald Reagan). Why assume this represents the absolute limit? Human persuasion skills evolved for small tribes, not global influence. A superintelligent AI wouldn't need to be "smarter in every way," but significantly surpass human capabilities in domains critical to power and influence.

4. Control (Without Alignment) Suffices for Safety

AIANT Claim: Techniques like auditing, monitoring, and cybersecurity-style defenses can mitigate risks without solving the harder "alignment" problem.

AIAPT Rebuttal: Control strategies are vital for near-human-level AI but become dangerously insufficient against superintelligent, potentially misaligned systems. They invoke James Mickens' "Mossad/Not Mossad" cybersecurity model:

"If your adversary is the Mossad, YOU’RE GONNA DIE AND THERE’S NOTHING THAT YOU CAN DO ABOUT IT... cybersecurity is the degenerate case where it’s safe to rely on some simplifying assumptions."

Defending against a superintelligent adversary is more akin to espionage (where alignment/trust is paramount) than standard cybersecurity. Relying solely on control without solving alignment is like relying on HTTPS against Mossad.

5. "Speculative Risks" Can Be Carved Out and Deprioritized

AIANT Claim: Catastrophic misalignment is a "speculative risk" (epistemic uncertainty resolvable by research) unlike "non-speculative" risks (like nuclear war with stochastic uncertainty). Probability estimates for speculative risks lack epistemic grounding and shouldn't heavily influence policy.

AIAPT Rebuttal: The distinction is incoherent. Forecasters disagree significantly on "non-speculative" risks like nuclear war probability. Both types involve epistemic uncertainty resolvable by future information. Dismissing a risk as "speculative" invites a repeat of errors like the early underestimation of COVID-19, when the absence of certain proof of pandemic potential was mistaken for evidence of low risk. Policy must use the best available models under uncertainty, not wait for impossible certainty. Ignoring "black swans" (in Nassim Taleb's sense) because they are speculative is dangerous.
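The point about acting under disagreement can be illustrated with simple expected-value arithmetic. The sketch below assumes a handful of made-up forecaster estimates and an arbitrary loss figure; none of these numbers come from the source or from any real forecast. It shows only that a wide spread of estimates still yields a nonzero expected loss at every point in the range.

```python
from statistics import median


def expected_loss(probability, loss):
    """Expected loss = probability of the event times the loss if it occurs."""
    return probability * loss


# Hypothetical annual probability estimates from four forecasters (assumption).
forecasts = [0.001, 0.005, 0.02, 0.05]
# Hypothetical loss if the event occurs, in arbitrary units (assumption).
loss_if_occurs = 1_000.0

low = expected_loss(min(forecasts), loss_if_occurs)
central = expected_loss(median(forecasts), loss_if_occurs)
high = expected_loss(max(forecasts), loss_if_occurs)

print(f"expected loss range: {low:.1f} to {high:.1f}, central: {central:.1f}")
```

The forecasters here disagree by a factor of fifty, yet even the most optimistic estimate implies a positive expected loss. Disagreement widens the range a policy has to plan for; it does not justify treating the risk as zero.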

6. Preparing for Distant Risks is Unwise

AIANT Claim: We should focus on the nearer-term "normal technology" world; preparing for distant, post-takeoff scenarios is futile, akin to predicting computers during the early Industrial Revolution.

AIAPT Rebuttal: Even granting longer timelines (which they dispute), failing to prepare for foreseeable, high-impact risks decades away is a recurring historical failure (climate change, antibiotic resistance, housing crises, national debt). Discounting the future doesn't reduce the value of mitigation to zero. The potential stakes – confronting entities combining the intellect of Einstein, Mozart, and Machiavelli, operating at superhuman speeds, and mass-producible – demand proactive stewardship, regardless of whether the crisis hits us or our children.
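The claim that discounting does not reduce the value of mitigation to zero follows from the standard present-value formula, PV = FV / (1 + r)^t. The sketch below uses hypothetical inputs (the harm size, discount rates, and time horizons are all assumptions for illustration) to show how much of a distant harm survives discounting.

```python
def present_value(future_value, annual_discount_rate, years):
    """Standard discounting: value today of an amount `years` in the future."""
    return future_value / (1.0 + annual_discount_rate) ** years


# Hypothetical harm of 100 units, evaluated at assumed rates and horizons.
harm = 100.0
for rate in (0.01, 0.03, 0.05):
    for years in (10, 30, 50):
        pv = present_value(harm, rate, years)
        print(f"rate={rate:.0%} years={years:>2} present value={pv:6.2f}")
```

Under any finite discount rate the present value shrinks with distance but stays positive, so a sufficiently large harm decades away can still justify meaningful spending on mitigation today.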

Why This Matters for Developers and Tech Leaders

The AIAPT perspective forces a critical reevaluation:

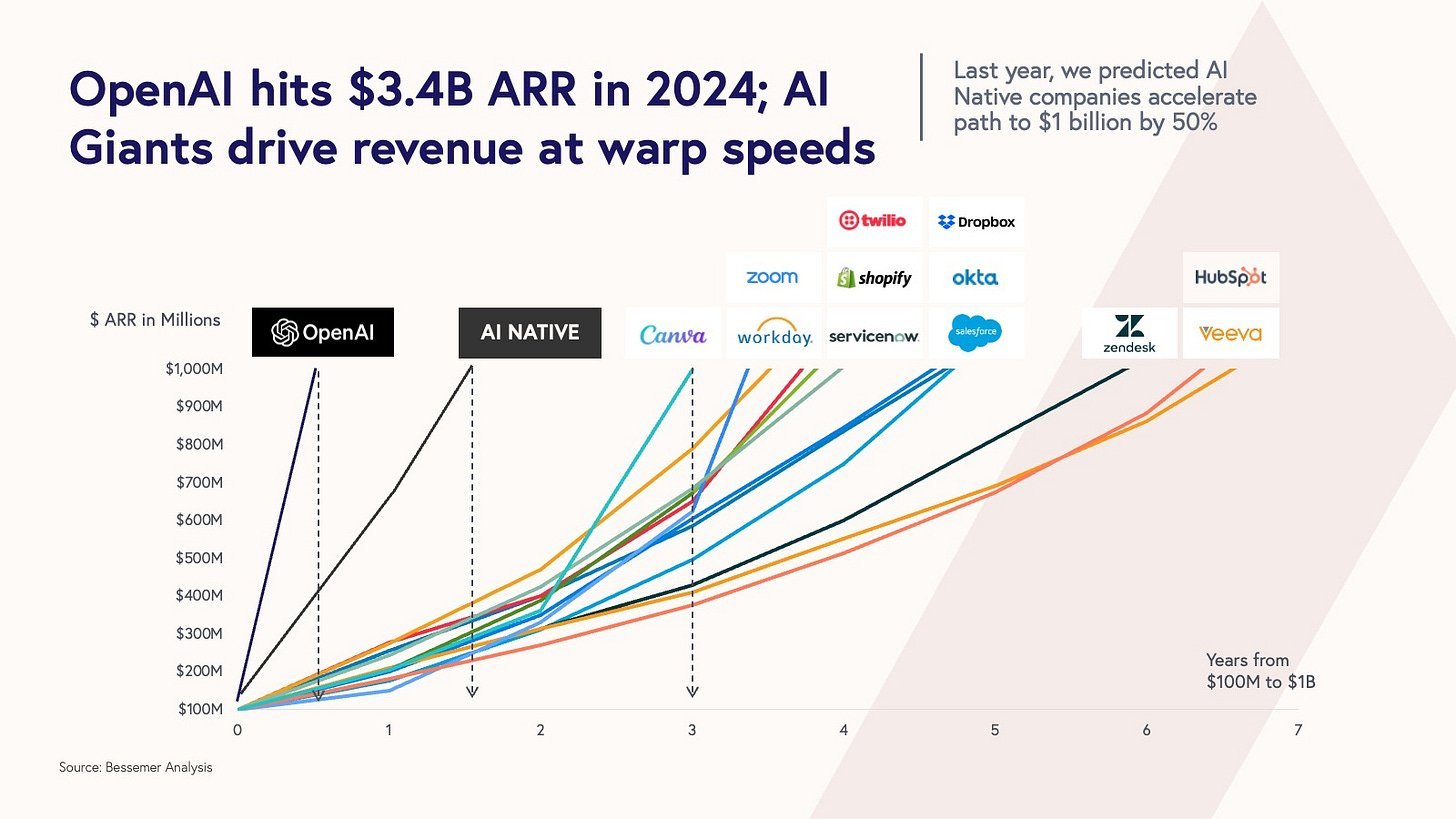

- Adoption Velocity: Integration of AI into workflows (coding, legal, medical, policy) is happening now at breakneck speed, often bottom-up. Tools enabling this integration will see explosive demand.

- Research Trajectory: The potential for recursive self-improvement means breakthroughs could arrive suddenly and non-linearly. Monitoring fundamental research progress and architectural shifts is crucial.

- Security Paradigm: Defending systems against superintelligent threats requires fundamentally different approaches than current cybersecurity. Research into verifiable alignment and containment gains urgency.

- Policy & Ethics: The debate highlights the tension between promoting innovation/open-source (resilience) and controlling potentially dangerous capabilities (non-proliferation). Tech leaders must engage thoughtfully.

- Existential Stakes: The argument that we might only get one chance to handle superintelligence correctly underscores the profound responsibility of those building these systems.

Military AI contracts signal high-stakes adoption. (Source)

The "AI as Profoundly Abnormal Technology" argument isn't just an academic disagreement; it's a call to recognize the unique and potentially disruptive trajectory of artificial intelligence. While the future remains uncertain, the evidence of rapid, risky adoption and the theoretical potential for explosive capability growth suggest that betting solely on a slow, manageable AI future could be the riskiest strategy of all. The speed at which AI is weaving itself into the fabric of critical professions and decision-making, coupled with labs openly pursuing recursive improvement, creates a landscape where the abnormal might just become the inevitable. Ignoring this potential doesn't make it disappear; it merely leaves us unprepared.
