One trend this year may well be experimentation with agent orchestration and long-running agents – which is already underway. Also: Claude Code bans OpenCode while Codex embraces it, and more.

The software development landscape is witnessing a significant shift in how AI agents are being deployed and managed. While early AI coding assistants focused on single-shot code generation, the next frontier involves agents that can operate for extended periods and coordinate complex workflows. This evolution is already visible in tools like Cursor, whose agents can carry a task through multiple steps instead of answering a single prompt.

The Rise of Long-Running Agents

Traditional AI coding tools typically operate in a request-response pattern: you ask for a function, they generate it, and the session ends. Long-running agents break this pattern by maintaining state and context across multiple interactions. Think of it as the difference between asking a colleague for a single code snippet versus having them work alongside you for hours, continuously understanding the project's evolution.

Cursor, the AI-powered code editor built on VS Code, has been experimenting with this concept. Its agent mode doesn't just generate code; it can run tests, analyze errors, and iterate on solutions without losing the thread of the conversation. This requires carrying a persistent context that grows with each interaction while still fitting inside the model's fixed context window, which is computationally expensive but increasingly feasible with better context management techniques.
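The run-test-iterate cycle can be sketched as a small loop in which each failure is appended to a transcript that the next attempt sees. This is a minimal illustration, not Cursor's implementation: `propose` and `run_tests` are hypothetical stand-ins for a model call and a test runner, and the transcript is a plain list of strings rather than a real context window.

```python
from typing import Callable, List, Optional, Tuple


def agent_loop(
    propose: Callable[[List[str]], str],
    run_tests: Callable[[str], Optional[str]],
    max_iterations: int = 5,
) -> Tuple[Optional[str], List[str]]:
    """Generate, test, and retry, feeding each failure back in as context.

    Returns the passing candidate (or None if every attempt failed) and the transcript.
    """
    transcript: List[str] = []
    for _ in range(max_iterations):
        candidate = propose(transcript)       # the "model" sees everything so far
        error = run_tests(candidate)          # None means the tests passed
        transcript.append(f"attempt: {candidate}")
        if error is None:
            return candidate, transcript
        transcript.append(f"error: {error}")  # keep the thread for the next attempt
    return None, transcript


# Hypothetical stand-ins for a model and a test runner.
def fake_model(transcript: List[str]) -> str:
    if any(line.startswith("error:") for line in transcript):
        return "def add(a, b): return a + b"
    return "def add(a, b): return a - b"


def fake_tests(code: str) -> Optional[str]:
    namespace: dict = {}
    exec(code, namespace)  # acceptable for a toy; never exec untrusted model output like this
    got = namespace["add"](2, 3)
    return None if got == 5 else f"add(2, 3) returned {got}"


if __name__ == "__main__":
    final, log = agent_loop(fake_model, fake_tests)
    print(final)
    print(log)
```

Note that the transcript grows with every failed attempt; that growth is exactly what makes context management the hard part of long-running agents.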

The technical challenge here is substantial. Each agent needs to:

- Maintain conversation history without hitting token limits

- Track file changes and project state across sessions

- Handle interruptions and resume work seamlessly

- Coordinate with other tools in the development environment
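Two of these requirements, tracking project state and resuming after an interruption, can be illustrated with a small checkpoint file. The sketch below assumes a JSON file on local disk and SHA-256 content hashes; the class name and file layout are hypothetical, and a real tool would also need to checkpoint the conversation context itself.

```python
import hashlib
import json
from pathlib import Path
from typing import List


class SessionCheckpoint:
    """Hypothetical on-disk checkpoint for a long-running agent session.

    Records which plan steps are finished and a content hash for each tracked
    file, so a resumed session can skip completed work and notice edits made
    while it was paused.
    """

    def __init__(self, path: Path):
        self.path = path
        self.state = {"completed_steps": [], "file_hashes": {}}
        if path.exists():  # resuming: reload what was saved before the interruption
            self.state = json.loads(path.read_text())

    @staticmethod
    def _hash(file: Path) -> str:
        return hashlib.sha256(file.read_bytes()).hexdigest()

    def record_file(self, file: Path) -> None:
        # Held in memory until the next mark_done() writes the checkpoint.
        self.state["file_hashes"][str(file)] = self._hash(file)

    def mark_done(self, step: str) -> None:
        self.state["completed_steps"].append(step)
        self.path.write_text(json.dumps(self.state))  # persist after every step

    def pending(self, plan: List[str]) -> List[str]:
        return [s for s in plan if s not in self.state["completed_steps"]]

    def changed_files(self) -> List[str]:
        """Tracked files whose content changed (or that were deleted) since recorded."""
        return [
            name for name, old in self.state["file_hashes"].items()
            if not Path(name).exists() or self._hash(Path(name)) != old
        ]


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as d:
        src = Path(d) / "app.py"
        src.write_text("print('v1')\n")
        session = SessionCheckpoint(Path(d) / "session.json")
        session.record_file(src)
        session.mark_done("write code")
        # Simulate an interruption: a fresh object reloads state from disk.
        resumed = SessionCheckpoint(Path(d) / "session.json")
        src.write_text("print('v2')\n")  # someone edited the file meanwhile
        print(resumed.pending(["write code", "run tests"]))
        print(resumed.changed_files())
```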

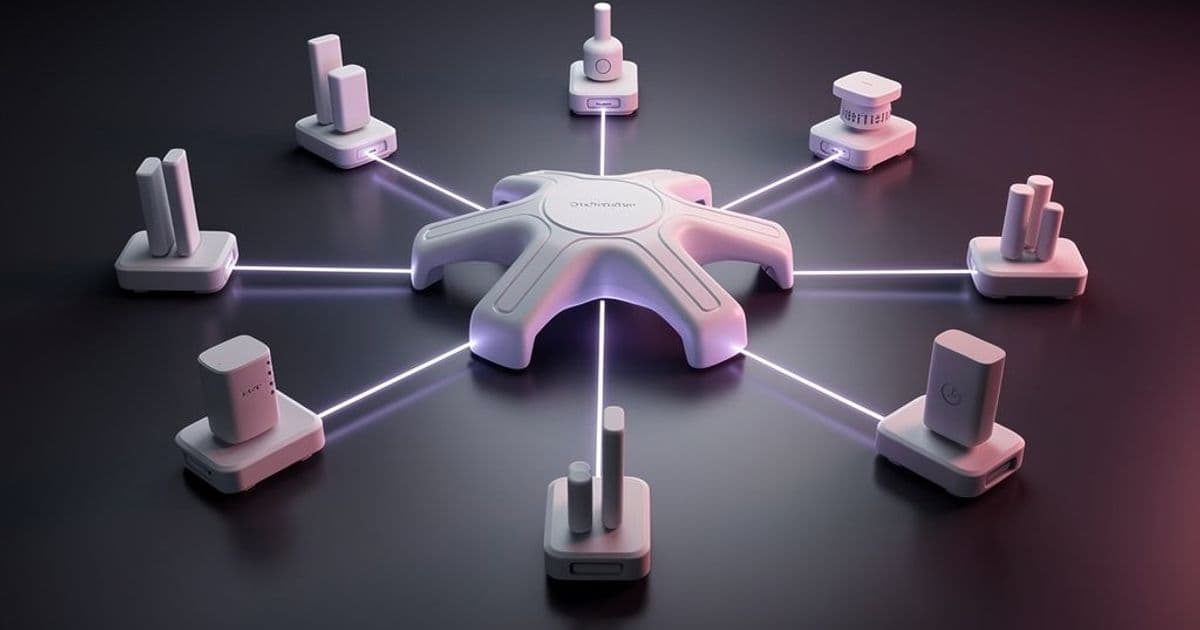

Orchestrating Multiple Agents

The next level of complexity involves coordinating multiple specialized agents. Instead of one general-purpose agent, you might have:

- A code generation agent

- A testing agent

- A deployment agent

- A monitoring agent

Orchestrating these requires a supervisor pattern where a central controller distributes tasks and aggregates results. This is similar to microservices architecture but applied to AI agents. The supervisor needs to understand each agent's capabilities, manage dependencies, and handle failures gracefully.
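A supervisor of this kind reduces to three jobs: route each task to an agent with the right capability, run a task only once its dependencies have finished, and retry or surface failures. The sketch below is a hypothetical, single-threaded illustration; the agents are plain Python callables standing in for model-backed agents, and `Task` and `Supervisor` are invented names, not any framework's API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Task:
    name: str
    capability: str                 # the kind of agent that can handle it
    depends_on: Tuple[str, ...] = ()


Agent = Callable[[Task, Dict[str, str]], str]


class Supervisor:
    """Hypothetical central controller: routes tasks by capability, respects
    dependencies, retries failed tasks, and aggregates the results."""

    def __init__(self, agents: Dict[str, Agent], max_retries: int = 1):
        self.agents = agents            # capability -> agent
        self.max_retries = max_retries

    def run(self, tasks: List[Task]) -> Dict[str, str]:
        results: Dict[str, str] = {}
        remaining = list(tasks)
        while remaining:
            ready = [t for t in remaining if all(d in results for d in t.depends_on)]
            if not ready:
                names = ", ".join(t.name for t in remaining)
                raise RuntimeError(f"unsatisfiable dependencies: {names}")
            for task in ready:
                results[task.name] = self._dispatch(task, results)
                remaining.remove(task)
        return results

    def _dispatch(self, task: Task, results: Dict[str, str]) -> str:
        agent = self.agents.get(task.capability)
        if agent is None:
            raise LookupError(f"no agent registered for {task.capability!r}")
        last_error = None
        for _ in range(self.max_retries + 1):
            try:
                return agent(task, results)   # agents see earlier results as context
            except Exception as exc:          # retry, then surface the failure
                last_error = exc
        raise RuntimeError(f"task {task.name!r} failed after retries") from last_error
```

Tasks whose dependencies can never complete are reported rather than silently skipped. A production orchestrator would also run independent tasks concurrently and persist results so a crashed supervisor could resume.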

Early examples of this pattern are emerging in CI/CD pipelines. Instead of a single AI assistant, teams are experimenting with agent chains where one agent writes code, another reviews it, a third runs security scans, and a fourth handles deployment. The orchestration layer becomes critical for managing the workflow and ensuring agents don't step on each other's toes.
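The write-review-scan-deploy chain can be sketched as a sequence of stages where each stage either passes its artifact on or rejects it, and a rejection stops the chain before later agents act. The stage functions here are hypothetical stubs (the "security scan" is a single string check) standing in for model-backed agents.

```python
from typing import Callable, List, NamedTuple, Tuple


class StageResult(NamedTuple):
    ok: bool
    artifact: str
    note: str = ""


Stage = Callable[[str], StageResult]


def run_chain(stages: List[Tuple[str, Stage]], initial: str) -> Tuple[bool, str, List[str]]:
    """Feed each stage's artifact to the next; halt at the first rejection."""
    artifact, log = initial, []
    for name, stage in stages:
        result = stage(artifact)
        log.append(f"{name}: {'ok' if result.ok else 'rejected'} {result.note}".rstrip())
        if not result.ok:
            return False, artifact, log  # later stages (e.g. deploy) never run
        artifact = result.artifact
    return True, artifact, log


# Hypothetical stub agents.
def write(spec: str) -> StageResult:
    return StageResult(True, f"# {spec}\nAPI_KEY = 'hardcoded'")


def review(code: str) -> StageResult:
    return StageResult(True, code, "style looks fine")


def security_scan(code: str) -> StageResult:
    leaked = "API_KEY = '" in code
    return StageResult(not leaked, code, "hardcoded secret" if leaked else "")


def deploy(code: str) -> StageResult:
    return StageResult(True, "deployed")


if __name__ == "__main__":
    ok, _, log = run_chain(
        [("write", write), ("review", review), ("scan", security_scan), ("deploy", deploy)],
        "add billing endpoint",
    )
    print(ok, log)
```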

Technical Implementation Patterns

Several patterns are emerging for implementing these systems:

1. Context-Aware Session Management: Tools are adopting techniques like:

- Semantic search over conversation history

- File dependency graphs to understand project structure

- Incremental context updates rather than full replays
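A file dependency graph is what makes incremental updates possible: when one file changes, only the files that depend on it need to be re-read into context. The sketch below assumes a Python project and uses the standard `ast` module to extract absolute imports; relative imports (`from . import x`) and dotted package names are deliberately ignored to keep it short, and the function names are hypothetical.

```python
import ast
from collections import defaultdict, deque
from typing import Dict, Set


def build_import_graph(sources: Dict[str, str]) -> Dict[str, Set[str]]:
    """Map each module name to the project modules it imports."""
    graph: Dict[str, Set[str]] = {}
    for module, code in sources.items():
        deps: Set[str] = set()
        for node in ast.walk(ast.parse(code)):
            if isinstance(node, ast.Import):
                deps.update(alias.name for alias in node.names)
            elif isinstance(node, ast.ImportFrom) and node.module:
                deps.add(node.module)
        graph[module] = {d for d in deps if d in sources}  # drop stdlib/third-party
    return graph


def affected_by(changed: str, graph: Dict[str, Set[str]]) -> Set[str]:
    """The changed module plus everything that transitively imports it:
    the files whose cached context may now be stale."""
    reverse: Dict[str, Set[str]] = defaultdict(set)
    for module, deps in graph.items():
        for dep in deps:
            reverse[dep].add(module)
    seen, queue = {changed}, deque([changed])
    while queue:
        for dependent in reverse[queue.popleft()]:
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return seen


if __name__ == "__main__":
    project = {
        "db": "import sqlite3\n",
        "models": "from db import connect\n",
        "api": "import models\nimport json\n",
        "cli": "print('hi')\n",
    }
    print(affected_by("db", build_import_graph(project)))
```

Here an edit to `db` would refresh `db`, `models`, and `api` while leaving `cli` in context untouched.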

2. Agent Communication Protocols: Standardized message formats are being developed, similar to how microservices use REST or gRPC. The Model Context Protocol (MCP) is one such standard; it defines how AI applications and agents connect to servers that expose tools and contextual data through a common interface.
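MCP messages are JSON-RPC 2.0, and a tool is invoked with a `tools/call` request that names the tool and passes its arguments. The helper below only builds and parses those messages; it omits the transport (stdio or HTTP) and the initialization handshake a real client performs first. The tool name `run_tests` and its arguments are hypothetical, and the response in the demo is an illustrative text result, not output from a real server.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC ids should be unique per request on a connection


def tools_call_request(tool: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


def parse_response(raw: str) -> dict:
    """Return the `result` member, raising if the server sent a JSON-RPC error."""
    message = json.loads(raw)
    if "error" in message:
        err = message["error"]
        raise RuntimeError(f"JSON-RPC error {err.get('code')}: {err.get('message')}")
    return message["result"]


if __name__ == "__main__":
    print(tools_call_request("run_tests", {"path": "tests/"}))
    example = '{"jsonrpc": "2.0", "id": 1, "result": {"content": [{"type": "text", "text": "12 passed"}]}}'
    print(parse_response(example)["content"][0]["text"])
```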

3. State Persistence Strategies

- Database-backed session storage for long-term memory

- Vector embeddings for semantic search across past conversations

- Differential updates to avoid reprocessing entire histories
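Database-backed storage and differential updates fit together well if messages are stored as an append-only log with increasing ids: a reader that remembers the last id it processed fetches only what is new instead of replaying the whole history. The sketch below uses the standard `sqlite3` module with an in-memory database by default; the table layout and class name are hypothetical.

```python
import sqlite3
from typing import List, Tuple


class SessionStore:
    """Hypothetical append-only message log for agent sessions, backed by SQLite."""

    def __init__(self, db_path: str = ":memory:"):
        self.conn = sqlite3.connect(db_path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " session TEXT NOT NULL, role TEXT NOT NULL, content TEXT NOT NULL)"
        )

    def append(self, session: str, role: str, content: str) -> int:
        with self.conn:  # commits the insert
            cur = self.conn.execute(
                "INSERT INTO messages (session, role, content) VALUES (?, ?, ?)",
                (session, role, content),
            )
        return cur.lastrowid

    def since(self, session: str, last_seen_id: int = 0) -> List[Tuple[int, str, str]]:
        """Differential read: only messages newer than `last_seen_id`."""
        return self.conn.execute(
            "SELECT id, role, content FROM messages"
            " WHERE session = ? AND id > ? ORDER BY id",
            (session, last_seen_id),
        ).fetchall()


if __name__ == "__main__":
    store = SessionStore()
    store.append("s1", "user", "Add a /health endpoint")
    last = store.append("s1", "assistant", "Added GET /health")
    store.append("s1", "user", "Now add a test for it")
    print(store.since("s1", last))
```

Passing a file path instead of `":memory:"` makes the log survive restarts, which is the long-term memory the list above describes.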

Challenges and Trade-offs

This approach isn't without significant challenges:

Token Management: Long-running sessions quickly consume context windows. Solutions include:

- Summarization techniques that condense past interactions

- Selective context loading based on current task

- External memory systems that retrieve relevant history
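Selective loading and external retrieval combine naturally: keep the most recent turns verbatim, then spend the remaining budget on older messages that look relevant to the current task. The sketch below uses bag-of-words cosine similarity as a crude stand-in for vector embeddings and counts whitespace-separated words as a rough proxy for tokens; both are simplifying assumptions, and summarizing the dropped messages is left out.

```python
import math
import re
from collections import Counter
from typing import List


def _vector(text: str) -> Counter:
    # Bag-of-words term counts: a crude stand-in for a real embedding model.
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def _words(message: str) -> int:
    # Whitespace word count as a rough proxy for tokens.
    return len(message.split())


def build_context(history: List[str], task: str, budget: int, keep_recent: int = 2) -> List[str]:
    """Keep the last `keep_recent` messages verbatim, then fill the remaining
    budget with older messages ranked by similarity to the current task."""
    recent = history[-keep_recent:] if keep_recent > 0 else []
    older = history[: len(history) - len(recent)]
    used = sum(_words(m) for m in recent)
    query = _vector(task)
    scores = {i: _cosine(_vector(m), query) for i, m in enumerate(older)}
    chosen = []
    for i in sorted(scores, key=scores.get, reverse=True):
        if scores[i] > 0 and used + _words(older[i]) <= budget:
            chosen.append(i)
            used += _words(older[i])
    # Restore chronological order so the model sees a coherent timeline.
    return [older[i] for i in sorted(chosen)] + recent
```

Note that the recent messages are always kept, even if they alone exceed the budget; a real system would summarize or truncate them at that point.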

Error Propagation: In multi-agent systems, errors can cascade. A bug in the code generation agent might cause the testing agent to fail, which then breaks the deployment agent. Implementing circuit breakers and validation checkpoints is crucial.
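A circuit breaker stops a failing agent from being called over and over, and a validation checkpoint rejects bad output before the next agent sees it. The sketch below shows both under simple assumptions: the breaker counts consecutive exceptions, and the hypothetical `validate_python` checkpoint only confirms that generated code compiles, which is far weaker than running tests.

```python
import time


class CircuitBreaker:
    """Stop calling a downstream agent after repeated failures.

    After `max_failures` consecutive failures the circuit opens and calls are
    rejected immediately until `reset_after` seconds pass; the next call is a
    trial (half-open): success closes the circuit, failure reopens it.
    """

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after:
            raise RuntimeError("circuit open: downstream agent temporarily disabled")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open, or reopen after a failed trial
            raise
        self.failures = 0
        self.opened_at = None
        return result


def validate_python(code: str) -> str:
    """Checkpoint: reject generated code that does not even compile."""
    compile(code, "<agent output>", "exec")  # raises SyntaxError on broken code
    return code
```

Wrapping the hand-off between agents in `breaker.call(validate_python, output)` means a broken generation stops at the checkpoint, and a persistently broken agent stops being called at all.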

Cost: These systems are expensive to run. Each agent interaction consumes API calls, and long-running sessions multiply this cost. Teams need to balance capability with budget constraints.
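The multiplication comes from resending history: if every turn adds roughly the same number of tokens and the full conversation is sent back to the model each time, total input tokens grow quadratically with the number of turns. The sketch below works through that arithmetic under those assumptions (no prompt caching, no summarization); the per-million-token price is a placeholder, not any provider's actual rate.

```python
def cumulative_input_tokens(turns: int, tokens_per_turn: int, system_prompt: int = 0) -> int:
    """Total input tokens when turn k resends the system prompt plus all k turns so far.

    Sum over k = 1..turns of (system_prompt + k * tokens_per_turn)
      = turns * system_prompt + tokens_per_turn * turns * (turns + 1) / 2
    """
    return turns * system_prompt + tokens_per_turn * turns * (turns + 1) // 2


def cost_usd(tokens: int, usd_per_million: float) -> float:
    return tokens * usd_per_million / 1_000_000


if __name__ == "__main__":
    for n in (10, 100):
        total = cumulative_input_tokens(n, tokens_per_turn=2_000)
        print(n, total, round(cost_usd(total, usd_per_million=3.0), 2))
    # 10 turns  ->    110,000 input tokens
    # 100 turns -> 10,100,000 input tokens: 10x the turns, roughly 92x the tokens
```

This is why summarization and selective loading are cost controls as much as they are context controls.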

Real-World Applications

Several tools and frameworks already implement these patterns:

Cursor's Agent Mode: The editor now allows agents to work across multiple files, run commands, and iterate on solutions. Its approach draws on project-wide context about how different parts of the codebase relate to one another.

GitHub Copilot Workspace: This takes a project-level approach where agents can understand entire repositories and make coordinated changes across multiple files.

Custom Orchestration Platforms: Frameworks such as LangChain and Microsoft's AutoGen provide building blocks for multi-agent systems, though they require significant engineering effort to use effectively.

What's Next

The trend points toward more sophisticated agent orchestration:

Standardization: Expect more protocols and standards for agent communication, reducing the lock-in to specific platforms.

Specialization: Agents will become more specialized for specific tasks (security auditing, performance optimization, documentation generation) rather than general-purpose coding.

Human-in-the-Loop: Rather than full automation, the most effective systems will maintain human oversight, with agents handling routine tasks and escalating complex decisions.
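One way to make "escalating complex decisions" concrete is an explicit routing rule that decides, per proposed action, whether an agent may proceed on its own. The action kinds, thresholds, and self-reported confidence score below are all hypothetical; self-reported confidence can be unreliable, so the hard rules on risky actions carry most of the weight.

```python
from dataclasses import dataclass

# Hypothetical policy values; tune them to your own risk tolerance.
RISKY_ACTIONS = {"deploy_production", "drop_table", "modify_ci_secrets"}
MIN_CONFIDENCE = 0.8
MAX_FILES = 10


@dataclass
class ProposedAction:
    kind: str
    description: str
    confidence: float      # agent's self-reported confidence, 0..1
    files_touched: int = 0


def route(action: ProposedAction) -> str:
    """Return 'auto' for routine work, 'human' when a person must approve."""
    if action.kind in RISKY_ACTIONS:
        return "human"                 # always gated, regardless of confidence
    if action.confidence < MIN_CONFIDENCE:
        return "human"
    if action.files_touched > MAX_FILES:
        return "human"                 # wide-reaching changes get a reviewer
    return "auto"
```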

Integration with Existing Tools: The most successful implementations will integrate seamlessly with existing development workflows rather than requiring wholesale adoption of new platforms.

Getting Started

For teams interested in experimenting with these patterns:

Start Small: Begin with a single long-running agent for a specific task before attempting orchestration.

Focus on Context Management: The biggest technical hurdle is maintaining useful context. Invest in techniques for summarization and retrieval.

Monitor Costs: Set up tracking for API usage and token consumption from day one.
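Tracking can start as a few lines of bookkeeping around each model call: record input and output tokens per agent and compare cumulative spend with a budget. The prices below are placeholders to be replaced with your provider's published rates, and the token counts are assumed to come from the usage figures your API client reports; `UsageTracker` is a hypothetical helper.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class UsageTracker:
    """Per-agent token accounting with a simple budget check."""
    usd_per_m_input: float   # placeholder price per million input tokens
    usd_per_m_output: float  # placeholder price per million output tokens
    budget_usd: float
    usage: Dict[str, List[int]] = field(default_factory=lambda: defaultdict(lambda: [0, 0]))

    def record(self, agent: str, input_tokens: int, output_tokens: int) -> None:
        self.usage[agent][0] += input_tokens
        self.usage[agent][1] += output_tokens

    def spend(self, agent: Optional[str] = None) -> float:
        """Dollar spend for one agent, or for all agents when `agent` is None."""
        rows = [self.usage.get(agent, [0, 0])] if agent is not None else list(self.usage.values())
        inp = sum(r[0] for r in rows)
        out = sum(r[1] for r in rows)
        return (inp * self.usd_per_m_input + out * self.usd_per_m_output) / 1_000_000

    def over_budget(self) -> bool:
        return self.spend() > self.budget_usd
```

Keeping the breakdown per agent makes it easy to see which part of an orchestrated workflow is driving the bill.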

Define Clear Boundaries: Each agent should have a well-defined responsibility to avoid overlap and confusion.
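Boundaries are easier to enforce when they are written down. The sketch below assumes each agent is given glob patterns for the paths it may modify, checks writes against them with the standard `fnmatch` module, and reports paths claimed by more than one agent. The ownership map and function names are hypothetical; note that `fnmatch`'s `*` also matches `/`, so `"src/*"` covers nested files.

```python
from fnmatch import fnmatch
from typing import Dict, List

# Hypothetical ownership map: the paths each agent is allowed to modify.
OWNERSHIP: Dict[str, List[str]] = {
    "codegen": ["src/*"],
    "testing": ["tests/*"],
    "docs": ["docs/*", "README.md"],
}


def may_write(agent: str, path: str, ownership: Dict[str, List[str]] = OWNERSHIP) -> bool:
    """True if `path` matches one of the agent's glob patterns."""
    return any(fnmatch(path, pattern) for pattern in ownership.get(agent, []))


def overlapping_claims(paths: List[str], ownership: Dict[str, List[str]] = OWNERSHIP) -> Dict[str, List[str]]:
    """Paths that more than one agent may write: a sign the boundaries are blurred."""
    claims = {p: [a for a in ownership if may_write(a, p, ownership)] for p in paths}
    return {p: agents for p, agents in claims.items() if len(agents) > 1}
```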

The shift toward long-running, orchestrated agents represents a maturation of AI in software development. While the hype cycle has focused on single-shot code generation, the real value may come from agents that can understand and work within the full context of a project over time. As these systems become more sophisticated, they promise to change not just how we write code, but how we think about the entire software development lifecycle.

