As AI reshapes code generation, the enduring principle of Level of Detail reveals how software craftsmanship evolves from implementation to architectural discernment.

The concept of Level of Detail (LoD) in computer graphics offers more than technical optimization—it provides a fundamental lens for understanding how we navigate complexity in software systems. Originally developed to manage rendering workloads by dynamically adjusting geometric complexity based on viewer proximity, LoD embodies a universal truth: resources should concentrate where perception demands fidelity. This principle now illuminates software engineering's evolution amid generative AI's transformative wave.

Abstraction as Cognitive Rendering

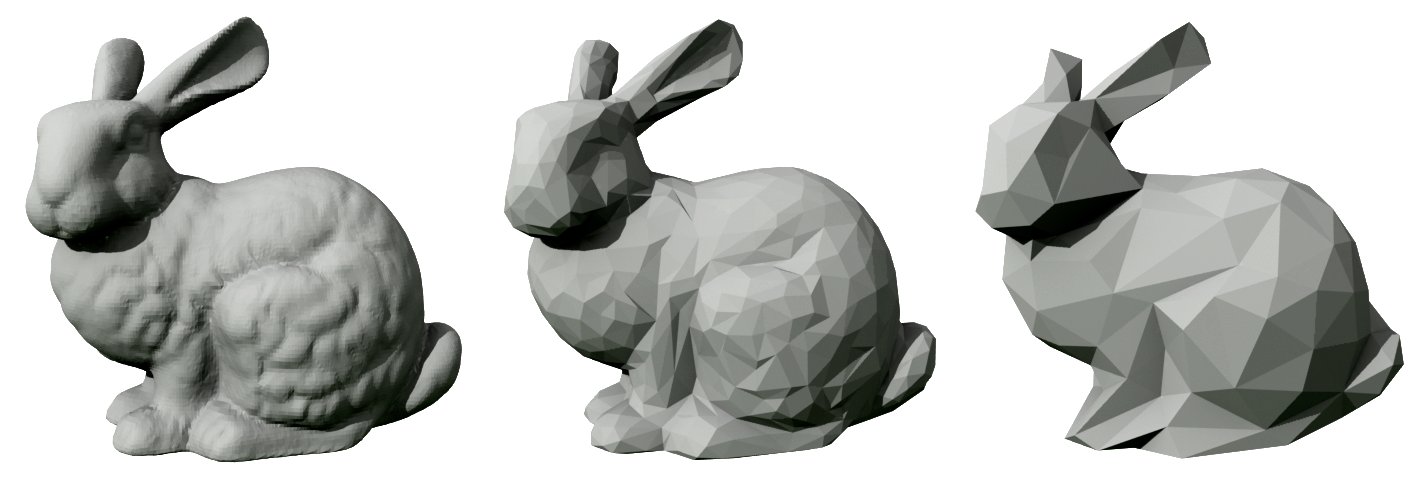

Software development operates through layered mental models. When sketching a "database" box on a whiteboard, engineers invoke a low-polygon abstraction—a silhouette hiding intricate internals like B-trees and buffer pools. Expert practitioners instinctively navigate resolution scales: zooming into implementation details during debugging, then pulling back to assess architectural coherence. The core competency isn't exhaustive system knowledge, but contextual awareness of what resolution each task demands.

Context Windows: The LLM Parallel

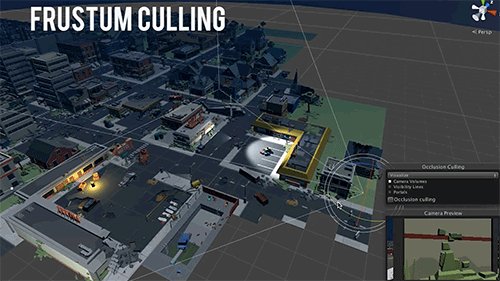

Large language models face strikingly similar constraints. Their context windows function like viewport frustums—too little context yields hallucinated polygons where assumptions fill voids; excessive context drowns signal in noise. Effective LLM collaboration requires carefully calibrated LoD: high-resolution context for active components, mid-fidelity surroundings, and minimalist broader system references. This mirrors how engineers load mental models, yet we rarely articulate it as resolution management.

Frustum culling in action—rendering only what's visible

Frustum culling in action—rendering only what's visible

via Falmouth Games Academy

The Polygon Floodgates Open

Generative AI has shattered previous constraints on code production velocity. As Adam Jacob observed after rebuilding a prototype in three days with AI assistance, some developers now generate 50,000 lines of functional code daily. This torrent prompts radical workflow shifts: principles like DRY (Don't Repeat Yourself) lose relevance when humans no longer manually trace execution paths. Jacob suggests the future belongs to architects defining structural boundaries while AI agents handle implementation.

Yet alternative perspectives emerge from teams like Oxide, where AI eliminates tedium to enable greater rigor. When Rain Paharia generated 20,000 lines and 5,000 doc tests in a day by replicating a hand-crafted pattern, the result wasn't reduced quality but enhanced feasibility for meticulous solutions. The common thread: AI shifts effort from manual implementation toward higher-order judgment.

The Carving Principle

The critical misconception lies in equating code volume with progress. As LoD teaches us, uncontrolled geometric density creates performance-killing overdraw. Similarly, proliferating code without commensurate pruning creates maintenance debt that outweighs generation savings. The emerging challenge becomes justification rather than production: if code generation is nearly free, the cost center shifts to understanding, maintaining—and strategically eliminating.

The Stanford bunny at varying LoD—complexity matched to need

The Stanford bunny at varying LoD—complexity matched to need

Rendering What Matters

Modern graphics pipelines demonstrate this evolution. Despite thousand-fold GPU improvements, photorealism relies not on brute-force rendering but sophisticated culling: frustum elimination, occlusion testing, and texture streaming. Engineers still decide what deserves rendering cycles—they just manage it through dynamic systems rather than manual LOD swaps.

This mirrors software's trajectory. AI loosens the code-generation bottleneck, but intensifies focus on existential questions: What should exist? What provides unique value? What can be discarded? The engineer's role evolves from polygon-pusher to scene composer—using AI both to generate material and identify extraneous elements.

The tools accelerate context-switching: LLMs summarize unfamiliar subsystems into usable mental models, or translate vague requirements into draft implementations. Yet the essential work remains unchanged—determining necessary resolution for each task. Like a game engine prioritizing detail for objects in the player's hands, engineers must discern where to invest finite attention. That discernment, not generative throughput, constitutes the enduring craft.

As rendering pipelines demonstrate, increased computational power doesn't eliminate the need for selective focus—it demands more sophisticated management of that selection. In software's new paradigm, we're not just generating more geometry; we're learning to sculpt it.

Comments

Please log in or register to join the discussion