An experimental project using Claude to decompile the Nintendo 64 game Snowboard Kids 2 reached 75% completion before stalling on complex graphics and math functions.

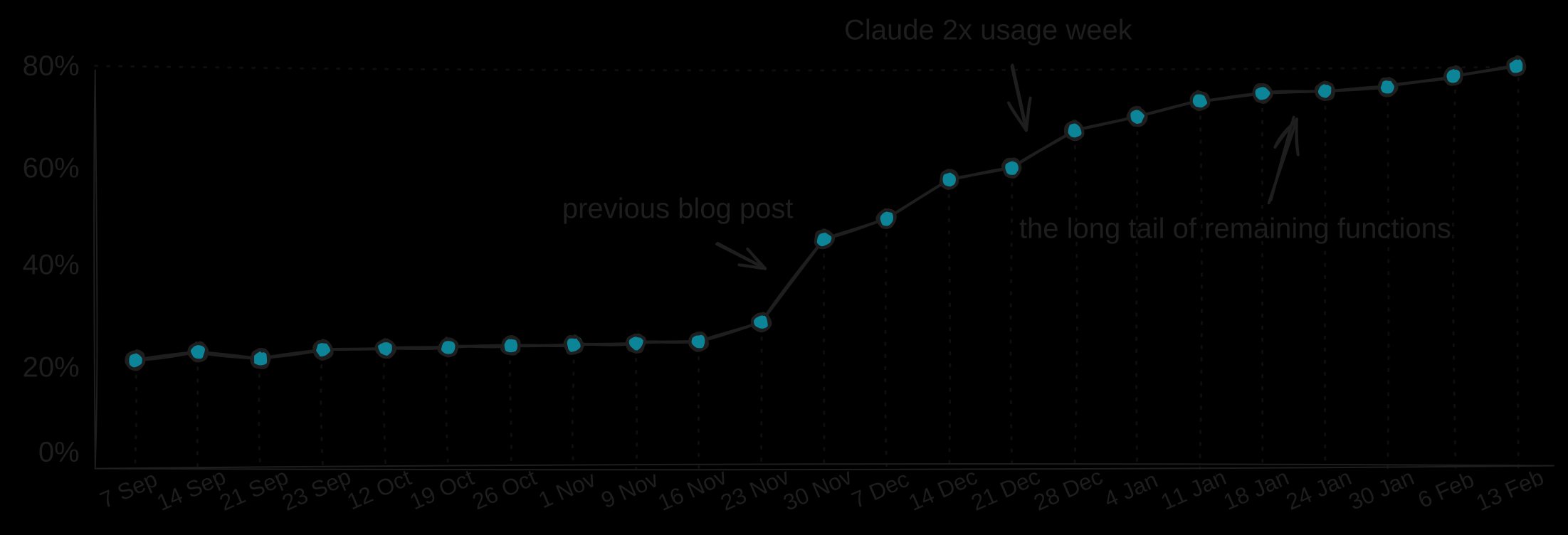

A pioneering effort to automate decompilation of classic Nintendo 64 games using large language models has revealed both the promise and limitations of current AI approaches. Chris Lewis's Snowboard Kids 2 decompilation project saw remarkable early success, with Claude Opus matching functions automatically and rapidly pushing the decompilation from 25% to 58% complete. As the project advanced, however, progress slowed dramatically. Innovative workflow adaptations carried it to 75% completion before it plateaued.

The Similarity Breakthrough

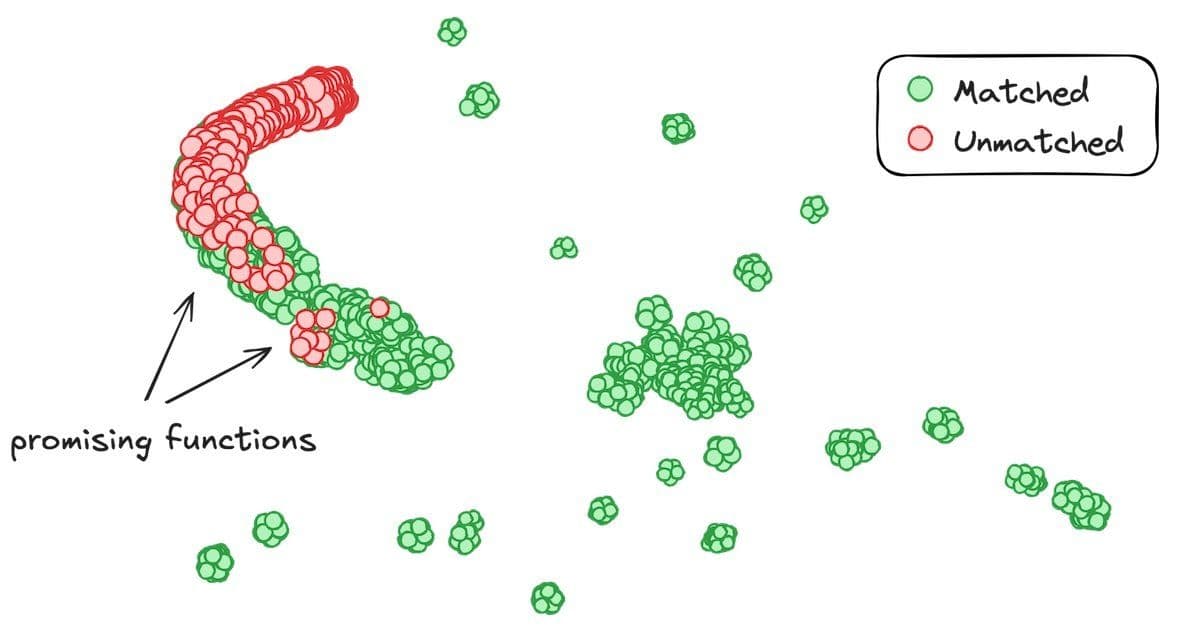

Early prioritization based on function difficulty eventually failed when remaining functions were uniformly complex. The key advancement came through function similarity analysis. By computing embeddings of assembly instructions and using tools like Coddog for precise similarity scoring, Lewis enabled Claude to reference successfully decompiled functions when attacking new targets. This approach leveraged Claude's pattern recognition capabilities far more effectively than raw instruction analysis.

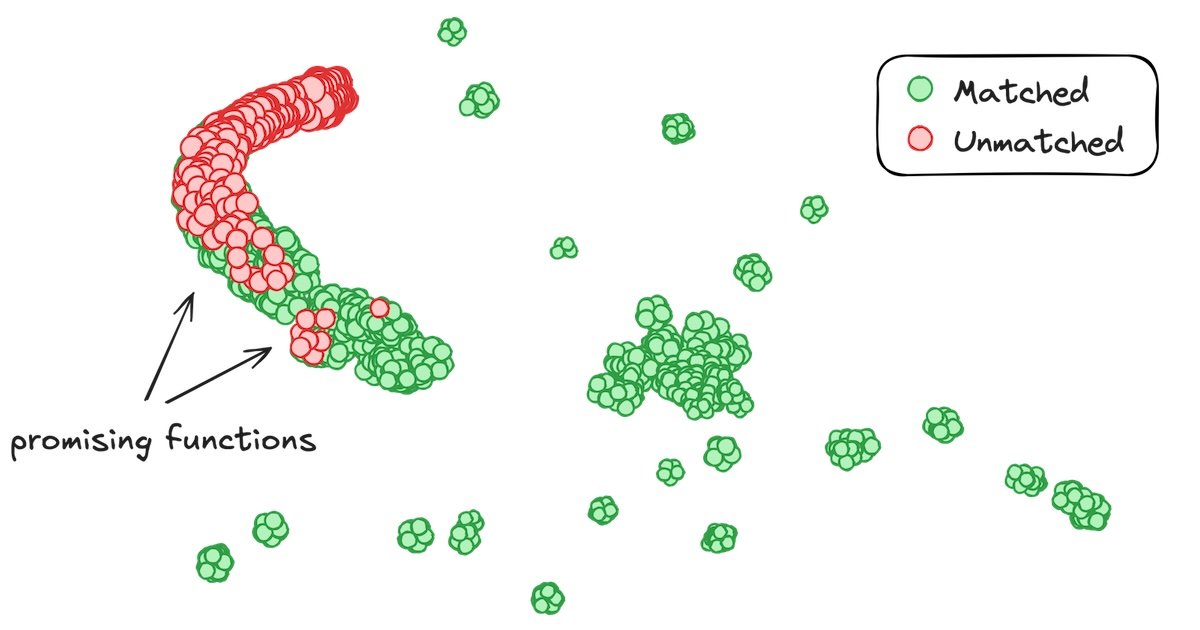

Scatter plot visualization of function embeddings used for similarity matching

Two methods proved complementary: vector embeddings enabled rapid retrieval across thousands of functions, while Coddog's Levenshtein distance calculations provided precise opcode sequence matching. Functions with high similarity scores became priority targets, yielding significantly better results as Claude reused decompilation patterns across related code segments.
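The two-stage idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the opcode histogram stands in for whatever embedding the project actually uses, the Levenshtein routine is a plain textbook implementation rather than Coddog's code, and every function name and sample opcode sequence here is hypothetical.

```python
import math
from collections import Counter


def opcode_histogram(opcodes):
    """Toy stand-in for an embedding: normalized opcode frequencies."""
    counts = Counter(opcodes)
    total = len(opcodes)
    return {op: n / total for op, n in counts.items()}


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def levenshtein(a, b):
    """Edit distance between two sequences (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = cur
    return prev[-1]


def opcode_similarity(a, b):
    """Map edit distance to a 0..1 score, where 1.0 means identical."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def rank_references(target, decompiled, shortlist=2):
    """Stage 1: cheap vector retrieval. Stage 2: precise opcode scoring."""
    target_vec = opcode_histogram(target)
    coarse = sorted(
        decompiled,
        key=lambda name: cosine(target_vec, opcode_histogram(decompiled[name])),
        reverse=True,
    )[:shortlist]
    scored = [(name, opcode_similarity(target, decompiled[name])) for name in coarse]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical opcode sequences for an unmatched target and three matched functions.
target = ["lw", "addiu", "sw", "jr", "nop"]
decompiled = {
    "func_a": ["lw", "addiu", "sw", "jr", "nop"],
    "func_b": ["lw", "addu", "sw", "jr", "nop"],
    "func_c": ["mtc1", "mul.s", "add.s", "swc1", "jr", "nop"],
}
ranked = rank_references(target, decompiled)
```

The design point is the split in cost: the vector pass is cheap enough to run against every function, so the quadratic edit-distance comparison only has to run on the shortlist.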

Specialized Tooling: Wins and Limitations

Domain-specific tools demonstrated where LLMs excel and struggle:

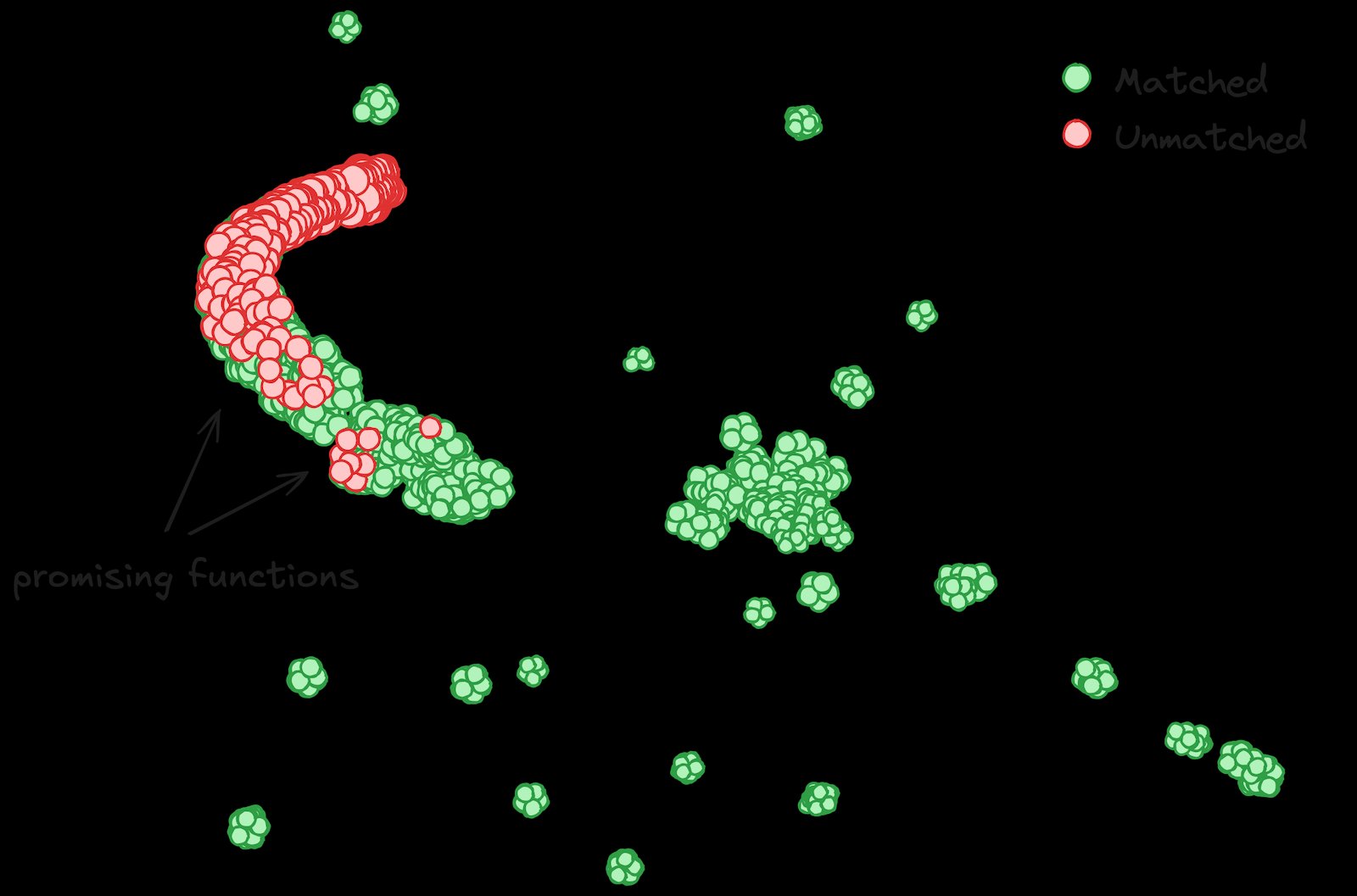

- F3Dex2 Processor: Snowboard Kids 2 uses Nintendo's F3Dex2 graphics microcode. Lewis created a dedicated Claude skill with disassembler tools and documentation specifically for RDP microcode. This allowed Claude to correctly interpret display list construction macros that previously caused confusion:

Example transformation from decompiled C to F3Dex2 instructions

- The Permuter Experiment: Attempts to combine Claude's reasoning with brute-force permuters (which test millions of code variations) backfired. Permuters introduced unnatural code constructs that Claude would then optimize around, creating compounding errors. Despite occasional successes, Lewis ultimately abandoned this approach because the results required excessive cleanup.
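To make the display list point concrete, here is a minimal sketch of the kind of decoding a display list disassembler performs. It is not Lewis's tool. It assumes the commonly documented F3DEX2 layout of 8-byte big-endian commands with the opcode in the top byte of the first word, and the small opcode table is a partial one that should be checked against the project's own `gbi.h` before being relied on. The function name and sample bytes are hypothetical.

```python
import struct

# Partial opcode table (assumption: values as commonly documented for F3DEX2).
F3DEX2_OPCODES = {
    0x01: "G_VTX",
    0x05: "G_TRI1",
    0x06: "G_TRI2",
    0xDE: "G_DL",
    0xDF: "G_ENDDL",
}


def decode_display_list(data):
    """Return (opcode_name, word0, word1) per 8-byte command, stopping at G_ENDDL."""
    commands = []
    usable = len(data) - len(data) % 8  # ignore a trailing partial command
    for offset in range(0, usable, 8):
        word0, word1 = struct.unpack_from(">II", data, offset)
        opcode = word0 >> 24
        name = F3DEX2_OPCODES.get(opcode, f"UNKNOWN_0x{opcode:02X}")
        commands.append((name, word0, word1))
        if name == "G_ENDDL":
            break
    return commands


# Hypothetical two-command display list: one triangle, then end of list.
sample = bytes.fromhex("0500020400000000" "DF00000000000000")
decoded = decode_display_list(sample)
```

Mapping raw command words back to named macros like this is what lets a model compare its generated C against the original display list instead of guessing from opaque constants.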

Scaling Infrastructure

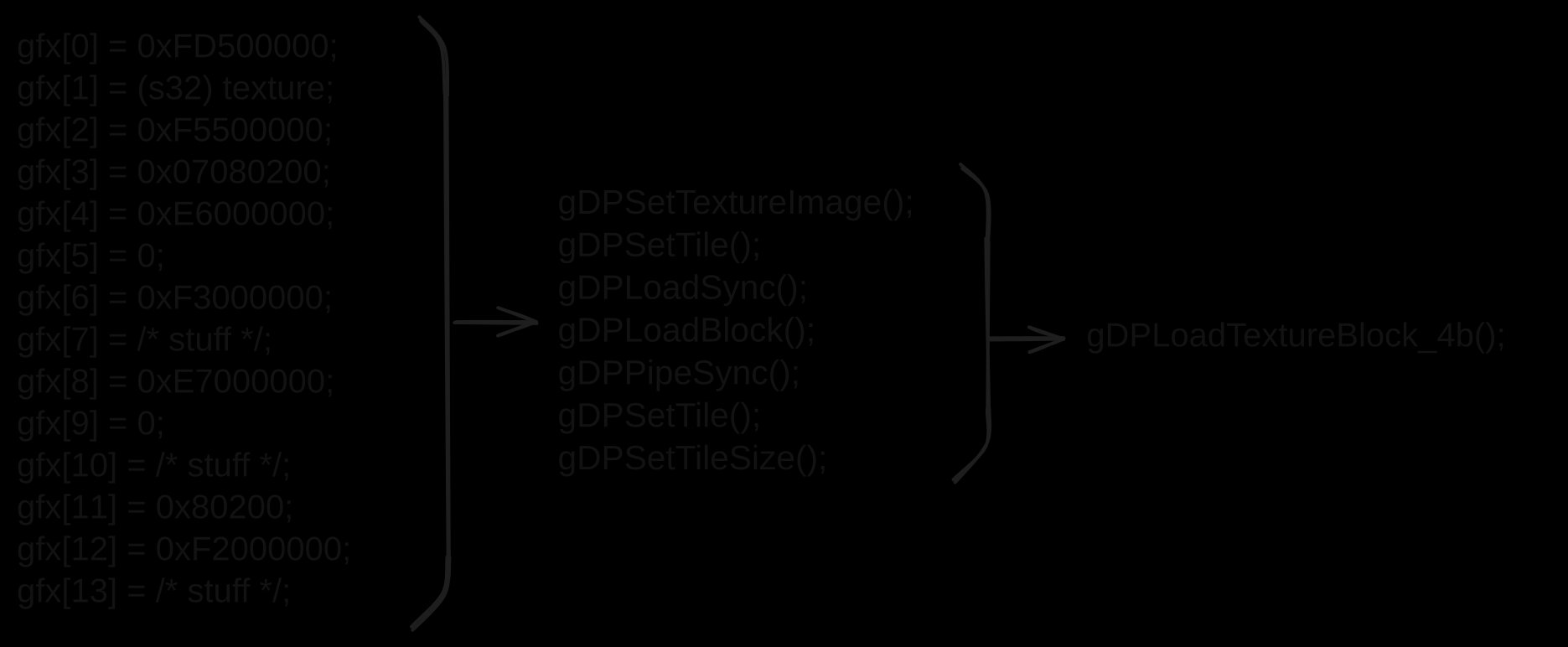

Maintaining progress required significant workflow engineering:

- Worktrees: Multiple parallel work environments prevented agent conflicts

- Guardrails: Hooks blocked destructive actions like SHA1 hash modification

- Nigel Orchestrator: A dedicated task-runner managed complex workflows with features like real-time output streaming and distributed processing

- Model Routing: GLM (via "Glaude" wrapper) handled mechanical tasks at 1/20th the cost of Claude Opus

Current workflow architecture showing task orchestration
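Two of these pieces, the guardrail and the model routing, are simple enough to sketch. The following is an assumption-laden illustration, not the project's actual hooks or orchestrator: the `.sha1` suffix rule, the task kinds, and all names are hypothetical, and the relative costs only encode the article's "1/20th" figure.

```python
from dataclasses import dataclass

# Hypothetical rule: an agent may never write to checksum files.
PROTECTED_SUFFIXES = (".sha1",)

# Relative cost units derived from the 1/20th ratio cited above.
RELATIVE_COST = {"opus": 20, "glm": 1}


def is_blocked(path):
    """Guardrail check a pre-write hook could run before an agent edits a file."""
    return path.endswith(PROTECTED_SUFFIXES)


@dataclass
class Task:
    name: str
    kind: str  # "mechanical" or "reasoning" (hypothetical labels)


def route(task):
    """Send mechanical work to the cheaper model, everything else to Opus."""
    return "glm" if task.kind == "mechanical" else "opus"


def plan(tasks):
    """Assign a model to each task and total the relative cost."""
    assignments = [(task.name, route(task)) for task in tasks]
    total = sum(RELATIVE_COST[model] for _, model in assignments)
    return assignments, total


tasks = [
    Task("rename symbols", "mechanical"),
    Task("match func_800B1234", "reasoning"),
    Task("reformat headers", "mechanical"),
]
assignments, total_cost = plan(tasks)
all_opus_cost = RELATIVE_COST["opus"] * len(tasks)
```

Under these assumed numbers, routing the two mechanical tasks away from Opus cuts the relative cost of this batch from 60 units to 22.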

The Hard Tail

Despite these innovations, 124 functions remain unmatched. Three categories prove particularly resistant:

- Large functions (>1,000 instructions) where Claude loses coherence

- Graphics routines with deeply nested macros

- Mathematical operations (matrix/vector transforms, inverse square roots)

Decompilation progress chart showing dramatic slowdown after initial gains

Lewis notes: "At 86 instructions, an inverse square root function seems tractable. Yet it's resisted months of attempts. The remaining functions require leaps in reasoning that current models can't consistently make."

Implications for Reverse Engineering

This project demonstrates both the transformative potential and current boundaries of LLM-assisted decompilation:

- Success case: Automating ~75% of a complex codebase significantly accelerates preservation efforts

- Clear limitations: Graphics and math operations require specialized human-AI collaboration

- Workflow lessons: Orchestration and domain-specific tooling are critical for scaling

The Snowboard Kids 2 project remains active, with Lewis hoping future model generations might tackle the remaining functions. For now, it stands as a landmark case study in practical LLM application boundaries.

Explore the Snowboard Kids 2 decompilation project or join the Discord community for preservation efforts.
