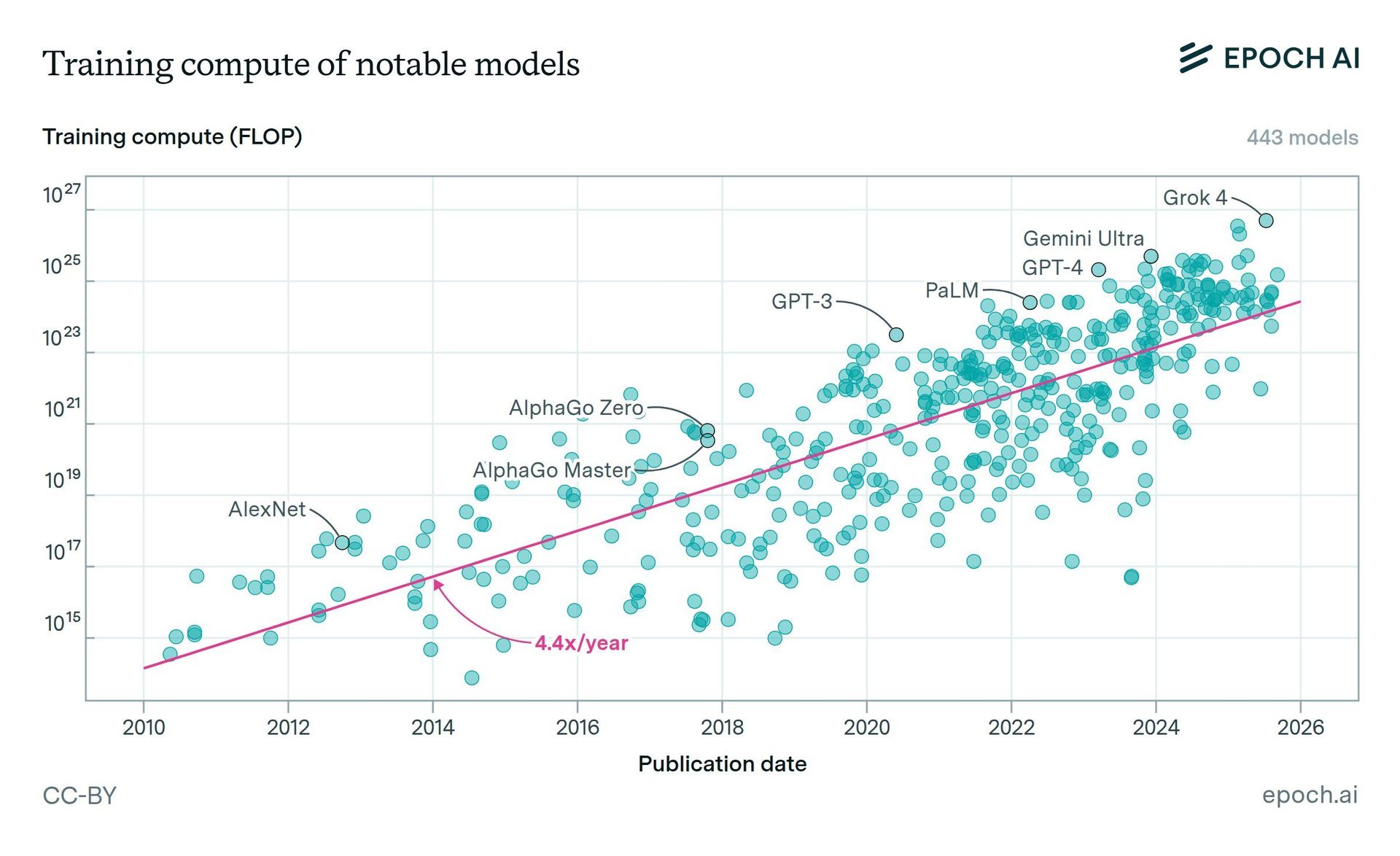

Epoch AI's latest data shows that training compute for frontier AI models is growing 4.4x per year, with training costs climbing into the hundreds of millions of dollars per model. This breakneck scaling is driven by ever-larger hardware clusters and surging investment. It is outpacing efficiency gains and raising urgent questions about economic and environmental sustainability.

The Exponential Engine Fueling AI's Evolution

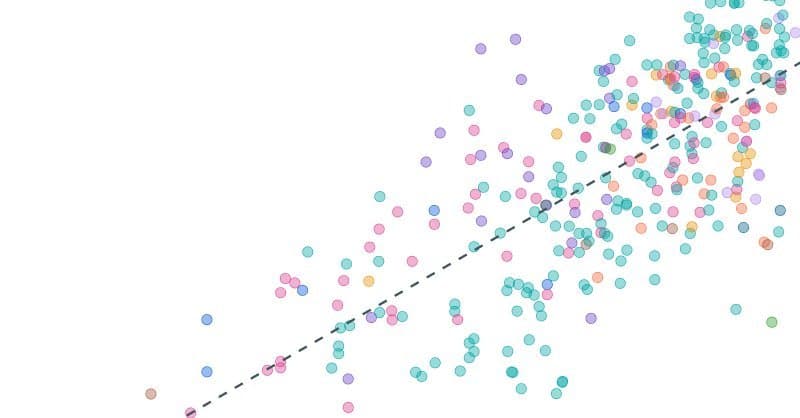

New analysis of Epoch AI's database of more than 3,000 machine learning models reveals an alarming trajectory: the compute used to train cutting-edge AI systems has doubled roughly every five to six months since 2010. This 4.4x annual growth rate, documented through September 2025, signals an industry sprinting toward physical and economic limits.
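For readers converting between the two ways growth is quoted here: a quantity that multiplies by g each year doubles every 12 × ln 2 / ln g months. The short Python sketch below (the function name is our own, purely illustrative) does that conversion. At exactly 4.4x per year it gives about 5.6 months; at 4x or 5x per year, about 6.0 or 5.2 months.

```python
import math


def doubling_time_months(annual_growth: float) -> float:
    """Months for a quantity growing by `annual_growth`x per year to double."""
    return 12 * math.log(2) / math.log(annual_growth)


for g in (4.0, 4.4, 5.0):
    print(f"{g}x/year -> doubles every {doubling_time_months(g):.1f} months")
```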

Breaking Down the Compute Surge

Key drivers behind this explosive growth include:

- Hardware Proliferation: Since 2018, scaling has come primarily from massive increases in GPU cluster size. Training runs now use tens of thousands of chips simultaneously.

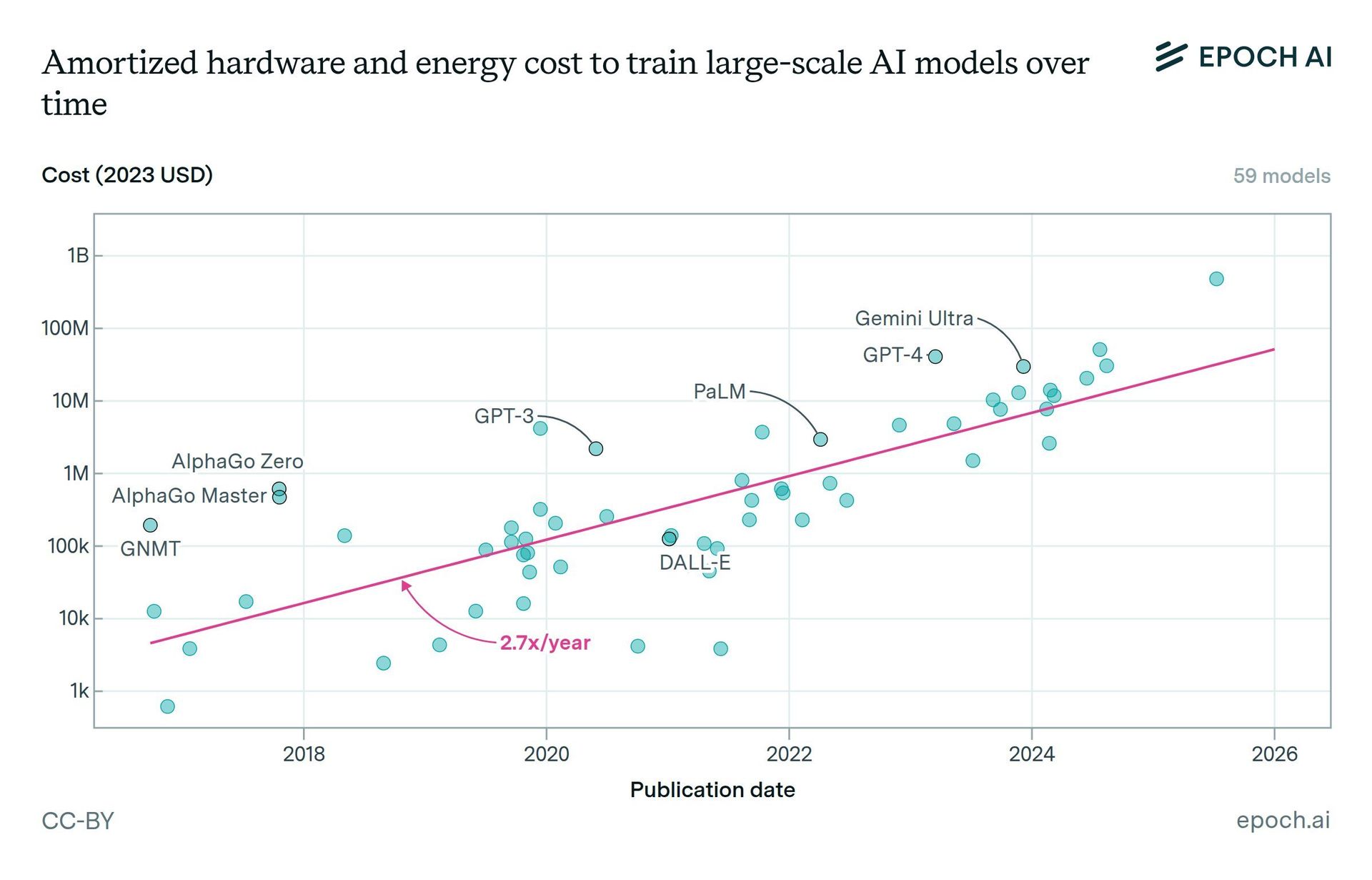

- Investment Tsunami: AI R&D budgets expand 2-3x yearly, enabling unprecedented infrastructure. "Spending on large-scale model training grows at 2.4x annually, with frontier models now costing hundreds of millions," the report states.

- Longer Training Cycles: Training durations have increased 4x, keeping clusters active for months.
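These drivers multiply rather than add. A common back-of-the-envelope way to estimate training compute from hardware is chips × peak throughput per chip × utilization × training time. The Python sketch below applies that formula; the function name and every input (a hypothetical 20,000-chip cluster at 10^15 FLOP/s peak per chip, 40% utilization, 90 days) are illustrative assumptions, not figures for any real model.

```python
SECONDS_PER_DAY = 86_400


def training_compute_flop(num_chips: int, peak_flop_per_s: float,
                          utilization: float, days: float) -> float:
    """Hardware-based estimate: chips x peak FLOP/s x utilization x seconds."""
    return num_chips * peak_flop_per_s * utilization * days * SECONDS_PER_DAY


# Hypothetical cluster: illustrative numbers only, not any specific model.
compute = training_compute_flop(num_chips=20_000, peak_flop_per_s=1e15,
                                utilization=0.4, days=90)
print(f"{compute:.2e} FLOP")  # 6.22e+25 FLOP
```

Because the terms multiply, doubling both the chip count and the run length quadruples compute, which is why larger clusters and longer runs compound each other.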

The Efficiency Paradox

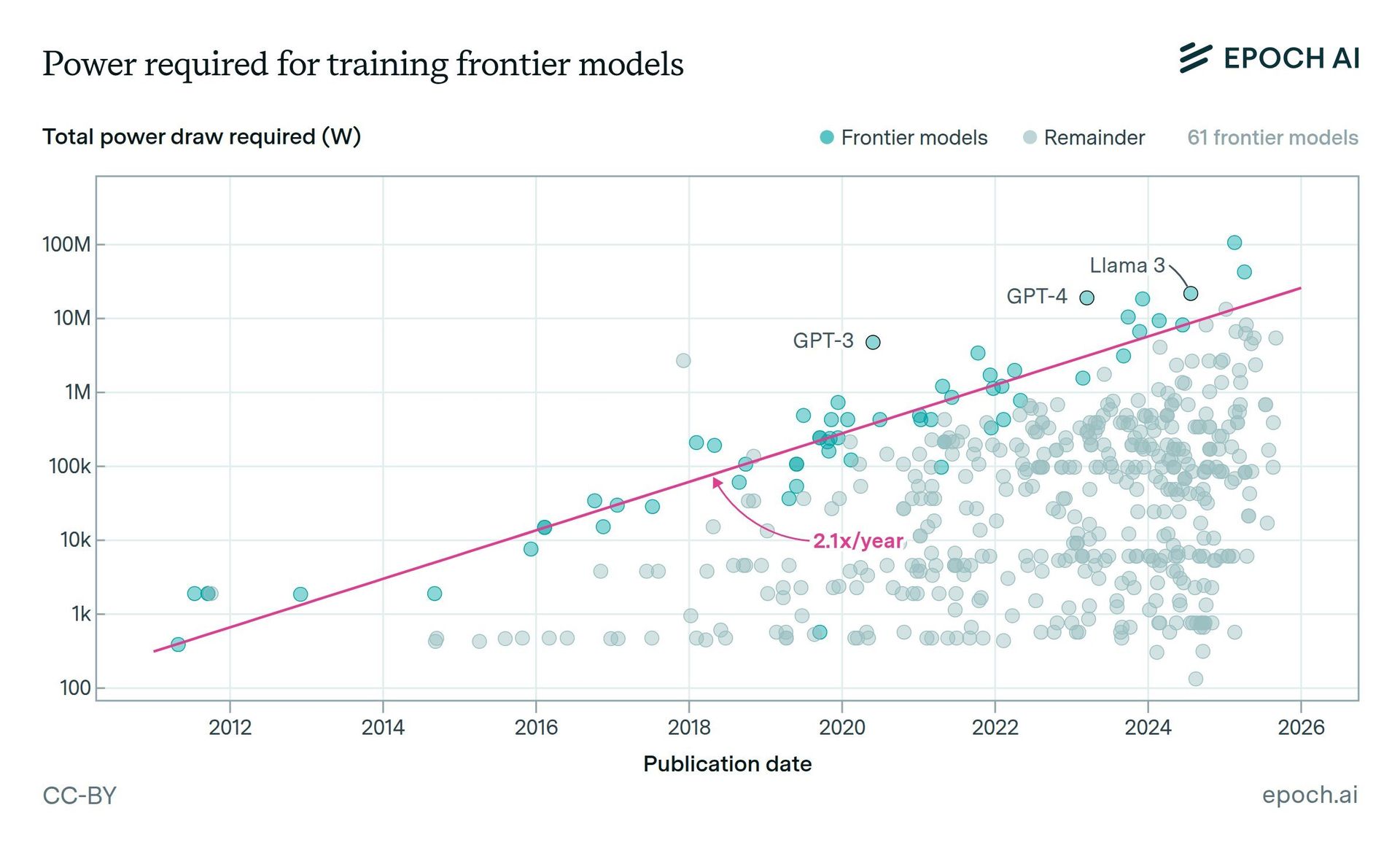

While hardware efficiency improved 12x over the past decade and lower-precision formats delivered 8x gains, these advances only partially offset compute demands:

"Hardware efficiency and precision improvements account for a roughly 2x/year decrease in power requirements relative to training compute. But compute itself grows at 4x/year—meaning net power consumption still rises dramatically."

Frontier Models: Proliferation at Scale

The compute threshold for "frontier models" (the top 10 by training compute at release) now exceeds 10^25 FLOP, a scale first reached by GPT-4. Astonishingly, more than 30 models had crossed 10^25 FLOP as of mid-2025, with an average of two new entrants per month during 2024. The regulatory implications are significant: under the EU AI Act, general-purpose models trained with more than 10^25 FLOP are presumed to pose systemic risk and face additional obligations.
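The "top 10 by compute at release" definition can be made concrete: a model counts as frontier if fewer than ten models released on or before its release date used more training compute. The sketch below assumes that reading; the `Model` record, the helper names, and the tiny catalog (with `top_n=2` so the example stays short) are hypothetical, and Epoch's exact handling of ties and release dates may differ.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Model:
    name: str
    released: date
    compute_flop: float  # total training compute


def is_frontier(model: Model, catalog: list[Model], top_n: int = 10) -> bool:
    """True if fewer than `top_n` models released on or before `model`
    used strictly more training compute than it did."""
    larger = sum(
        1
        for m in catalog
        if m.released <= model.released and m.compute_flop > model.compute_flop
    )
    return larger < top_n


def count_above(catalog: list[Model], threshold: float = 1e25) -> int:
    """Number of models whose training compute exceeds `threshold` FLOP."""
    return sum(1 for m in catalog if m.compute_flop > threshold)


# Hypothetical catalog; top_n=2 keeps the example small.
catalog = [
    Model("A", date(2023, 1, 1), 2e25),
    Model("B", date(2023, 6, 1), 5e24),
    Model("C", date(2024, 1, 1), 3e25),
    Model("D", date(2024, 3, 1), 1e24),
]
frontier = [m.name for m in catalog if is_frontier(m, catalog, top_n=2)]
n_large = count_above(catalog)
print(frontier)  # ['A', 'B', 'C']
print(n_large)   # 2
```

Note that frontier status is judged at release: model B qualifies because only one larger model existed when it launched, even though C later surpassed both.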

The Hidden Cost: Energy and Economics

Training these behemoths creates staggering energy footprints:

- GPU clusters account for roughly 50% of training costs

- Power draw per GPU rises steadily, by a few percent per year

- Total cluster energy demand keeps climbing despite efficiency gains
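A rough energy estimate follows from cluster size, per-GPU power, data-center overhead, and run length. In the sketch below, `kw_per_gpu` is an assumed all-in draw per accelerator (including its share of servers and networking) and `pue` is power usage effectiveness, the standard ratio of total facility power to IT power. All inputs are hypothetical, not measurements of any real training run.

```python
def cluster_power_mw(num_gpus: int, kw_per_gpu: float, pue: float) -> float:
    """Facility power in MW: IT load (GPUs x per-GPU draw) scaled by PUE."""
    return num_gpus * kw_per_gpu * pue / 1_000


def training_energy_mwh(power_mw: float, days: float) -> float:
    """Energy in MWh for running at `power_mw` continuously for `days`."""
    return power_mw * days * 24


# Hypothetical inputs, not measurements of any real training run.
power = cluster_power_mw(num_gpus=20_000, kw_per_gpu=1.0, pue=1.2)
energy = training_energy_mwh(power, days=90)
print(f"{power:.1f} MW, {energy:,.0f} MWh")  # 24.0 MW, 51,840 MWh
```

Since energy scales linearly with every input, a few percent per year of extra draw per GPU stacks on top of growth in cluster size and run length.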

Methodology and Caveats

Epoch's database is freely available under a CC-BY license. It relies on published figures, supplemented by estimates where details are scarce. Confidence levels range from "Confident" (accurate to within a factor of 3) to "Speculative" (accurate to within a factor of 30), with question marks denoting higher uncertainty. The team actively solicits corrections by email.
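One way to read these confidence levels is as multiplicative bands: assuming "Confident" means the true value lies within a factor of 3 of the estimate in either direction, and "Speculative" within a factor of 30. Under that assumption (our interpretation, not a formula Epoch publishes), with hypothetical names:

```python
# Factors for the two levels quoted above; the band interpretation is assumed.
CONFIDENCE_FACTORS = {"Confident": 3, "Speculative": 30}


def compute_range(estimate_flop: float, level: str) -> tuple[float, float]:
    """Assume the true value lies within a factor k of the estimate."""
    k = CONFIDENCE_FACTORS[level]
    return estimate_flop / k, estimate_flop * k


low, high = compute_range(2e25, "Confident")
print(f"{low:.1e} to {high:.1e} FLOP")  # 6.7e+24 to 6.0e+25 FLOP
```

Read this way, a "Confident" estimate of 2 × 10^25 FLOP spans roughly 6.7 × 10^24 to 6 × 10^25, so a model estimated near the 10^25 threshold could plausibly sit on either side of it.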

When Does the Curve Break?

With costs growing exponentially and power demand outrunning efficiency gains, the AI industry faces critical questions: Can algorithmic breakthroughs replace brute-force scaling? Will market forces or regulation cap compute growth? As models grow costlier than skyscrapers and their energy demands keep climbing, the pursuit of ever-larger models looks increasingly untenable. The next era of AI may be defined not by scaling up, but by scaling smart.

Source: Epoch AI's AI Models Database (September 2025 Update)
