A new header-only C library called vdb provides lightweight vector database capabilities for resource-constrained environments, featuring zero dependencies, optional multithreading, and cross-platform persistence.

Developers working with embedded systems and performance-critical applications now have a new tool for handling vector embeddings. vdb, an Apache 2.0-licensed header-only C library created by Abdirahman Moallim, delivers vector database functionality in a single-file implementation that's portable enough to run on everything from microcontrollers to high-performance servers.

Unlike heavyweight vector databases that require complex infrastructure, vdb's design philosophy prioritizes minimalism and portability. The library implements core vector database operations—including nearest neighbor search with multiple distance metrics (cosine, Euclidean, dot product)—in under 1,000 lines of C code. Its header-only nature means integration is as simple as dropping vdb.h into a project.

"What makes vdb stand out is its intentional constraints," explains Moallim. "By enforcing fixed dimensionality at database creation and avoiding dynamic resizing, we eliminate significant complexity while maintaining real-world usability for common embedding workflows."

Technical highlights include:

- Zero dependencies beyond standard libraries (pthreads optional)

- Thread-safe operations when compiled with `-DVDB_MULTITHREADED`

- Custom memory allocators for integration with specialized environments

- Binary persistence format with versioned header (magic number `0x56444230`)

- Python bindings via `vdb.py` for hybrid applications

Memory management deserves particular attention. Developers can override the default allocators by defining VDB_MALLOC, VDB_FREE, and VDB_REALLOC before including the header—enabling integration with arena allocators, RTOS memory pools, or garbage-collected environments.
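As an illustration of that override pattern, the sketch below routes the three macros to a small bump arena backed by a static buffer. Only the macro names come from the description above; the assumption that they take `malloc`/`free`/`realloc`-style arguments, and every `arena_*` name, is hypothetical, so check `vdb.h` for the actual contract.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define ARENA_SIZE  4096
#define ARENA_ALIGN _Alignof(max_align_t)

_Static_assert(ARENA_ALIGN >= sizeof(size_t), "header slot must hold a size_t");

/* Hypothetical static arena, e.g. for a target without a general heap. */
static _Alignas(max_align_t) unsigned char arena_buf[ARENA_SIZE];
static size_t arena_used = 0;

/* Each block is preceded by one ARENA_ALIGN-sized slot holding its size. */
static void *arena_alloc(size_t size)
{
    assert(arena_used % ARENA_ALIGN == 0);
    if (size > ARENA_SIZE)
        return NULL;
    size_t body = (size + ARENA_ALIGN - 1) / ARENA_ALIGN * ARENA_ALIGN;
    size_t need = ARENA_ALIGN + body;
    if (need > ARENA_SIZE - arena_used)
        return NULL;                      /* arena exhausted */
    unsigned char *slot = arena_buf + arena_used;
    memcpy(slot, &size, sizeof size);     /* remember the size for realloc */
    arena_used += need;
    return slot + ARENA_ALIGN;
}

/* A bump arena releases everything at once, so freeing one block is a no-op. */
static void arena_free(void *p)
{
    (void)p;
}

/* Grow by allocating a fresh block and copying; shrinking reuses the block. */
static void *arena_realloc(void *p, size_t size)
{
    if (p == NULL)
        return arena_alloc(size);
    size_t old;
    memcpy(&old, (unsigned char *)p - ARENA_ALIGN, sizeof old);
    if (size <= old)
        return p;
    void *q = arena_alloc(size);
    if (q != NULL)
        memcpy(q, p, old);
    return q;
}

/* Defined before the header is included, as the article describes.
   The argument shapes are an assumption. */
#define VDB_MALLOC(sz)     arena_alloc(sz)
#define VDB_FREE(p)        arena_free(p)
#define VDB_REALLOC(p, sz) arena_realloc((p), (sz))
/* #include "vdb.h" would follow here */
```

The trade-off of a bump arena is that memory is only reclaimed by resetting `arena_used`, which suits workloads that build an index once and then only query it.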

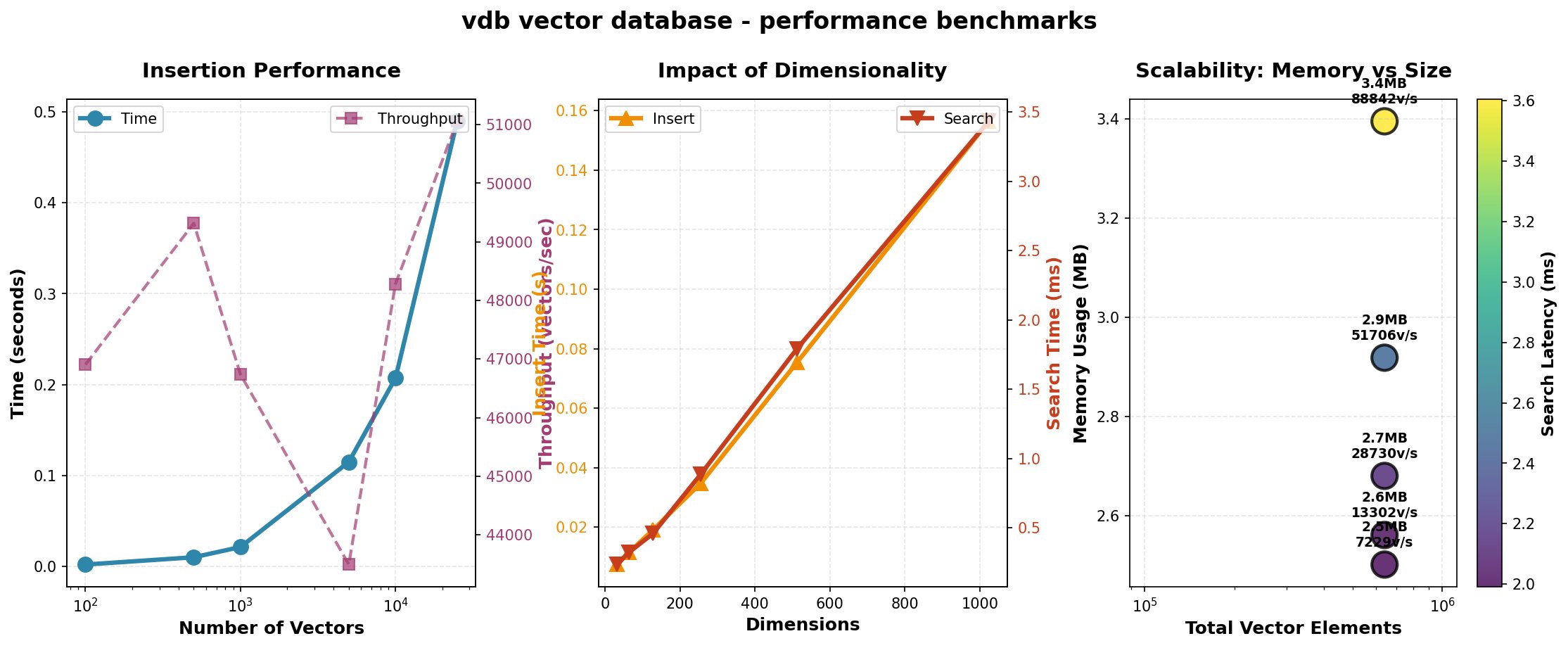

Benchmark results showing vdb's performance characteristics across different configurations


Performance-wise, vdb employs a brute-force search approach optimized with compiler intrinsics. Because every query scans the entire dataset, search cost grows linearly with the number of vectors, so the approach does not scale well to millions of vectors. In exchange, it provides predictable latency for the smaller datasets (under 100K vectors) common in edge computing scenarios. The benchmark image shows how enabling multithreading significantly accelerates searches on multi-core systems.
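A brute-force k-nearest-neighbor scan can be sketched in a few lines of C. This is a generic illustration under stated assumptions, not vdb's implementation: the `hit` struct, `dist_sq`, and `brute_force_knn` are hypothetical names, vectors are assumed to be stored back to back with a fixed dimension, and the scalar loop stands in for whatever intrinsics the library uses.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    size_t index;  /* position of the vector in the store */
    float  dist;   /* squared Euclidean distance to the query */
} hit;

/* Squared L2 distance; ranking by it matches ranking by true L2 distance. */
static float dist_sq(const float *a, const float *b, size_t dim)
{
    float sum = 0.0f;
    for (size_t i = 0; i < dim; i++) {
        float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

/*
 * Scan all `count` vectors (`dim` floats each, stored contiguously) and
 * write the k closest to `out`, nearest first. Returns the number written,
 * which is min(k, count).
 */
static size_t brute_force_knn(const float *vectors, size_t count, size_t dim,
                              const float *query, size_t k, hit *out)
{
    assert(vectors != NULL && query != NULL && (out != NULL || k == 0));
    size_t found = 0;
    for (size_t i = 0; i < count; i++) {
        float d = dist_sq(vectors + i * dim, query, dim);
        if (found == k && (k == 0 || d >= out[k - 1].dist))
            continue;  /* no better than the current worst hit */
        /* Insertion step: shift worse hits right, dropping the last if full. */
        size_t pos = (found < k) ? found++ : k - 1;
        while (pos > 0 && out[pos - 1].dist > d) {
            out[pos] = out[pos - 1];
            pos--;
        }
        out[pos].index = i;
        out[pos].dist = d;
    }
    return found;
}
```

Each query touches every stored float exactly once, which is why latency is easy to predict. It is also why the scan parallelizes naturally: threads can each search a slice of the store and merge their top-k lists.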

Practical applications include:

- Embedded ML: On-device similarity search for microcontroller-based AI

- Game development: NPC memory systems using vectorized context

- Research prototyping: Rapid experimentation without infrastructure dependencies

- Database extensions: Adding vector search to embedded databases like SQLite

The library's persistence format uses a compact binary representation that stores vectors alongside their string IDs—though notably omitting metadata to maintain simplicity. This enables models to be trained offline and deployed to devices with persistent vector storage.
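A versioned header of this kind could be encoded and validated as sketched below. Only the magic number `0x56444230` comes from the article (its bytes, read from most to least significant, are the ASCII characters `VDB0`). The field set, the 16-byte size, the little-endian byte order, and all names are assumptions made for illustration, not vdb's documented layout.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define EXAMPLE_MAGIC 0x56444230u  /* value from the article; spells "VDB0" */
#define HDR_SIZE      16           /* hypothetical: magic, version, dim, count */

/* Hypothetical header fields. */
typedef struct {
    uint32_t version;  /* format version */
    uint32_t dim;      /* fixed vector dimensionality */
    uint32_t count;    /* number of stored vectors */
} file_header;

/* Explicit little-endian encoding keeps files portable across hosts. */
static void put_u32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v & 0xffu);
    p[1] = (uint8_t)((v >> 8) & 0xffu);
    p[2] = (uint8_t)((v >> 16) & 0xffu);
    p[3] = (uint8_t)((v >> 24) & 0xffu);
}

static uint32_t get_u32(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

static void header_encode(const file_header *h, uint8_t out[HDR_SIZE])
{
    assert(h != NULL);
    put_u32(out + 0, EXAMPLE_MAGIC);
    put_u32(out + 4, h->version);
    put_u32(out + 8, h->dim);
    put_u32(out + 12, h->count);
}

/* Returns 0 on success, -1 on a bad magic number, -2 on a newer version. */
static int header_decode(const uint8_t in[HDR_SIZE], uint32_t max_version,
                         file_header *h)
{
    assert(h != NULL);
    if (get_u32(in + 0) != EXAMPLE_MAGIC)
        return -1;
    uint32_t version = get_u32(in + 4);
    if (version > max_version)
        return -2;
    h->version = version;
    h->dim = get_u32(in + 8);
    h->count = get_u32(in + 12);
    return 0;
}
```

Checking the magic number first lets a loader reject unrelated files before trusting any other field, and the version check lets older builds refuse files written in a newer format instead of misreading them.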

vdb joins a growing ecosystem of lightweight AI infrastructure components challenging the notion that vector databases require cloud-scale resources. For developers needing vector search in constrained environments, it represents a compelling new option that favors portability over petabytes.
