New research reveals 93% of developers use AI coding assistants, yet productivity improvements have plateaued at just 10% despite AI writing over a quarter of production code.

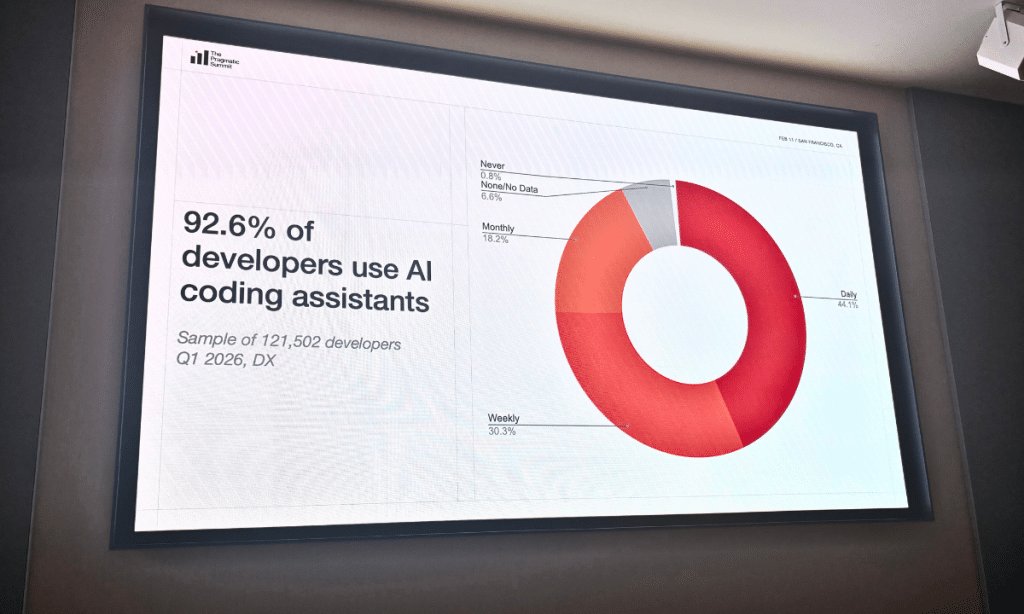

When Laura Tacho took the stage at this year's Pragmatic Summit, she brought data that challenged the prevailing narrative about AI coding assistants. The numbers were striking: 92.6% of developers now use AI coding tools at least monthly, with 75% using them weekly. Yet despite this near-universal adoption, productivity gains have stubbornly plateaued at around 10%.

{{IMAGE:2}}

The research, based on data from 121,000 developers across 450+ companies, paints a picture of widespread AI integration that hasn't delivered the transformative productivity gains many expected. Developers report saving about 4 hours per week with AI assistance – essentially unchanged since Q2 2025. The productivity bump that initially hit 10% when AI tools first emerged has remained stuck at that level.

What's particularly fascinating is where that AI-generated code is actually going. According to data tracking 4.2 million developers from November 2025 to February 2026, AI-authored code now comprises 26.9% of all production code – up from 22% just last quarter. For daily AI users, nearly a third of the code they merge into production is written by AI with minimal human intervention.

This shift is having a measurable impact on developer onboarding. The time to complete a developer's 10th pull request – a key indicator of successful onboarding – has been cut in half since Q1 2024. Laura sees this as AI's most immediate benefit: reducing the mental load of getting up to speed in complex codebases, whether for new hires, engineers switching projects, or even non-engineers entering technical workflows.
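To make the onboarding metric concrete, here is a minimal sketch of how a team might compute "time to 10th pull request" from its own data. It is not the method used in the research. The function name `days_to_nth_pr`, the choice of the engineer's start date as the reference point, and the sample dates are all assumptions made for illustration.

```python
from datetime import date, timedelta
from typing import Optional, Sequence


def days_to_nth_pr(start: date, merged: Sequence[date], n: int = 10) -> Optional[int]:
    """Return the days from `start` to the n-th merged PR, or None if there are fewer than n.

    Hypothetical helper: `start` is assumed to be the engineer's first day,
    and `merged` the merge dates of their pull requests.
    """
    # Ignore any PRs dated before the start date, then order chronologically.
    eligible = sorted(d for d in merged if d >= start)
    if len(eligible) < n:
        return None
    return (eligible[n - 1] - start).days


# Illustrative data only: one merged PR every three days after the start date.
start = date(2025, 1, 6)
merged = [start + timedelta(days=3 * i) for i in range(1, 11)]

print(days_to_nth_pr(start, merged))      # 10th PR lands 30 days after start
print(days_to_nth_pr(start, merged[:9]))  # only 9 PRs so far, so None
```

Tracking the median of this value per hiring cohort would show whether onboarding is speeding up over time, which is the kind of trend the talk describes.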

But the organizational impact is far from uniform. When analyzing data from 67,000 developers, the research revealed a stark divide: some companies experienced twice as many customer-facing incidents, while others saw a 50% reduction. The difference wasn't in the tools themselves, but in how organizations were structured to use them.

"In well-structured organizations, AI acts as a force multiplier," Laura explained. "It helps teams move faster, scale with higher quality, and boost reliability. In struggling organizations, AI tends to highlight existing flaws rather than fix them."

This observation cuts to the heart of why productivity gains have stalled. The research suggests that AI adoption alone doesn't guarantee results – it merely exposes organizational weaknesses that were already there. The same companies that were ready to abandon their cloud or agile transformations are now giving up on their AI transformations too.

The problem, according to Laura, is fundamentally a management challenge. "The hype made it sound like just trying AI would automatically pay off," she noted. "But so far, most tools have been used for individual coding tasks. To see real impact, we need to use AI at the organizational level, not just for single tasks."

She's particularly skeptical of technology's promise to improve performance without addressing underlying constraints. "If we don't solve our systemic issues, we'll just carry them into space with us," she warned. "The real question isn't how to colonize Mars, but how to achieve actual organizational impact."

Among the AI tools gaining traction, Codex stands out. The desktop app launched February 2 and hit one million downloads within weeks, growing 60% in just the last week. Inside OpenAI, 95% of developers use Codex, and those users submit roughly 60% more pull requests weekly. Cisco provides a compelling case study: 18,000 engineers use Codex daily for complex migrations and code reviews, cutting their code review time in half.

So what separates the organizations seeing real benefits from those stuck at the 10% plateau? Laura identified three key factors:

- Clear goals and measurement of results

- Recognition that Developer Experience (DevEx) matters more than ever

- Strong engineering foundations: fast Continuous Integration, clear documentation, and well-defined services, all of which AI success depends on

The research makes clear that the barriers aren't technical – they're about change management and leadership support. Organizations treating AI as a company-wide challenge rather than just another tool adoption are the ones seeing meaningful results.

"Successful organizations experiment by tackling real customer problems," Laura concluded. "Exploring Mars sounds exciting, but it's not sustainable – it's expensive and distracts from the core business. Focus your experiments on the customer to drive meaningful results."

Somewhere, something incredible is waiting to be discovered – but it might not be in the code AI writes. It might be in how we organize to use it.
