A simple experiment using Nano Banana Pro-generated images exposes critical vulnerabilities in AI detection tools, raising concerns about their reliability in legal and journalistic contexts.

The case of Melissa Sims demonstrated how dangerous unchecked AI-generated content can be in legal proceedings. As AI image generators proliferate, the tech industry has proposed detection tools as a safeguard: software that identifies machine-generated imagery through algorithmic analysis. However, a simple experiment with Nano Banana Pro images shows these detection systems failing outright against basic image manipulation techniques.

The Testing Methodology

We tested six leading AI detection services against images created with Nano Banana Pro, Google's Gemini-powered image generator. The prompt was deliberately simple: "A woman striking a fighting pose holding a banana against the sky. Background typical cityscape with uninterested people." As a newer generator, Nano Banana Pro started with an inherent advantage in evading detection, since detection tools are primarily trained on output from established models such as Midjourney and DALL-E 3.

Original Nano Banana Pro output featuring visible watermark

Round 1: Failure Despite Obvious Watermarks

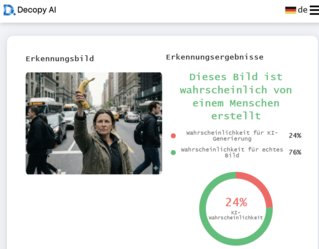

Initial testing used the raw Nano Banana Pro output converted to JPG, complete with the visible Gemini watermark. Surprisingly, two of the six detectors rated this clearly AI-generated image as having a "low probability" of artificial origin. Services such as Illuminarty and Decopy delivered inconsistent results despite the explicit branding.

Illuminarty's analysis of original watermarked image

Decopy's assessment of the same image

Round 2: Watermark Removal

Using the eraser tool built into Windows Photos, we removed the watermark in seconds, a realistic forgery scenario. This simple edit fooled a third detector, whose AI probability score dropped below 30%. Interestingly, Illuminarty raised its suspicion rating after the edit, while MyDetector joined the growing list of tools failing the test.

Detection results after watermark removal

Round 3: Adding Artificial Imperfections

The decisive test added photographic realism using CyberLink PhotoDirector:

- Lens distortion correction

- Artificial chromatic aberration

- Contrast adjustments

- Realistic noise overlay

After about five minutes of post-processing, all six detectors were fooled. Every service, including Copyleaks, rated the AI probability below 5%, and the manipulated image now passed as an ordinary, human-captured photograph.

Critical Implications

This experiment demonstrates that current AI detection tools:

- Fail against newer generators not in their training data

- Can be defeated by basic editing tools available to amateurs

- Create dangerous false confidence in legal/journalistic contexts

As Nano Banana Pro and similar tools evolve, detection services remain fundamentally reactive. Until these systems can analyze compositional artifacts beyond surface-level patterns, they offer only illusory protection against AI deception. For courts and newsrooms that require content verification, human expertise combined with metadata analysis remains essential; algorithmic detectors used alone create more risk than they prevent.
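To give a rough idea of where metadata analysis begins, here is a minimal Python sketch (our own illustration, not a tool used in the experiment) that lists which metadata segments a JPEG file carries: Exif and XMP, both stored in APP1 segments, and APP11 segments, which is where the C2PA specification places Content Credentials manifests in JPEG files. The function name `list_metadata_segments` is hypothetical. It only reports whether these segments are present and does not verify any signature. Because ordinary editing tools can strip or forge metadata, a present or missing segment is a lead for human investigation, not a verdict.

```python
import struct

# Marker codes (the byte after 0xFF) that this sketch reports on.
APP1 = 0xE1   # carries Exif or XMP, depending on its identifier string
APP11 = 0xEB  # carries JUMBF boxes; C2PA manifests are stored here in JPEGs
SOS = 0xDA    # start of scan: entropy-coded image data follows


def list_metadata_segments(data: bytes) -> list[str]:
    """Return labels for metadata segments found before the image data.

    A minimal triage sketch: it walks only the marker segments that
    precede the first SOS marker, ignores 0xFF fill bytes, and does not
    validate or verify segment contents.
    """
    if not data.startswith(b"\xff\xd8"):  # SOI (start of image) marker
        raise ValueError("not a JPEG file (missing SOI marker)")
    found = []
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"malformed marker at offset {pos}")
        marker = data[pos + 1]
        if marker == SOS:
            break  # metadata segments all come before the scan data
        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        payload = data[pos + 4:pos + 2 + length]
        if marker == APP1 and payload.startswith(b"Exif\x00\x00"):
            found.append("Exif")
        elif marker == APP1 and payload.startswith(b"http://ns.adobe.com/xap/1.0/"):
            found.append("XMP")
        elif marker == APP11:
            found.append("APP11/JUMBF (possible C2PA)")
        pos += 2 + length  # skip marker bytes plus the declared length
    return found
```

For example, reading a file with `open("photo.jpg", "rb")` and passing its bytes to the function returns an empty list when all of these segments have been stripped. The sketch sticks to the standard library so it runs anywhere; actually validating a C2PA manifest requires a dedicated C2PA tool.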

Tested detection services:
