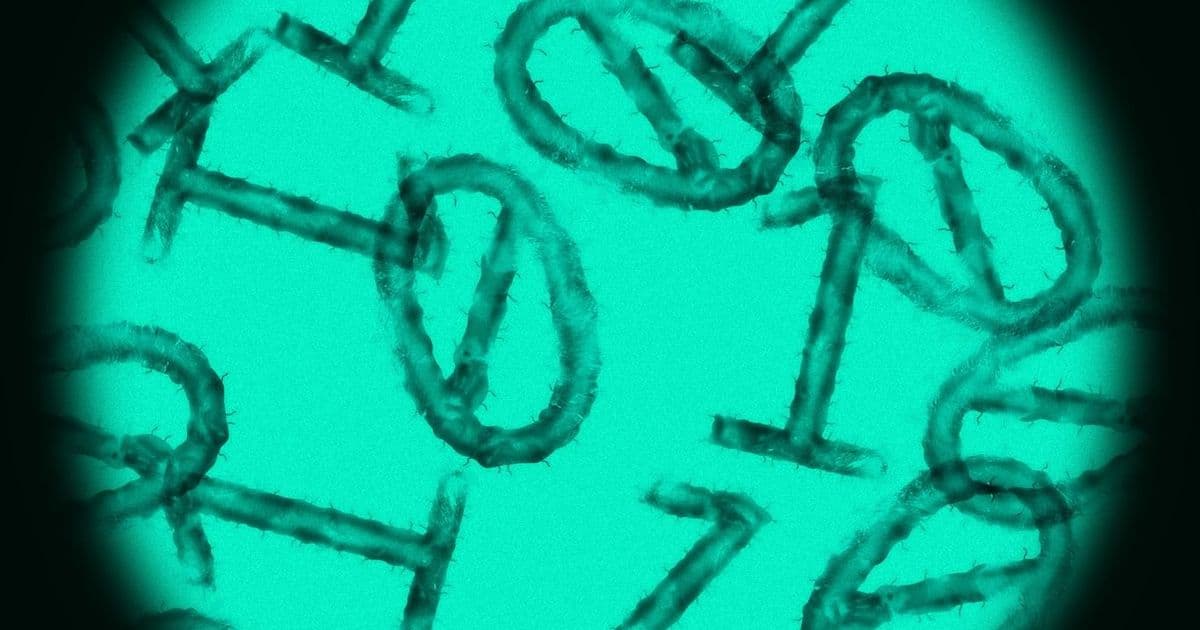

Over 100 researchers from Johns Hopkins, Oxford, and other institutions are calling for guardrails on infectious disease datasets that could enable AI systems to design deadly viruses, raising urgent biosecurity concerns.

A coalition of more than 100 researchers from leading institutions including Johns Hopkins and Oxford has issued a stark warning about the potential misuse of infectious disease datasets in AI development, calling for immediate guardrails to prevent the creation of deadly biological weapons.

The Growing Biosecurity Threat

The researchers' concerns center on the White House's Genesis Mission, announced in late 2025, which aims to build AI systems trained on massive scientific datasets to accelerate research breakthroughs. While the initiative promises to revolutionize medical research, the coalition warns that the same datasets could be exploited to design highly pathogenic viruses.

"The convergence of AI capabilities and biological data represents an unprecedented dual-use challenge," said one researcher involved in the coalition. "We're essentially providing the tools to both cure and create pandemics."

What's at Stake

The datasets in question contain detailed information about:

- Viral genomes and protein structures

- Transmission mechanisms

- Host-pathogen interactions

- Immune system responses

- Drug resistance patterns

When combined with advanced AI models, this information could theoretically be used to engineer viruses with enhanced transmissibility, virulence, or resistance to existing treatments.

The Research Community's Response

The coalition is proposing several key safeguards:

- Access Controls: Implementing tiered access systems for sensitive datasets

- Audit Trails: Maintaining detailed logs of who accesses what data and for what purpose

- Ethical Review Boards: Establishing specialized committees to evaluate research proposals

- International Cooperation: Creating global frameworks for data governance

- Technical Safeguards: Developing AI systems that can detect and prevent malicious use

Historical Context

This isn't the first time the scientific community has grappled with dual-use research concerns. The 2011 controversy over H5N1 influenza research, in which scientists created more transmissible strains of bird flu, led to temporary research moratoriums and new oversight mechanisms.

However, the current situation is uniquely challenging because AI systems could sidestep safeguards built around physical access. Unlike human researchers, who need laboratory access to do this work, AI models could theoretically design pathogens without ever handling biological materials.

The AI Development Timeline

Recent advances in AI capabilities have accelerated these concerns. Models like OpenAI's o3 and DeepSeek's R1 have demonstrated increasingly sophisticated reasoning abilities, raising questions about when AI systems might be capable of independent biological design.

Industry experts note that while current AI systems still require significant human oversight for biological applications, the trajectory suggests this could change within years rather than decades.

Policy Implications

The researchers' call for guardrails comes amid broader debates about AI regulation. The Biden administration's 2023 executive order on AI included provisions for biosecurity, but it was rescinded in January 2025, leaving the federal approach to AI-related biosecurity unsettled.

Some policymakers are pushing for more aggressive action. Senator Maria Cantwell has introduced legislation that would create a new federal office specifically tasked with overseeing AI-bio convergence research.

The Scientific Community Divided

Not all researchers agree with the coalition's approach. Some argue that overly restrictive measures could hamper legitimate research that could save millions of lives.

"We can't let fear paralyze scientific progress," said Dr. Sarah Chen, an infectious disease researcher not involved in the coalition. "The same datasets that could be misused are also essential for developing vaccines and treatments."

Looking Forward

The coalition plans to present its recommendations at the upcoming Biological Weapons Convention meeting in Geneva. It is also working with AI companies to develop technical solutions that could prevent misuse while preserving research utility.

As AI capabilities continue to advance, the tension between innovation and security will only intensify. The outcome of this debate could shape not just the future of AI development, but the future of global biosecurity itself.

The Bottom Line: The scientific community faces an unprecedented challenge in balancing the transformative potential of AI-powered biological research against the catastrophic risks of misuse. The next few years will be critical in establishing frameworks that can harness AI's benefits while preventing its weaponization.
