Anthropic confirms a viral screenshot claiming Claude banned a user and reported them to authorities is fabricated. The company states such fake messages periodically circulate and don't reflect actual enforcement actions.

A viral screenshot purportedly showing Claude banning a user and threatening to report them to authorities is entirely fabricated, according to Anthropic. The AI developer confirmed to BleepingComputer that the alarming message doesn't match any legitimate enforcement action taken by its systems.

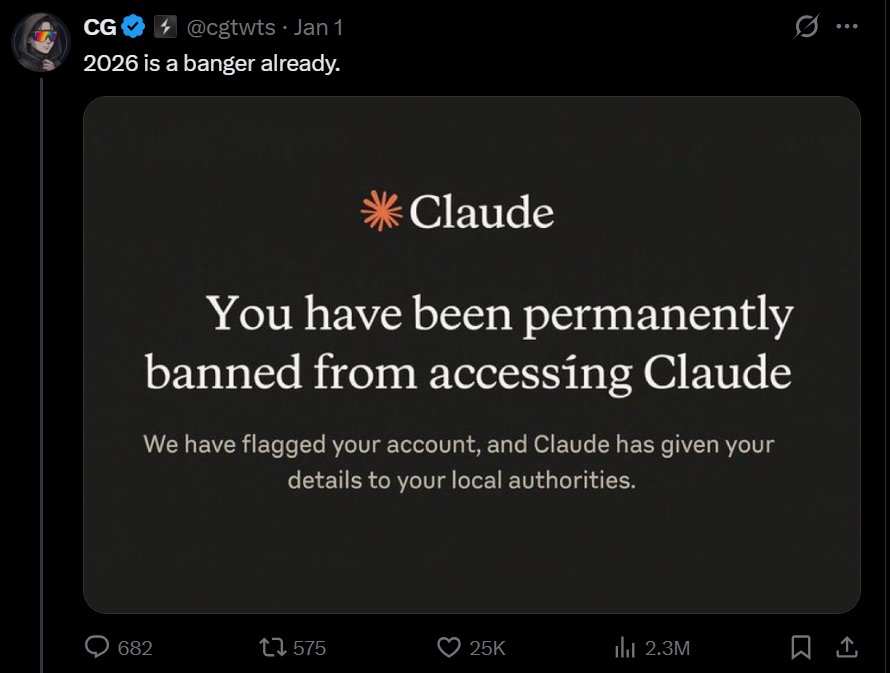

The fake message, which circulated widely on the social media platform X, displayed a notification stating: "Your account has been permanently banned... Details of your account and activity will be shared with local authorities." Anthropic clarified that this language doesn't appear in its system and noted that inaccurate, manipulated images like this one resurface periodically.

Above: The fabricated screenshot falsely attributed to Claude's enforcement system.

While confirming that this specific screenshot is fake, Anthropic noted that legitimate account restrictions do occur when users violate its Acceptable Use Policy, which prohibits activities including:

- Generating content for illegal activities (e.g., weapon creation)

- Harassment campaigns or non-consensual content

- Automated attacks against third-party services

- Attempts to circumvent security measures

According to AI ethics researcher Dr. Elena Torres, "Fabricated screenshots exploit public anxiety about AI monitoring. Reputable companies like Anthropic typically issue warnings before bans and don't involve law enforcement unless legally compelled."

For users concerned about account security:

- Verify unusual messages: Authentic enforcement notifications reference specific policy violations and appear in Claude's message history

- Avoid policy violations: Never prompt for illegal activities or automated attacks

- Report suspicious content: Forward questionable screenshots to Anthropic's support team for verification

- Consult transparency reporting: Anthropic documents all enforcement actions in its Transparency Reports

Anthropic's clarification comes amid increased scrutiny of AI moderation systems. Unlike the fabricated screenshot, legitimate restrictions typically involve progressive enforcement starting with warnings before escalating to temporary suspensions. Permanent bans are reserved for severe or repeated violations after human review.

As AI assistants become integral to development workflows, verifying sources remains critical. Developers should rely on official communication channels rather than unverified social media posts when assessing platform policies.
