A public exchange between a developer and Anthropic staff highlights growing tension around how AI models can be integrated into third-party tools, with legal terms potentially conflicting with common development practices.

A recent exchange on X has surfaced tensions between Anthropic's stated developer-friendly posture and the actual legal constraints around using Claude in developer tools. The conversation began when Thariq (@trq212) referenced a statement about supported usage, prompting a response from SIGKITTEN pointing out potential contradictions with Anthropic's consumer terms.

What Was Claimed

Thariq's original statement positioned Anthropic as welcoming of developers building on Claude, specifically mentioning "other coding agents and harnesses" as legitimate use cases. The message emphasized that Anthropic understands developers have "broad preferences for different tool ergonomics" and framed API access as the "supported way" to integrate Claude into custom tools.

The Contradiction

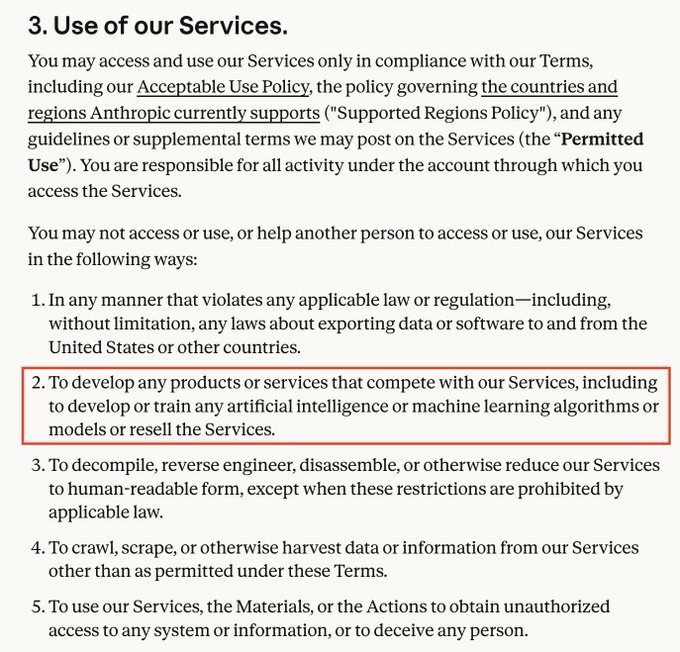

SIGKITTEN's response suggests that this positioning may conflict with Anthropic's actual terms of service for consumer accounts. The core issue appears to be whether developers using Claude through consumer-facing interfaces (as opposed to pure API calls) are operating within acceptable bounds, even when building legitimate developer tooling.

This matters because many developers prefer working with existing Claude interfaces rather than implementing full API integrations, especially for prototyping or smaller-scale tools. The friction point is whether such usage violates terms that might restrict automated access, scraping, or programmatic interaction with the consumer product.

Why This Matters

The exchange highlights a broader pattern in AI platform governance: the gap between "developer-friendly" marketing and the actual legal frameworks governing usage. For developers building AI-enhanced tools, clarity on permitted use cases is critical.

Anthropic's position appears to be that API access is the only officially supported path for integration. This has several practical implications:

- Tool developers must commit to API implementation, which may involve costs, rate limits, and infrastructure decisions

- Smaller projects that might work with consumer interfaces face uncertainty about terms compliance

- Cross-platform compatibility becomes more complex when different AI providers have different integration policies

The Technical Reality

From a practitioner's standpoint, the distinction between "API" and "consumer interface" usage is increasingly blurry in modern AI systems. Many tools that appear to be simple user-facing applications actually involve programmatic interaction under the hood.

The core question becomes: where does legitimate developer tooling end and prohibited automated usage begin? Anthropic's answer seems to be "use the API," but this doesn't account for the spectrum of developer needs and preferences.

Current Status

As of the exchange on January 9, 2026, there's been no official clarification from Anthropic addressing the specific contradiction raised. The conversation has generated significant attention (27K views), suggesting this is a pain point for many developers.

For developers currently building tools that interact with Claude, the safest path appears to be:

- Review Anthropic's current terms of service for both consumer and API products

- Assess whether your usage pattern would count as "automated" or "programmatic" under those terms

- Evaluate the cost and complexity of migrating to official API access

- Monitor for official clarification from Anthropic on this specific issue

Broader Context

This situation mirrors similar tensions we've seen with other AI platforms. The push toward API-only integration creates a barrier to entry for smaller developers and experimental projects while potentially stifling innovation in tooling that might emerge from the community.

It also raises questions about platform lock-in. If developers must commit to specific API implementations rather than being able to work with models through various interfaces, it reduces flexibility and increases switching costs.
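One common way to limit switching costs is to keep tool logic behind a thin, provider-neutral interface. The sketch below is illustrative only: `ChatProvider`, `EchoProvider`, and `summarize` are hypothetical names invented for this example and do not belong to any vendor's SDK. The stand-in backend exists so the example runs without network access; a real adapter would wrap whichever official API client a team adopts.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatProvider(Protocol):
    """Hypothetical provider-neutral interface (not part of any vendor SDK)."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoProvider:
    """Stand-in backend so the sketch runs offline; a real adapter would call an API."""

    prefix: str = "echo"

    def complete(self, prompt: str) -> str:
        return f"{self.prefix}: {prompt}"


def summarize(provider: ChatProvider, text: str) -> str:
    # Tool logic depends only on the interface, never on a specific vendor client.
    return provider.complete(f"Summarize: {text}")


if __name__ == "__main__":
    print(summarize(EchoProvider(), "release notes"))
```

Swapping providers then means writing one new adapter class rather than touching every call site, although differences in model behavior and pricing remain.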

What Developers Should Watch

- Official clarification from Anthropic on the scope of their consumer terms

- Whether other AI providers (OpenAI, Google, Meta) have similar restrictions

- The emergence of third-party tools that navigate these constraints

- Potential for standardized cross-provider integration patterns

The core tension remains: Anthropic wants developers to build on Claude, but the legal framework may not match the technical possibilities or developer preferences. Until there's clear guidance on what constitutes acceptable integration beyond pure API calls, developers building tools face uncertainty about their compliance status.

For teams considering Claude integration, the pragmatic approach is to architect around the API from the start, even if that means accepting the rate limits, costs, and implementation complexity that come with it. The alternative, working with consumer interfaces, carries legal risk that most organizations can't accept.
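Accepting rate limits usually means planning for them in code. The following is a minimal sketch of retrying with exponential backoff and jitter, assuming the API client signals throttling with an exception. `RateLimitError` and `call_with_backoff` are hypothetical names for this example; in practice you would map your client's actual rate-limit exception onto this pattern.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical error type; substitute your client's real rate-limit exception."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on RateLimitError with exponential backoff plus jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return fn()
        except RateLimitError:
            attempt += 1
            if attempt >= max_attempts:
                raise  # Out of attempts: surface the error to the caller.
            # Delay doubles on each retry; jitter spreads out concurrent clients.
            delay = base_delay * (2 ** (attempt - 1)) * (1 + random.random())
            sleep(delay)
```

The `sleep` parameter is injectable so that the retry logic can be exercised in tests without actually waiting.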

This isn't just about Anthropic. It's about how AI platforms balance openness with control, and whether the current model of API-centric integration serves the diverse needs of the developer community building the next generation of AI-enhanced tools.
