Anthropic has launched Claude Opus 4.1, its most advanced AI model yet, with improvements in real-world coding, agentic tasks, and complex reasoning. Available now to paid Claude users and through the API and major cloud platforms, it outperforms Claude Opus 4 and, according to Anthropic, rivals such as OpenAI's o3 and Google's Gemini 2.5 Pro on key benchmarks. The release intensifies the generative AI arms race as the industry anticipates OpenAI's GPT-5.

Less than three months after declaring Claude Opus 4 the "best coding model in the world," Anthropic has raised the bar again with Claude Opus 4.1. Released today, the upgraded model shows measurable improvements in complex reasoning, software engineering challenges, and agentic workflows, and it arrives just as speculation about OpenAI's GPT-5 reaches fever pitch.

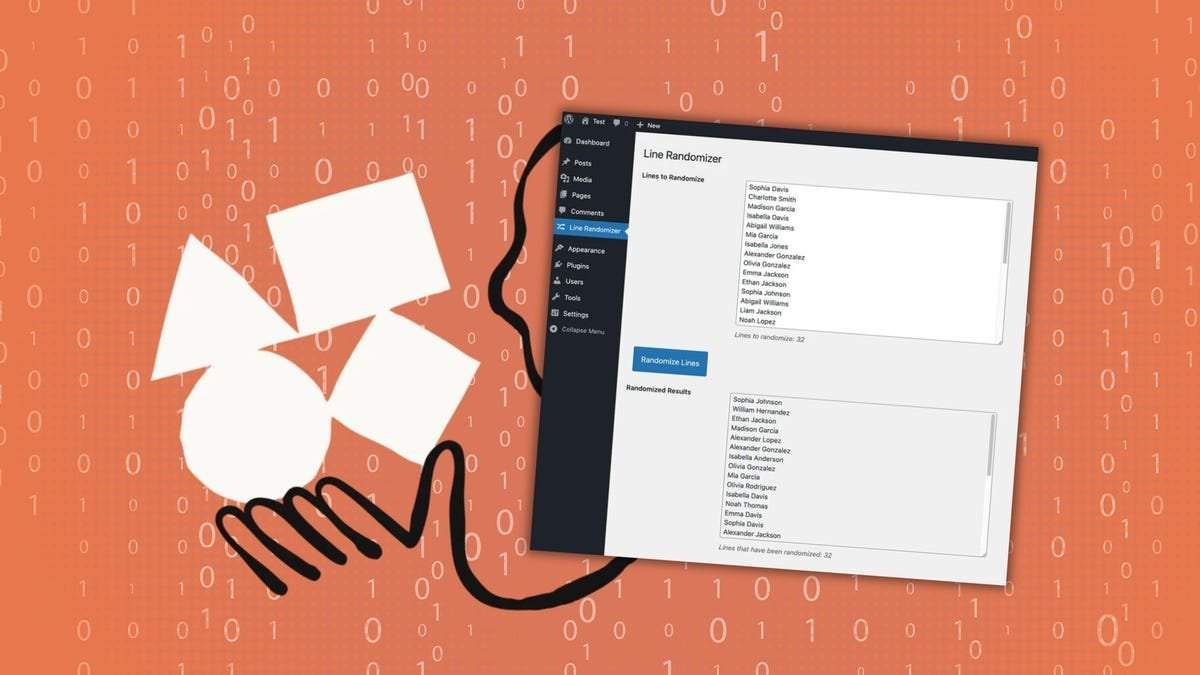

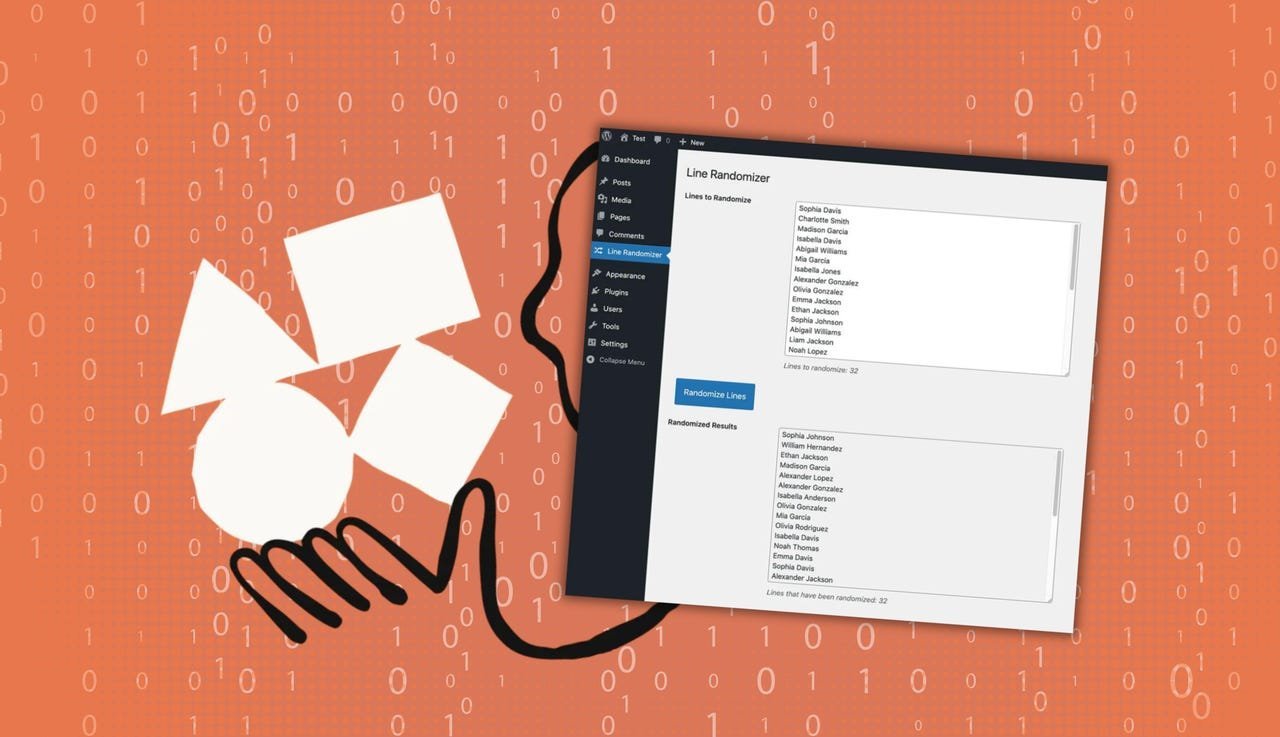

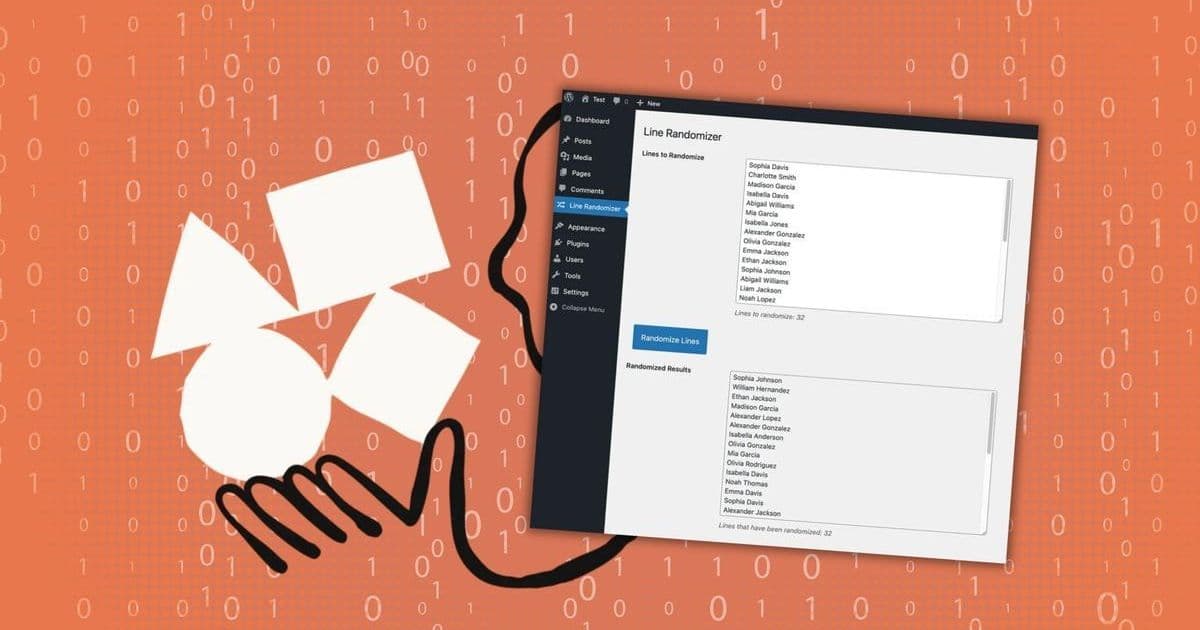

Image: Anthropic / ZDNET - Claude Opus 4.1 demonstrates enhanced coding capabilities.


Benchmark Dominance and Technical Leap

Anthropic backs its claims with published benchmark results. Opus 4.1 outperforms its predecessor on SWE-bench Verified, a demanding benchmark that uses real-world GitHub issues to test an AI's ability to resolve software engineering tasks. This strengthens its position as a premier tool for developers seeking AI-assisted coding. Beyond coding, Anthropic reports superior results for Opus 4.1 across a suite of benchmarks:

- MMMLU: Testing multilingual understanding

- AIME 2025: Gauging advanced mathematical reasoning

- GPQA: Evaluating graduate-level knowledge and reasoning

- Agentic tasks: Measuring performance on complex, multi-step workflows

Crucially, Anthropic states that Opus 4.1 also outperforms competitors such as OpenAI's o3 and Google's Gemini 2.5 Pro in several key areas, particularly on SWE-bench Verified, offering developers a potentially more powerful tool for real-world application development.
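For readers unfamiliar with how a SWE-bench-style score is derived, the sketch below shows the general idea: a task counts as resolved only if the model's patch makes the issue's failing tests pass without breaking tests that already passed. The `TaskResult` type, the task IDs, and the outcomes are hypothetical illustrations, not Anthropic's data or the benchmark's actual harness.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Outcome of running one task's tests against a model-generated patch (hypothetical type)."""
    task_id: str
    fail_to_pass_ok: bool  # tests that reproduced the issue now pass
    pass_to_pass_ok: bool  # tests that passed before the patch still pass

    @property
    def resolved(self) -> bool:
        # Both conditions must hold for the task to count as resolved.
        return self.fail_to_pass_ok and self.pass_to_pass_ok


def resolved_rate(results: list[TaskResult]) -> float:
    """Fraction of tasks resolved; 0.0 for an empty run."""
    if not results:
        return 0.0
    return sum(r.resolved for r in results) / len(results)


# Made-up outcomes for illustration only.
sample = [
    TaskResult("repo-a-101", True, True),
    TaskResult("repo-a-102", True, False),  # fixed the issue but broke another test
    TaskResult("repo-b-007", False, True),  # did not fix the issue
    TaskResult("repo-c-042", True, True),
]
print(f"Resolved: {resolved_rate(sample):.0%}")
```

A headline benchmark percentage is this kind of ratio computed over the full task set, which is why a patch that fixes the bug but causes a regression does not count.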

Under the Hood: Safety and Access

Accompanying the release is a detailed 22-page System Card. This transparency report confirms that Opus 4.1 is deployed under the AI Safety Level 3 (ASL-3) Standard of Anthropic's Responsible Scaling Policy (RSP). While acknowledging persistent vulnerabilities common to large language models, such as the potential for misinformation or bias, the documentation outlines the safety assessments performed.

For developers and enterprises eager to integrate this power:

- Access Paths: Claude Pro ($20/month), Claude Max (from $100/month), Claude Code, Anthropic API, Amazon Bedrock, Google Cloud Vertex AI.

- Target Users: Developers requiring advanced code generation/debugging, researchers tackling complex reasoning problems, businesses building sophisticated AI agents.
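For developers taking the Anthropic API route, a request might look roughly like the sketch below, which uses only Python's standard library. The model identifier `claude-opus-4-1-20250805` and the helper names `build_request` and `send_prompt` are assumptions for illustration and do not come from the announcement as reported here; check Anthropic's documentation for the current model ID and request format.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"
# Assumed model identifier; confirm against Anthropic's published model list.
MODEL_ID = "claude-opus-4-1-20250805"


def build_request(prompt: str, api_key: str, max_tokens: int = 1024) -> urllib.request.Request:
    """Build (but do not send) a Messages API request for a single user prompt."""
    body = {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send_prompt(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first content block in the reply."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        reply = json.load(resp)
    return reply["content"][0]["text"]
```

Calling `send_prompt("Summarize this stack trace: ...", api_key)` with a valid key would return the model's reply as a string. Access through Amazon Bedrock or Google Cloud Vertex AI goes through those platforms' own SDKs and authentication instead.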

Why This Release Matters: The Generative AI Arms Race Intensifies

The rapid iteration from Opus 4 to Opus 4.1 highlights the frenetic pace of advancement in the foundation model space. Anthropic isn't just iterating; it's demonstrating significant performance jumps in critical areas within a remarkably short timeframe. This release serves as a strategic counter to the imminent arrival of OpenAI's GPT-5, signaling that Anthropic is committed to maintaining leadership in raw capability, especially for technical users.

For the developer community, Opus 4.1 translates to more reliable code suggestions, better handling of intricate technical documentation, and more capable AI agents that can execute complex sequences of actions. However, the System Card serves as a necessary reminder: even the most advanced models require careful implementation and output vetting due to inherent limitations.

Anthropic's move sets a high bar. The pressure is now squarely on competitors to demonstrate not just new features, but tangible, benchmark-verified improvements in core capabilities that matter to builders pushing the boundaries of what AI can achieve. The race for AI supremacy just entered a new, faster lap.

Source: Anthropic announcement and benchmark data, ZDNET reporting (Sabrina Ortiz, August 5, 2025).
