A new GitHub project combines OpenAI, Suno AI, and Runware APIs to automatically generate music videos from text descriptions, dramatically lowering barriers for content creators. This full-stack implementation showcases how modern AI services can integrate to create complex multimedia outputs with minimal human intervention.

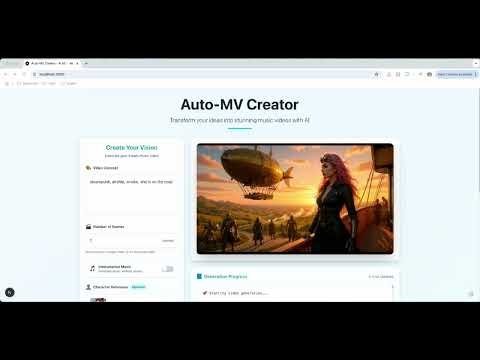

The dream of typing a description and instantly generating a custom music video just took a leap forward with Auto-MV Creator, an open-source project that stitches together several cutting-edge AI services into a seamless creative pipeline. This isn't theoretical—it's a working implementation where users input a concept (like "cyberpunk city at night"), and the system autonomously generates scenes, composes original music, synchronizes visuals to audio, and outputs a complete music video. For developers, it represents a fascinating case study in API orchestration and the practical challenges of multimedia AI workflows.

How the Magic Happens

At its core, Auto-MV Creator functions as a conductor coordinating four specialized performers, three AI services and one conventional media tool:

- OpenAI's API dissects text prompts into sequential visual scenes

- Runware generates images for each scene description

- Suno API composes original music matching the narrative mood

- FFmpeg stitches everything into video format, synchronized to the beat
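The final assembly step is the easiest one to picture in code. What follows is a minimal sketch, not the project's actual implementation: it assumes the track's tempo (`bpm`) is already known, gives each scene a whole number of beats, and builds an input list for FFmpeg's concat demuxer. The function names, file names, and encoder choices are illustrative assumptions; only the FFmpeg flags themselves are standard.

```python
import math
from typing import List, Sequence


def beat_aligned_durations(scene_count: int, audio_seconds: float, bpm: float) -> List[float]:
    """Split a track into per-scene durations that are whole numbers of beats."""
    if scene_count <= 0 or audio_seconds <= 0 or bpm <= 0:
        raise ValueError("scene_count, audio_seconds and bpm must be positive")
    beat = 60.0 / bpm                       # length of one beat in seconds
    total_beats = math.floor(audio_seconds / beat)
    if total_beats < scene_count:
        raise ValueError("track is too short to give every scene one beat")
    base, extra = divmod(total_beats, scene_count)
    # The first `extra` scenes get one additional beat so no beat is wasted.
    return [(base + (1 if i < extra else 0)) * beat for i in range(scene_count)]


def concat_list(images: Sequence[str], durations: Sequence[float]) -> str:
    """Build the text of a concat-demuxer list (paths must not contain quotes)."""
    if not images or len(images) != len(durations):
        raise ValueError("need one duration per image")
    lines = []
    for image, seconds in zip(images, durations):
        lines.append(f"file '{image}'")
        lines.append(f"duration {seconds:.3f}")
    # The concat demuxer only honors the last duration if the final
    # file is listed once more without a duration line.
    lines.append(f"file '{images[-1]}'")
    return "\n".join(lines) + "\n"


def ffmpeg_command(list_path: str, audio_path: str, out_path: str) -> List[str]:
    """Argument list that muxes the image slideshow with the music track."""
    return [
        "ffmpeg", "-y",
        "-f", "concat", "-safe", "0", "-i", list_path,  # timed still images
        "-i", audio_path,                               # generated music
        "-c:v", "libx264", "-pix_fmt", "yuv420p",
        "-c:a", "aac",
        "-shortest",                                    # stop at the shorter input
        out_path,
    ]


# Three scenes over a 10-second track at 120 BPM -> [3.5, 3.5, 3.0]
durations = beat_aligned_durations(3, 10.0, 120)
```

The returned argument list can be handed to `subprocess.run(..., check=True)` once the list file has been written to disk. Real beat detection, transitions, and per-scene motion are outside the scope of this sketch.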

All generated assets flow through Supabase for storage and state management, while a Vercel-hosted webhook handles asynchronous music generation callbacks. This is a critical design choice, since music synthesis can take minutes. A React-based frontend sits on top of the stack, making it accessible as a web application.
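To make the callback pattern concrete, here is a small sketch of what a webhook handler has to do: look up the pending job, record the result, and tolerate duplicate deliveries. Everything in it is an assumption made for illustration. The payload fields (`task_id`, `status`, `audio_url`) are hypothetical rather than Suno's documented format, and an in-memory `JobStore` stands in for the Supabase table the project uses.

```python
from dataclasses import dataclass
from typing import Dict, Mapping, Optional


@dataclass
class MusicJob:
    task_id: str
    status: str = "pending"          # pending -> complete | failed
    audio_url: Optional[str] = None


class JobStore:
    """In-memory stand-in for a database table of music generation jobs."""

    def __init__(self) -> None:
        self._jobs: Dict[str, MusicJob] = {}

    def create(self, task_id: str) -> MusicJob:
        job = MusicJob(task_id)
        self._jobs[task_id] = job
        return job

    def get(self, task_id: str) -> Optional[MusicJob]:
        return self._jobs.get(task_id)


def handle_callback(store: JobStore, payload: Mapping[str, str]) -> int:
    """Apply one callback to the store and return an HTTP-style status code."""
    task_id = payload.get("task_id")
    job = store.get(task_id) if task_id else None
    if job is None:
        return 404                   # unknown or missing task id
    if job.status != "pending":
        return 200                   # duplicate delivery: acknowledge, change nothing
    if payload.get("status") == "complete" and payload.get("audio_url"):
        job.status = "complete"
        job.audio_url = payload["audio_url"]
    else:
        job.status = "failed"
    return 200


# The request that starts generation only records a pending job and returns;
# the result arrives later through the callback.
store = JobStore()
store.create("task-123")
handle_callback(store, {"task_id": "task-123", "status": "complete",
                        "audio_url": "https://example.com/track.mp3"})
```

Because the handler ignores callbacks for jobs that are no longer pending, a retried delivery cannot overwrite a finished result, and the frontend can simply watch the job row rather than hold a connection open for minutes.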

Why Developers Should Pay Attention

This project highlights several emerging paradigms in applied AI:

- Asynchronous Orchestration: The webhook architecture elegantly handles Suno API's long-running tasks without blocking the UI

- State Management: Supabase tracks complex multi-step generation processes (images + music + assembly)

- Accessibility Trade-offs: While lowering creative barriers, it requires API keys for four services and local FFmpeg installation—showcasing current limitations in fully democratized AI media creation
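The setup burden in the last point can at least be detected early. The sketch below is a hypothetical preflight check, not something taken from the repository: it reports missing API keys and a missing FFmpeg binary before any paid request is made. The environment variable names are assumptions (the project may use different ones), as is the guess that Supabase is the fourth keyed service.

```python
import os
import shutil
from typing import Callable, List, Mapping, Optional

# Hypothetical variable names; check the project's own setup notes for the real ones.
REQUIRED_ENV = (
    "OPENAI_API_KEY",
    "SUNO_API_KEY",
    "RUNWARE_API_KEY",
    "SUPABASE_KEY",
)


def missing_prerequisites(
    env: Mapping[str, str] = os.environ,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return human-readable problems; an empty list means setup looks complete."""
    problems = [
        f"environment variable {name} is not set"
        for name in REQUIRED_ENV
        if not env.get(name)
    ]
    # shutil.which returns None when the executable is not on PATH.
    if which("ffmpeg") is None:
        problems.append("ffmpeg was not found on PATH")
    return problems


if __name__ == "__main__":
    for problem in missing_prerequisites():
        print("setup problem:", problem)
```

Passing `env` and `which` as parameters keeps the check testable without touching the real environment.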

Developers can experiment with the public GitHub repo, but should budget approximately $50 in API credits across services to generate a meaningful amount of output. The setup process also reveals some notable implementation details, such as the use of database triggers to manage Suno callback events.

Real Output Samples

Here are frames from actual generated videos using this stack:

A dystopian cityscape generated from text prompts

Abstract visuals synchronized with AI-composed electronic music

The Bigger Picture

While AI-generated music videos won't replace professional production soon, projects like Auto-MV Creator demonstrate how rapidly the creative stack is evolving. For developers, it underscores that the biggest innovations often come from combining specialized AI tools rather than waiting for monolithic solutions. As multimodal models improve, expect similar pipelines to power everything from marketing content to personalized entertainment—all initiated by a simple text prompt.

The real genius lies not in any single component, but in the architectural vision connecting them. That's where human ingenuity still leads the dance.
