A 33-year-old critique of "copilot"-style interface agents by computing pioneer Mark Weiser is gaining new relevance. This article explores why HUD-like interfaces that enhance human perception, rather than virtual assistants, might be the key to unlocking AI's potential in complex tasks.

Beyond Copilots: The Case for AI Head-Up Displays

In the rush to embed generative AI into every software interface, the dominant paradigm has been the "copilot": a conversational agent that users instruct, question, and delegate tasks to. But what if this approach is fundamentally limiting? A provocative critique from computing history, recently resurfaced by software researcher Geoffrey Litt, suggests we might be missing a more powerful model: the Head-Up Display (HUD).

Weiser's Timeless Warning

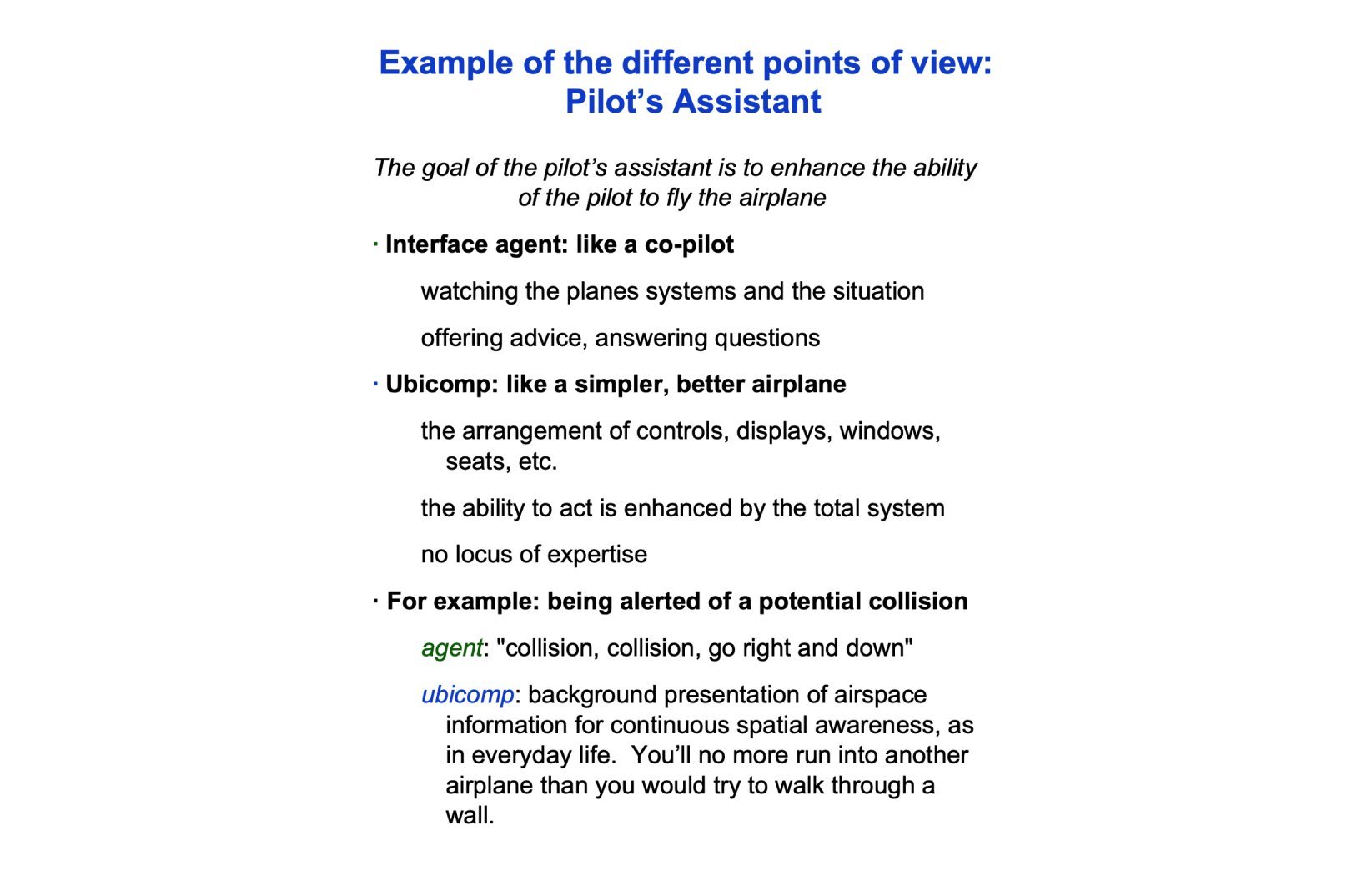

Litt's analysis revisits a 1992 MIT Media Lab talk by Mark Weiser—father of ubiquitous computing—who railed against anthropomorphic "interface agents." Weiser used aviation as a metaphor:

"The agentic option is a 'copilot'—a virtual human who you talk with to get help flying the plane. If you’re about to run into another plane it might yell 'collision, go right and down!'"

Weiser’s 1992 slide contrasting copilot intervention (top) with ambient awareness (bottom). (Source: Geoffrey Litt, via Mark Weiser)

Weiser championed an alternative: cockpit designs that make critical information ambient. Just as nobody needs to be told not to walk into a wall, pilots should be able to sense their surroundings directly. His vision? Technology that becomes "an extension of [your] body," invisible until needed.
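To make Weiser's contrast concrete, here is a minimal Python sketch built on invented assumptions: a flat two-dimensional airspace, hypothetical `Aircraft`, `copilot_advice`, and `hud_overlay` names, and an arbitrary 2 km / 1,000 ft alert threshold. It is not modeled on any real avionics or collision-avoidance system. The copilot function stays silent until a threshold is crossed and then interrupts; the HUD function always reports where every aircraft is and leaves the judgment to the pilot.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Aircraft:
    callsign: str
    x_km: float    # east of a shared reference point (flat-earth simplification)
    y_km: float    # north of the same reference point
    alt_ft: float


def copilot_advice(own: Aircraft, traffic: list[Aircraft],
                   alert_km: float = 2.0, alert_ft: float = 1000.0) -> Optional[str]:
    """Copilot style: say nothing until a threshold is crossed, then interrupt."""
    for other in traffic:
        horizontal = math.hypot(other.x_km - own.x_km, other.y_km - own.y_km)
        if horizontal < alert_km and abs(other.alt_ft - own.alt_ft) < alert_ft:
            return f"Collision risk: {other.callsign}!"
    return None  # silence: the pilot learns nothing about traffic outside the threshold


def hud_overlay(own: Aircraft, traffic: list[Aircraft]) -> list[dict]:
    """HUD style: always show where every aircraft is relative to us, with no verdict."""
    marks = []
    for other in traffic:
        dx, dy = other.x_km - own.x_km, other.y_km - own.y_km
        marks.append({
            "callsign": other.callsign,
            # Compass bearing: 0 = north, 90 = east (atan2 is given east, north here).
            "bearing_deg": round(math.degrees(math.atan2(dx, dy)) % 360),
            "range_km": round(math.hypot(dx, dy), 1),
            "rel_alt_ft": round(other.alt_ft - own.alt_ft),
        })
    return marks


own = Aircraft("N1", 0.0, 0.0, 5000)
traffic = [Aircraft("N2", 1.0, 1.0, 5200), Aircraft("N3", 10.0, -3.0, 9000)]
print(copilot_advice(own, traffic))
for mark in hud_overlay(own, traffic):
    print(mark)
```

Both functions read the same data; the difference is what they hand the human. The copilot returns a single verdict or nothing, while the overlay keeps every aircraft in view, including the distant one that never triggers an alert.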

The HUD Philosophy in Modern Software

Modern aviation HUDs realize this ideal by projecting altitude, the horizon line, and threats into the pilot's forward view. No conversation is needed; awareness becomes instinctive. Litt argues this model applies powerfully to software:

- Spellcheck as Proto-HUD: Red squiggles under misspelled words require no chat interface. They create instant visual intuition—a "sense" for language errors.

Spellcheck exemplifies ambient augmentation without assistant interfaces. (Source: Geoffrey Litt)
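The Python sketch below illustrates what makes spellcheck ambient: the checker runs on every edit and simply hands the editor a list of character ranges to underline. There is no request to phrase and no answer to read. The tiny word list and the `misspelled_spans` and `render_squiggles` names are hypothetical; a real checker would use a full dictionary and the editor's own rendering layer.

```python
import re

# Hypothetical tiny word list; a real spellchecker would load a full dictionary.
KNOWN_WORDS = frozenset({"the", "pilot", "lands", "plane", "safely", "in", "fog"})


def misspelled_spans(text: str, known: frozenset = KNOWN_WORDS) -> list[tuple[int, int]]:
    """Return (start, end) character offsets of words missing from the word list."""
    return [(m.start(), m.end())
            for m in re.finditer(r"[A-Za-z']+", text)
            if m.group().lower() not in known]


def render_squiggles(text: str) -> str:
    """Draw '~' under each flagged word: a plain-text stand-in for red underlines."""
    marks = [" "] * len(text)
    for start, end in misspelled_spans(text):
        marks[start:end] = "~" * (end - start)
    return text + "\n" + "".join(marks).rstrip()


print(render_squiggles("The pilot lnads the plane safley"))
```

An editor would call `misspelled_spans` after each keystroke and redraw the underlines, so the writer's attention is drawn to errors without ever leaving the text.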

- AI-Powered Debugging HUDs: Instead of asking a copilot to "fix this bug," Litt built a custom debugger for a Prolog interpreter that visualizes program flow. This turned debugging from delegation into augmented understanding:

"I have new senses. I can see how my program runs... spotting new problems and opportunities ambiently."
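The source does not say how Litt's debugger is built, and it targets a Prolog interpreter rather than Python. As a swapped-in illustration only, the sketch below uses Python's standard `sys.settrace` hook to record which lines of a function run and what its local variables hold just before each line executes: the raw data a visual trace view could draw. `trace_calls`, `render_trace`, and the `gcd` example are hypothetical names.

```python
import sys
from typing import Any, Callable


def trace_calls(fn: Callable[..., Any], *args: Any):
    """Run fn(*args) and record (line offset, locals) just before each line of fn runs."""
    steps = []
    target = fn.__code__

    def local_tracer(frame, event, arg):
        if event == "line":
            offset = frame.f_lineno - target.co_firstlineno  # offset from the `def` line
            steps.append((offset, dict(frame.f_locals)))
        return local_tracer

    def global_tracer(frame, event, arg):
        # Called for every new frame; trace only frames running the target function.
        return local_tracer if frame.f_code is target else None

    sys.settrace(global_tracer)
    try:
        result = fn(*args)
    finally:
        sys.settrace(None)  # always uninstall the tracer, even if fn raises
    return result, steps


def render_trace(steps):
    """Format each recorded step as 'line +N: var=value, ...' for a text-mode trace view."""
    return "\n".join(
        f"line +{offset}: " + ", ".join(f"{k}={v}" for k, v in local_vars.items())
        for offset, local_vars in steps
    )


def gcd(a: int, b: int) -> int:
    while b:
        a, b = b, a % b
    return a


result, steps = trace_calls(gcd, 12, 18)
print(result)
print(render_trace(steps))
```

Here the trace is printed as text; a graphical HUD would draw the same steps as a timeline beside the source, letting the programmer see the loop converge instead of asking an assistant why it does.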

When Copilots Fail, HUDs Soar

Litt clarifies this isn’t about eliminating copilots but choosing the right tool:

| Approach | Best For | Real-World Analog |
|---|---|---|
| Copilot (Agent) | Routine, predictable tasks | Autopilot for steady flight |
| HUD (Augmentation) | Complex, high-stakes work | Landing a damaged plane manually with enhanced instruments |

Copilots excel at delegation but risk disempowering users. HUDs preserve human agency while expanding cognitive bandwidth, which matters most for extraordinary outcomes such as an emergency landing or genuinely novel code.

The Invisible Revolution

Weiser’s 33-year-old critique feels urgent today. As Litt concludes, for AI to truly augment humanity, we must move beyond chat interfaces. HUD-like designs—whether visualizing data flows, highlighting security risks, or mapping dependencies—could unlock deeper mastery by making AI an extension of our senses rather than a separate entity. The future belongs to interfaces that don’t just assist but transform human perception.

Further Exploration:

- Using AI to Augment Human Intelligence (Nielsen & Carter)

- Is Chat a Good UI for AI? (Matuschak)

- Malleable Software in the Age of LLMs (Litt)

Source: Adapted from Geoffrey Litt's "Enough AI copilots! We need AI HUDs" (July 2025).
